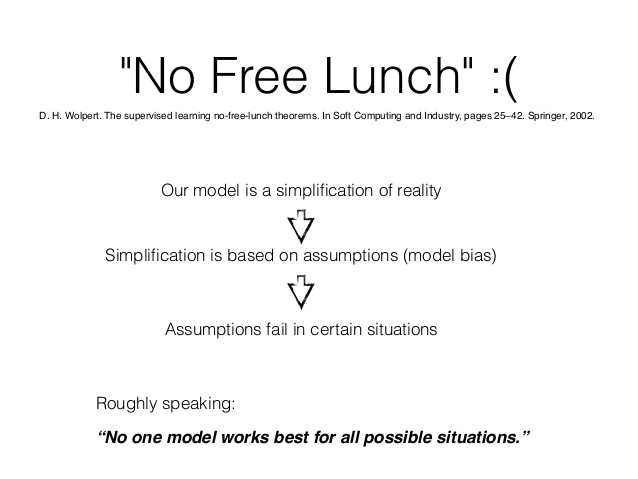

No Free Lunch Theorems (NFLTs): Two well-known theorems bearing the same name: One for supervised machine learning (Wolpert 1996) and one for search/optimization (Wolpert and Macready 1997).

The thing that they share in common is that they state that certain classes of algorithms have no “best” algorithm because on average, they’ll all perform about the same.

Mathematically put, the computational cost of finding a solution, averaged over all problems in the class, is the same for any solution method. No solution, therefore, offers a short cut.

Today’s menu:

- Inception

- NFL real-world implications

- Example problem

- Implementation

- Takeaways

- References

Once Upon A Time

The No Free Lunch Theorem (NFLT) is named after the phrase, there ain’t no such thing as a free lunch. It has origins in the mid-nineteenth century onwards, whereby bar and saloon owners would attract drinkers in with free food on the condition that they brought a drink.

In the “no free lunch” metaphor, each “restaurant” (problem-solving procedure) has a “menu” associating each “lunch plate” (problem) with a “price” (the performance of the procedure in solving the problem). The menus of restaurants are identical except in one regard – the prices are shuffled from one restaurant to the next. For someone who is as likely to order each plate as any other, the average cost of lunch does not depend on the choice of restaurant. There’s no place where it’s a free lunch.

The Whys And Hows

In theory, a novice in Data Science and an expert Kaggler, have equal chances of winning the Kaggle competition since the performance of the solution model does not depend on the choice of the algorithm or method.

But that doesn’t happen in the real world, right?

We know about the great algorithmic weapon, “XGBoost” and how it wins most of the Kaggle competitions that aren’t won with deep learning. Shouldn’t we just use XGBoost all the time? Doesn’t it have upper hand over other algorithms?

Or even better, what about the much-hyped, “Master Algorithm” that claims to be one-size-fits-all for the machine learning algorithms?

“Never assume the obvious is true.”

The question to ask is:

“What is the basic set of assumptions that are specific enough to make learning feasible in a reasonable amount of time, general enough to be applicable to a large class kind of machine learning algorithms?”

The core observation for understanding theorem is to know that an NFL theorem considers applying two algorithms over all possible problems. In practice, we are probably only interested in a small subset of all possible functions.

If an algorithm performs well on a certain class of problems then it necessarily will perform poorly on the set of all remaining problems.

Alice and Bob discuss what’s in it for us over lunch.

Assume we have a large dataset from which we drew a sample which might allow us to train a machine learning model that predicts values for any of the required predictor columns.

Alice: To make a plant predictor algorithm, let’s first list out some major characteristics of plants.

Bob: Plants have green-coloured leaves, stems, roots, flowers, fruits, thorns and can’t move.

Bob: Well, it could also be a tree. We would require more attributes to ascertain if it’s a plant.

Alice: Let’s include some more: life span, average height, absence of trunk and branches, and if it has autotrophic nature.

Bob: This is still not an exhaustive list, but these attributes are enough to determine if a given being is a plant or not.

Alice: Agreed. We need not map all possible attributes of plants to tell them apart from other beings.

Let’s take a look at the sample training set that Alice and Bob gathered.

| Item | Has trunk | Has branch | Has flowers | Has fruits | Avg height | Lifespan | Is a plant |

| Banana tree | Yes | Yes | Yes | Yes | 12-25 ft | 5-6 years | No |

| Tulsi | No | No | Yes | No | 30-60 cm | 1 year | Yes |

| Rose plant | No | No | Yes | No | 5-6 ft | 35 years | Yes |

| Mango tree | Yes | Yes | Yes | Yes | 35–40 m | 300 years | No |

| Aloe vera | No | Yes | No | No | 60–100 cm | 5-25 years | Yes |

Bob: Now, we have the training set: different living beings in rows, their different attributes in columns, with the predictor column being Yes or No, if it’s a plant or not, based on the above set of hypotheses. Any classification ML algorithm could predict the results for the test dataset. Isn’t it?

Alice: Yes, but according to NFL theorem, the only way to arrive at an optimised solution is just to use the whole mapping with all possible mapping functions. No shortcuts. No compressing the data by ignoring the attributes. So, how does the model work here? Shouldn’t that be applicable to our problem as well?

Bob: Yes, we could correctly model a plant predictor with the smaller dataset since we did not assume a uniform distribution over all the problems. Hence, the “No free lunch theorem” does not apply when we don’t follow the assumptions it asks us to make. We can now assess performances of different algorithms, keeping in mind the important trade-offs, for the best algorithm.

Time To Get Little Technical

Let’s take the training dataset of our plant predictor model in variable trainfeats and test dataset in testfeats.

Now, pass this as an argument in different machine learning classifiers namely: Naive bayes, Decision tree, Support Vector Clustering and Stochastic Gradient Descent.

We have chosen our trusted NLTK library in Python to get a whole lot of options to choose from for our prediction model.

Get the accuracies and compare them.

(Assuming data cleaning and other preliminaries are done beforehand)

accuracies = []

for x in range(10):

random.shuffle(train)

cutoff = int(len(train) * 0.01)

trainfeats = train[:cutoff]

testfeats = train[cutoff:]

# Naive bayes

NBClassifier = NaiveBayesClassifier.train(trainfeats)

# Decision tree

DTClassifier = DecisionTreeClassifier.train(trainfeats)

# Support Vector Clustering

SVCClassifier= SklearnClassifier(LinearSVC(), sparse=False)

SVCClassifier.train(trainfeats)

# Stochastic Gradient Descent

SGDClassifier_classifier = SklearnClassifier(SGDClassifier())

SGDClassifier_classifier.train(trainfeats)

# Taking Naive Bayes classifier as our first model for measuring the accuracy

accuracy = nltk.classify.util.accuracy(NBClassifier,testfeats)

accuracies.append(accuracy)

print ((accuracy * 100))

On comparing the results obtained from different ML models, one would find that the pre-defined notions that existed before the start of this experiment, such as one OG model performing better than the other, would be all proven wrong. That is, until and unless we pass our training data on a desired set of algorithms and perform the model evaluation ourselves, we cannot for certain point out one algorithm that would give better results than the rest.

Key Takeaways

How well the algorithm will do is determined by how aligned the algorithm is with the actual problem at hand. Depending on the problem, it is important to assess the trade-offs between speed, accuracy, and complexity of different models and algorithms and find a model that works best for that particular problem.

- There are no universal solutions to ML problems..

- All ML approaches are equally good if we do not place strong assumptions on the input data.

- For every ML algorithm, there exists a sample or sample class where it outperforms some other method.