Much of the world that stumbled upon AI-generated images this past year may mistakenly believe that the buzzword ‘generative’ is brand new. But anyone who knows a little more about AI would be familiar with the fact that the origins of today's generative AI lie in the advent of GANs.

GANs, a beginning in generative AI

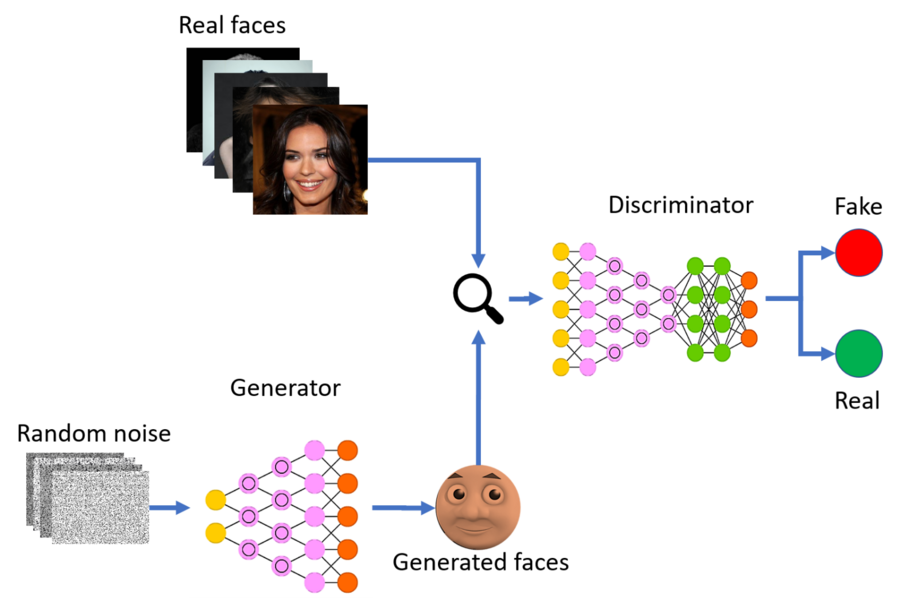

In 2014, a group of researchers, including former Google Brain research scientist Ian Goodfellow, his professor and Turing awardee Yoshua Bengio and others, released a paper on Generative Adversarial Networks, or GANs. They decided to use neural networks in an imaginative manner: they would pit two networks against each other, each constantly trying to outwit the other. A generator would produce fake images, while a discriminator, shown both real images from the data set and the generator's fakes, would try to tell them apart. Trained this way, the generator would eventually produce new fake images that were sufficiently convincing.
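The tug-of-war between the two networks can be made concrete with the losses each side tries to minimise. The following is a minimal sketch, not the authors' code: it assumes the discriminator outputs a probability that an image is real, uses the commonly used non-saturating form of the generator loss, and the `early` and `late` numbers are hypothetical discriminator outputs invented for illustration.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy the discriminator minimises.

    d_real: discriminator outputs (probability of 'real') on real images.
    d_fake: discriminator outputs on images made by the generator.
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: low when the discriminator calls fakes real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs at two points in training.
early = {"d_real": [0.9, 0.8], "d_fake": [0.1, 0.2]}    # fakes are easy to spot
late = {"d_real": [0.55, 0.5], "d_fake": [0.5, 0.45]}   # fakes are nearly indistinguishable

early_d, late_d = discriminator_loss(**early), discriminator_loss(**late)
early_g, late_g = generator_loss(early["d_fake"]), generator_loss(late["d_fake"])
```

As the generator improves, its loss falls while the discriminator's rises towards the value it takes when it can do no better than guess 0.5 for every image.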

GANs gave deep learning models something they didn’t have before – imagination. And for this, GANs will be looked back on as one of the biggest steps taken towards gifting machines with a human-like consciousness. The paper caused an earthquake of sorts in the community, turning Goodfellow into a celebrity almost instantly.

In an exclusive chat with Analytics India Magazine, AI stalwart Bengio raved about how far generative models had come since. On the recent advancements made by text-to-image generators like OpenAI’s DALL·E and Stability AI’s Stable Diffusion, Bengio stated, “One of the things that impressed me the most is the progress in generative models.”

Origins of diffusion models

Bengio also praised the rapidly growing body of work on diffusion models. Introduced in 2015 in a paper titled ‘Deep Unsupervised Learning using Nonequilibrium Thermodynamics’, diffusion models were the next step that shaped generative models.

Diffusion models went on to generate images that improved upon the quality produced by GANs, although generating an image typically takes many denoising steps.

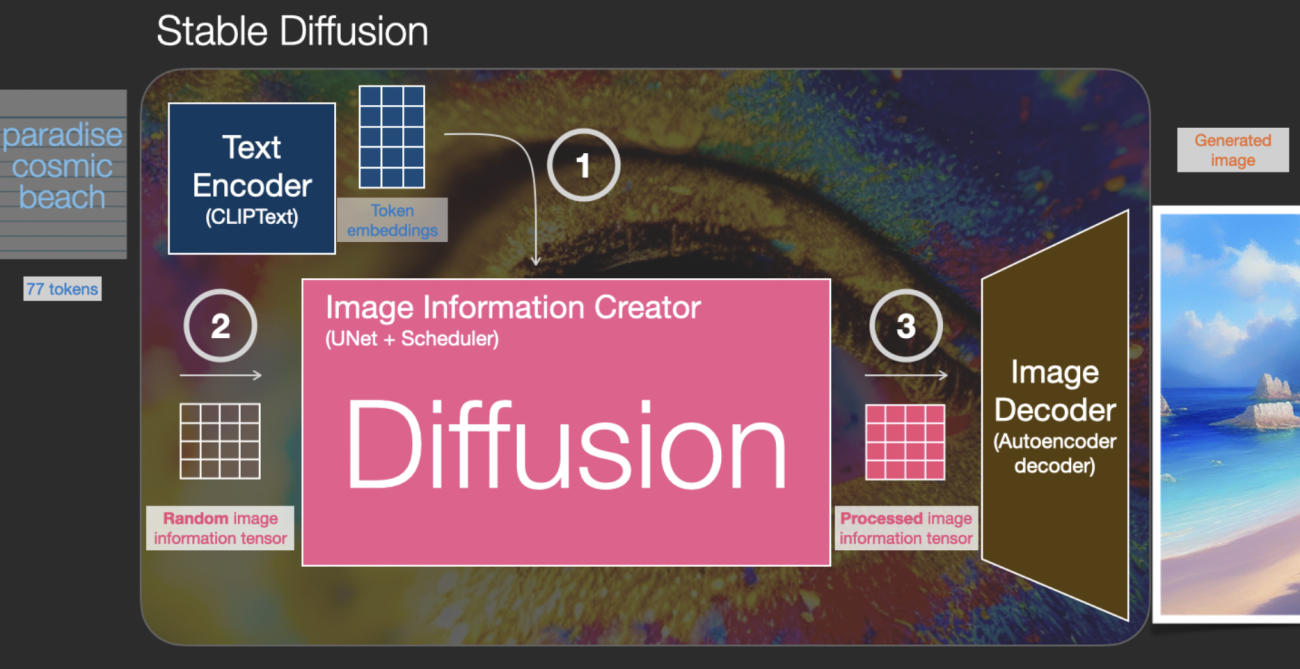

These models work on a denoising technique: they take a corrupted image and re-synthesise it step by step until a clean image is produced. This is how Stable Diffusion works. During training, small amounts of noise are added to images and the model learns to remove them; at generation time, it starts from noise and repeatedly denoises until it produces the final output.
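The noising and denoising idea can be sketched in a few lines. This is an illustrative toy, not Stable Diffusion's implementation: it assumes the widely used formulation in which noise is mixed in according to a schedule of small `betas`, the linear schedule and the four-value "image" are hypothetical, and the true noise stands in for the prediction a trained network would make.

```python
import math
import random

def alpha_bar(betas, t):
    """Fraction of the original signal's variance left after steps 0..t."""
    prod = 1.0
    for beta in betas[: t + 1]:
        prod *= 1.0 - beta
    return prod

def add_noise(x0, t, betas, rng):
    """Corrupt a clean image x0 directly to noising step t."""
    a_bar = alpha_bar(betas, t)
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a_bar) * x + math.sqrt(1.0 - a_bar) * e for x, e in zip(x0, eps)]
    return xt, eps

def recover_x0(xt, eps_pred, t, betas):
    """Undo the corruption given a prediction of the noise that was added."""
    a_bar = alpha_bar(betas, t)
    return [(x - math.sqrt(1.0 - a_bar) * e) / math.sqrt(a_bar) for x, e in zip(xt, eps_pred)]

# Hypothetical linear noise schedule and a four-value stand-in for an image.
T = 1000
betas = [1e-4 + (0.02 - 1e-4) * i / (T - 1) for i in range(T)]
rng = random.Random(0)
x0 = [0.2, -0.5, 0.9, 0.0]

xt, eps = add_noise(x0, 500, betas, rng)
x0_hat = recover_x0(xt, eps, 500, betas)  # true noise used in place of a trained model
```

With a perfect noise prediction the clean image comes back exactly; a real model only approximates the noise, which is why generation proceeds in many small denoising steps rather than one jump.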

Breakthroughs leading to modern generative models

To Bengio and other long-standing members of the AI community, none of these concepts were new. “Some of the ideas behind this date back to more than ten years ago,” he stated. Bengio has left a trail of papers that demonstrate this. In 2013, Bengio, Li Yao and a couple of other researchers published a paper titled ‘Generalized Denoising Auto-Encoders as Generative Models’ that discussed the importance of denoising and contractive autoencoders.

All major text-to-image generators around today, including DALL·E 2, Google’s Imagen and Stable Diffusion, use diffusion models.

A vital breakthrough achieved last year contributed to their prominence.

In December 2021, a paper titled ‘High-Resolution Image Synthesis with Latent Diffusion Models’ introduced latent diffusion models. These models use an autoencoder to compress images into a much smaller latent space during training, and the diffusion process runs in that space. The autoencoder's decoder then decompresses the final latent representation into the output image. Running the repeated diffusion steps in latent space rather than on full-resolution pixels cut image generation time and cost by a huge margin.
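A toy sketch shows where the saving comes from. Everything here is a hypothetical stand-in: the averaging `encode`, the repeating `decode`, the placeholder `denoise_step`, and the values of `FACTOR` and `STEPS` are chosen for illustration and are not taken from the paper. The point is only the shape of the pipeline: many steps on a small latent, one decode at the end.

```python
import random

def encode(pixels, factor):
    """Toy encoder: average each run of `factor` values (stand-in for a learned encoder)."""
    return [sum(pixels[i:i + factor]) / factor for i in range(0, len(pixels), factor)]

def decode(latent, factor):
    """Toy decoder: repeat each latent value `factor` times to restore the original length."""
    return [z for z in latent for _ in range(factor)]

def denoise_step(latent):
    """Placeholder for one learned denoising step; a real model would predict and remove noise."""
    return [0.9 * z for z in latent]

def generate(latent_size, factor, steps, rng):
    """Run every diffusion step in latent space, then decode once at the end."""
    latent = [rng.gauss(0.0, 1.0) for _ in range(latent_size)]
    values_processed = 0
    for _ in range(steps):
        latent = denoise_step(latent)
        values_processed += len(latent)
    return decode(latent, factor), values_processed

FACTOR = 8   # hypothetical compression factor
STEPS = 50   # hypothetical number of denoising steps
image = [float(i % 16) for i in range(64)]  # a 64-value stand-in for an image
latent = encode(image, FACTOR)              # the compressed form the diffusion model trains on

output, latent_work = generate(len(latent), FACTOR, STEPS, random.Random(0))
pixel_work = STEPS * len(image)             # values touched if every step ran on pixels
```

In this toy setup the denoising loop touches `FACTOR` times fewer values than it would in pixel space, while the output still has the full image length.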

Latent diffusion models could also be applied to tasks beyond text-to-image generation, such as image inpainting, super-resolution and semantic scene synthesis.

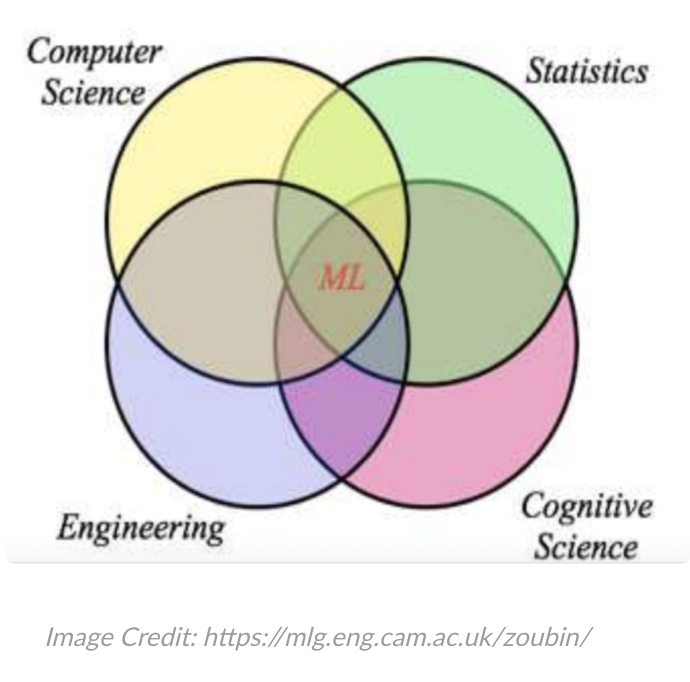

Return of probabilistic ML and other concepts

Bengio has also followed the space of probabilistic machine learning ardently for a while and firmly believes that advancements in the area can solve some of the challenges with deep learning. “I am quite excited by the progress in the area of probabilistic machine learning,” he noted.

Probabilistic machine learning builds models that express what they have learned from experience as probabilities rather than fixed answers. It uses statistics to estimate how likely each possible outcome is, given uncertain or noisy observations. For example, a probabilistic classifier can assign a probability of, say, 0.8 to the ‘Cat’ class, indicating that it is fairly confident that the animal in the image is a cat. The applications follow readily in areas such as self-driving vehicles, where a system needs to know when it is unsure.

There’s a big advantage to probabilistic machine learning – it gives a clear idea of how confident or uncertain the machine is about the accuracy of the prediction.
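As a rough illustration of that advantage, the sketch below turns a classifier's raw scores into probabilities and uses them to decide when to defer. The scores, the three class labels, the 0.7 threshold and the `predict_or_defer` helper are all hypothetical choices for this example, not part of any particular system.

```python
import math

def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]

def entropy(probs):
    """Uncertainty of a prediction: 0 when certain, largest when all classes are equally likely."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def predict_or_defer(probs, labels, threshold=0.7):
    """Return the most likely label, or 'defer' when confidence is below the threshold."""
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best] if probs[best] >= threshold else "defer"

labels = ["cat", "dog", "fox"]
confident = softmax([2.0, 0.0, -1.0])  # hypothetical scores for a clear image
unsure = softmax([0.1, 0.0, -0.1])     # hypothetical scores for an ambiguous image

clear_call = predict_or_defer(confident, labels)
ambiguous_call = predict_or_defer(unsure, labels)
```

The clear image yields a confident ‘cat’, while the ambiguous one falls below the threshold and is deferred, which is the kind of behaviour a safety-critical system would want.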

According to Bengio, a number of these older ideas could make a comeback in modern-day AI, like old wine in a new bottle. There’s another one that he believes could be transformative – causality. While thinking about cause and effect dates back to Pre-Socratic times, deep learning researchers took it up much later, prompted by the challenges that arise from the lack of causal representations in ML models.

“One of the biggest limitations of current machine learning, including deep learning, is the ability to properly generalise to new settings like new distributions – what we call ‘out of distribution generalisation’, and humans are very good at that,” said Bengio. He said there are good reasons to think that humans are good at that because they have causal models of the world. “If you have a good causal model, you can generalise to new settings,” he added.