Large neural networks are difficult to deploy in production environments because they are memory-intensive and slow at inference time. Many successful deep learning models, such as Transformers, have been followed by lightweight versions that dramatically speed up inference at some cost in accuracy. In this article, let’s explore Least Squares Quantization, an algorithm that speeds up large neural networks by quantizing them while narrowing the accuracy gap to the non-quantized model.

Hadi Pouransari, Zhucheng Tu, and Oncel Tuzel, researchers at Apple, introduced this approach in the paper "Least Squares Binary Quantization of Neural Networks" on 23 March 2020.

Inference Time Reduction

Smaller models are preferable in practice because they use less memory and run faster at inference time. However, smaller models often perform worse than large ones. We therefore want to speed up large models without modifying them so much that their accuracy suffers. The following are common methods for improving the run time of models.

Hardware Optimization

Running inference on specialized hardware can boost performance significantly. GPUs and TPUs (Tensor Processing Units) are examples of this kind of optimization.

Compiler Optimization

Compiler optimization means fusing operations or implementing them in a hardware-aware manner to reduce computational cost. Optimized kernels for dense and sparse matrix-vector multiplication are examples of such optimization.

Model Optimization

Modifying the model architecture or compressing the model can also reduce run time. Such techniques fall under model optimization, and least squares quantization is one example.

Quantization

Arithmetic on low-precision numbers is faster than on high-precision numbers, so the precision of a model’s weights and activations is reduced to speed it up. This can be done after training, or during training so that the model can adapt to the lower precision. Precision can also vary across the network: in a mixed-precision model, each layer can use a different precision.

Quantization is the process of mapping high-precision values (a large set of possible values) to low-precision values (a smaller set of possible values). It can be applied to both the weights and the activations of a model, and it reduces the cost of operations on these values. With 1-bit quantization (each scalar in a tensor is represented by a single bit), expensive convolutional layers reduce to XNOR operations followed by counting the number of 1 bits (a population count).
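The XNOR-and-count claim can be checked on a tiny example. The sketch below is a plain-Python illustration, not code from the paper or from Apple’s repository. It assumes an encoding where bit 1 stands for +1 and bit 0 for -1, packs two ±1 vectors into integers, and computes their dot product as 2 * popcount(XNOR(a, b)) - n, which is the core operation inside a binarized convolution. The helper names `pack_bits` and `binary_dot` are hypothetical.

```python
# Sketch: dot product of two {-1,+1} vectors using XNOR and popcount.
# Assumed encoding (illustrative only): bit 1 represents +1, bit 0 represents -1.

def pack_bits(signs):
    """Pack a list of +1/-1 values into an int, one bit per entry (bit i = entry i)."""
    word = 0
    for i, s in enumerate(signs):
        if s == 1:
            word |= 1 << i
    return word

def binary_dot(a_bits, b_bits, n):
    """Dot product of two packed {-1,+1} vectors of length n."""
    mask = (1 << n) - 1
    # XNOR: a bit is 1 exactly where the two signs agree
    agree = ~(a_bits ^ b_bits) & mask
    matches = bin(agree).count("1")  # popcount
    # Each agreement contributes +1 and each disagreement -1
    return 2 * matches - n

a = [1, -1, -1, 1, 1, -1]
b = [1, 1, -1, -1, 1, -1]
n = len(a)
print(binary_dot(pack_bits(a), pack_bits(b), n))  # 2
print(sum(x * y for x, y in zip(a, b)))           # 2
```

On real hardware the packed words are 32 or 64 bits wide, so one XNOR and one popcount instruction replace dozens of floating-point multiply-adds.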

There are several ways to quantize a model. The method used in the paper is scaled quantization, which decomposes a variable matrix into two parts: a scale v and a matrix S with the same dimensions as the original, whose entries are only -1 or +1.

This shrinks the set of values each entry can take to just two, multiplied by the shared scale. The following sections make scaled quantization easier to understand.

Least Squares Quantization

Intuitively, quantization reduces accuracy because it shrinks the set of values the variables can take. This accuracy drop can be reduced if the quantized values approximate the original variables more closely. To enforce this, we need to optimize an additional objective besides the model’s own objective function.

This new objective is the quantization error, which we want to minimize. One way to measure it is the squared Frobenius norm: if $x$ is the original variable and $x_q$ is its quantized version, the quantization error is $\lVert x - x_q \rVert_F^2$, the sum of the squared entry-wise differences (for a vector, the squared Euclidean norm $\lVert x - x_q \rVert_2^2$).
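As a concrete illustration, the NumPy sketch below computes this error and uses it to compare a few candidate scales for a small vector quantized with a fixed sign pattern. The function name `quantization_error` and the candidate scales are illustrative choices, not part of the paper.

```python
import numpy as np

def quantization_error(x, xq):
    """Squared Frobenius norm of x - xq, i.e. the sum of squared entry-wise errors."""
    diff = np.asarray(x, dtype=np.float64) - np.asarray(xq, dtype=np.float64)
    return float(np.sum(diff ** 2))

x = np.array([-5.0, -4.0, -3.0, 3.0, 4.0, 5.0])
s = np.sign(x)  # fixed {-1,+1} pattern (x has no zeros here)

for v in (3.0, 4.0, 5.0):
    print(v, quantization_error(x, v * s))
# 3.0 10.0
# 4.0 4.0
# 5.0 10.0
```

Of the three candidates, the scale 4 gives the smallest error, which matches the closed-form least squares scale for this vector.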

Let’s see an example of 1-bit scaled quantization, which approximates $x$ as $x_q = v\,s(x)$, where:

- $x$ is the input to quantization.

- $x_q$ is the output of quantization.

- $v$ is the scale of quantization.

- $s(x)$ is a function that maps each entry of $x$ to -1 or +1.

Example: the vector [-5, -4, -3, 3, 4, 5] is quantized to 4 × [-1, -1, -1, 1, 1, 1].

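The least squares formulation of this quantization, for a vector $x$ with $n$ entries, is:

$$
v^*, s^* = \underset{v \in \mathbb{R},\; s \in \{-1,+1\}^n}{\arg\min} \lVert x - v\,s \rVert_2^2
$$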

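Solving this problem gives $s(x) = \operatorname{sign}(x)$ and sets $v$ to the mean of the absolute values of $x$ (not the mean of $x$ itself): $v = \frac{1}{n}\sum_{i=1}^{n} |x_i|$, where $n$ is the number of entries in $x$. For the example above, $v = (5+4+3+3+4+5)/6 = 4$.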
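To see where this closed form comes from, here is a short standard least squares derivation, assuming $v \ge 0$ so that the sign ambiguity between $v$ and $s$ is resolved. Expanding the objective and using $s^\top s = n$ for any $s \in \{-1,+1\}^n$:

$$
\lVert x - v\,s \rVert_2^2 = \lVert x \rVert_2^2 - 2v\,x^\top s + n v^2,
\qquad
v^*(s) = \frac{x^\top s}{n},
\qquad
\lVert x - v^*(s)\,s \rVert_2^2 = \lVert x \rVert_2^2 - \frac{(x^\top s)^2}{n}.
$$

Since $x^\top s \le \sum_i |x_i|$, with equality when $s = \operatorname{sign}(x)$, the error is minimized by $s^* = \operatorname{sign}(x)$, which in turn gives $v^* = \frac{1}{n}\sum_i |x_i|$.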

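This generalizes to k-bit quantization, where $x$ is approximated by a sum of $k$ scaled binary terms, $x_q = \sum_{i=1}^{k} v_i\, s_i$. The difference is that we now have $k$ pairs of scales $v_i$ and sign vectors $s_i$.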

For k-bit quantization, the $v_i$ and $s_i$ can be found with the greedy algorithm shown below.

This is analogous to gradient boosting: at each step, we approximate what is left (starting with $x$ itself) with one scaled binary term, compute the residual, and then approximate that residual in the next step. The process is repeated $k$ times.

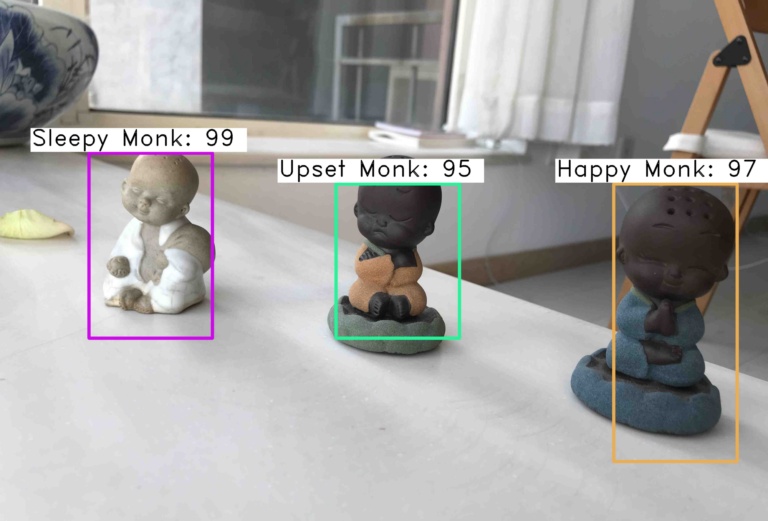
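The greedy procedure described above can be sketched in NumPy as follows. This is an illustrative implementation based on the description in this article, not the code from the paper or the ml-quant repository. It assumes a single scale per term for the whole (flattened) tensor and maps zeros to +1, since $\operatorname{sign}(0)$ is ambiguous. The function names are hypothetical.

```python
import numpy as np

def greedy_ls_quantize(x, k):
    """Greedy k-bit least squares binary quantization (illustrative sketch).

    Returns scales v (shape [k]) and sign vectors s (shape [k, n]) such that
    x is approximated by sum_i v[i] * s[i].
    """
    x = np.asarray(x, dtype=np.float64).ravel()
    residual = x.copy()
    scales, signs = [], []
    for _ in range(k):
        # 1-bit least squares fit of the current residual
        s = np.where(residual >= 0, 1.0, -1.0)  # sign, with 0 mapped to +1
        v = np.abs(residual).mean()             # optimal scale for this sign vector
        scales.append(v)
        signs.append(s)
        # The next term approximates whatever this term missed
        residual = residual - v * s
    return np.array(scales), np.array(signs)

def dequantize(scales, signs):
    """Reconstruct x_q = sum_i v_i * s_i."""
    return scales @ signs

x = np.array([-5.0, -4.0, -3.0, 3.0, 4.0, 5.0])
for k in (1, 2, 3):
    v, s = greedy_ls_quantize(x, k)
    err = np.sum((x - dequantize(v, s)) ** 2)
    print(k, v.round(3), round(float(err), 3))
```

Each extra bit fits the residual left by the previous terms, so the squared error can only stay the same or shrink as $k$ grows; for this vector it drops from 4 with one bit to about 1.333 with two.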

Quantization Example

Enough theory. Let’s see how quantization affects a model trained on the CIFAR-100 dataset.

CIFAR-100 contains colour images that must be classified into one of 100 classes.

Setup

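```
# Clone Apple's ml-quant repository and move into it (Colab path)
!git clone https://github.com/apple/ml-quant.git
%cd /content/ml-quant

# Install the dependencies
!pip install -U pip wheel
!pip install -r requirements.txt
!pip install flit

# Allow flit to install as root (as in Colab), then install the package with symlinks
import os
os.environ['FLIT_ROOT_INSTALL'] = '1'
!flit install -s
```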

Let’s first see how a full-precision ResNet model performs on this dataset. We can train it with the following command:

```
!python examples/cifar100/cifar100.py --config examples/cifar100/cifar100_fp.yaml --experiment-name cifar100-fp
```

Now let’s see how a quantized model compares to it. We will use knowledge distillation to train the quantized model, with the full-precision model acting as the teacher.
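This article does not show how ml-quant implements distillation, so the following is only a sketch of the widely used soft-target formulation from Hinton, Vinyals and Dean (2015), written in NumPy for a batch of logits. The temperature `T` and weight `alpha` defaults are arbitrary assumptions, and `distillation_loss` is a hypothetical name.

```python
import numpy as np

def log_softmax(z, T=1.0):
    """Numerically stable log-softmax over the last axis, with temperature T."""
    z = np.asarray(z, dtype=np.float64) / T
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))

def distillation_loss(student_logits, teacher_logits, labels, T=4.0, alpha=0.5):
    """alpha * soft-target KL(teacher || student) + (1 - alpha) * hard-label cross-entropy."""
    log_p_t = log_softmax(teacher_logits, T)
    log_p_s_T = log_softmax(student_logits, T)
    p_t = np.exp(log_p_t)
    # KL divergence between temperature-softened distributions, scaled by T^2
    kl = np.sum(p_t * (log_p_t - log_p_s_T), axis=-1).mean() * T * T
    # Ordinary cross-entropy against the true labels (T = 1)
    log_p_s = log_softmax(student_logits)
    ce = -log_p_s[np.arange(len(labels)), labels].mean()
    return alpha * kl + (1 - alpha) * ce

teacher = np.array([[2.0, 0.5, -1.0], [0.1, 0.2, 3.0]])
student = np.array([[1.0, 1.0, 0.0], [0.0, 0.5, 1.5]])
labels = np.array([0, 2])
print(distillation_loss(student, teacher, labels))
```

The soft-target term pushes the quantized student to reproduce the teacher’s full output distribution, not just its top prediction, which carries more information per example than the hard labels alone.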

To make the quantized model learn from the full-precision model, edit the config file and set the teacher path to the full-precision model trained above.

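The command to train the quantized model (judging by the config file name, with 1-bit least squares quantization for weights, 2-bit for activations, and knowledge distillation):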

```
!python examples/cifar100/cifar100.py --config examples/cifar100/cifar100_ls1_weight_ls2_activation_kd.yaml --experiment-name cifar100-ls2
```

Results

These results show that the quantized model nearly matches the accuracy of the original full-precision ResNet model on the CIFAR-100 dataset.

Conclusion

Many real-world machine learning applications require latencies of a few seconds. Techniques like quantization make it possible to trade a small amount of accuracy for this speed, and they are worth exploring to broaden our machine learning toolkit.