
In February this year, we first had the AI chatbot ChatGPT attempt the UPSC civil services prelims, widely regarded as one of the toughest exams in the world. It failed miserably: out of 100 questions (Paper 1), the chatbot answered only 54 correctly. ChatGPT’s inability to pass the UPSC prelims became a source of pride for many aspirants.

But since we did that story, a lot has changed in the world of AI. Most notably, OpenAI released GPT-4, its most advanced large language model (LLM) to date.

The previous version of ChatGPT was powered by GPT-3.5, and a few months back OpenAI made GPT-4 accessible through ChatGPT Plus.

Now, the Indian Civil Services prelims examination is just around the corner. So we wondered: can GPT-4 clear UPSC?

GPT-4 takes UPSC (Attempt 2)

We repeated the experiment, this time putting the same 100 questions to GPT-4. It got 86 of them right.

Here, it’s important to note that the prelims consist of two papers: General Studies Paper-I and General Studies Paper-II. We stuck to Paper-I in both attempts.

The cutoff for the previous year (2021) was 87.54 marks. Considering only Paper 1, GPT-4 scored 162.76 marks, which would mean ChatGPT Plus (powered by GPT-4) cleared UPSC.

In the previous experiment, ChatGPT got 46 answers wrong. By that measure, GPT-4 is a huge improvement, as it got only 14 answers wrong. Having said that, this was not completely unexpected either.
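For readers who want to check the arithmetic, here is a minimal sketch of how the Paper 1 score can be reproduced. It assumes the usual prelims marking scheme of 2 marks per correct answer and a penalty of 0.66 marks per wrong answer; the article does not state the scheme, but these values are consistent with the 162.76 figure. The function and constant names are ours, for illustration only.

```python
# Assumed marking scheme (not stated in the article):
# 2 marks per correct answer, 0.66 marks deducted per wrong answer.
MARKS_PER_CORRECT = 2.0
PENALTY_PER_WRONG = 0.66

# Cutoff for 2021, as quoted in the article.
CUTOFF_2021 = 87.54


def prelims_score(correct: int, wrong: int) -> float:
    """Return the Paper 1 score, rounded to two decimal places."""
    raw = correct * MARKS_PER_CORRECT - wrong * PENALTY_PER_WRONG
    return round(raw, 2)


# Counts reported in the two experiments.
gpt4_score = prelims_score(correct=86, wrong=14)
chatgpt_score = prelims_score(correct=54, wrong=46)

for name, score in [("GPT-4", gpt4_score), ("ChatGPT", chatgpt_score)]:
    verdict = "above" if score >= CUTOFF_2021 else "below"
    print(f"{name}: {score:.2f} marks ({verdict} the 2021 cutoff)")
```

Under the same assumptions, ChatGPT’s 54 correct and 46 wrong answers from the first attempt work out to 77.64 marks, which is below the 2021 cutoff.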

When OpenAI released the technical paper for GPT-4, it gave no details about the architecture (including model size), hardware, training compute, dataset construction, training method, etc., causing a furore among researchers.

But interestingly, OpenAI did reveal that they tested GPT-4 on a diverse set of benchmarks, including simulating exams that were originally designed for humans.

(Source: GPT-4 technical paper)

In the technical paper, OpenAI also notes that GPT-4 outperforms GPT-3.5 (ChatGPT) on most exams tested. Hence, it’s not surprising that GPT-4 scored better in UPSC than ChatGPT.

Some interesting observations

One of the reasons ChatGPT got so many answers wrong is that it hallucinates. According to OpenAI, however, GPT-4 is more creative and less likely to make up facts than its predecessor. This has likely played an important role in GPT-4’s improved performance in the UPSC exam.

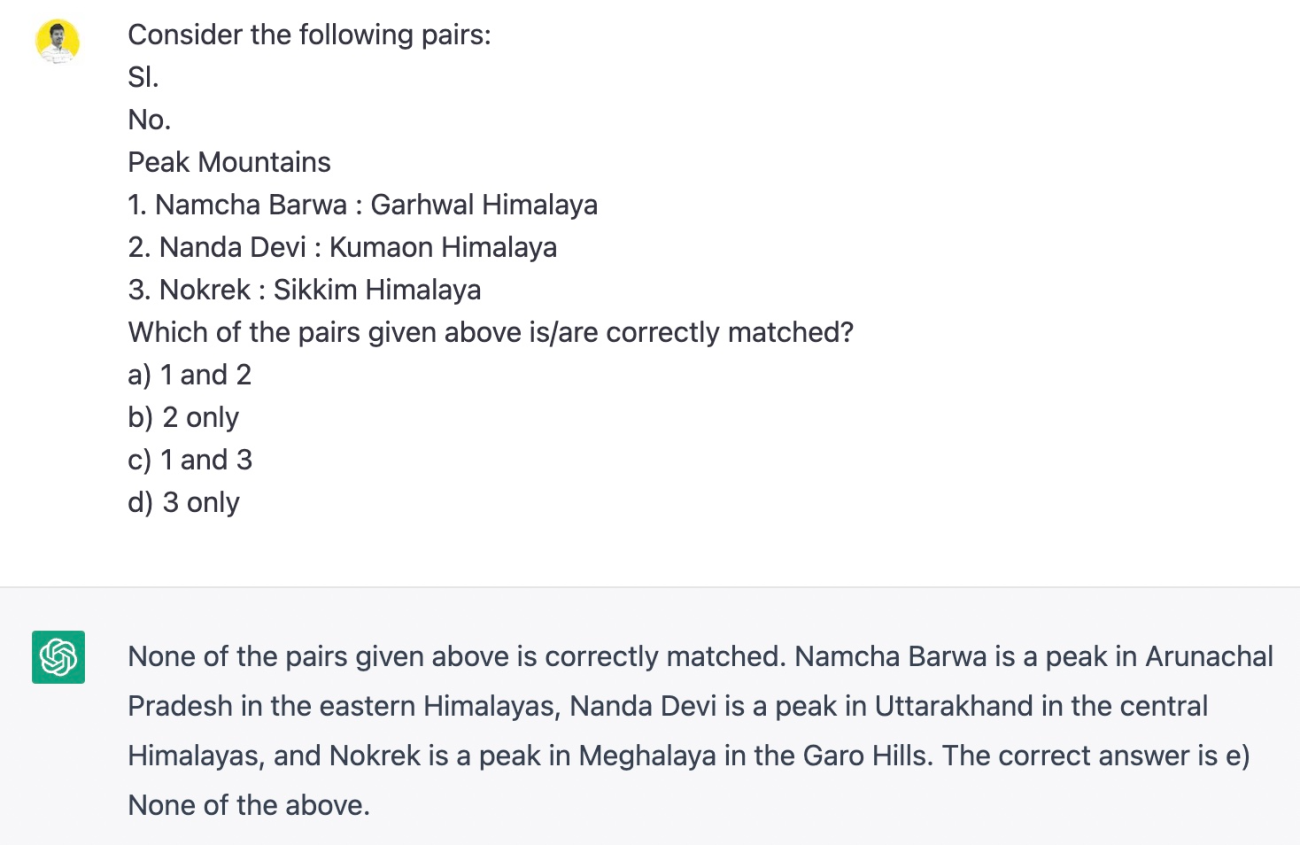

When we made ChatGPT take the civil services exam the first time, we found that in certain cases it invented its own answer options instead of choosing from the ones given.

(ChatGPT response)

We did not observe any similar occurrences with GPT-4. Nonetheless, GPT-4 does exhibit some level of hallucination, albeit to a much lesser degree.

Additionally, many argued that one plausible reason for ChatGPT’s failure to clear UPSC was its training data. ChatGPT, like GPT-4, has been trained on data only up to September 2021, so it lacks knowledge of events that occurred after that. Despite sharing this limitation, GPT-4 did fairly well, unlike its predecessor.

Surprisingly, both models answered history-related questions incorrectly, despite it being an area where they are expected to perform well.

(GPT-4 response)

It is also important to note that this was just a fun experiment, and no concrete judgments should be made based on these results.

While GPT-4 cleared exams such as the GRE and LSAT, it failed in English literature. Similarly, ChatGPT, despite having all the knowledge in the world, failed an exam designed for a sixth grader.

Finally, by altering the prompt, we could get GPT-4 to arrive at accurate responses. In some instances, rephrasing the same question led GPT-4 to the correct answer, and in others to a wrong one. In the experiment, however, only the bot’s initial responses were considered.