Preprocessing data is an essential step before building a deep learning model. In a real project, the data we receive is rarely clean and well formatted, so it has to be cleaned and put into a consistent format before anything else is done with it. Data preprocessing is the process of preparing raw data and making it suitable for a machine learning or deep learning model, and it is the first and a crucial step in creating one. Technologies such as Artificial Intelligence and Deep Learning can support smart decision making and drive business growth, but without the right data processing techniques they are of little real use.

Many machine learning and deep learning algorithms cannot work with categorical data when it is fed directly into the model. The categories must first be converted into numbers, and this applies to both the input and the output variables that are categorical. If you work in data science, you have probably heard the term “One-hot Encoding”. The Sklearn documentation defines it as “to encode categorical integer features using a one-hot scheme”. But what is it exactly?

What is One Hot Encoding?

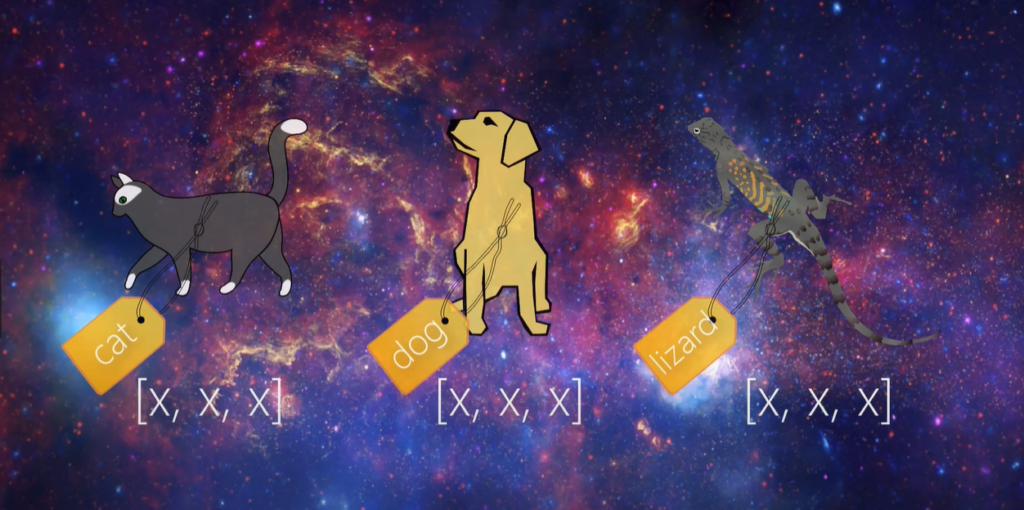

Machines work with numbers and cannot interpret text directly, and the same holds for deep learning and machine learning algorithms. One hot encoding is the process of converting categorical variables into a numerical form that these algorithms can consume, which in turn can improve the predictions and classification accuracy of a model. It is a common way of preprocessing categorical features. This type of encoding creates a new binary feature for each possible category and, for each sample, assigns a value of 1 to the feature that corresponds to its original category and 0 to all the others.

One hot encoding is an important part of the feature engineering process when training a model. For example, if we had a color variable with the labels “red,” “green,” and “blue,” we could encode each label as a three-element binary vector: Red: [1, 0, 0], Green: [0, 1, 0], Blue: [0, 0, 1]. Categorical data must be converted to a numerical form before processing. One-hot encoding is generally applied to the integer representation of the data: the integer-encoded variable is removed and a new binary variable is added for each unique integer value. In other words, it takes a column of categorical data that has been label encoded and splits it into multiple columns, one per category. The numbers are replaced by 1s and 0s depending on which column corresponds to the row's value. Integer encoding on its own is suitable for ordinal variables, but when the categories have no natural ranking, the model may treat the integers as an ordering, which can lead to poor predictions and performance. One hot encoding avoids this.
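The color example can be sketched in a few lines of plain Python. This is a minimal illustration rather than a library implementation; the helper name `one_hot` and the fixed category order are assumptions made for the example.

```python
def one_hot(label, categories):
    """Return a binary vector with a single 1 at the position of `label`."""
    if label not in categories:
        raise ValueError(f"unknown category: {label!r}")
    return [1 if category == label else 0 for category in categories]

# Fixed category order: each category owns one position in the vector.
colors = ["red", "green", "blue"]

encoded = {color: one_hot(color, colors) for color in colors}
for color, vector in encoded.items():
    print(color, vector)
# red [1, 0, 0]
# green [0, 1, 0]
# blue [0, 0, 1]
```

Each vector has exactly one "hot" position, so no color is numerically larger or smaller than another.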

The scikit-learn documentation describes the process as:

“The input to the encoding transformer should be an array of integers or strings, denoting the values taken on by categorical i.e discrete features. The features are then encoded using a one-hot aka ‘one-of-K’ or ‘dummy’ encoding scheme. This creates a binary column for each category and returns a sparse matrix or dense array, depending on the sparse parameter specified”
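A short sketch of what this looks like with scikit-learn's `OneHotEncoder` follows. The toy country values are made up for illustration. Because the name of the parameter that controls sparse versus dense output has changed between scikit-learn releases, the sketch leaves it at its default and converts the sparse result with `toarray()` instead.

```python
import numpy as np
from sklearn.preprocessing import OneHotEncoder

# Toy input (hypothetical data): one categorical feature, one row per sample.
countries = np.array([["France"], ["Spain"], ["Germany"], ["France"]])

encoder = OneHotEncoder()                         # default output is a sparse matrix
sparse_result = encoder.fit_transform(countries)  # learn categories, then encode
dense_result = sparse_result.toarray()            # convert to a dense NumPy array

print(encoder.categories_[0])  # categories learned from the data, in sorted order
print(dense_result)            # one binary column per category
```

The column order follows `encoder.categories_`, so the first column corresponds to France, the second to Germany and the third to Spain.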

The One Hot Encoding technique creates additional features based on the number of unique values in the categorical feature: every unique value in the category is added as a feature. For this reason, One Hot Encoding is also known as the process of creating dummy variables. It is commonly used when preparing categorical inputs for a Deep Learning classification model.

An Example of One Hot Encoding in Deep Learning

Suppose we have data with categorical variables and want to perform binary classification on it using a Deep Learning model. To feed the model data that enables it to make a classification decision, we need to perform One Hot Encoding during data processing. Here we need to determine whether or not a customer will continue with the bank, which makes it a binary classification problem: the model outputs either 0 for no or 1 for yes.

[[619 ‘France’ ‘Female’ … 1 1 101348.88]

[608 ‘Spain’ ‘Female’ … 0 1 112542.58]

[502 ‘France’ ‘Female’ … 1 0 113931.57]

…

[709 ‘France’ ‘Female’ … 0 1 42085.58]

[772 ‘Germany’ ‘Male’ … 1 0 92888.52]

[792 ‘France’ ‘Female’ … 1 0 38190.78]]

As we can see, two columns hold categorical data: the country of residence and the gender of the customer. For classification, we need to encode these categorical values as numbers that the model can work with.

Encoding the gender column first, we get:

[[619 ‘France’ 0 … 1 1 101348.88]

[608 ‘Spain’ 0 … 0 1 112542.58]

[502 ‘France’ 0 … 1 0 113931.57]

…

[709 ‘France’ 0 … 0 1 42085.58]

[772 ‘Germany’ 1 … 1 0 92888.52]

[792 ‘France’ 0 … 1 0 38190.78]]
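The gender encoding above can be reproduced with a small label-encoding sketch. It assumes that categories are numbered in sorted order, which is how scikit-learn's `LabelEncoder` behaves; the helper `label_encode` is a hypothetical name used only for this example.

```python
def label_encode(values):
    """Map each distinct value to an integer, numbering categories in sorted order."""
    mapping = {category: index for index, category in enumerate(sorted(set(values)))}
    return [mapping[value] for value in values], mapping

# Gender values of the six rows displayed above.
genders = ["Female", "Female", "Female", "Female", "Male", "Female"]

codes, mapping = label_encode(genders)
print(mapping)  # {'Female': 0, 'Male': 1}
print(codes)    # [0, 0, 0, 0, 1, 0]
```

With only two categories, a single 0/1 column is already a binary feature, so no further one hot encoding is needed for gender.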

As we can see, each category has been replaced with an integer label. Comparing with the data above, 0 stands for Female and 1 stands for Male. The assignment is not random: label encoders typically number the categories in sorted order, which is why Female comes before Male. We can do the same with the country column, this time using one hot encoding:

[[1.0 0.0 0.0 … 1 1 101348.88]

[0.0 0.0 1.0 … 0 1 112542.58]

[1.0 0.0 0.0 … 1 0 113931.57]

…

[1.0 0.0 0.0 … 0 1 42085.58]

[0.0 1.0 0.0 … 1 0 92888.52]

[1.0 0.0 0.0 … 1 0 38190.78]]

We can observe that the categorical columns have now been converted into a numerical form, with the country represented by three binary columns. This data can now be processed with feature scaling and fed into an Artificial Neural Network for classification. This shows how important the One Hot Encoding process is.
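The country step can be sketched in plain Python as follows. The rows are abbreviated to four of the displayed values (the elided middle columns are left out), and placing the new binary columns first is an assumption chosen to match the output shown above.

```python
# Abbreviated rows: first value, country, encoded gender, last value.
rows = [
    [619, "France", 0, 101348.88],
    [608, "Spain", 0, 112542.58],
    [772, "Germany", 1, 92888.52],
]
COUNTRY_INDEX = 1

# One binary column per distinct country, in sorted order.
categories = sorted({row[COUNTRY_INDEX] for row in rows})

def encode_row(row):
    """Replace the country value with one binary column per category, placed first."""
    country = row[COUNTRY_INDEX]
    one_hot = [1.0 if country == category else 0.0 for category in categories]
    remaining = row[:COUNTRY_INDEX] + row[COUNTRY_INDEX + 1:]
    return one_hot + remaining

encoded_rows = [encode_row(row) for row in rows]
for encoded in encoded_rows:
    print(encoded)
# [1.0, 0.0, 0.0, 619, 0, 101348.88]
# [0.0, 0.0, 1.0, 608, 0, 112542.58]
# [0.0, 1.0, 0.0, 772, 1, 92888.52]
```

France, Germany and Spain map to the first, second and third binary column respectively, which agrees with the encoded rows in the article.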

Problems Faced with One Hot Encoding

One of the biggest problems with the process is the Dummy Variable Trap, a scenario where the variables become highly correlated with each other. One-Hot Encoding can result in a Dummy Variable Trap because the value of one dummy variable can be predicted exactly from the remaining ones. The Dummy Variable Trap therefore leads to another problem known as multicollinearity, which occurs when there is a dependency between the independent features. Multicollinearity is a serious issue in models such as Linear Regression and Logistic Regression. To overcome it, one of the dummy variables has to be dropped. This reduces the Variance Inflation Factor, which as a common rule of thumb should be below 5 when preparing data for building the model.
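The redundancy behind the Dummy Variable Trap is easy to see in a small sketch. The country values below are illustrative, and dropping the first column (France) is one possible choice of reference category, not the only one.

```python
categories = ["France", "Germany", "Spain"]
countries = ["France", "Spain", "France", "Germany"]

# Full one-hot encoding: one column per category.
full = [[1 if country == category else 0 for category in categories]
        for country in countries]

# Every row sums to 1, so each column is fully determined by the other two.
row_sums = [sum(row) for row in full]

# Drop the first dummy variable (France); it becomes the all-zeros reference case.
reduced = [row[1:] for row in full]

# The dropped column can still be recovered, so no information is lost.
recovered_first_column = [1 - sum(row) for row in reduced]

print(reduced)                 # [[0, 0], [0, 1], [0, 0], [1, 0]]
print(recovered_first_column)  # [1, 0, 1, 0]
```

With k categories, k - 1 dummy columns carry the same information as k, without the exact linear dependency.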

When To Use One Hot Encoding?

We should prefer the One Hot Encoding method when:

- The categorical features in the data are not ordinal (like the countries above).

- The number of categories in each feature is small, so that one-hot encoding can be applied effectively while building the model.

We should not use the One Hot Encoding method when:

- The categorical features in the dataset are ordinal, for example Junior, Senior, Executive, Owner.

- The number of categories in the dataset is quite large. One Hot Encoding should be avoided in this case as it can lead to high memory consumption.
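Both cases where One Hot Encoding is a poor fit can be illustrated briefly. For ordinal data, a single integer column that respects the order is one common alternative; for high-cardinality data, a quick count shows how fast a dense one-hot matrix grows. The row and category counts are hypothetical, and `dense_one_hot_cells` is a helper written for this sketch.

```python
# Ordinal categories: the order carries meaning, so one integer column preserves it.
levels = ["Junior", "Senior", "Executive", "Owner"]
rank = {level: position for position, level in enumerate(levels)}

staff = ["Senior", "Junior", "Owner", "Executive"]
ordinal_codes = [rank[level] for level in staff]
print(ordinal_codes)  # [1, 0, 3, 2]

def dense_one_hot_cells(n_rows, n_categories):
    """Number of cells in a dense one-hot matrix: one column per category."""
    return n_rows * n_categories

# Hypothetical sizes: three countries versus a feature with 50,000 distinct values.
print(dense_one_hot_cells(1_000_000, 3))       # 3000000
print(dense_one_hot_cells(1_000_000, 50_000))  # 50000000000
```

A sparse representation stores only the single non-zero entry per row, which is why encoders often return sparse matrices by default.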

Conclusion

One Hot Encoding is an effective data transformation and preprocessing technique that helps our models understand the data better. It comes with its own advantages and drawbacks, so anyone applying it needs to pay attention to the kind of data being processed and to the effect on the model's results. Representation techniques like this one are key to representing the data well and improving the accuracy and learning of the models being created.

End Notes

In this article, we looked at what the One Hot Encoding technique is and how it works. We also walked through an example of where it can be used and discussed the kinds of data it suits. I would encourage the reader to explore the technique and its many applications further while creating a model.