Data collection is often seen as a one-time event and is neglected in favour of building better model architectures. As a result, hundreds of hours are lost fine-tuning models trained on imperfect data. According to Andrew Ng, we “need to move from a model-centric approach to a data-centric approach.”

Model-centric approach

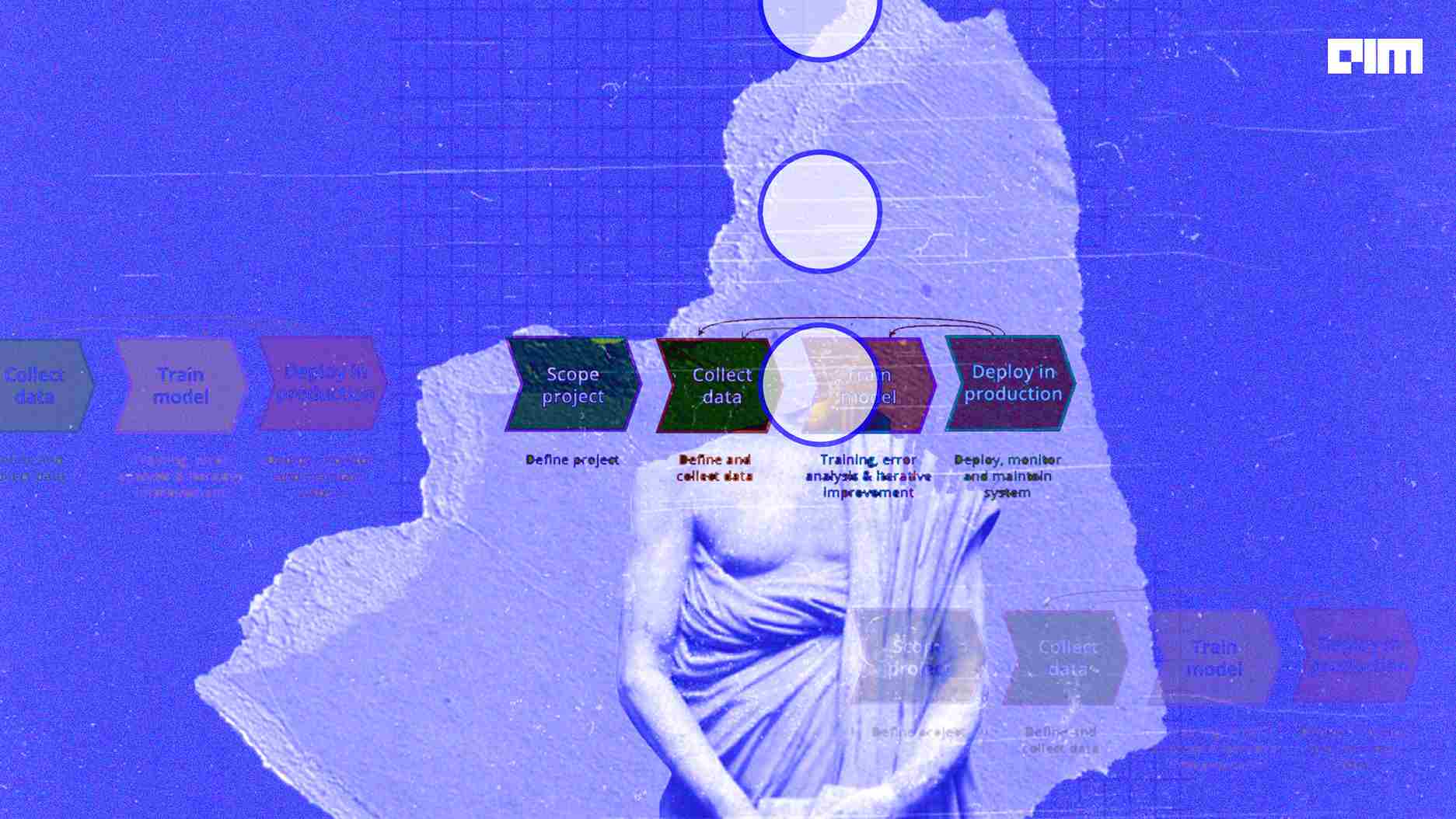

Over the last decade, most AI applications have employed a model-centric (or software-centric) approach: designing empirical tests to find the model architecture and training procedure that give the best performance. In practice, this means holding the data fixed while optimising existing code or developing new programs that learn from the data better.

Sebastian Raschka (@rasbt) tweeted on April 15, 2021:

"Recently, there's been more and more talk about data-centric vs model centric approaches. Interesting paper highlighting the issue in practice –> ' “Everyone wants to do the model work, not the data work”: Data Cascades in High-Stakes AI ' https://t.co/tpAO2qHVDH"

Data-centric approach

Given the extensive set of modelling techniques already available, further progress in AI may come from focusing on data rather than on software architectures. After all, data has a significant influence on what a model learns.

That said, a data-centric approach isn’t the same as a data-driven approach. While the former deals with using data to understand what needs to be created, the latter refers to a system for collecting, deconstructing, and extracting insights from data.

A data-centric AI system emphasises programming and iterating on datasets rather than on code, and represents a shift of interest from big data to good data. It entails spending more time systematically changing and improving datasets to increase the accuracy of ML applications. The emphasis is on the quality of the data rather than the size of the dataset: data doesn't need to be vast, but it must be labelled correctly and selected carefully to cover the important cases.

Benefits of a data-centric approach

A one-size-fits-all AI system that extracts insights from data across industries is not practical. Instead, organisations are better off developing data-centric AI systems built on data that reflects exactly what the AI needs to learn.

While an internet company may have data from millions of users for its AI system to learn from, industries such as healthcare often lack sizeable datasets. For instance, an AI system may have to learn to detect a rare disease from just a few hundred samples.

Moving from a model-centric to a data-centric approach can help organisations work around the shortage of AI talent and avoid hiring a large team for a smaller project.

Why now?

The performance of modern models has improved drastically, but these models often fail to generalise to a wider range of domains and more complex problems. The most effective way to build and maintain domain knowledge is to focus on the data rather than the model. In other words, data management should be prioritised over software development.

Since AI systems need to be maintained over long periods, we need to establish ways of managing their data, an area that still lacks established procedures, norms, or theory.

How to measure the quality of data

Consistent labelling: Inconsistent labels introduce noise into the data, but this can be mitigated with clear labelling guidelines.

According to Andrew Ng, labelling discrepancies can be avoided as follows: (1) have two independent labellers label a sample of images; (2) measure where the labellers disagree and inconsistencies appear; (3) revise the labelling guidelines repeatedly until the discrepancies disappear.
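
Ng's advice does not prescribe a particular disagreement metric. As a minimal sketch, the Python below assumes each labeller's output is a list with one label per sample, in the same order, and reports raw agreement, Cohen's kappa (a standard statistic that corrects agreement for chance; choosing it here is an assumption), and the indices of the samples the labellers disagree on. The function name `agreement_report` and the defect labels in the example are hypothetical.

```python
from collections import Counter


def agreement_report(labels_a, labels_b):
    """Compare two labellers' annotations of the same samples (hypothetical helper).

    labels_a, labels_b: equal-length lists, one label per sample, in the same order.
    Returns (observed agreement, Cohen's kappa, indices of disagreements).
    """
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both labellers must label the same non-empty sample")
    n = len(labels_a)

    # Samples where the labellers disagree: candidates for revising the guidelines.
    disagreements = [i for i, (a, b) in enumerate(zip(labels_a, labels_b)) if a != b]
    observed = 1 - len(disagreements) / n

    # Agreement expected by chance, from each labeller's label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)

    # Cohen's kappa: 1.0 = perfect agreement, 0.0 = no better than chance.
    # If both labellers used one identical class throughout, kappa is undefined; report 1.0.
    kappa = 1.0 if expected == 1 else (observed - expected) / (1 - expected)
    return observed, kappa, disagreements


# Hypothetical defect labels for six images, assigned independently by two labellers.
labeller_1 = ["scratch", "dent", "scratch", "ok", "ok", "dent"]
labeller_2 = ["scratch", "scratch", "scratch", "ok", "ok", "dent"]

observed, kappa, disagreements = agreement_report(labeller_1, labeller_2)
print(f"agreement={observed:.2f} kappa={kappa:.2f} disagree at {disagreements}")
# agreement=0.83 kappa=0.75 disagree at [1]
```

The list of disagreement indices is the useful input for step (3): reviewing those samples shows which parts of the guidelines are ambiguous. Kappa is reported alongside raw agreement because two labellers who mostly pick the same common class can agree often by chance alone.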

Accuracy: This measures how closely labels match the ground truth, a subset of the training data that has been labelled by an expert so that it can be used to test the accuracy of annotators. Benchmark datasets are used to assess the overall quality of the data.
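
A minimal sketch of scoring annotators against an expert-labelled subset, assuming labels are kept in dictionaries keyed by a sample ID. Samples outside the expert subset are ignored, since there is no ground-truth label to compare them with. The function name `annotator_accuracy`, the dictionary layout and the example labels are illustrative assumptions, not the format of any particular annotation tool.

```python
def annotator_accuracy(annotations_by_annotator, gold):
    """Score each annotator against an expert-labelled (ground-truth) subset.

    annotations_by_annotator: {annotator: {sample_id: label}}  (hypothetical layout)
    gold: {sample_id: expert_label} for the subset labelled by an expert
    Returns {annotator: fraction of their gold-subset labels that match the expert}.
    """
    report = {}
    for annotator, labels in annotations_by_annotator.items():
        # Only samples the expert labelled can be scored.
        scored = [sample for sample in gold if sample in labels]
        if not scored:
            continue  # this annotator labelled none of the gold samples
        correct = sum(labels[sample] == gold[sample] for sample in scored)
        report[annotator] = correct / len(scored)
    return report


# Hypothetical example: four images labelled by an expert, two annotators.
gold = {"img_01": "tumour", "img_02": "healthy", "img_03": "healthy", "img_04": "tumour"}
annotations = {
    "annotator_a": {"img_01": "tumour", "img_02": "healthy", "img_03": "healthy",
                    "img_04": "tumour", "img_05": "healthy"},
    "annotator_b": {"img_01": "healthy", "img_02": "healthy", "img_03": "tumour",
                    "img_04": "tumour"},
}
print(annotator_accuracy(annotations, gold))
# {'annotator_a': 1.0, 'annotator_b': 0.5}
```

Here `img_05` does not count towards `annotator_a`'s score because the expert never labelled it.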

Review: An expert visually spot-checks a sample of the annotations, although sometimes every label is double-checked.
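
Spot-checks are usually drawn at random so that the reviewed labels are representative of the whole set. The sketch below assumes a 5% sample with a floor of 10 items; both numbers are arbitrary placeholders rather than recommended values, and setting `fraction=1.0` corresponds to double-checking every label. The helper name `spot_check_sample` is hypothetical.

```python
import random


def spot_check_sample(sample_ids, fraction=0.05, minimum=10, seed=0):
    """Draw a reproducible random subset of labelled samples for expert review.

    fraction and minimum are placeholder values, not recommendations;
    fraction=1.0 means every label is double-checked.
    """
    ids = list(sample_ids)
    # Review at least `minimum` items (or all of them, if there are fewer).
    k = min(len(ids), max(minimum, round(len(ids) * fraction)))
    rng = random.Random(seed)  # fixed seed: the same sample can be re-drawn later
    return sorted(rng.sample(ids, k))


to_review = spot_check_sample(range(1000))
print(len(to_review))  # 50
```

Using a dedicated `random.Random` instance with a fixed seed means a second reviewer can re-draw exactly the same items without affecting any other random state in the program.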

Noisy data: The smaller a dataset is, the more each noisy label affects it. In larger datasets, the effect of noisy labels is usually averaged out. However, critical use cases such as medical image analysis leave no margin for error, and even a small amount of noisy data can lead to significant errors.