This article presents the key characteristics of Capsule Networks and explains how they improve on the standard industry benchmark, Convolutional Neural Networks (CNNs), by overcoming their limitations and introducing new techniques. It aims to provide an intuitive understanding of Capsule Networks and is not a coding tutorial.

Image recognition by Neural Networks

Neural networks have come a long way in recognizing images. From a basic neural network to state-of-the-art networks like InceptionNet, ResNets and GoogLeNets, the field of Deep Learning has been evolving to improve the accuracy of its algorithms. The algorithms are consuming more and more data, layers are getting deeper and deeper, and with the rise in computational power more complex networks are being introduced.

Though all of this has gradually improved the accuracy and speed of image recognition algorithms, the basic building blocks are more or less the same: convolution layers, pooling layers, regularization (dropout, etc.), and different network architectures. All the advanced networks used for image recognition can be treated as variations of Convolutional Neural Networks (CNNs). Capsule Networks present an entirely different way of solving the problem of image recognition (and its extended use cases).

Problems with Convolutional Neural Networks (CNNs)

How does a human brain learn to recognize images? If you want to train a person to recognize images of, say, dogs (assume that the person has never seen a dog), you’ll have to show them multiple images of different dogs, and then they’ll be able to recognize any dog. This is how we learn from images. This is also how a CNN learns: it ‘sees’ and gets ‘trained’ on millions of images, and learns to recognize a new image by comparing it with similar images it has already ‘seen’ (actually it’s not this simple, there is a lot of mathematics involved, but the concept is roughly the same).

Let’s change the problem statement a bit. If you train a human to recognize images of ‘dogs’ in which all the dogs are looking to their left, and then ask them to identify a dog in an image where the dog is looking to its right, they will be able to recognize it. A CNN will generally fail this test (unless it has been trained on at least a few images of dogs looking both left and right).

This doesn’t sound like ‘artificial intelligence’, but a CNN needs to be trained explicitly on every variation it is expected to recognize. That is why image augmentation exists: it rotates, inverts, crops, zooms, and otherwise transforms the training images to generate many variations, which are then fed into the network so that it can learn them. Needless to say, this is computationally expensive and needs lots of data. This is the problem of invariance: CNNs handle translational invariance but not rotational invariance.
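To make the idea of augmentation concrete, here is a minimal NumPy sketch. The `augment` function and the stand-in 8x8 array are made up for illustration (they are not from any particular library), and real pipelines typically apply many more transformations than these.

```python
import numpy as np

def augment(image, rng):
    """Return a few simple variants of a 2-D image array (illustrative only)."""
    h, w = image.shape
    variants = [
        np.fliplr(image),      # mirror left-right (a dog facing the other way)
        np.flipud(image),      # flip upside down
        np.rot90(image, k=1),  # rotate 90 degrees counter-clockwise
        np.rot90(image, k=2),  # rotate 180 degrees
    ]
    # One random crop covering 3/4 of each side.
    ch, cw = (3 * h) // 4, (3 * w) // 4
    top = rng.integers(0, h - ch + 1)
    left = rng.integers(0, w - cw + 1)
    variants.append(image[top:top + ch, left:left + cw])
    return variants

rng = np.random.default_rng(0)
image = np.arange(64, dtype=float).reshape(8, 8)  # stand-in for a real photo
augmented = augment(image, rng)
print(len(augmented), [v.shape for v in augmented])
```

Every original image turns into several training images, which is exactly where the extra data and compute cost comes from.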

In the image below, a human brain that has seen the Statue of Liberty from one angle (one image) can recognize all the images as showing the same object. CNNs do not have this capability.

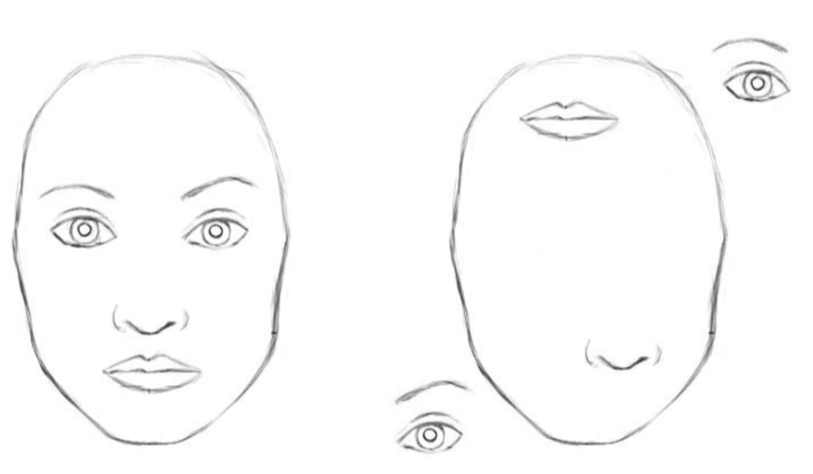

Another problem: a CNN works by identifying basic shapes in its initial layers and gradually moves up through the layers to create activation units that respond to parts of the image. For example, a CNN trained on human faces learns to recognize lower-level features (edges, circles, basic shapes, etc.) in its initial layers and higher-level features like the nose, ears, eyes and lips in later layers. If all these features are present, it outputs a very high probability that the image is a face.

Why is this a problem – a CNN trained like this will classify both the images below as a human face, since it detects the presence of all the parts. It does not take into account the arrangement of these individual objects that make up an image.

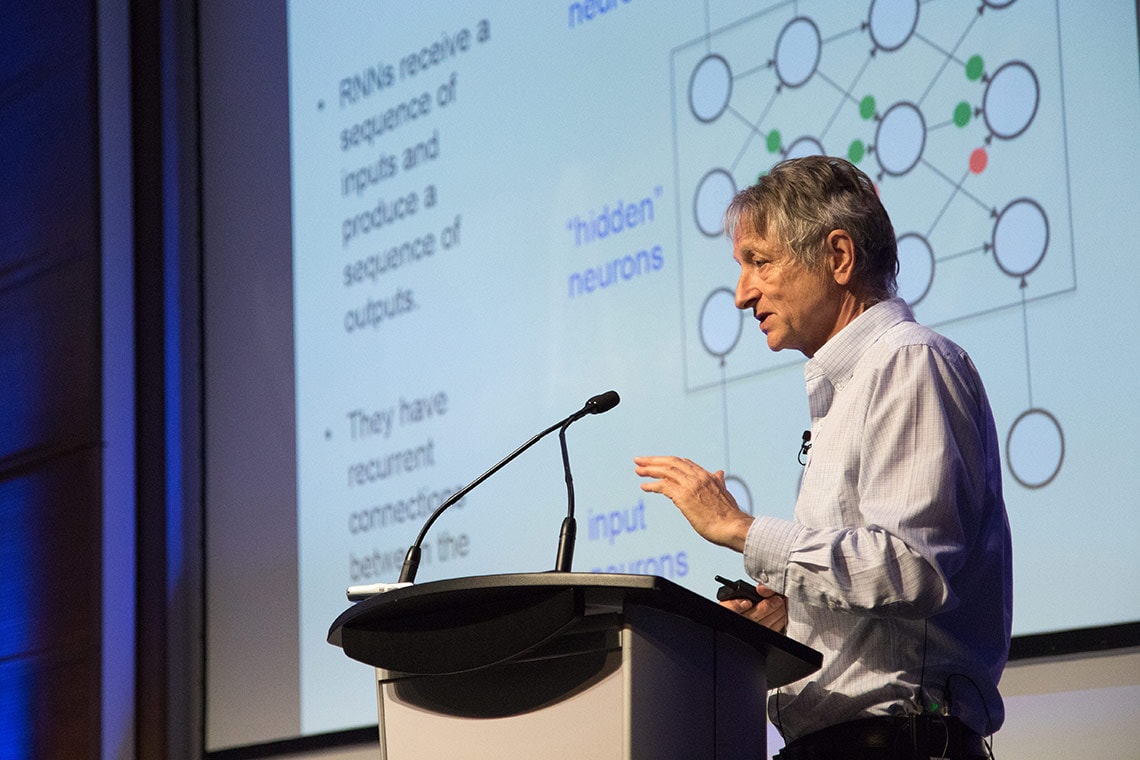

This is not how image recognition should work. Geoffrey Hinton (inventor of Capsule Networks) outlines this in his talk about “What is wrong with Convolution Neural Networks”.

Problems with MaxPooling

“The pooling operation used in convolutional neural networks is a big mistake and the fact that it works so well is a disaster.”

Layers need to communicate with each other. A MaxPooling layer works like a messenger between two layers of a CNN and transfers the activation information from one layer to the next. It tells the next layer about the presence of a part, but not about the spatial relation between the parts. The MaxPooling layer strips off this information to create translational invariance: the ability of a network to detect an object wherever it lies in the image.
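A small NumPy sketch shows how pooling throws away position. The helper `max_pool_2x2` is written here for illustration (it is not a library function) and assumes a 2-D feature map with even sides.

```python
import numpy as np

def max_pool_2x2(feature_map):
    """Non-overlapping 2x2 max pooling on a 2-D array with even sides."""
    h, w = feature_map.shape
    # Split into (h/2 x w/2) blocks of size 2x2, then take the max of each block.
    blocks = feature_map.reshape(h // 2, 2, w // 2, 2)
    return blocks.max(axis=(1, 3))

# Two feature maps with the same "parts" detected at different positions.
a = np.zeros((4, 4))
a[0, 0] = 1.0
a[2, 2] = 1.0

b = np.zeros((4, 4))
b[1, 1] = 1.0
b[3, 3] = 1.0

pooled_a = max_pool_2x2(a)
pooled_b = max_pool_2x2(b)
print(np.array_equal(a, b), np.array_equal(pooled_a, pooled_b))
```

The inputs differ, but the pooled outputs are identical: the next layer learns that something fired in each region, not where inside the region it fired.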

Capsule Networks use Dynamic Routing between Capsules in layers to pass on the information from one layer to the next layer (in place of MaxPooling layer in CNN) [More on Dynamic Routing later]

Also, MaxPooling does not provide ‘viewpoint invariance’: the ability of a model to stay invariant to changes in viewpoint. Capsule Networks have reported particularly strong results on problems involving viewpoint invariance.

Example: smallNORB images from different viewpoints. The dataset contains images of objects captured from different angles, and the objective is to identify an object with a model trained on images of the same objects viewed from other viewpoints. In the reported results, a Capsule Network trained on this dataset reduced the number of test errors by about 45% compared with the best CNN result at the time.

What is a Capsule Network and how does it overcome these limitations?

Capsule Networks are a new class of network that relies more on modelling the hierarchical relationships in understanding an image to mimic the way a human brain learns. This is completely different from the approach adopted by traditional neural networks.

Before continuing to the algorithm for a CapsNet, here are a few terms you need to know to understand how a Capsule Network works.

Hierarchy of parts

A human brain learns and analyzes visual images in a hierarchy of parts. We first learn the boundaries, then the shapes and figures that contribute to the individual components (e.g. nose, eyes), the components then make up parts (e.g. face), and these parts combine to create the final image (e.g. body).

For example, to define a face we define the position of the eyes relative to the face, not relative to the body of the person. To define a body we define the position of the face relative to the body. The individual parts make up the face, and these objects (face, hands, legs) make up the body. This hierarchical representation is known as the ‘hierarchy of parts’ in a Capsule Network. A Capsule Network works by identifying the instantiation parameters (see: Inverse Graphics philosophy) associated with each part in a capsule and then passing the information along to capsules in the next layer. In the face example, there would be an individual capsule for each of the parts. Together they predict the presence of a face in a capsule in the next layer, provided that their parameters (rotation, spatial position, etc.) are also consistent with a face.

Inverse Graphics Philosophy

How does a graphic designer generate a video game character or an object? They tell the rendering engine the details of the object: for example, its orientation in space, its dimensions, its position relative to other objects, etc. The rendering engine takes these inputs, which are from the designer’s viewpoint, and generates a model of the object which can be viewed from any viewpoint. In short, using parameters and properties like shape, size and colour, the rendering engine can generate the entire object.

A Capsule Network does the same thing in reverse (inverse graphics): it takes an image and learns its activation vectors (length, orientation, pose, relative position, etc.). This creates a representation of an object in terms of parameters that the network can learn, and the capsules use these parameters to predict objects.

Equivariance & Viewpoint Invariance

Convolutional Neural Networks are translationally invariant, but not rotationally invariant. This means they can detect objects with the same orientation in a different part of an image, but cannot detect objects if they are rotated.

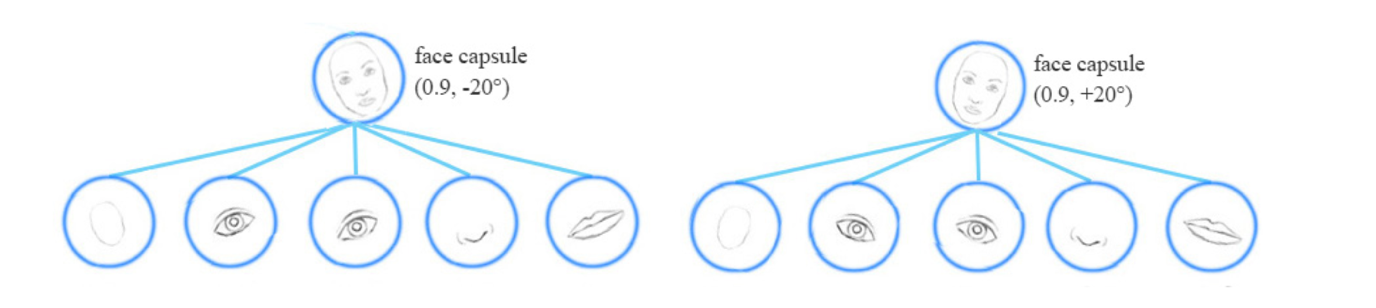
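The difference between handling a shift and handling a rotation can be seen with a single hand-made filter. This is a toy sketch, not a trained CNN: the `correlate_valid` helper, the vertical-bar kernel and the 8x8 test image are all assumptions chosen for illustration.

```python
import numpy as np

def correlate_valid(image, kernel):
    """Plain 'valid' 2-D cross-correlation, the core operation of a conv layer."""
    kh, kw = kernel.shape
    oh, ow = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for r in range(oh):
        for c in range(ow):
            out[r, c] = np.sum(image[r:r + kh, c:c + kw] * kernel)
    return out

# Hand-made filter that responds strongly to a vertical bar.
kernel = np.array([[-1, 2, -1]] * 3, dtype=float)

image = np.zeros((8, 8))
image[2:5, 3] = 1.0                                   # a vertical bar
shifted = np.roll(image, shift=(1, 1), axis=(0, 1))   # same bar, moved by one pixel
rotated = np.rot90(image)                             # same bar, now horizontal

resp = correlate_valid(image, kernel)
resp_shifted = correlate_valid(shifted, kernel)
resp_rotated = correlate_valid(rotated, kernel)

# Strongest response anywhere in the map (a global max pool).
print(resp.max(), resp_shifted.max(), resp_rotated.max())
```

The shifted bar produces the same response map, just moved by one pixel, so the strongest response is unchanged. The rotated bar barely excites the filter, so a network relying on it would need extra filters (and extra training images) for each orientation.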

Capsule Networks are equivariant, which means you do not have to feed separate images of a rotated face to the network to train it (example shown in the image below). In fact, one of the parameters (activation vectors) that the capsules learn is rotation: how many degrees an object is rotated. This is a big deal, since it reduces the number of images needed to train a Capsule Network compared to a Convolutional Neural Network.

Here is a comparison between the detection of rotated faces by a CNN and by a Capsule Network. The CNN needs to learn from separate samples for each of the three rotations (0°, +20°, -20°), whereas the Capsule Network learns the rotation of the face (0°, +20°, -20°) in a single capsule, alongside other features. It does not need to be trained explicitly on rotated faces.

CNN MODEL – needs to be trained on all orientations of a face.

CapsNet – detects the rotation and learns it as one of the activation vectors.

Routing by agreement (Dynamic Routing)

Dynamic routing is the brain of Capsule Networks and is what makes them so accurate. A CNN learns features and uses their presence to generate the final probability that the image contains an object made up of those features. A Capsule Network not only detects the features, but also detects their orientation (among other things), how they are related, and the probability that each feature belongs to a higher-level object in the hierarchy of parts. Each capsule determines the presence of a lower-level feature along with its activation parameters (length, thickness, rotation, position w.r.t. other features) and calculates the probability that the feature predicts a higher-level object. Once the routing finds that a capsule in layer L agrees most strongly with object A in layer L+1 rather than object B, most of that capsule’s output is sent to the capsule for object A in layer L+1.

Through dynamic routing, lower level capsules (in layer L) get feedback from higher-level capsules (in layer L+1) about what to pay attention to. The lower level capsule will send its output to the higher level capsule whose output is similar.

How does a Capsule Network take all these ideas to implementation?

The Algorithm: How to calculate the forward pass in the network from layer ‘l’ to layer ‘l+1’

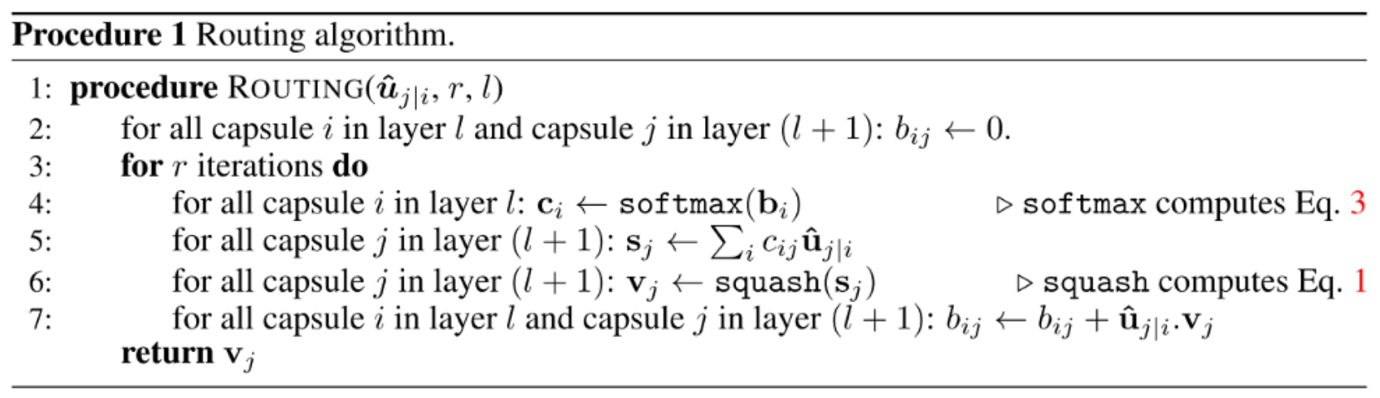

Source: the original paper, “Dynamic Routing Between Capsules” by Sara Sabour, Nicholas Frosst and Geoffrey Hinton. Read the original paper to dig deeper.

Capsules in layer ‘l’ are denoted by ‘i’ and those in the next layer ‘l+1’ are denoted by ‘j’.

ûj|i: prediction vector that capsule i in layer ‘l’ produces for capsule j in layer ‘l+1’

r: number of routing iterations

Output: vj, the output vector of a higher-level capsule j in layer ‘l+1’

Explanation of Steps:

bij: initialized to 0. This value is updated in every iteration, and the routing weights cij are computed from it.

Repeat r times:

- cij = softmax(bij): the routing weights for a lower-level capsule i (this is done for every lower-level capsule i in layer ‘l’). The softmax converts the weights into probabilities between 0 and 1 that sum to 1 across the higher-level capsules. In the first pass, since all bij are equal to 0, all cij are equal: 1 divided by the number of higher-level capsules (0.5 if there are two). This means the lower-level capsules initially have no idea which higher-level capsule best fits their output.

- sj : Calculated for all higher level capsules j in layer ‘l+1’ once we have all cij. Line 5 in the algorithm calculates a linear combination of input vectors, weighted by cij which were calculated in previous step. This is essentially scaling down input vectors and adding them together.

- vj: Squashing function applied to sj. This step ensures that the direction of the vector sj is preserved while its length is restricted to between 0 and 1, so that it can be used as a probability later in the network.

- Updating initial weights bij : This is the main component of a routing algorithm. This operation looks at each higher level capsule j in layer ‘l+1’ and then examines each input to update the corresponding weight bij.

bij = bij + ûj|i · vj

The new weight equals the old weight plus the dot product of the current output of capsule j in layer ‘l+1’ (vj) and the input to this capsule from a lower-level capsule i (ûj|i). The dot product measures the similarity between the input to the capsule and the output from the capsule, so a lower-level capsule ends up sending its output to the higher-level capsule whose output is most similar.

- The algorithm repeats ‘r’ times to update the weights.
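The steps above can be sketched in a few lines of NumPy. This is a minimal sketch under stated assumptions, not the paper’s implementation: the function names, the array layout `(lower capsules, higher capsules, vector size)` and the hand-picked prediction vectors `u_hat` are all made up for illustration, and there is no learning or batching.

```python
import numpy as np

def softmax(b, axis):
    e = np.exp(b - b.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def squash(s, eps=1e-9):
    """Keep the direction of s and map its length into [0, 1)."""
    sq_norm = np.sum(s ** 2, axis=-1, keepdims=True)
    return (sq_norm / (1.0 + sq_norm)) * s / np.sqrt(sq_norm + eps)

def dynamic_routing(u_hat, r=3):
    """u_hat[i, j] is the prediction of lower capsule i for higher capsule j."""
    num_lower, num_higher, _ = u_hat.shape
    b = np.zeros((num_lower, num_higher))           # bij start at 0
    for _ in range(r):
        c = softmax(b, axis=1)                      # cij: each capsule i spreads its output over all j
        s = np.einsum('ij,ijd->jd', c, u_hat)       # sj: weighted sum of the predictions
        v = squash(s)                               # vj: direction kept, length below 1
        b = b + np.einsum('ijd,jd->ij', u_hat, v)   # bij += dot(u_hat[i, j], v[j])
    return v, c

# Three lower capsules, two higher capsules, 2-D vectors.
# All three agree about higher capsule 0 and disagree about higher capsule 1.
u_hat = np.array([
    [[2.0,  0.0], [0.0,  1.0]],
    [[2.0,  0.2], [0.0, -1.0]],
    [[1.8, -0.1], [1.0,  0.0]],
])

v, c = dynamic_routing(u_hat, r=3)
print(np.round(c, 3))
print(np.round(np.linalg.norm(v, axis=1), 3))
```

With a single iteration the weights are still uniform. After a few iterations, the weights towards higher capsule 0 grow because the predictions for it point the same way, and its output vector is the longer of the two.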

Outlook

Capsule Networks are still evolving, and due to their complex nature and computational cost they have not been widely implemented in production, though they have shown great promise. One of the key benefits of Capsule Networks is that they tend to move away from the black-box model of a neural network and represent more concrete features, which can be interpreted to understand what the network is learning and how. Hopefully, researchers will continue making CapsNets better and faster so that they can become the baseline for solving image-based deep learning problems.