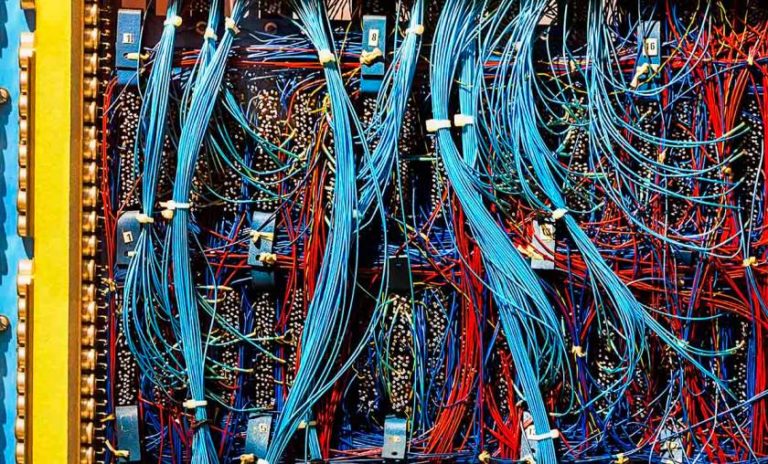

In computer architecture, the combination of the CPU and main memory, which the CPU can read from or write to directly using specific instructions, is regarded as the core of the computer. Any transfer of data to or from that CPU/memory combination, for instance reading data from a disk drive, is considered I/O.

Applications are constantly changing, and research applications in particular are becoming more sophisticated. For example, GPUs and many-core CPUs have enabled more capable machine learning and AI applications.

Larger and more complex workflows can look like a compute problem, but each of them also presents a different I/O pattern to the file system. The file systems in many enterprises may not be structured to cope with these changes. On top of the many other challenges an application developer has to worry about, matching memory block sizes to the file system for maximum efficiency can be very difficult.

It is even more difficult when the application runs on, say, 100 nodes, or runs several different models and algorithms at once.
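The block-size point can be illustrated with a minimal Python sketch. It writes the same payload twice, once in small chunks and once in chunks sized to the block size the file system reports, and counts the write calls each approach issues. The function names and the 64-byte chunk size are illustrative assumptions, and `st_blksize` is not reported on every platform, so the sketch falls back to an assumed 4096 bytes.

```python
import os
import tempfile


def preferred_block_size(path, default=4096):
    """Return the I/O block size the file system reports for `path`."""
    # st_blksize is missing on some platforms; `default` is an assumption.
    return getattr(os.stat(path), "st_blksize", default) or default


def count_writes(path, payload, chunk_size):
    """Write `payload` unbuffered in `chunk_size` pieces; return the call count."""
    calls = 0
    # buffering=0 disables Python's own buffer, so each write() is passed
    # straight to the operating system.
    with open(path, "wb", buffering=0) as f:
        for start in range(0, len(payload), chunk_size):
            f.write(payload[start:start + chunk_size])
            calls += 1
    return calls


def compare_chunk_sizes(total_bytes=1 << 20, small_chunk=64):
    """Return (block_size, writes_with_small_chunks, writes_with_block_chunks)."""
    payload = b"x" * total_bytes
    with tempfile.TemporaryDirectory() as tmp:
        block = preferred_block_size(tmp)
        small = count_writes(os.path.join(tmp, "small.bin"), payload, small_chunk)
        aligned = count_writes(os.path.join(tmp, "aligned.bin"), payload, block)
    return block, small, aligned


if __name__ == "__main__":
    block, small, aligned = compare_chunk_sizes()
    print(f"block size {block}: {small} small writes vs {aligned} block-sized writes")
```

If the file system reports a 4096-byte block, writing 1 MiB takes 256 block-sized writes against 16384 writes of 64 bytes. On shared or parallel storage each of those extra calls may become a separate request, which is why chunk size matters more there than on a local disk.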

Bad I/O can come from a number of places, such as third-party tools and libraries, and particularly legacy code. Compute infrastructure and data storage have changed a lot over the last few decades. What was good software practice ten years ago may not be relevant now. There are still applications that assume storage is fast and local, and that causes a lot of problems.

Other misunderstandings about IT infrastructure can cause problems too. For example, an application may run well in one environment but poorly in another, particularly when a company works with a partner organisation that has a different IT setup. Likewise, if a company upgrades its IT infrastructure, brings in a new cluster or moves to a hybrid cloud, its earlier assumptions may no longer hold. Continuously monitoring and occasionally profiling your applications is therefore important for avoiding these traps.

Value In High-Performance Computing

Companies are looking to solve I/O-related challenges. For example, Altair recently acquired Ellexus, an I/O profiling firm that analyses input/output (I/O) patterns in real time to locate system bottlenecks and rogue applications. Its tools protect shared storage by finding bad I/O patterns quickly, and they also help keep tabs on cloud resource usage. I/O performance is of great importance to software vendors, high-performance computing organisations and anywhere big data meets big compute.

Altair made this strategic acquisition because the HPC community is interested in analysing I/O patterns properly. In high-performance computing systems, parallel I/O architectures usually have complex hierarchies with multiple layers that collectively constitute an I/O stack. This stack may include high-level I/O libraries, I/O middleware and parallel file systems such as PVFS and Lustre. It is therefore important to understand the complex I/O hierarchies of high-performance storage systems, including storage caches, HDDs and SSDs, in order to implement state-of-the-art compiler and runtime system technology that targets I/O-intensive HPC applications.

Many companies are looking to containerise their applications, usually with Singularity containers in the HPC community, and need to make sure the containers include the right files to run those applications. Following on from that is application correctness, where companies need to determine why an application works for them but not for others. Perhaps a team has the wrong version of Python installed, or a config file is missing. Problems like these can be picked up quickly and easily by looking at what the application requires in order to run.
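As a rough illustration of that kind of check, the sketch below tests for the two problems mentioned above: an interpreter that is too old and a required file that is missing. The `preflight` function, the version threshold and the file names are hypothetical and not taken from any particular tool.

```python
import os
import sys


def preflight(min_python, required_files):
    """Return a list of human-readable problems; an empty list means all clear."""
    problems = []
    # Compare (major, minor) of the running interpreter with the minimum.
    if sys.version_info[:2] < tuple(min_python):
        found = ".".join(str(part) for part in sys.version_info[:2])
        wanted = ".".join(str(part) for part in min_python)
        problems.append(f"Python {wanted}+ required, found {found}")
    # Every required file (for example a config file) must exist.
    for path in required_files:
        if not os.path.isfile(path):
            problems.append(f"missing file: {path}")
    return problems


if __name__ == "__main__":
    # Hypothetical requirements, for illustration only.
    for problem in preflight((3, 8), ["settings.cfg"]):
        print(problem)
```

A check like this only covers requirements somebody has already written down. Finding the requirements nobody documented is where tracing the files an application actually touches comes in.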

Detecting Dependencies

Detecting dependencies is the number one thing that you want to do with I/O profiling. Long before companies start to look at I/O patterns and performance, they need to know which files are accessed and at which network locations. They need this information for containerisation and migration.
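For a Python application, one way to get a first list of accessed files is the interpreter's audit hooks (Python 3.8 and later), which raise an `open` event whenever a file is opened. The sketch below is a minimal illustration under that assumption. It only sees opens made by the Python process itself, not by child processes or native tools, and the helper names `record_file_access` and `group_by_directory` are hypothetical.

```python
import os
import sys
from collections import defaultdict
from contextlib import contextmanager

_accessed = set()
_recording = False


def _audit_hook(event, args):
    """Record the path of every "open" audit event while recording is on."""
    if not _recording or event != "open":
        return
    target = args[0]
    if isinstance(target, int):  # open() on an existing file descriptor
        return
    try:
        _accessed.add(os.path.abspath(os.fsdecode(target)))
    except TypeError:
        pass  # not a path-like object; ignore it


# Audit hooks cannot be removed once added, so recording is gated on a flag.
sys.addaudithook(_audit_hook)


@contextmanager
def record_file_access():
    """Collect the absolute paths opened inside the `with` block."""
    global _recording
    _accessed.clear()
    _recording = True
    try:
        yield _accessed
    finally:
        _recording = False


def group_by_directory(paths):
    """Group file names by directory to show which locations are used."""
    groups = defaultdict(list)
    for path in sorted(paths):
        groups[os.path.dirname(path)].append(os.path.basename(path))
    return dict(groups)
```

Wrapping an application's entry point in `with record_file_access() as seen:` and then printing `group_by_directory(seen)` gives a per-directory list of the files it opened, which is a starting point for deciding what a container or a migrated environment has to include.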

The vast majority of applications aren’t exascale and do not get rewritten and re-optimised for every machine that comes along. The code may also have evolved over 10-15 years. It is therefore tricky for a company that adopts a new file system to optimise its application again, not just for the compute side but also for the file system attributes that shape its I/O patterns.

It is important for them to keep on top of application file-system dependencies and I/O patterns. There are tools that help users who run and maintain distributed, scientific and HPC applications understand the way those applications access data. Only by understanding what you are running can you be fast, agile and cloud-ready.

Once a company has got to the point where its application is correct, it can look at performance and efficiency. That means understanding the resources the application uses, making sure it has the right type of storage and that its temporary files are in the right place.

Profiling I/O is also important so that you know how the application is performing over time and how the performance of the storage is affecting that application. Companies can then move on from profiling to monitoring I/O, which is a passive activity for making sure they understand what is going on in a broader sense.
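A very small version of such profiling can be sketched in Python by wrapping a file object and timing each read. The `ProfiledReader` class and `summarise` function below are hypothetical names used for illustration. Dedicated profilers capture far more detail, such as metadata operations and activity across processes and nodes.

```python
import time


class ProfiledReader:
    """Wrap a binary file and record (bytes, seconds) for every read call."""

    def __init__(self, path, clock=time.perf_counter):
        self._file = open(path, "rb")
        self._clock = clock
        self.samples = []  # one (bytes_read, elapsed_seconds) pair per call

    def read(self, size):
        start = self._clock()
        data = self._file.read(size)
        self.samples.append((len(data), self._clock() - start))
        return data

    def close(self):
        self._file.close()

    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc, tb):
        self.close()


def summarise(samples):
    """Reduce per-call samples to totals that can be tracked over time."""
    total_bytes = sum(n for n, _ in samples)
    total_seconds = sum(t for _, t in samples)
    return {
        "calls": len(samples),
        "bytes": total_bytes,
        "seconds": total_seconds,
        "mean_bytes_per_call": total_bytes / len(samples) if samples else 0.0,
    }
```

A low `mean_bytes_per_call` combined with a high call count is one sign of the many-small-reads pattern that tends to hurt shared storage. Logging these summaries on every run is one simple way to move from occasional profiling towards continuous monitoring.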