
Training a neural network on a large, difficult task with an algorithm such as error back-propagation normally takes a long time. The learning rate is a hyper-parameter of such algorithms that governs how much the network's weights are adjusted with respect to the loss gradient. The lower the value, the more slowly the algorithm moves downhill. While a low learning rate helps ensure that the algorithm does not skip over any local minima, it also means that convergence takes proportionally longer. This article focuses on the reasoning behind choosing a lower learning rate. The following topics are covered.

Table of contents

- Refresh about gradient descent and learning rate

- Effect of learning rate

- How to choose a learning rate?

- Experimenting with learning rate

On large, complicated problems, a high learning rate reduces generalisation accuracy and slows training. Let’s look at the effect of the learning rate.

Refresh about gradient descent and learning rate

Gradient descent is an optimization algorithm that minimizes a cost (or loss) function. Starting from a point on the loss surface, it calculates the slope at that point and moves the weights a step downhill. It repeats this process until it reaches a minimum of the loss function, ideally the global one. The size of each step is controlled by a parameter known as the learning rate. The smaller the learning rate, the smaller each step, and the more points along the loss surface the algorithm visits before it arrives.
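A minimal sketch of the gradient descent update may help here. It assumes a one-dimensional loss f(w) = (w - 3)**2 chosen purely for illustration; the function names, starting point and learning rate are assumptions, not part of the experiment later in the article.

```python
def loss_gradient(w):
    """Gradient of the illustrative loss f(w) = (w - 3)**2."""
    return 2.0 * (w - 3.0)


def gradient_descent(w, learning_rate, steps):
    """Repeatedly step against the gradient; the learning rate scales each step."""
    for _ in range(steps):
        w = w - learning_rate * loss_gradient(w)
    return w


w_final = gradient_descent(w=0.0, learning_rate=0.1, steps=100)
print(w_final)  # ends up very close to the minimum at w = 3
```

The only place the learning rate appears is as a multiplier on the gradient, which is why it sets the size of every move downhill.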

So, according to this, one just needs to keep the learning rate as low as possible, right? No, there is a catch: lower is better, but not the lowest possible, since the learning rate affects both the training time and the accuracy of the model.

Effect of learning rate

There are several strategies for adjusting the learning rate automatically, although these methods usually focus on improving convergence speed rather than generalization accuracy. To speed up training, many neural network practitioners use the highest learning rate that still allows convergence. For large and complicated problems, however, a high learning rate reduces generalization accuracy and slows training. Conversely, once the learning rate is small enough, further reductions waste computing resources without improving generalization accuracy.

In gradient descent learning, the error gradient is calculated at the current location in weight space, and the weights are moved in the opposite direction of this gradient in an attempt to reduce the error. However, while the gradient indicates which direction the weights should move in, it does not say how far they can safely be moved in that direction before the error stops falling and begins to rise again.

As a result, an excessively high learning rate frequently moves too far in the “right” direction, overshooting a minimum of the error surface and lowering accuracy. It also takes longer to train, because the network keeps overshooting its goal and “unlearning” what it has learnt, which requires costly backtracking or produces fruitless oscillations. Because the weights never settle down enough to move into a minimum before bouncing back out, this instability frequently leads to poor generalization accuracy.

Once the learning rate is low enough to prevent such overcorrections, the network can move through the error landscape fairly smoothly and eventually settle in a minimum.

A lower learning rate can make this path smoother, which can improve generalization accuracy considerably. However, there comes a point where lowering the learning rate any further is simply a waste of time, resulting in many more steps than are needed to walk the same path to the same minimum.
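The three regimes described in this section (overshooting, smooth convergence, and needlessly small steps) can be reproduced on a toy problem. The sketch below assumes the loss f(w) = w**2 and an arbitrary tolerance and divergence threshold; none of these values come from the article's experiment.

```python
def steps_to_converge(learning_rate, w=1.0, tol=1e-6, max_steps=10_000):
    """Count gradient steps on f(w) = w**2 until |w| < tol; None if it never gets there."""
    for step in range(max_steps):
        if abs(w) < tol:
            return step
        w = w - learning_rate * 2.0 * w   # the gradient of w**2 is 2w
        if abs(w) > 1e12:                 # the steps keep overshooting: diverged
            return None
    return None


results = {lr: steps_to_converge(lr) for lr in (1.1, 0.9, 0.4, 0.01)}
for lr, steps in results.items():
    status = "diverged" if steps is None else f"{steps} steps"
    print(f"learning rate {lr}: {status}")
```

On this toy loss, 1.1 overshoots further on every step, 0.9 still converges but oscillates around the minimum, 0.4 gets there quickly, and 0.01 follows a smooth path that needs many more steps.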

How to choose a learning rate?

Generalization accuracy and training speed both improve when an excessively high learning rate is reduced. The difficulty is selecting a learning rate that is small enough to give acceptable generalization accuracy without wasting computational resources.

Rule out larger learning rates

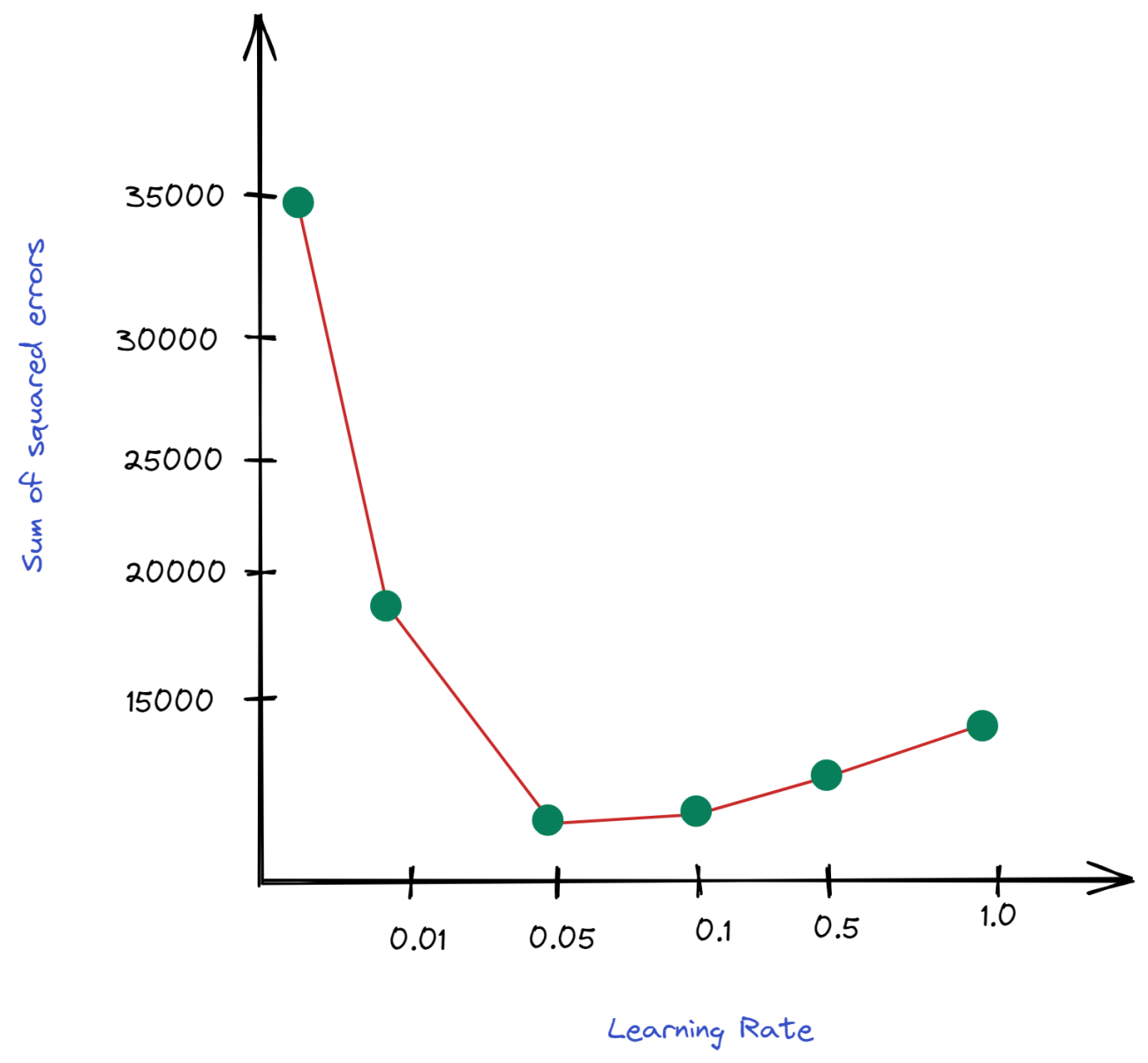

To begin, it is necessary to rule out learning rates so large that they train more slowly and less accurately than a smaller learning rate. This can be done by examining either the generalization accuracy on a hold-out set or, more conveniently, the total sum of squared errors calculated during training.

The graph above shows a fictional scenario: the total sum of squared errors after 200 training epochs for each learning rate. The learning rate of 0.05 has the lowest error, which makes it the “fastest” learning rate in terms of reducing the total sum of squared errors. In this case, any learning rate greater than 0.05 can be rejected, because it is slower and most likely less accurate, and so offers no benefit over the “fastest” learning rate.

To rule out very high learning rates cheaply, one can train a collection of neural networks for only one epoch each, using learning rates of different orders of magnitude (with the same initial weights and order of presentation for each network). The learning rate that produces the lowest sum of squared errors is then regarded as the “fastest,” and any larger learning rates are dropped from further evaluation.
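The one-epoch screening step can be sketched as follows. To stay self-contained, this illustration swaps the neural network for a small linear model trained with per-sample SGD on synthetic data; the helper names, the data and the list of candidate rates are all assumptions made for the example.

```python
import numpy as np


def sse_after_one_epoch(lr, X, y, w0):
    """Train a linear model for one epoch of per-sample SGD; return the sum of squared errors."""
    w = w0.copy()                          # identical initial weights for every rate
    with np.errstate(over="ignore", invalid="ignore"):
        for xi, yi in zip(X, y):           # identical presentation order for every rate
            w -= lr * (xi @ w - yi) * xi
        sse = float(np.sum((X @ w - y) ** 2))
    return sse if np.isfinite(sse) else float("inf")   # treat divergence as the worst case


def rule_out_large_rates(rates, X, y, w0):
    """Find the 'fastest' rate (lowest one-epoch SSE) and drop every larger rate."""
    errors = {lr: sse_after_one_epoch(lr, X, y, w0) for lr in rates}
    fastest = min(errors, key=errors.get)
    kept = [lr for lr in rates if lr <= fastest]
    return fastest, kept, errors


rng = np.random.default_rng(0)
X = rng.normal(size=(200, 2))
y = X @ np.array([1.5, -2.0]) + rng.normal(scale=0.1, size=200)
rates = [1.0, 0.5, 0.1, 0.05, 0.01, 0.005, 0.001]
fastest, kept, errors = rule_out_large_rates(rates, X, y, np.zeros(2))
print("fastest:", fastest, "kept for further evaluation:", kept)
```

Only the rates in `kept` would go on to the longer, more expensive training runs.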

Maximizing Accuracy

The “fastest” learning rate is not necessarily the most accurate, but it is almost always at least as accurate as any larger, slower learning rate. On large, complicated tasks, a learning rate smaller than the “fastest” one frequently leads to better generalization accuracy, so it is generally worthwhile to spend more training time on smaller learning rates to improve accuracy.

The most basic strategy is to train the neural network repeatedly with progressively smaller learning rates and to stop once the maximum accuracy no longer increases. While this approach requires training the neural network several times, the first pass uses the fastest learning rate and so finishes quickly. Subsequent passes are slower, but only a few should be needed before the accuracy plateaus. Taken together, the passes completed so far normally take roughly as long as a single pass at the next slower learning rate.
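One way to express this search loop is sketched below. The callable `train_and_score` is hypothetical: it stands for whatever code trains a fresh network at a given learning rate and returns its best hold-out accuracy. Shrinking the rate by a factor of 10 per pass and capping the number of passes are assumptions of this sketch, not prescriptions from the text.

```python
import math


def search_learning_rate(train_and_score, fastest_lr, factor=10.0, max_passes=6):
    """Lower the learning rate pass by pass until the accuracy stops improving."""
    best_lr, best_acc = fastest_lr, train_and_score(fastest_lr)
    lr = fastest_lr
    for _ in range(max_passes - 1):
        lr = lr / factor                 # next, slower learning rate
        acc = train_and_score(lr)
        if acc <= best_acc:              # accuracy has plateaued: stop searching
            break
        best_lr, best_acc = lr, acc
    return best_lr, best_acc


def fake_score(lr):
    """Stand-in for real training: accuracy peaks at a learning rate of 0.01."""
    return 0.90 - 0.05 * abs(math.log10(lr) + 2.0)


best_lr, best_acc = search_learning_rate(fake_score, fastest_lr=0.1)
print(best_lr, best_acc)
```

With a real model, `fake_score` would be replaced by a function that builds, trains and evaluates the network for the given rate.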

Stopping Criteria

For the above strategy to work well, it is critical to use the right stopping criterion. Convergence is often defined as reaching a given level of the sum of squared errors, but this criterion is prone to overfitting and requires the user to choose the error threshold. Hold-out set accuracy should be used instead to decide whether the neural network has been trained long enough for a given learning rate.

When the learning rate is at least as low as the “fastest” learning rate, the generalization accuracy normally rises steadily, levels off at a plateau, and then begins to fall as the network overfits.
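A hold-out stopping rule of this kind can be sketched in plain Python. The function name, the `patience` parameter and the accuracy values are illustrative assumptions; the idea is simply to remember the best epoch and stop once the hold-out accuracy has failed to improve for a while.

```python
def best_stopping_epoch(holdout_acc, patience=5):
    """Return (epoch, accuracy) of the best hold-out score, scanning with early stopping."""
    best_epoch, best_acc = 0, float("-inf")
    for epoch, acc in enumerate(holdout_acc):
        if acc > best_acc:
            best_epoch, best_acc = epoch, acc      # new best: remember it
        elif epoch - best_epoch >= patience:
            break                                  # no improvement for `patience` epochs
    return best_epoch, best_acc


# Rises, plateaus, then falls as the (imaginary) network overfits.
history_acc = [0.70, 0.78, 0.83, 0.85, 0.85, 0.84, 0.83, 0.82]
print(best_stopping_epoch(history_acc, patience=3))
```

In Keras, the `EarlyStopping` callback offers comparable behaviour through its `monitor` and `patience` arguments.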

Experimenting with learning rate

This article uses a dense neural network with two input features, a single hidden layer of 50 nodes and an output layer of 3 nodes. The data is synthetic, generated specifically for this experiment.

Let’s start by importing the dependencies.

from sklearn.datasets import make_blobs

import tensorflow as tf

from tensorflow.keras.layers import Dense

from tensorflow.keras.models import Sequential

from tensorflow.keras.optimizers import SGD

from tensorflow.keras.utils import to_categorical

import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split as tt_split

If you are working in a Google Colab notebook, use TensorFlow’s Keras API to build the neural network.

Creating the data is a simple task; refer to the notebook attached in the references section.
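For readers without the notebook at hand, the data could be created along the following lines. This is only a sketch: the sample count, cluster spread, split ratio and random seeds are assumptions rather than the notebook's actual settings, and `np.eye(3)[y]` is used here as a stand-in that produces the same one-hot layout as `to_categorical`.

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.model_selection import train_test_split as tt_split

# Assumed settings: 1,000 two-dimensional points drawn from three clusters.
X, y = make_blobs(n_samples=1000, centers=3, n_features=2,
                  cluster_std=2.0, random_state=2)

# One-hot encode the three class labels for categorical cross entropy.
y_onehot = np.eye(3)[y]

# Hold out half of the points for validation.
trainX, testX, trainy, testy = tt_split(X, y_onehot, test_size=0.5, random_state=2)
print(trainX.shape, trainy.shape, testX.shape, testy.shape)
```

Two features and three classes match the `input_dim=2` and three-node softmax output used by the model in this section.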

As explained above, we build a neural network with a single hidden layer, using a ReLU activation function for the hidden layer and softmax for the output layer. The loss function is categorical cross entropy, since this is a multiclass problem, and the evaluation metric is the area under the ROC curve.

model = Sequential()

model.add(Dense(50, input_dim=2, activation='relu', kernel_initializer='he_uniform'))

model.add(Dense(3, activation='softmax'))

opt = SGD(learning_rate=lrate)  # lrate is the learning rate under test

auc_metric = tf.keras.metrics.AUC(num_thresholds=200, curve='ROC', summation_method='interpolation', name='auc_score')  # renamed from eval, which shadows a Python builtin

model.compile(loss='categorical_crossentropy', optimizer=opt, metrics=[auc_metric])  # metrics is passed as a list

# use_multiprocessing is dropped: it only applies to generator inputs and recent Keras versions reject it in fit()

history = model.fit(trainX, trainy, validation_data=(testX, testy), batch_size=50, epochs=200, verbose=0)

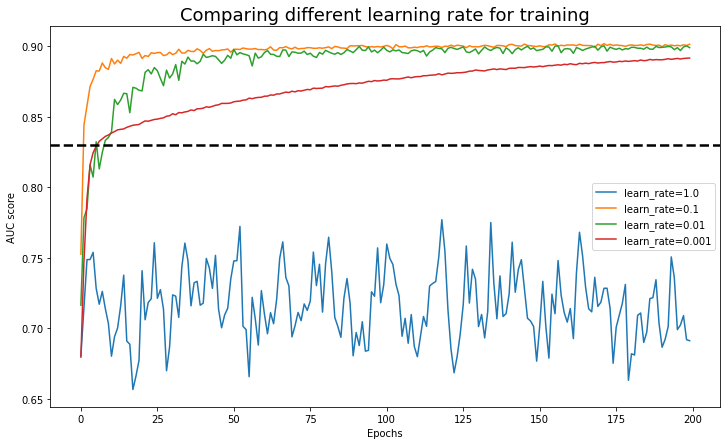

The model is trained with several different learning rates, and the output of each run is stored in the variable history. Once the model has been trained and validated, we compare the evaluation metric across the learning rates.

On the training data, a learning rate of 1.0 is clearly not the best. Lowering the learning rate from 1.0 to 0.1 raises the AUC score from 0.75 to 0.90, but with this learning rate the score stays almost constant as the number of epochs grows, which is not ideal. With a learning rate of 0.01, the score is lower initially, but after 100 epochs it is approximately equal to that of the 0.1 run. Because a learning rate of 0.001 takes much smaller steps, it needs more time to reach the same results than the other two.

The same pattern can be observed on the validation data. In conclusion, the best learning rate for this neural network is 0.01.

Conclusion

The learning rate regulates how much the model learns at each step with the resources available. With a well-chosen value, the model can fit the best estimate of the target function that its layers and nodes per layer allow. An appropriate learning rate is one of the keys to building a good neural network. In this article, we have seen why the learning rate should generally be kept low, though not so low that training time is wasted.