Neural networks are networks of interconnected neurons designed to carry out specific tasks. Some neural network architectures are very simple, while others are very complex. The complexity and depth of a network depend on the task at hand, and even complex or deep networks can underfit when they fail to capture the different patterns in the data. In this article, let us look at a method called bias regularization and see whether it helps a network capture the patterns in the data or instead causes it to underfit.

Table of Contents

- What is underfitting in neural networks?

- What is bias regularization in neural networks?

- Case study of bias regularization in neural networks

- Summary

What is underfitting in neural networks?

A well-built neural network should reach good loss and accuracy on both the training and the testing data. Underfitting occurs when the network cannot capture the patterns and variations in the data at different scales, so it performs poorly even on the training data. Underfitting can also occur when data is scarce and the model does not see enough data points to learn a reliable mapping, although scarce data more often leads to overfitting.

The main cause of underfitting is a model that is too simple, for example one with too few layers. Such a model saturates early: its loss and accuracy plateau and stay at almost the same values over the remaining iterations (epochs), instead of continuing to improve as the network converges.
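The plateau described above can be spotted in the per-epoch loss values, for example the list returned in `model1_res.history['loss']` in the case study below. The sketch uses a hypothetical helper, `has_plateaued`, whose window and tolerance are arbitrary illustrative values rather than recommended settings; it flags a run whose loss has barely moved over the last few epochs.

```python
def has_plateaued(losses, window=3, tol=1e-3):
    """Return True if the loss changed by less than `tol` between
    consecutive epochs over the last `window` epochs.

    `window` and `tol` are illustrative defaults, not recommended values.
    """
    if len(losses) < window + 1:
        return False  # not enough epochs to judge
    recent = losses[-(window + 1):]
    # Largest absolute change between consecutive epochs in the window
    max_change = max(abs(a - b) for a, b in zip(recent, recent[1:]))
    return max_change < tol


# A loss curve that keeps improving vs. one stuck near the same value
improving = [0.69, 0.55, 0.41, 0.30, 0.22]
stuck = [0.693, 0.6931, 0.6932, 0.6931, 0.6931]
print(has_plateaued(improving))
print(has_plateaued(stuck))
```

A plateau alone does not prove underfitting, since a model that has converged to a low loss also stops changing. It points to underfitting when the loss levels off at a high value: a binary cross-entropy stuck near ln 2 ≈ 0.693, as in the `stuck` list, is what a classifier that always outputs 0.5 would score.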

What is bias regularization in neural networks?

Before looking at bias regularization, let us understand what bias is in a neural network and what role it plays.

What is bias in neural networks?

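In a neural network, the bias is a learnable parameter added to the weighted sum of a neuron's inputs before the activation function is applied, so a neuron computes f(w · x + b), where w are the weights, x the inputs, b the bias and f the activation function. The bias lets a neuron shift its activation independently of its inputs, so the model is not forced through the origin and can fit the data more flexibly. Like the weights, the biases are updated during training as the model converges. (The same word also describes a model's systematic error, and high bias in that sense is what underfitting looks like.) Now let us understand what bias regularization is in neural networks.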
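As a minimal NumPy sketch with made-up weights and inputs, a dense layer with ReLU computes relu(W x + b). With an all-zero input the weighted sum vanishes, so the output depends on the bias alone, which shows how the bias shifts a neuron's activation independently of its inputs.

```python
import numpy as np

def dense_forward(x, W, b):
    """Forward pass of one dense layer with ReLU: relu(W @ x + b)."""
    z = W @ x + b               # weighted sum of the inputs plus the bias
    return np.maximum(z, 0.0)   # ReLU activation

W = np.array([[0.5, -1.0],
              [2.0,  0.25]])    # 2 neurons with 2 inputs each (illustrative)
b = np.array([0.5, -0.25])      # one bias per neuron (illustrative)

x = np.array([1.0, 2.0])
print(dense_forward(x, W, b))            # relu([-1.0, 2.25]) = [0, 2.25]
print(dense_forward(np.zeros(2), W, b))  # relu(b) = [0.5, 0]
```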

Bias Regularization in neural networks

Bias regularization in neural networks is the process of adding a penalty on the bias parameters to the model's loss function. The penalty discourages large bias values and is meant to help the model generalize, so that it performs better on unseen data. If the regularization factor is not chosen well, in particular if it is too large, the penalty dominates the loss and pushes the biases toward zero, which can lower both training and testing performance and result in underfitting.
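Written out, the penalized objective for the L1 and L2 variants used in the case study looks as follows. Keras' `regularizers.l1(λ)` adds λ times the sum of the absolute values of the penalized tensor, and `regularizers.l2(λ)` adds λ times the sum of its squares. Here the data loss is the usual loss (binary cross-entropy in the case study), the b_j are the biases of the regularized layer, and λ is the regularization factor (0.01 in the case study):

$$
\mathcal{L}_{\text{L1}} = \mathcal{L}_{\text{data}} + \lambda \sum_j |b_j|,
\qquad
\mathcal{L}_{\text{L2}} = \mathcal{L}_{\text{data}} + \lambda \sum_j b_j^2
$$

A larger λ makes the penalty weigh more heavily against the data loss, so the optimizer gives up fit to the data in exchange for smaller biases.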

This is why bias regularization is typically applied to very complex networks, where it can help them generalize and yield better accuracy. However, bias regularization may not be suitable for all types of data and scenarios, and applying it can lead to underfitting.
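A toy calculation illustrates how an L1 bias penalty that is too strong can remove a bias entirely. Assume, purely for illustration, a single bias b whose data term is the quadratic (b - t)², where t is the value the data alone would prefer. Minimizing (b - t)² + λ|b| gives the soft-thresholding solution b* = sign(t) · max(|t| - λ/2, 0), so once λ ≥ 2|t| the optimal bias is exactly zero. The function `optimal_bias` is a hypothetical name for this sketch; real network losses are not quadratic in each bias.

```python
import numpy as np

def optimal_bias(t, lam):
    """Minimizer of (b - t)**2 + lam * |b| (soft-thresholding).

    t is the bias preferred by the (assumed quadratic) data term;
    lam is the L1 regularization factor.
    """
    return np.sign(t) * max(abs(t) - lam / 2.0, 0.0)

t = 0.4  # bias preferred by the data term (illustrative)
for lam in [0.0, 0.2, 0.8, 1.5]:
    # The optimum shrinks toward 0 as lam grows and is exactly 0 once lam >= 0.8
    print(lam, optimal_bias(t, lam))
```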

Let us understand through a case study how bias regularization in neural networks may cause underfitting.

Case study of bias regularization in neural networks

Neural networks support several types of regularization, such as kernel regularizers, bias regularizers and activity regularizers, each applied at the relevant layers. In this article, let us see how to apply the bias regularization technique at different layers of a neural network and analyze how the network performs.
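In Keras, layers such as `Dense` and `Conv2D` accept `kernel_regularizer`, `bias_regularizer` and `activity_regularizer` arguments, which differ in which tensor the penalty is computed on. The NumPy sketch below mimics this for one dense layer and one input sample, using illustrative values and a hand-written `l1_penalty` function as a stand-in for Keras' `regularizers.l1`; it does not call Keras itself.

```python
import numpy as np

def l1_penalty(t, factor):
    # factor * sum(|t|), the same form as Keras' regularizers.l1(factor)
    return factor * np.sum(np.abs(t))

W = np.array([[1.0, -2.0],
              [0.5,  4.0]])   # kernel (weights), illustrative values
b = np.array([3.0, -1.0])     # biases
x = np.array([1.0, 1.0])      # one input sample
a = W @ x + b                 # layer output (activity), linear activation

factor = 0.01
kernel_pen = l1_penalty(W, factor)    # kernel regularizer: penalizes W
bias_pen = l1_penalty(b, factor)      # bias regularizer: penalizes b only
activity_pen = l1_penalty(a, factor)  # activity regularizer: penalizes outputs
print(kernel_pen, bias_pen, activity_pen)
```

Only the bias penalty is used in the case study, so the kernel and the layer outputs are left unpenalized there.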

Here, a hand-sign digit classification dataset is used, restricted to two digits so that each image is classified as either 0 or 1. After suitable preprocessing, a few samples were visualized as shown below.

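```python
import matplotlib.pyplot as plt

# X_train (images) and Y_train (labels) come from the preprocessing step
plt.figure(figsize=(15, 5))
for i in range(1, 6):
    plt.subplot(1, 5, i)
    plt.imshow(X_train[i, :, :], cmap='gray')
    plt.title('Sign language of {}'.format(Y_train[i]))
    plt.axis('off')
plt.tight_layout()
plt.show()
```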

With the preprocessed data ready, model building was taken up. First, a model was built without any regularization, as shown below.

```python
import tensorflow as tf
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Conv2D, MaxPool2D, Flatten, Dense, Dropout
import warnings

warnings.filterwarnings("ignore")

# Baseline: three conv/pool blocks followed by dense layers, no regularization
model1 = Sequential()
model1.add(Conv2D(128, (3, 3), activation='relu', input_shape=(64, 64, 1)))
model1.add(MaxPool2D(pool_size=(2, 2)))
model1.add(Conv2D(64, (3, 3), activation='relu'))
model1.add(MaxPool2D(pool_size=(2, 2)))
model1.add(Conv2D(32, (3, 3), activation='relu'))
model1.add(MaxPool2D(pool_size=(2, 2)))
model1.add(Flatten())
model1.add(Dense(256, activation='relu'))
model1.add(Dense(125, activation='relu'))
model1.add(Dense(64, activation='relu'))
model1.add(Dense(1, activation='sigmoid'))  # single sigmoid unit for the binary output
model1.summary()

model1.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
```

After compilation, the model was fitted on the split data for 10 epochs and evaluated for training and testing loss and accuracy, as shown below.

```python
model1_res = model1.fit(Xtrain1, Y_train, epochs=10,
                        validation_data=(Xtest1, Y_test), batch_size=32)

model1.evaluate(Xtrain1, Y_train)  # training loss and training accuracy
model1.evaluate(Xtest1, Y_test)    # testing loss and testing accuracy
```

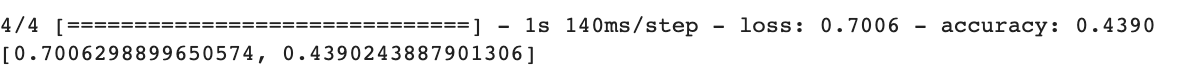

Here we can see that the testing accuracy is lower than the training accuracy. A gap like this between training and testing accuracy is usually a sign of overfitting rather than underfitting, while underfitting shows up as poor accuracy on both splits. So let us see whether the bias regularization technique narrows this gap or instead pushes the model into underfitting.
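Comparing training and testing accuracy this way can be written as a rough rule of thumb. The helper `diagnose_fit` below is hypothetical, and its thresholds (`min_acc`, `max_gap`) are arbitrary illustrative values, not standard cut-offs: low training accuracy suggests underfitting, while high training accuracy with a clearly lower testing accuracy suggests overfitting.

```python
def diagnose_fit(train_acc, test_acc, min_acc=0.9, max_gap=0.05):
    """Rough fit diagnosis from accuracies in [0, 1].

    min_acc and max_gap are illustrative thresholds, not standard values.
    """
    if train_acc < min_acc:
        return "underfitting"    # the model cannot even fit the training data
    if train_acc - test_acc > max_gap:
        return "overfitting"     # fits the training data but generalizes worse
    return "reasonable fit"

print(diagnose_fit(0.55, 0.52))  # underfitting
print(diagnose_fit(0.99, 0.85))  # overfitting
print(diagnose_fit(0.97, 0.95))  # reasonable fit
```

Suitable thresholds depend on the task; for a balanced binary problem, an accuracy near 0.5 on both splits is chance level.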

Introducing Bias Regularization just after the Flatten layer

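```python
from tensorflow.keras import regularizers

# Same architecture as model1, with an L1 bias penalty (factor 0.01)
# on the first Dense layer after Flatten
model2 = Sequential()
model2.add(Conv2D(128, (3, 3), activation='relu', input_shape=(64, 64, 1)))
model2.add(MaxPool2D(pool_size=(2, 2)))
model2.add(Conv2D(64, (3, 3), activation='relu'))
model2.add(MaxPool2D(pool_size=(2, 2)))
model2.add(Conv2D(32, (3, 3), activation='relu'))
model2.add(MaxPool2D(pool_size=(2, 2)))
model2.add(Flatten())
model2.add(Dense(256, activation='relu', bias_regularizer=regularizers.l1(0.01)))
model2.add(Dense(125, activation='relu'))
model2.add(Dense(64, activation='relu'))
model2.add(Dense(1, activation='sigmoid'))

# Compiled and fitted the same way as model1
model2.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
model2.fit(Xtrain1, Y_train, epochs=10, validation_data=(Xtest1, Y_test), batch_size=32)
```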

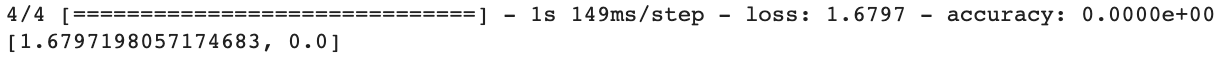

This is how bias regularization is added just after the Flatten layer. The model was compiled, fitted on the split data and evaluated in the same way as model1. With bias regularization after the Flatten layer, the model yielded essentially no useful accuracy, i.e., it seriously underfit, even though its testing loss was slightly lower than that of the model without any regularization.

```python
model2.evaluate(Xtrain1, Y_train)  # training loss and training accuracy
model2.evaluate(Xtest1, Y_test)    # testing loss and testing accuracy
```

Introducing Bias Regularization at 2 random layers

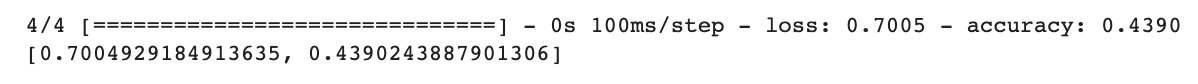

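```python
from tensorflow.keras import regularizers

# Bias penalties on two layers: L1 on the second Conv2D, L2 on the first Dense
model3 = Sequential()
model3.add(Conv2D(128, (3, 3), activation='relu', input_shape=(64, 64, 1)))
model3.add(MaxPool2D(pool_size=(2, 2)))
model3.add(Conv2D(64, (3, 3), activation='relu', bias_regularizer=regularizers.l1(0.01)))
model3.add(MaxPool2D(pool_size=(2, 2)))
model3.add(Conv2D(32, (3, 3), activation='relu'))
model3.add(MaxPool2D(pool_size=(2, 2)))
model3.add(Flatten())
model3.add(Dense(256, activation='relu', bias_regularizer=regularizers.l2(0.01)))
model3.add(Dense(125, activation='relu'))
model3.add(Dense(64, activation='relu'))
model3.add(Dense(1, activation='sigmoid'))

model3.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
model3.fit(Xtrain1, Y_train, epochs=10, validation_data=(Xtest1, Y_test), batch_size=32)
```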

The model was compiled and fitted on the split data for 10 epochs and, as before, evaluated for training and testing loss and accuracy.

```python
model3.evaluate(Xtrain1, Y_train)  # training loss and training accuracy
model3.evaluate(Xtest1, Y_test)    # testing loss and testing accuracy
```
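model3 mixes two penalty types: L1 on the biases of the second Conv2D layer and L2 on the biases of the first Dense layer. A small NumPy comparison, with illustrative bias values and the penalty formulas written by hand rather than taken from Keras, shows how differently the two weigh small and large biases.

```python
import numpy as np

factor = 0.01
b = np.array([0.1, 2.0])  # one small and one large bias (illustrative)

# Per-bias contributions, same forms as regularizers.l1 / regularizers.l2
l1_terms = factor * np.abs(b)     # linear in |b|
l2_terms = factor * np.square(b)  # quadratic in b

print("L1 per bias:", l1_terms, "total:", l1_terms.sum())
print("L2 per bias:", l2_terms, "total:", l2_terms.sum())
```

For the small bias the L2 term (0.0001) is a tenth of the L1 term (0.001), while for the large bias it is twice as big (0.04 vs 0.02). The L1 gradient, factor · sign(b), also keeps the same size however small the bias gets, which is why L1 tends to push biases all the way to zero, whereas the L2 gradient shrinks along with the bias.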

Introducing Bias Regularization just before the Output Layer

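```python
from tensorflow.keras import regularizers

# L1 bias penalty on the last hidden Dense layer, just before the output layer
model4 = Sequential()
model4.add(Conv2D(128, (3, 3), activation='relu', input_shape=(64, 64, 1)))
model4.add(MaxPool2D(pool_size=(2, 2)))
model4.add(Conv2D(64, (3, 3), activation='relu'))
model4.add(MaxPool2D(pool_size=(2, 2)))
model4.add(Conv2D(32, (3, 3), activation='relu'))
model4.add(MaxPool2D(pool_size=(2, 2)))
model4.add(Flatten())
model4.add(Dense(256, activation='relu'))
model4.add(Dense(125, activation='relu'))
model4.add(Dense(64, activation='relu', bias_regularizer=regularizers.l1(0.01)))
model4.add(Dense(1, activation='sigmoid'))

model4.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
model4.fit(Xtrain1, Y_train, epochs=10, validation_data=(Xtest1, Y_test), batch_size=32)
```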

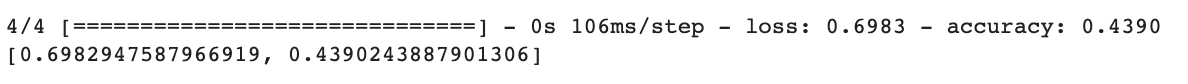

Here, bias regularization was added to the last hidden layer, just before the output layer. The model was compiled, fitted on the split data for 10 epochs, and evaluated for training and testing loss and accuracy, as shown below.


```python
model4.evaluate(Xtrain1, Y_train)  # training loss and training accuracy
model4.evaluate(Xtest1, Y_test)    # testing loss and testing accuracy
```

Here we can see that adding bias regularization just before the output layer yields results approximately equal to those of the model without regularization: the training and testing accuracy do not change.

Key Outcomes of Bias Regularization

Bias regularization is used to reduce overfitting, if any, and thereby obtain better accuracy on unseen data. However, it should be used only when required, as it can cause the model to seriously underfit, as seen in the case study. Bias regularization is therefore mainly useful for complex neural networks or very deep models, and is usually not required for simpler architectures. In many cases, bias regularization is applied just before the output layer; with a simple network like the one in this case study, that placement may improve the loss but not the accuracy, while with a more complex network it would probably help reduce overfitting.

Summary

Bias regularization is generally used to prevent overfitting in complex neural networks. In this article's case study, applying it to simple data with a simple model architecture either left the model's metrics about the same as without regularization or caused the model to underfit, yielding an unreliable model that does not perform well on unseen data. Based on this overview, bias regularization appears best suited to large datasets or complex models rather than to simple data and simpler model architectures.