Generative modelling is an unsupervised learning approach that discovers patterns in input data and uses them to output new data instances resembling the original data. Generative adversarial networks (GANs) pit a generator model against a discriminator model: the generator churns out new data instances, while the discriminator tries to distinguish real instances from generated ones. Each model tries to outdo the other, and this competition improves the generator's output. Advances in parameterizing these models with deep neural networks, combined with progress in stochastic optimization methods, have enabled scalable modelling of complex, high-dimensional data, including images, text and speech.
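As a general reference point (not specific to the MIT work discussed below), the adversarial game described above is usually formalised as the minimax objective from the original GAN formulation by Goodfellow et al. (2014). Here G maps noise z, drawn from a prior p_z, to synthetic samples; D(x) is the discriminator's estimated probability that x is real; and p_data is the real data distribution. The discriminator tries to maximise V, while the generator tries to minimise it.

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
```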

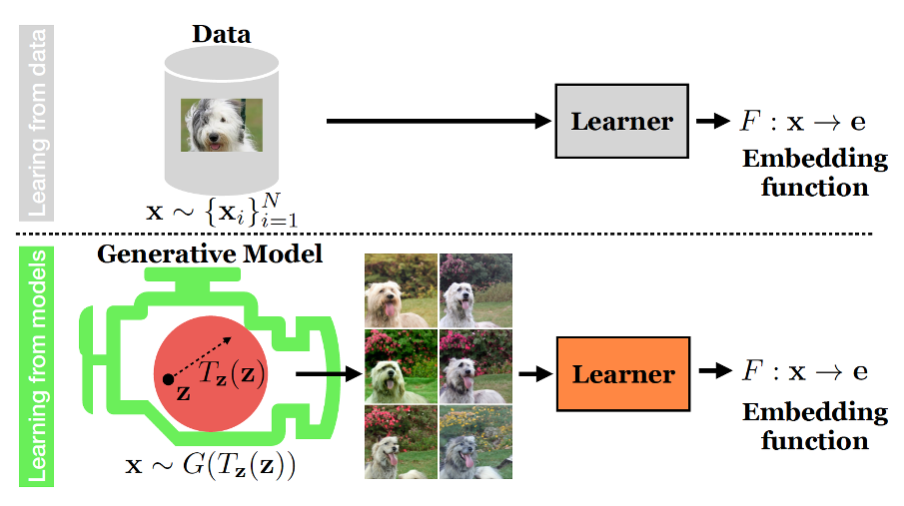

Generative models can now produce photorealistic images that are nearly indistinguishable from their training data. So, if we have good enough generative models, do we still need datasets? MIT researchers Ali Jahanian, Xavier Puig, Yonglong Tian, and Phillip Isola investigated this question in the setting of learning general-purpose visual representations from a black-box generative model rather than directly from data. They found that a contrastive representation learning model trained on synthetic data could learn visual representations that rival, and in some cases outperform, those learned from real data.

Challenges

Training machine-learning models to perform image classification requires vast amounts of data, and such datasets can cost millions of dollars to create. Datasets can also carry biases that hurt model performance.

In their ICLR 2022 conference paper, “Generative models as a data source for multiview representation learning”, the researchers proposed a training method in which a generative model produces realistic synthetic data that is then used to train another model for downstream vision tasks.

A generative model requires far less memory to store or share than a dataset. Using synthetic data can also help work around privacy and usage-rights concerns. Moreover, generative models can be edited to remove specific attributes, such as race or gender.

Generating synthetic data

The researchers linked a pretrained generative model to a contrastive learning model. According to Jahanian, the contrastive learner can ask the generative model to produce different views of an object and then learn to identify that object from multiple angles. Because the generative model supplies different views of the same content, the contrastive method can learn better representations.

“Given an off-the-shelf image generator without any access to its training data, we train representations from the samples output by this generator. We compare several representation learning methods that can be applied to this setting, using the latent space of the generator to generate multiple ‘views’ of the same semantic content. We show that for contrastive methods, this multiview data can naturally be used to identify positive pairs (nearby in latent space) and negative pairs (far apart in latent space),” the researchers wrote in the paper.
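To make the pairing idea concrete, here is a minimal Python sketch, not the authors' implementation. It assumes Gaussian noise added to a latent vector as the view-generating transformation (one simple choice, treated here as an assumption) and a simplified, one-directional InfoNCE-style contrastive loss. The names `toy_generator`, `encode`, `make_view` and `multiview_batch`, and the parameters `sigma` and `temperature`, are hypothetical: the generator is a fixed toy function standing in for a pretrained GAN, and the encoder merely normalises its input rather than being a trained network. Each anchor's positive comes from a nearby latent, while the other samples in the batch, drawn from independent latents, act as negatives.

```python
import math
import random

LATENT_DIM = 8  # hypothetical latent size; real GANs use e.g. hundreds of dims


def toy_generator(z):
    """Hypothetical stand-in for a pretrained generator: a fixed nonlinear map
    from a latent vector to a 'sample'."""
    return [math.tanh(1.5 * v + 0.1 * i) for i, v in enumerate(z)]


def encode(x):
    """Hypothetical encoder: only L2-normalises the sample. A real setup would
    use a trainable network whose weights are updated to minimise the loss."""
    norm = math.sqrt(sum(v * v for v in x)) or 1.0
    return [v / norm for v in x]


def make_view(z, sigma, rng):
    """Second 'view' of the same content: perturb the latent with Gaussian noise."""
    return [v + rng.gauss(0.0, sigma) for v in z]


def dot(a, b):
    """Cosine similarity for unit-norm inputs."""
    return sum(x * y for x, y in zip(a, b))


def info_nce(anchors, positives, temperature=0.1):
    """Simplified InfoNCE: anchor i should match positive i; positives j != i
    (generated from independent latents) serve as negatives."""
    n = len(anchors)
    total = 0.0
    for i in range(n):
        logits = [dot(anchors[i], positives[j]) / temperature for j in range(n)]
        m = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_sum_exp = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_sum_exp - logits[i]  # cross-entropy with target index i
    return total / n


def multiview_batch(batch_size, sigma, seed=0):
    """Sample latents, then encode each generated sample and its perturbed view."""
    rng = random.Random(seed)
    anchors, positives = [], []
    for _ in range(batch_size):
        z = [rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]
        anchors.append(encode(toy_generator(z)))
        positives.append(encode(toy_generator(make_view(z, sigma, rng))))
    return anchors, positives


if __name__ == "__main__":
    for sigma in (0.1, 1.0, 3.0):
        a, p = multiview_batch(batch_size=32, sigma=sigma)
        print(f"sigma={sigma}: InfoNCE loss = {info_nce(a, p):.3f}")
```

Because the encoder here is not trained, the printout only illustrates how the loss behaves: as `sigma` grows, the two views drift apart in latent space and the loss rises. In an actual training loop, the encoder's parameters would be optimised to minimise this loss, and `sigma` would control how different the positive views are allowed to be.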

The resulting representations matched, and in some cases outperformed, those learned directly from real data. “We knew that this method should eventually work; we just needed to wait for these generative models to get better and better. We were especially pleased when we showed that this method sometimes does even better than the real thing,” said Ali Jahanian, a research scientist in the Computer Science and Artificial Intelligence Laboratory (CSAIL) and lead author of the paper.

Roadmap

The paper points to several ways to take this approach to visual representation learning further. Real-world data often lacks corner cases. For example, if researchers are developing a computer vision model for a self-driving car, real-world data would rarely include examples of a person or an animal running down a highway, so the model might never learn what to do in that situation. Generating such corner-case data synthetically could improve the performance of machine learning models in some high-stakes situations. The researchers also want to improve generative models so that they can create more sophisticated images.