In natural language processing, a word embedding is a learned representation of text in which words with similar meanings have similar representations. Such word representations are used in text analysis to improve performance on downstream tasks. Several models are used for learning word embeddings. In this article, we will discuss the two most popular ones, Word2Vec and GloVe. First, we will understand the fundamentals of these models, and then we will see how quickly they can be used in Python through the API provided by Gensim. Finally, we will compare the two models on several important parameters. The major points covered in the article are listed below.

Table of Contents

- What is Word2Vec?

- Training Procedure for Word2Vec Model

- Implementing Word2Vec in Python

- What is GloVe?

- Training Procedure for GloVe Model

- Implementing GloVe in Python

- Comparing Word2Vec vs GloVe

Let us begin with understanding the Word2Vec technique.

What is Word2Vec?

Word2Vec is a technique for learning word associations from a text corpus in natural language processing. Its algorithms use a shallow neural network model; once trained, such a model can detect synonymous words or suggest additional words to complete a partial sentence. Word2Vec represents each distinct word as a list of numbers called a vector. The cosine similarity between two vectors serves as the mathematical function that indicates the level of semantic similarity between the corresponding words.
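To make the cosine similarity measure concrete, here is a minimal sketch in plain Python. The three 3-dimensional vectors are hypothetical values made up for illustration; real Word2Vec vectors typically have hundreds of dimensions.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: dot(u, v) / (|u| * |v|)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Hypothetical 3-dimensional embeddings, invented purely for illustration
embeddings = {
    "computer": [0.9, 0.1, 0.3],
    "laptop":   [0.8, 0.2, 0.35],
    "banana":   [0.1, 0.9, 0.0],
}

print(cosine_similarity(embeddings["computer"], embeddings["laptop"]))  # about 0.99
print(cosine_similarity(embeddings["computer"], embeddings["banana"]))  # about 0.21
```

Cosine similarity ignores vector length and compares only direction, which is why it is the usual choice for comparing word vectors.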

Training Procedure for Word2Vec Model

Word2Vec is a family of model architectures and optimizations that help learn word embeddings from a large corpus. Words can be represented with Word2Vec using two major methods.

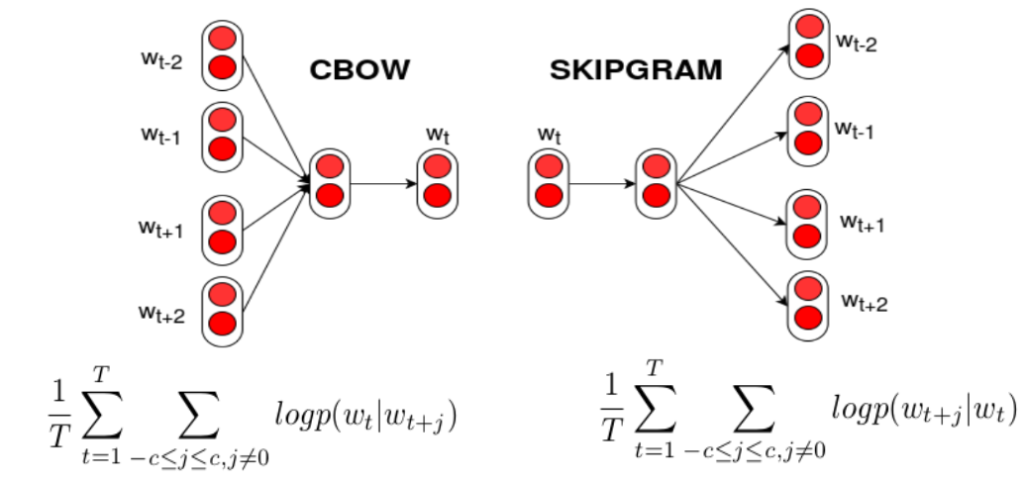

- Continuous Bag of Words (CBOW) Method – This method predicts the missing word in the middle of a sentence fragment from its surrounding context words, which is how it can help complete a partial sentence. The context consists of a few words before and after the predicted word. It is called a bag-of-words method because the order of the words in the context does not matter.

- Skip-Gram Method – This method predicts the context (surrounding) words given the current word in the same sentence. The skip-gram model takes each word of the corpus as input, looks up its vector in the hidden (embedding) layer, and uses these embedding weights to predict the context words.

The basic architectures of the CBOW and Skip-Gram models are shown in the figure above.
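The difference between the two methods is easiest to see in the training examples each one produces from a sentence. The sketch below is a simplified illustration, not Gensim's internal code; the function names and the sample sentence are hypothetical.

```python
def skipgram_pairs(tokens, window=2):
    """Skip-gram: for each target word, emit (target, context_word) pairs."""
    pairs = []
    for i, target in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((target, tokens[j]))
    return pairs

def cbow_examples(tokens, window=2):
    """CBOW: for each target word, emit (context_words, target).
    The model treats the context as an unordered bag (its vectors are averaged)."""
    examples = []
    for i, target in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        examples.append((context, target))
    return examples

sentence = "the cat sat on the mat".split()  # hypothetical example sentence
print(skipgram_pairs(sentence, window=1)[:4])
# [('the', 'cat'), ('cat', 'the'), ('cat', 'sat'), ('sat', 'cat')]
print(cbow_examples(sentence, window=1)[1])
# (['the', 'sat'], 'cat')
```

Skip-gram turns one position into several (target, context) examples, while CBOW turns it into a single (context, target) example.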

Implementing Word2Vec in Python

Using the following lines of code, we can load a pre-trained Word2Vec model:

```python
import gensim.downloader as api

# Download (on first use) and load Word2Vec vectors trained on Google News (300 dimensions)
v2w_model = api.load('word2vec-google-news-300')

# Look up the 300-dimensional vector for a word
sample_word2vec_embedding = v2w_model['computer']
```

Here, the Word2Vec model was trained on the Google News dataset. Instead of the pre-trained model from the Gensim framework, we can also use spaCy or TensorFlow. For more detail, see the article Guide To Word2vec Using Skip Gram Model.

What is GloVe?

The name GloVe is a combination of two words: Global and Vectors. GloVe is a model for distributed word representation. It represents words as vectors using an unsupervised learning algorithm that maps words into a space where the distance between words reflects their semantic similarity. Training is performed on aggregated global word-word co-occurrence statistics from a corpus, and the resulting representations show interesting linear substructures of the word vector space. GloVe was developed as an open-source project at Stanford and released in 2014.

Training Procedure for GloVe Model

The GloVe model applies matrix factorization to the word-context matrix to learn word embeddings. It starts by building a large matrix of word co-occurrence information. The idea behind this matrix is to derive the relationships between words from corpus statistics: the co-occurrence matrix tells us how often words occur together in pairs.

Let’s look at the image below:

Here, the matrix is formed from the unique words of the two sentences on the left side of the image. We can see that the word “the” has co-occurred 3 times with “cat”, “fast”, and “hat”, so the value in the matrix for each of these combinations is 3.

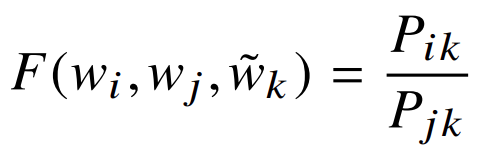
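A co-occurrence matrix like the one in the image can be built with a few lines of Python. This is a minimal sketch under simplifying assumptions: the two sentences are hypothetical (not the ones in the figure), the context window is one word on each side, and every co-occurrence counts as 1 (the reference GloVe implementation additionally down-weights distant pairs).

```python
from collections import defaultdict

def cooccurrence_counts(sentences, window=1):
    """Count how often each (word, context_word) pair appears within `window` positions."""
    counts = defaultdict(int)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, word in enumerate(tokens):
            for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
                if j != i:
                    counts[(word, tokens[j])] += 1
    return counts

# Hypothetical sentences, not the ones shown in the figure
sentences = ["the cat is fast", "the cat wears the hat"]
counts = cooccurrence_counts(sentences, window=1)

# Print the matrix: one row per word, one column per context word
vocab = sorted({w for s in sentences for w in s.split()})
for row in vocab:
    print(f"{row:>6}", [counts[(row, col)] for col in vocab])
```

Because the window is symmetric, the resulting matrix is symmetric: the count for (“the”, “cat”) equals the count for (“cat”, “the”).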

Using the matrix, we can compute ratios of co-occurrence probabilities between pairs of words. For example, suppose P(cat | fast) = 1 and P(cat | the) = 0.5. The ratio of these probabilities is 1 / 0.5 = 2, from which we can infer that “fast” is more relevant to “cat” than “the” is. GloVe is built on such probability ratios: it learns word vectors whose dot products approximate the logarithm of the co-occurrence counts.
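In the notation of the GloVe paper, the conditional probabilities in the ratio example and the training objective can be written as follows. Here X_ij is the co-occurrence count of word i with context word j, V is the vocabulary size, w_i and \tilde{w}_j are the word and context vectors, b_i and \tilde{b}_j are bias terms, and f is a weighting function that limits the influence of very rare and very frequent pairs (the paper uses x_max = 100 and α = 3/4).

```latex
P_{ik} = P(k \mid i) = \frac{X_{ik}}{X_i}, \qquad X_i = \sum_{k} X_{ik}

J = \sum_{i,j=1}^{V} f(X_{ij}) \left( w_i^{\top} \tilde{w}_j + b_i + \tilde{b}_j - \log X_{ij} \right)^2

f(x) = \begin{cases} (x / x_{\max})^{\alpha} & \text{if } x < x_{\max} \\ 1 & \text{otherwise} \end{cases}
```

Since f(0) = 0, only the non-zero entries of the co-occurrence matrix contribute to J, which also avoids taking the logarithm of zero.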

Implementing GloVe in Python

Using the following lines of code, we can load a pre-trained GloVe model for word embedding:

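```python
import gensim.downloader as api

# Download (on first use) and load 25-dimensional GloVe vectors trained on Twitter data
glove_model = api.load('glove-twitter-25')

# Look up the 25-dimensional vector for a word
sample_glove_embedding = glove_model['computer']
```

We can also use the pre-trained vectors hosted on the Stanford GloVe project page. For more detail, see the article Hands-On Guide To Word Embeddings Using GloVe.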
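The vectors downloaded from the Stanford project page come as plain-text files in which each line holds a word followed by its vector components, separated by spaces. A minimal loader, assuming that format, might look like this; the function name and the in-memory sample data are hypothetical.

```python
import io

def load_glove_text(lines):
    """Parse GloVe's plain-text format: a word followed by its vector components on each line."""
    vectors = {}
    for line in lines:
        parts = line.rstrip().split(" ")
        if len(parts) < 2:
            continue  # skip blank or malformed lines
        word, values = parts[0], parts[1:]
        vectors[word] = [float(x) for x in values]
    return vectors

# Tiny in-memory stand-in for a downloaded GloVe file (values are made up)
sample = io.StringIO("computer 0.1 0.2 0.3\nlaptop 0.1 0.25 0.28\n")
glove_vectors = load_glove_text(sample)
print(glove_vectors["computer"])  # [0.1, 0.2, 0.3]
```

With a real download, pass an open file instead, for example `with open("glove.6B.50d.txt", encoding="utf-8") as f: glove_vectors = load_glove_text(f)`. Note that the larger files hold millions of lines, so a plain dictionary of lists can use a lot of memory.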

Comparing Word2Vec vs GloVe

We can compare Word2Vec and GloVe on the following parameters:

- Training Procedures

Word2Vec is a predictive model that is trained to predict the context words from a target word (skip-gram method) or a target word from its context (CBOW method). To make these predictions, the models use trainable embedding weights, which map words to their corresponding embeddings.
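To illustrate how the embedding weights are used for prediction, here is a sketch of a skip-gram forward pass with a full softmax over a tiny vocabulary. The vocabulary and both weight tables are hypothetical toy values; in a real model they are learned during training.

```python
import math

# Hypothetical toy vocabulary and weights, chosen purely for illustration
vocab = ["the", "cat", "sat", "mat"]
W_in = {"the": [0.1, 0.3], "cat": [0.5, -0.2], "sat": [0.4, 0.1], "mat": [-0.3, 0.2]}   # embedding weights
W_out = {"the": [0.2, 0.1], "cat": [0.3, 0.4], "sat": [0.6, -0.1], "mat": [-0.2, 0.5]}  # output weights

def predict_context(target):
    """Skip-gram forward pass: P(context | target) = softmax over vocab of dot(W_out[c], W_in[target])."""
    h = W_in[target]  # the hidden layer is simply the target word's embedding row
    scores = {c: sum(a * b for a, b in zip(W_out[c], h)) for c in vocab}
    max_s = max(scores.values())  # subtract the max for numerical stability
    exps = {c: math.exp(s - max_s) for c, s in scores.items()}
    total = sum(exps.values())
    return {c: e / total for c, e in exps.items()}

probs = predict_context("cat")
print(max(probs, key=probs.get))  # sat
```

Training adjusts `W_in` and `W_out` so that words actually observed around "cat" receive higher probabilities; the rows of `W_in` are then used as the word embeddings.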

GloVe is a technique that performs matrix factorization on the word-context matrix. As discussed in the GloVe training procedure, each row of this matrix corresponds to a “word”, and each column to a “context” in which that word appears in a large corpus. Because the number of contexts can be very large, we factorize this matrix into a lower-dimensional one in which each row is the vector representation of a word.
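The idea of turning a large word-context matrix into short word vectors can be illustrated with truncated SVD, a classic count-based factorization. This is a swapped-in technique for illustration only: GloVe itself fits its factors with the weighted least-squares objective rather than with SVD. The count matrix below is hypothetical, and the sketch assumes NumPy is available.

```python
import numpy as np

# Hypothetical word-context count matrix: rows are words, columns are contexts
words = ["cat", "dog", "car", "truck"]
X = np.array([
    [4, 3, 0, 1],
    [3, 4, 0, 1],
    [0, 1, 5, 4],
    [1, 0, 4, 5],
], dtype=float)

def low_rank_word_vectors(matrix, k):
    """Truncated SVD: keep the top-k singular directions; each row becomes a k-dimensional word vector."""
    U, S, Vt = np.linalg.svd(matrix, full_matrices=False)
    return U[:, :k] * S[:k]  # scale the left singular vectors by their singular values

vectors = low_rank_word_vectors(np.log1p(X), k=2)  # log-compress the raw counts first
print(vectors.shape)  # (4, 2)
```

Each of the four words is now described by 2 numbers instead of 4 context counts; in a real corpus the reduction is from hundreds of thousands of contexts to a few hundred dimensions.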

- Loss Functions

The loss function for training Word2Vec is tied to the model's predictions: as training makes the predictions more accurate, the resulting word embeddings improve.
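The link between prediction accuracy and the loss can be sketched with the full-softmax cross-entropy loss and a single gradient-descent step on the target embedding. All vectors are hypothetical toy values, and the output vectors are kept fixed to keep the example short (real training updates both weight tables).

```python
import math

def scores_for(h, output_vectors):
    """Dot product of the target embedding h with every output (context) vector."""
    return [sum(a * b for a, b in zip(u, h)) for u in output_vectors]

def softmax_probs(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def prediction_loss(h, output_vectors, true_index):
    """Cross-entropy loss: -log P(true context word | target word)."""
    return -math.log(softmax_probs(scores_for(h, output_vectors))[true_index])

def sgd_step(h, output_vectors, true_index, lr=0.5):
    """One gradient-descent step on h. The gradient of the loss w.r.t. h is
    sum_c P(c) * u_c - u_true; output vectors are held fixed for simplicity."""
    probs = softmax_probs(scores_for(h, output_vectors))
    grad = [sum(p * u[d] for p, u in zip(probs, output_vectors)) - output_vectors[true_index][d]
            for d in range(len(h))]
    return [hd - lr * g for hd, g in zip(h, grad)]

# Hypothetical toy values: one target embedding and four output vectors
h = [0.5, -0.2]
U = [[0.2, 0.1], [0.3, 0.4], [0.6, -0.1], [-0.2, 0.5]]
true_context = 1

before = prediction_loss(h, U, true_context)
after = prediction_loss(sgd_step(h, U, true_context), U, true_context)
print(before, after)  # the loss goes down after the update
```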

In GloVe, we try to learn a lower-dimensional representation, so a better word embedding is obtained by making the reconstruction loss as low as possible. A low reconstruction loss means that the lower-dimensional vectors explain most of the variance in the high-dimensional co-occurrence data.
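GloVe's reconstruction loss is a weighted least-squares error between the dot products of word and context vectors (plus biases) and the logarithm of the co-occurrence counts. The sketch below evaluates that loss on a hypothetical two-word example whose vectors fit the counts exactly; `x_max = 100` and `alpha = 0.75` are the values used in the GloVe paper, and the function names are hypothetical.

```python
import math

def glove_weight(x, x_max=100.0, alpha=0.75):
    """GloVe weighting f(x): small for rare pairs, capped at 1 for frequent ones."""
    return (x / x_max) ** alpha if x < x_max else 1.0

def glove_loss(cooc, W, W_ctx, b, b_ctx):
    """Weighted least-squares reconstruction loss over the non-zero co-occurrence counts.
    cooc maps (word_index, context_index) -> count X_ij."""
    loss = 0.0
    for (i, j), x in cooc.items():
        if x <= 0:
            continue  # f(0) = 0, so zero counts contribute nothing (and log 0 is undefined)
        pred = sum(a * c for a, c in zip(W[i], W_ctx[j])) + b[i] + b_ctx[j]
        loss += glove_weight(x) * (pred - math.log(x)) ** 2
    return loss

# Hypothetical toy example: two words, 2-dimensional vectors, zero biases
cooc = {(0, 1): 2.0, (1, 0): 2.0}
W = [[1.0, 0.0], [0.0, 1.0]]
W_ctx = [[0.0, math.log(2.0)], [math.log(2.0), 0.0]]
b, b_ctx = [0.0, 0.0], [0.0, 0.0]
print(glove_loss(cooc, W, W_ctx, b, b_ctx))  # 0.0: the dot products equal log(2) exactly
```

During training, gradient descent moves the vectors and biases toward settings like this one, where the reconstruction error is as small as possible.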

- Learning Methods

Word2Vec employs a three-layer neural network (input, hidden, and output layers) in which the word vectors are a by-product of training: using these vectors, the network performs a word-pair classification task.

In GloVe, on the other hand, the model is forced to learn linear relationships between words from the co-occurrence matrix.
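The word-pair classification task corresponds to Word2Vec's negative sampling objective: an observed (target, context) pair should be classified as real, and a few randomly sampled pairs as fake. This sketch draws negatives from the unigram distribution raised to the 3/4 power, as in the original Word2Vec work; the counts and vectors are hypothetical, and a single vector table is used for simplicity.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def sample_negatives(word_counts, k, rng):
    """Draw k 'negative' words from the unigram distribution raised to the 3/4 power."""
    words = list(word_counts)
    weights = [word_counts[w] ** 0.75 for w in words]
    return rng.choices(words, weights=weights, k=k)

def pair_classification_loss(target_vec, context_vec, negative_vecs):
    """Logistic loss: the observed (target, context) pair is labelled 1,
    every sampled (target, negative) pair is labelled 0."""
    loss = -math.log(sigmoid(dot(target_vec, context_vec)))
    for neg in negative_vecs:
        loss -= math.log(sigmoid(-dot(target_vec, neg)))
    return loss

# Hypothetical counts and 2-dimensional vectors (one shared table for simplicity;
# real Word2Vec keeps separate input and output vectors)
counts = {"the": 50, "cat": 5, "sat": 4, "mat": 3}
vectors = {"cat": [0.9, 0.1], "sat": [0.8, 0.2], "the": [-0.5, 0.4], "mat": [-0.2, -0.7]}

rng = random.Random(0)
negatives = sample_negatives(counts, k=2, rng=rng)
loss = pair_classification_loss(vectors["cat"], vectors["sat"], [vectors[w] for w in negatives])
print(negatives, loss)
```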

- Generated Output

One noticeable property of the word embeddings generated by Word2Vec is that they capture linear relationships such as “king” – “man” + “woman” -> “queen” or “better” – “good” + “bad” -> “worse”: the vector produced by the arithmetic lies close to the expected word in the vector space. GloVe was designed to capture the same kind of linear relationships, so its vectors support such analogies as well.
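The analogy arithmetic can be sketched as follows: compute vec(b) − vec(a) + vec(c) and return the nearest remaining word by cosine similarity. The 3-dimensional vectors are hypothetical and hand-built so that the relationship holds exactly; real embeddings only satisfy it approximately.

```python
import math

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def analogy(a, b, c, vectors):
    """Solve 'a is to b as c is to ?' by finding the word closest to vec(b) - vec(a) + vec(c)."""
    target = [vb - va + vc for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    candidates = (w for w in vectors if w not in {a, b, c})  # exclude the query words themselves
    return max(candidates, key=lambda w: cosine(vectors[w], target))

# Hypothetical hand-built vectors: dimensions roughly mean (royalty, maleness, fruitiness)
vectors = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, -0.8, 0.1],
    "man":   [0.1, 0.8, 0.0],
    "woman": [0.1, -0.8, 0.0],
    "apple": [0.0, 0.0, 0.9],
}

print(analogy("man", "king", "woman", vectors))  # queen
```

With the pre-trained models loaded earlier, Gensim's `most_similar(positive=["king", "woman"], negative=["man"])` runs the same kind of query over the full vocabulary.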

GloVe makes use of the ratios of word-word co-occurrence probabilities, which have the potential to encode some form of meaning.

Word2Vec, in contrast, uses co-occurrence only implicitly: it is trained to maximize the probability of the words surrounding each target word, and improving this prediction accuracy improves the embeddings.

- Computation Time

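In practice, Word2Vec employs negative sampling, which replaces the softmax over the entire vocabulary with a few sigmoid (binary) classifications per training pair. Each update therefore touches only a handful of output vectors, which is what makes Word2Vec fast to train. A side effect of this conversion is that the word vectors tend to form cone-shaped clusters in the vector space, whereas GloVe's word vectors are spread out more evenly. GloVe, for its part, pays a one-time cost to build the co-occurrence matrix, after which the cost of each training epoch depends on the number of non-zero entries in that matrix rather than on the size of the corpus.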
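The saving from negative sampling is simple arithmetic: a full softmax must score every vocabulary word to compute its normalizing sum, while negative sampling scores only the true context word and the sampled negatives. The function below is a hypothetical helper that counts output-layer dot products per training pair; the vocabulary size is roughly that of the Google News model used earlier.

```python
def output_dot_products(vocab_size, negatives=None):
    """Dot products needed at the output layer for one (target, context) training pair.
    Full softmax: one per vocabulary word (needed for the normalizing sum).
    Negative sampling: one for the true context word plus one per sampled negative."""
    if negatives is None:
        return vocab_size
    return 1 + negatives

vocab_size = 3_000_000  # roughly the vocabulary size of the Google News Word2Vec model
print(output_dot_products(vocab_size))               # 3000000
print(output_dot_products(vocab_size, negatives=5))  # 6
```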

Final Words

In this article, we learned what the Word2Vec and GloVe models are. We discussed their use as word embedding techniques along with the procedures for training them. We also saw how Gensim provides an API for using these models easily with quick implementations. Finally, we compared the two models on several important parameters. We hope this article helped in understanding the basic differences between these two popular models.
