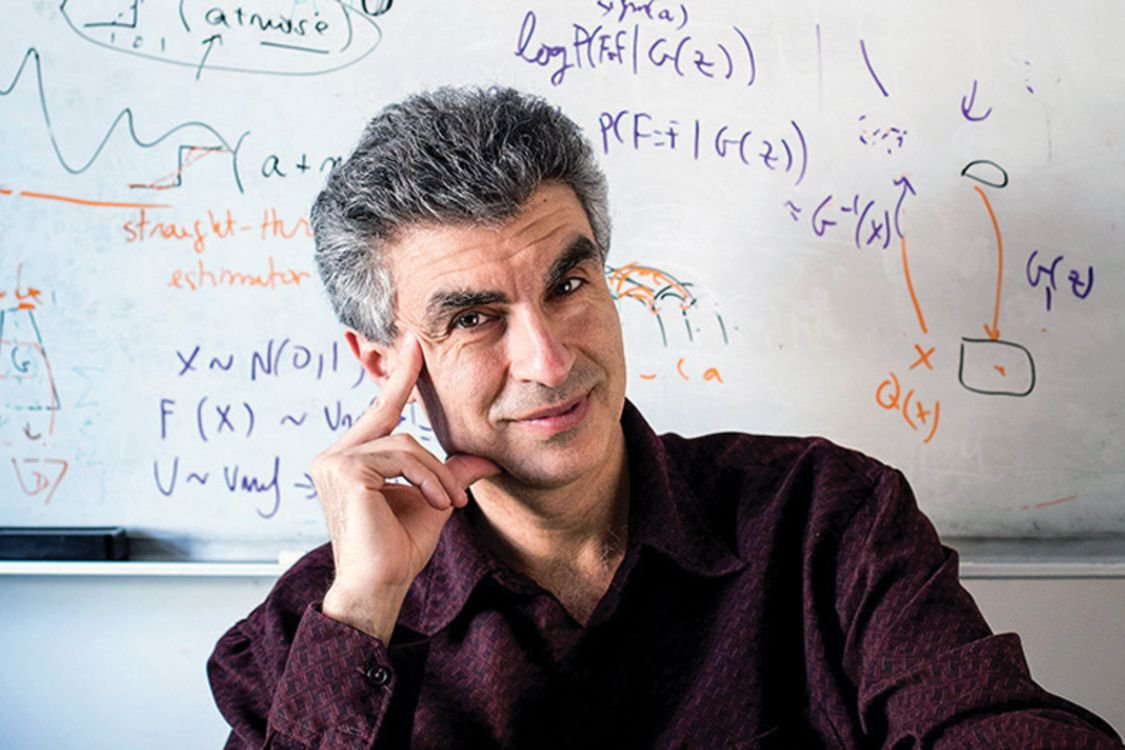

Recently, two researchers from the University of Montreal, Yoshua Bengio and Anirudh Goyal, proposed new inductive biases meant to improve deep learning performance. Their paper focuses on inductive biases that mostly concern higher-level and sequential conscious processing. Specifically, the main idea of the research is to bridge the gap between human cognitive abilities and current deep learning techniques.

Although deep learning has achieved various groundbreaking results, it has always been surrounded by controversy. For instance, have the main principles that deep learning needs in order to achieve human-level performance already been discovered? Or does one need to pursue a completely different research direction for deep learning to achieve the kind of cognitive competence displayed by humans?

Through this research, the Canadian computer scientist and his team tried to understand the main gaps between current deep learning and human cognitive abilities, in order to answer these questions and suggest research directions for deep learning. The research was aimed at bridging the gap towards human-level AI.

At present, deep learning leverages several key inductive biases, and the technique has achieved high accuracy across a variety of tasks and applications. The researchers' key hypothesis is that deep learning succeeded in part because of a set of inductive biases, but that additional ones are required to go from good in-distribution generalisation in highly supervised learning tasks to strong out-of-distribution generalisation and transfer learning to new tasks with low sample complexity.

The researchers stated, “Deep learning already exploits several key inductive biases, and this work considers a larger list, focusing on those which concern mostly higher-level and sequential conscious processing.”

The New Inductive Biases

The researchers first proposed an inductive bias that maintains several learning speeds: more stable aspects are learned more slowly, more non-stationary or novel ones are learned faster, and there is pressure to discover stable aspects among the quickly changing ones.
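The idea of several learning speeds can be illustrated with a toy example. The following is a minimal sketch, not the authors' method: the function name `train_two_speeds`, the scalar parameters `slow` and `fast`, and the learning rates are all assumptions made for illustration. A target value is modelled as the sum of a stable part and a part that changes from episode to episode; the slow parameter is updated with a small learning rate and kept across episodes, while the fast parameter is reset and re-adapted with a large learning rate in every episode.

```python
def train_two_speeds(episode_targets, slow_lr=0.01, fast_lr=0.5, steps=20):
    """Fit each target as slow + fast, updating the two parameters at different speeds.

    Hypothetical toy setup: `slow` persists across episodes (stable knowledge),
    `fast` is reset at the start of each episode (rapid adaptation).
    """
    slow, fast = 0.0, 0.0
    for target in episode_targets:
        fast = 0.0  # forget episode-specific adaptation
        for _ in range(steps):
            error = (slow + fast) - target  # gradient of 0.5 * error**2
            slow -= slow_lr * error         # small step: learns slowly
            fast -= fast_lr * error         # large step: adapts quickly
    return slow, fast


if __name__ == "__main__":
    # Targets alternate around a stable value of 5.0 (assumed toy data).
    targets = [6.0, 4.0] * 200
    slow, fast = train_two_speeds(targets)
    print(f"slow (stable part): {slow:.3f}")
    print(f"fast (last episode's offset): {fast:.3f}")
```

In this sketch the slow parameter ends up tracking the value that is shared across episodes, while the fast parameter absorbs whatever is specific to the current episode, which is one simple way to read "pressure to discover stable aspects among the quickly changing ones".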

The next inductive bias concerns high-level variables: the joint distribution between high-level concepts can be represented by a sparse factor graph. According to the researchers, this inductive bias is closely related to the bottleneck of conscious processing in Global Workspace Theory (GWT). One can also make links with the original von Neumann architecture of computers.
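As a rough illustration of what a sparse factor graph implies, the joint distribution can be written in the standard factor-graph form below. The notation is assumed here for illustration and is not taken from the paper: $V_1, \dots, V_n$ are the high-level variables, each $f_k$ is a non-negative factor, $S_k$ is the subset of variables that factor $k$ depends on, and $Z$ is the normalising constant.

```latex
P(V_1, \dots, V_n) \;=\; \frac{1}{Z} \prod_{k} f_k\!\left(V_{S_k}\right),
\qquad S_k \subseteq \{1, \dots, n\}, \quad |S_k| \ll n
```

Sparsity here means that each factor touches only a few variables at a time, so any single dependency involves a small number of high-level concepts.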

Further, the next inductive bias is almost a consequence of the biases on causal variables and the bias on the sparsity of the factor graph for the joint distribution between high-level variables. They said, “It states that causal chains used to perform learning (to propagate and assign credit) or inference (to obtain explanations or plans for achieving some goal) are broken down into short causal chains of events which may be far in time but linked by the top-level factor graph over semantic variables.”

Moving Forward

According to Bengio and Goyal, the ideas presented in this research are still at an early stage of maturation. They mentioned that one of the big remaining challenges, in line with the ideas discussed here, is to learn a large-scale encoder that maps low-level pixels to high-level causal variables, along with a large-scale causal model of these high-level variables. An ideal setting for this would be model-based reinforcement learning, where the causal model would learn the stochastic system dynamics.

Another major challenge they mentioned is to unify, in a single architecture, both the declarative knowledge representation (like a structural causal model) and the inference mechanism, which may be implemented with attention and modularity, as with Recurrent Independent Mechanisms (RIMs) and their variants.

Read the paper here.