Most machine learning algorithms rest on mathematics. Some are purely mathematical, while others combine logic with mathematics, so understanding them requires a few basic mathematical concepts. Eigendecomposition is one such concept from linear algebra: it provides a way to factorize matrices and is used in several areas of machine learning. In this article, we discuss eigendecomposition and how to perform it on a simple use case. The major points to be discussed in the article are listed below.

Table of contents

- What is eigendecomposition

- Mathematics behind eigendecomposition

- Eigendecomposition in machine learning

- Implementation of eigendecomposition

Let’s first discuss eigendecomposition.

What is Eigendecomposition?

Eigendecomposition is a technique from linear algebra for factoring a matrix into a canonical form. After the factorization, the matrix is represented in terms of its eigenvectors and eigenvalues. Note that only square matrices can be factorized using eigendecomposition.


Mathematics behind Eigendecomposition

We can build a better understanding of eigendecomposition using mathematical expressions. Suppose a nonzero vector v of dimension A is an eigenvector of a square matrix N of size A × A. Then the following equation must be satisfied:

Nv = 𝜆v,

where 𝜆 is a scalar. If the equation is satisfied, 𝜆 is called the eigenvalue corresponding to v. Geometrically, an eigenvector of a matrix is a vector whose direction the matrix leaves unchanged (or exactly reverses, when 𝜆 is negative): the matrix only stretches or shrinks it, and the corresponding eigenvalue gives the factor of that stretch or shrink. Rearranging the equation as (N − 𝜆I)v = 0, where I is the A × A identity matrix, a nonzero solution v exists only when N − 𝜆I is singular. This gives the characteristic equation for the eigenvalues:

p(𝜆) = det(N − 𝜆I) = 0
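As a quick numerical check of the two equations above, the following sketch uses NumPy on a small 2 × 2 matrix. The matrix is an illustrative assumption, not one taken from the article; any square matrix could be substituted.

```python
import numpy as np

# Illustrative symmetric matrix (an assumption for this sketch).
N = np.array([[2.0, 1.0],
              [1.0, 2.0]])

# Column i of `eigenvectors` is the eigenvector paired with eigenvalues[i].
eigenvalues, eigenvectors = np.linalg.eig(N)

identity = np.eye(N.shape[0])
residuals = []    # ||N v - lambda v|| for each eigenpair
char_values = []  # det(N - lambda I) for each eigenvalue

for i, lam in enumerate(eigenvalues):
    v = eigenvectors[:, i]
    residuals.append(np.linalg.norm(N @ v - lam * v))
    char_values.append(np.linalg.det(N - lam * identity))

# Both lists should contain values that are zero up to floating-point error.
print("eigenvalues:", eigenvalues)
print("residuals of N v = lambda v:", residuals)
print("det(N - lambda I):", char_values)
```

Both checks are expected to come out at zero only up to rounding, which is why the sketch reports the residuals instead of testing for exact equality.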

Suppose N is a square A × A matrix with A linearly independent eigenvectors q_i, where i = 1, …, A. Then we can factorize N as follows:

N = QΛQ⁻¹

where Q is a square A × A matrix whose i-th column is the eigenvector q_i of N, and Λ is a diagonal matrix whose diagonal entries are the corresponding eigenvalues 𝜆_i.
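The factorization can be checked numerically. The sketch below builds Q and the diagonal eigenvalue matrix with NumPy and multiplies them back together; the 2 × 2 matrix is an illustrative assumption chosen to have two distinct eigenvalues, so its eigenvectors are linearly independent.

```python
import numpy as np

# Illustrative matrix with distinct eigenvalues (an assumption for this sketch).
N = np.array([[4.0, 1.0],
              [2.0, 3.0]])

# Q holds the eigenvectors as columns; eigenvalues[i] pairs with Q[:, i].
eigenvalues, Q = np.linalg.eig(N)

# Diagonal matrix with the eigenvalues on its diagonal.
Lambda = np.diag(eigenvalues)

# Rebuild N from its factors: Q @ Lambda @ Q^-1.
N_rebuilt = Q @ Lambda @ np.linalg.inv(Q)

# The rebuilt matrix should match N up to floating-point error.
print(np.allclose(N, N_rebuilt))
```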

Eigendecomposition in machine learning

As discussed above, eigenvectors are nonzero vectors that a matrix stretches without changing their direction, and eigenvalues define the amount of stretch. Once a matrix has been decomposed, many linear operations become simpler because they can be applied to the eigenvalues instead of to the whole matrix. Many machine learning procedures likewise work with scalar values and not with full matrices, so these operations can be applied to the eigenvalues directly.
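One operation that becomes simpler this way is raising a matrix to a power: with the factorization in hand, only the eigenvalues need to be raised to that power. The following is a minimal sketch of that idea, assuming an illustrative 2 × 2 matrix and exponent that do not come from the article.

```python
import numpy as np

# Illustrative matrix and exponent (assumptions for this sketch).
N = np.array([[4.0, 1.0],
              [2.0, 3.0]])
k = 5

eigenvalues, Q = np.linalg.eig(N)

# Apply the power to the eigenvalues only, then map back with Q and Q^-1.
N_power = Q @ np.diag(eigenvalues ** k) @ np.linalg.inv(Q)

# Reference result from repeated matrix multiplication.
direct = np.linalg.matrix_power(N, k)

print(np.allclose(N_power, direct))
```

The same pattern applies to other functions of a diagonalizable matrix, since the function only has to be evaluated on the diagonal entries.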

Eigendecomposition is mainly used in the dimensionality reduction part of machine learning. As discussed above, eigenvectors are the vectors that a matrix stretches without changing their direction, and eigenvalues are the amount of stretch. Dimensionality reduction projects the data onto a smaller number of directions that retain most of its variance, and principal component analysis (PCA) relies on eigendecomposition to find those directions. The method works as follows:

- Centers the data on a point (the mean of each feature)

- Computes the covariance matrix

Covariance measures how two variables vary together. Once the covariance matrix is computed, multiplying it by a vector gives another vector, and if the multiplication is repeated, the direction of the resulting vector gradually converges to the direction of maximum variance. That direction is the eigenvector of the covariance matrix with the largest eigenvalue, so in effect we are performing an eigendecomposition and finding the eigenvectors and eigenvalues. Let’s see how we can do this in Python.
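The two PCA steps and the repeated multiplication described above can be sketched with NumPy as follows. This is a rough illustration and not how any particular library implements PCA. It assumes the rows of the matrix are samples and the columns are features, uses a starting vector of ones, and fixes the iteration count at 100; all three are choices made for this sketch.

```python
import numpy as np

# Three samples (rows) with three features (columns).
data = np.array([[2.5, 2.4, 2.3],
                 [0.5, 0.7, 0.9],
                 [2.2, 2.9, 2.7]])

# Step 1: center each feature on its mean.
centered = data - data.mean(axis=0)

# Step 2: covariance matrix of the features.
cov = np.cov(centered, rowvar=False)

# Repeated multiplication (power iteration): the vector is renormalized
# after every step so that only its direction changes.
v = np.ones(cov.shape[0])
for _ in range(100):
    v = cov @ v
    v = v / np.linalg.norm(v)

# The stretch along the converged direction estimates the largest eigenvalue.
top_eigenvalue = v @ cov @ v

# Reference: eigh suits symmetric matrices and returns eigenvalues in ascending order.
eigenvalues, eigenvectors = np.linalg.eigh(cov)

print("power iteration eigenvalue:", top_eigenvalue)
print("largest eigenvalue from eigh:", eigenvalues[-1])
```

The converged vector may differ from the library eigenvector by sign, since both v and −v describe the same direction.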

Implementation of Eigendecomposition

There are several ways to perform eigendecomposition, and all we need is a square matrix. We can do it using the NumPy library in the following way:

Defining the matrix

import numpy as np

data = np.array([[2.5, 2.4, 2.3], [0.5, 0.7, 0.9], [2.2, 2.9, 2.7]])

data

Output:

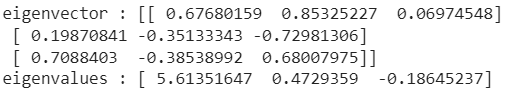

Extracting the eigenvalues and eigenvectors

values, vectors = np.linalg.eig(data)

print('eigenvector :',vectors)

print('eigenvalues :',values)

Output:

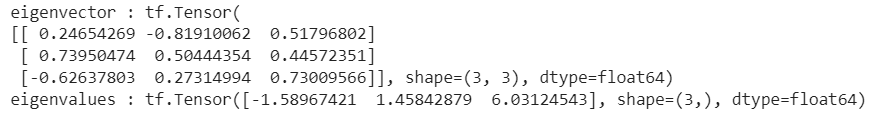

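We can also use the TensorFlow library to factorize the matrix using eigendecomposition in the following way. Because data is not symmetric, we use tf.linalg.eig here; tf.linalg.eigh assumes a symmetric (Hermitian) matrix and would not return the eigenvalues of data.

import tensorflow as tf

# tf.linalg.eig handles general square matrices and returns complex-valued tensors

values, vectors = tf.linalg.eig(data)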

print('eigenvector :',vectors)

print('eigenvalues :',values)

Output:

One of the major uses of this decomposition is in principal component analysis. The PCA class in scikit-learn performs an equivalent computation in the background. If we are performing dimensionality reduction with PCA, we can extract the corresponding eigenvectors and eigenvalues in the following way:

Fitting PCA on the matrix

from sklearn.decomposition import PCA

import numpy as np

print(data)

pca = PCA()

pca.fit(data)

Output:

Extracting eigenvectors and values

print('eigenvectors :',pca.components_)

print('eigenvalues :',pca.explained_variance_)

Output:

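Here we can see that scikit-learn uses the names components and explained variance in place of eigenvectors and eigenvalues, which keeps the naming consistent with its other dimensionality reduction techniques. For PCA, components_ holds the eigenvectors (one per row) and explained_variance_ holds the eigenvalues of the covariance matrix of the data, so they differ from what np.linalg.eig returned for the data matrix itself.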
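To make the correspondence concrete, the sketch below compares the attributes of a fitted PCA object with an eigendecomposition of the covariance matrix computed directly in NumPy. It assumes the rows of the matrix are samples. The sorting step is there because np.linalg.eigh returns eigenvalues in ascending order, while PCA reports them in descending order.

```python
import numpy as np
from sklearn.decomposition import PCA

data = np.array([[2.5, 2.4, 2.3],
                 [0.5, 0.7, 0.9],
                 [2.2, 2.9, 2.7]])

pca = PCA()
pca.fit(data)

# Covariance matrix of the features; np.cov centers the data internally.
cov = np.cov(data, rowvar=False)

# eigh suits symmetric matrices; reorder so the largest eigenvalue comes first.
eigenvalues, eigenvectors = np.linalg.eigh(cov)
order = np.argsort(eigenvalues)[::-1]
eigenvalues = eigenvalues[order]
eigenvectors = eigenvectors[:, order]

# Eigenvalues should match the explained variances.
print(np.allclose(pca.explained_variance_, eigenvalues))

# Each PCA component (a row) should match an eigenvector (a column) up to sign,
# so the absolute dot product of the leading pair should be close to 1.
print(abs(pca.components_[0] @ eigenvectors[:, 0]))
```

Eigenvectors are only defined up to sign, which is why the comparison uses an absolute dot product and not element-wise equality.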

Final words

In this article, we discussed eigendecomposition, which decomposes a square matrix into its eigenvalues and eigenvectors. We also discussed how it is used in machine learning and how to factorize matrices using Python.