Self-supervision is a form of unsupervised learning in which the data itself provides the supervision. It has gained popularity in machine learning over the last few years. It lets neural language models improve their natural language understanding (NLU) by learning from vast amounts of unannotated text at the pre-training stage. Most importantly, self-supervision plays a key role in advancing the state of the art on natural language processing (NLP) tasks.

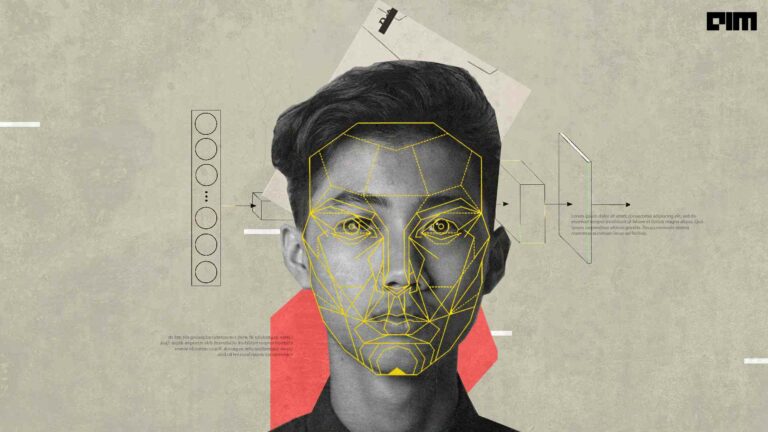

There is a huge difference between the thinking capabilities of human beings and machines, and researchers are trying hard to close this gap. Consider sentences that contain homonyms, such as “Alice always ties her hair back in a band and she enjoys listening to songs by rock bands like the Beatles,” or “Bob is an exceptional bass player and he does not like to eat bass.” Humans instantly understand that the words “band” and “bass” mean different things in each context. This is where machine learning and artificial intelligence techniques fall short most of the time.

To mitigate such issues, researchers from the Israeli research company AI21 Labs recently developed a machine learning model that aims to capture the meaning of words as humans use them in sentences. The method, named SenseBERT, applies self-supervision directly at the level of a word’s meaning.

Behind the Model

The image above shows the difference between a standard BERT model and SenseBERT: the latter adds a masked-word supersense prediction task, which is pre-trained jointly with BERT's original masked-word prediction task.

The researchers trained a semantic-level language model by adding a masked-word sense prediction task as an auxiliary task in BERT's pre-training. The model thereby predicts the missing word's meaning jointly with the standard word-form-level language modeling objective. To retain the ability to self-train on unannotated text, the researchers used WordNet. In other words, the model is pre-trained to predict not only the masked words but also their WordNet supersenses.
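The joint objective described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the logits, the tiny vocabulary, the supersense inventory, and the choice to simply add the two cross-entropy terms with a hypothetical weight `sense_weight` are all assumptions made for the example.

```python
import math

def softmax(logits):
    """Convert raw scores into a probability distribution."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, target_index):
    """Negative log-probability assigned to the correct class."""
    return -math.log(softmax(logits)[target_index])

def joint_loss(word_logits, word_target, sense_logits, sense_target,
               sense_weight=1.0):
    """Masked-word loss plus an auxiliary masked-supersense loss.

    sense_weight is a hypothetical knob, not a value from the paper.
    """
    word_loss = cross_entropy(word_logits, word_target)
    sense_loss = cross_entropy(sense_logits, sense_target)
    return word_loss + sense_weight * sense_loss

# Toy setup (assumed): "Bob does not like to eat [MASK]."
vocab = ["bass", "guitar", "band"]
supersenses = ["noun.food", "noun.artifact", "noun.group"]

word_logits = [2.0, 0.5, 0.1]   # hypothetical model scores over vocab
sense_logits = [1.5, 1.0, 0.2]  # hypothetical model scores over supersenses

loss = joint_loss(
    word_logits, vocab.index("bass"),
    sense_logits, supersenses.index("noun.food"),
)
print(f"joint loss: {loss:.3f}")
```

Because both terms are computed for the same masked position, a model trained this way is penalized when it guesses a plausible word form but the wrong kind of meaning.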

WordNet is a large lexical database of English in which nouns, verbs, adjectives, and adverbs are grouped into sets of cognitive synonyms (synsets), each expressing a distinct concept. The database is a useful tool for computational linguistics and natural language processing.
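To make the synset idea concrete, here is a small hand-written sketch. The entries are toy data invented for illustration and are not copied from WordNet; the `Synset` class and the `candidate_supersenses` helper are likewise hypothetical names. The sketch shows how one word form can belong to several synsets, and therefore to several coarse categories (supersenses).

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Synset:
    """A set of near-synonymous lemmas sharing one concept (toy model)."""
    lemmas: frozenset
    gloss: str
    supersense: str  # coarse category label, written WordNet-style

# Hand-written toy entries, NOT real WordNet data.
TOY_SYNSETS = [
    Synset(frozenset({"bass", "sea bass"}), "an edible fish", "noun.food"),
    Synset(frozenset({"bass", "bass guitar"}), "a low-pitched instrument",
           "noun.artifact"),
    Synset(frozenset({"band", "ring"}), "a strip or loop of material",
           "noun.artifact"),
    Synset(frozenset({"band", "musical group"}), "a group of musicians",
           "noun.group"),
]

def candidate_supersenses(word):
    """Return every supersense a word form can take, sorted for stable output."""
    return sorted({s.supersense for s in TOY_SYNSETS if word in s.lemmas})

for w in ("bass", "band"):
    print(w, "->", candidate_supersenses(w))
```

An ambiguous word form maps to more than one category here, which is exactly the ambiguity a sense-level prediction task has to resolve from context.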

How SenseBERT Is Better Than Existing Self-Supervision Techniques

Compared with existing self-supervision techniques, SenseBERT achieves significantly improved lexical understanding, attaining a state-of-the-art result on the Word in Context (WiC) task. According to the researchers, existing self-supervision techniques operate at the word-form level, which serves as a surrogate for the underlying semantic content, whereas SenseBERT employs self-supervision directly at the word-sense level.

Why Use This

According to the researchers, a BERT model trained with the current word-level self-supervision, which leaves word-sense disambiguation as an implicit task, exhibits high supersense misclassification rates and often fails to extract lexical semantics. The researchers propose SenseBERT to overcome this shortcoming.

Outlook

SenseBERT has significantly improved lexical disambiguation abilities. Semantic networks are popular in AI and natural language processing for tasks such as representing data, supporting conceptual editing, and revealing structures, because of their ability to represent knowledge and support reasoning. Bidirectional Encoder Representations from Transformers (BERT) is one of the most popular breakthroughs in transfer learning for deep learning models. In one of our articles, we also discussed Vision-and-Language BERT (ViLBERT), an extension of BERT that learns task-agnostic joint representations of image content and natural language.

Read the paper here.