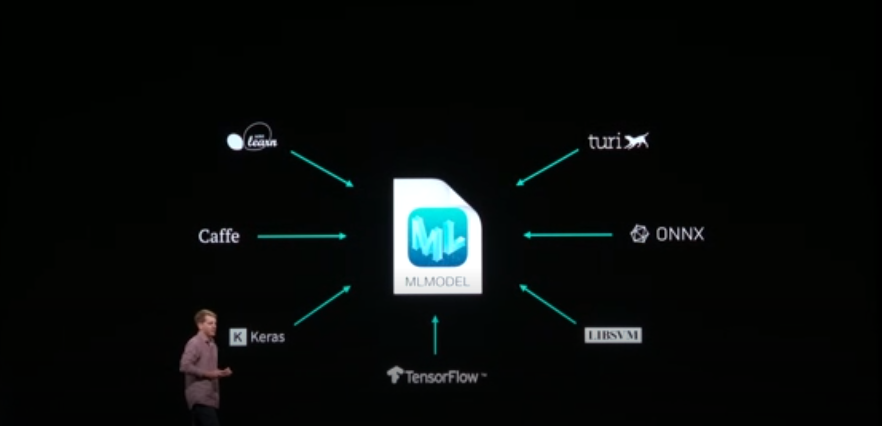

Apple has been revolutionising personal technology for over three decades now. Its obsession with cutting-edge technology has made it a pioneer in advanced fields such as machine learning. At the ongoing Apple Worldwide Developers Conference in San Jose, CA, Apple released Core ML 3 and other tool upgrades for the machine learning community.

On-Device Personalisation With Core ML 3

Core ML forms the foundation for domain-specific frameworks and functionality. It supports Vision for image analysis, Natural Language for natural language processing, and GameplayKit for evaluating learned decision trees. Core ML itself is built on top of low-level primitives such as Accelerate and BNNS, as well as Metal Performance Shaders. Core ML 3 also supports the acceleration of more types of advanced, real-time machine learning models.

With over 100 model layers now supported by Core ML, the ML team at Apple believes that apps can use state-of-the-art models to deliver experiences that deeply understand vision, natural language, and speech.

And for the first time, developers can update machine learning models on-device using model personalization. This cutting-edge technique gives developers the opportunity to provide personalized features without compromising user privacy.
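As a rough illustration of model personalization, here is a minimal Swift sketch. It assumes an app targeting iOS 13 or macOS 10.15 or later that already ships a compiled Core ML model marked as updatable. The helper name `personalizeModel` and its URL parameters are hypothetical and chosen only for this example; `MLUpdateTask` is the Core ML class that performs the on-device update.

```swift
import CoreML
import Foundation

// Hypothetical helper (not from the announcement): retrains an updatable,
// compiled Core ML model on user-specific examples and saves the result.
// Assumes `compiledModelURL` points to a compiled model that was exported
// as updatable, and `trainingData` holds examples matching its inputs.
func personalizeModel(at compiledModelURL: URL,
                      with trainingData: MLBatchProvider,
                      savingTo updatedModelURL: URL) throws {
    let task = try MLUpdateTask(
        forModelAt: compiledModelURL,
        trainingData: trainingData,
        configuration: nil,
        completionHandler: { context in
            // Called when training finishes; the updated model stays on the device.
            do {
                try context.model.write(to: updatedModelURL)
            } catch {
                print("Could not save the updated model: \(error)")
            }
        })
    // The update runs asynchronously once the task is resumed.
    task.resume()
}
```

Because both the training data and the updated model remain on the device, nothing in this flow needs to be sent to a server, which is the privacy benefit described above.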

Other features include:

- Improved landmark detection, rectangle detection, barcode detection, object tracking, and image registration in Vision, plus a new Document Camera API to detect and capture documents using the camera

- Natural language analysis that deduces language-specific metadata from text for a deeper understanding. The Natural Language framework can be used with Create ML to train and deploy custom NLP models

- On-device speech recognition for 10 languages as well as speech saliency features such as pronunciation information, streaming confidence, utterance detection, and acoustic features
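To give a sense of what deducing language-specific metadata can look like in practice, here is a small Swift sketch using the Natural Language framework. The helper names `dominantLanguageCode` and `lexicalClasses` and the sample sentence are assumptions made for this example; `NLLanguageRecognizer` and `NLTagger` are the framework's own classes.

```swift
import NaturalLanguage

// Hypothetical helper: returns the code of the language the framework
// considers most likely for the text, or nil if it cannot decide.
func dominantLanguageCode(of text: String) -> String? {
    return NLLanguageRecognizer.dominantLanguage(for: text)?.rawValue
}

// Hypothetical helper: pairs each word with its lexical class (noun, verb, ...).
func lexicalClasses(in text: String) -> [(word: String, tag: String)] {
    let tagger = NLTagger(tagSchemes: [.lexicalClass])
    tagger.string = text
    var result: [(word: String, tag: String)] = []
    tagger.enumerateTags(in: text.startIndex..<text.endIndex,
                         unit: .word,
                         scheme: .lexicalClass,
                         options: [.omitWhitespace, .omitPunctuation]) { tag, range in
        if let tag = tag {
            result.append((word: String(text[range]), tag: tag.rawValue))
        }
        return true // keep enumerating
    }
    return result
}

// Usage: print the detected language and the tag assigned to each word.
let sample = "Apple released new machine learning tools for developers."
print(dominantLanguageCode(of: sample) ?? "unknown")
for entry in lexicalClasses(in: sample) {
    print("\(entry.word): \(entry.tag)")
}
```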

Machine Learning Modeling With Create ML

The new, user-friendly Create ML app allows developers to build, train, and deploy machine learning models with no machine learning expertise required. Create ML lets users view model creation workflows in real time. Developers can even train models from Apple with their own custom data, with no need for a dedicated server.

Because Create ML is a dedicated app for machine learning development, developers can build models without writing code. It also supports training multiple models on different datasets and adds new model types such as object detection, activity classification, and sound classification.

With Create ML, one can:

- Build models for object detection, activity and sound classification, and recommendations. Take advantage of word embeddings and transfer learning for text classification

- Train multiple models using different datasets, all at the same time

- Test models before deploying them in production with evaluation support that’s unique to each training task

Along with the above features, Apple also demonstrated its AR/VR capabilities and previewed its new operating system, macOS Catalina. The five-day event concludes today.