The pipeline of a machine learning project consists of various stages, each of which has a significant influence on the final outcome or prediction.

During training, for example, computations can be performed locally, but the resulting changes to the model have to be transmitted so that every subsequent training step incorporates them.

At the fundamental level, the data that is transmitted during a training step also plays a key role in the outcome: both the amount of data that needs to be transferred and the accuracy of the resulting model matter.

And that’s why the ML team has to walk a tightrope when it comes to maintaining accuracy with torrential inflows of data.

Whenever a model is trained, there is first a forward pass in which the loss function is evaluated, and then a backward pass in which the gradients needed to reduce the loss are computed. These gradients are then pushed to servers, which aggregate the updates from all the users and apply the changes to the global model.
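
The loop described above can be sketched in a few lines. This is a minimal illustration only, assuming a one-parameter linear model, a squared-error loss and a server that simply averages the gradients; the function names `local_gradient` and `server_step` are hypothetical and not taken from 3LC.

```python
def local_gradient(weight, batch):
    """Run the forward and backward pass for one user on its local batch."""
    grad = 0.0
    for x, y in batch:
        error = weight * x - y       # forward pass: prediction error
        grad += 2.0 * error * x      # backward pass: d(error^2)/d(weight)
    return grad / len(batch)


def server_step(weight, user_batches, learning_rate=0.1):
    """Aggregate the gradients pushed by every user and update the global model."""
    grads = [local_gradient(weight, batch) for batch in user_batches]
    mean_grad = sum(grads) / len(grads)
    return weight - learning_rate * mean_grad


# Two users, each holding samples of y = 2x (illustrative data).
user_batches = [[(1.0, 2.0), (2.0, 4.0)], [(3.0, 6.0)]]
weight = 0.0
for _ in range(50):
    weight = server_step(weight, user_batches)
print(weight)
```

Every call to `server_step` stands for one round of communication: each user sends a gradient and receives the updated weight. In a real model these are millions of values per step, which is the traffic 3LC aims to shrink.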

This procedure is repeated many times over the course of training a model, which means more data is generated with every training step. Delays in transmitting the changes between the users in an ML ecosystem cause computational redundancies to pile up, and that eventually gets expensive.

Hyeontaek Lim and his peers at Google Brain propose a scheme, 3LC, which strikes a balance between the various goals of an ML ecosystem, such as accuracy and computational overhead.

3LC is a lossy compression technique that compresses the transmitted data while preserving the accuracy of the model.

So, why compress the data when there have been such massive advancements in the way data is moved, be it on the hardware or the software end?

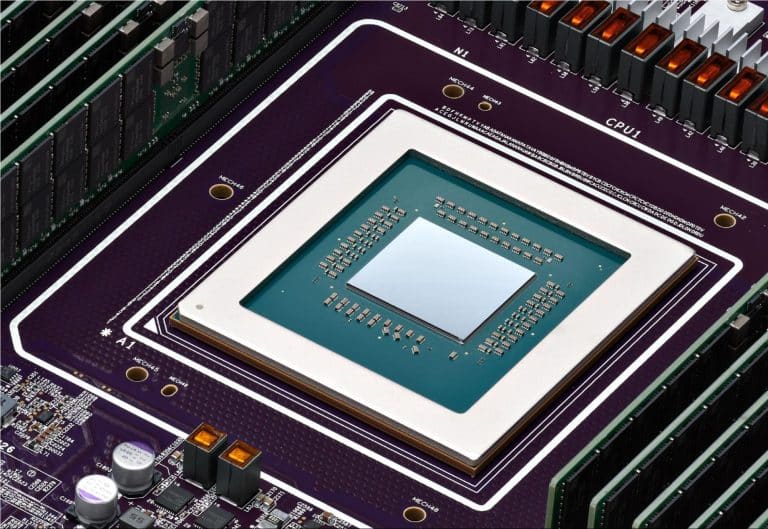

The researchers observe that the deep learning performance of current state-of-the-art GPUs has increased by 25 times, whereas the interconnect bandwidth for data transfer has increased only by 15 times. This gap is expected to put more strain on communication (sharing changes in the pipeline across teams) within an ML ecosystem in the near future.

Idea Behind 3LC

- Large datasets and longer training times mean heavy lifting on the computation end. To shed some of that weight, local state changes should be transmitted quickly to the servers.

- Faster transmission means trading off against the amount of data that can be sent.

- Transmitting more data within the same bandwidth requires compressing data without loss in accuracy.

- Even a small reduction in bandwidth use has overall benefits. 3LC can then be pushed to its extremes to study cheaper data transmission and its impact on the design of GPU clusters and other hardware accelerators.

Features of 3LC model

- Uses 0.3-0.8 bits for each real-number state change on average, i.e. a 39-107x reduction in traffic

- No loss in accuracy

- Light on computation
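
As a rough check on the traffic figures above, the sketch below converts bits per state change into a compression ratio. It assumes each uncompressed state change is a 32-bit float; that baseline is an assumption made here for illustration, and `compression_ratio` is a hypothetical helper.

```python
BASELINE_BITS = 32  # assumed: an uncompressed state change is a 32-bit float


def compression_ratio(bits_per_value, baseline_bits=BASELINE_BITS):
    """Return how many times smaller the compressed traffic is."""
    return baseline_bits / bits_per_value


for bits in (0.8, 0.3):
    print(f"{bits} bits/value -> about {compression_ratio(bits):.0f}x smaller")
```

With the rounded bit counts this gives roughly 40x and 107x, which is in line with the reported 39-107x range.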

The 3LC scheme combines two compression approaches: quantization and sparsification.

3-value quantization with sparsity multiplication represents each state change with one of 3 values, {-1, 0, 1}. A single byte of data contains a group of five 3-values, encoded in base-3^5 (3^5 = 243 combinations, which fit within the 256 values of a byte).
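
To make the two steps concrete, here is a minimal pure-Python sketch. It assumes the quantizer divides each value by the largest magnitude M times a sparsity multiplier s (taken to lie between 1 and 2) and rounds to the nearest integer, and that a group of five 3-values is packed as five base-3 digits. The function names and the exact formula are illustrative assumptions, not the TensorFlow prototype.

```python
def quantize_3value(state_change, sparsity=1.5):
    """Map each float to -1, 0 or 1; return the 3-values and the scale M."""
    m = max((abs(x) for x in state_change), default=0.0)
    if m == 0.0:
        return [0] * len(state_change), 0.0
    # A larger sparsity multiplier pushes more small values to 0.
    return [int(round(x / (m * sparsity))) for x in state_change], m


def pack_base243(values):
    """Pack five 3-values per byte as base-3 digits (3^5 = 243 <= 256)."""
    padded = list(values) + [0] * (-len(values) % 5)  # pad with quantized zeros
    packed = bytearray()
    for i in range(0, len(padded), 5):
        byte = 0
        for v in padded[i:i + 5]:
            byte = byte * 3 + (v + 1)  # shift {-1, 0, 1} to digits {0, 1, 2}
        packed.append(byte)
    return bytes(packed)


def unpack_base243(packed, count):
    """Recover the first `count` 3-values from the packed bytes."""
    values = []
    for byte in packed:
        group = []
        for _ in range(5):
            byte, digit = divmod(byte, 3)
            group.append(digit - 1)
        values.extend(reversed(group))  # digits come out least significant first
    return values[:count]


change = [0.9, -0.05, 0.4, -1.0, 0.0, 0.2, -0.7]
quantized, scale = quantize_3value(change)
packed = pack_base243(quantized)
restored = unpack_base243(packed, len(quantized))
print(quantized, len(packed), restored == quantized)
```

Seven floating-point values end up in two bytes here. Only the packing step is lossless: the quantization step throws information away, which is where the sparsity multiplier trades accuracy against the number of zeros.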

In other words: less space, more data, reduced traffic and less computational brute force.

The result is a balance between algorithmic efficiency, computational efficiency, communication bandwidth and applicability to the machine learning ecosystem.

The prototype of 3LC is implemented on TensorFlow using its built-in vectorized operators. 3LC is designed so that there is no redundant compression. When a trainer uploads its own model state changes to the server, the changes get synchronised across the system, which avoids individual compression by each trainer. Once a training step is completed and the state variables are updated, the same compression is not repeated; a decentralised system at work.

Deep neural networks usually operate on large datasets or data streams. These compression methodologies reduce the memory requirement while maintaining the model's speed and accuracy during distributed training steps.

Check the results here