Currently, one of the most explored and favoured machine learning techniques by the biggest and brightest AI brains, Reinforcement Learning is a term known to practically everyone working in the field of AI. The process of learning by reinforcement is by itself a strong sign of intelligence that we humans can easily relate to. We have already discussed reinforcement learning with a very popular algorithm called Thompson Sampling in one of our previous articles.

Meanwhile, feel free to check out our latest hackathon in Machinehack – Predict The Cost Of Used Cars – Hackathon By Imarticus. The hackathon is conducted in partnership with Imarticus Learning. Participate now and win exciting prizes.

In this article, we will explore another popular algorithm that implements reinforcement learning called the Upper Confidence Bound or UCB.

What is UCB

Unlike Thompson sampling that we discussed in one of our previous articles which is a probabilistic algorithm meaning that the success rate distribution of the bandits was calculated based on the probability distribution. UCB is a deterministic algorithm meaning that there is no factor of uncertainty or probability.

We will use the same MultiArmed Bandit Problem to understand UCB. If you are not familiar with the Mult-Armed Bandit Problem(MABP), please go ahead and read through the article – The Intuition Behind Thompson Sampling Explained With Python Code.

UCB is a deterministic algorithm for Reinforcement Learning that focuses on exploration and exploitation based on a confidence boundary that the algorithm assigns to each machine on each round of exploration. (A round is when a player pulls the arm of a machine)

Inside UCB

We will try to understand UCB as simple as possible. Consider there are 5 bandits or slot machines namely B1, B2, B3, B4 and B5.

Given the 5 machines, using UCB we are going to devise a sequence of playing the machines in a way to maximize the yield or rewards from the machines.

Given below are the intuitive steps behind UCB for maximizing the rewards in a MABP:

Step 1: Each machine is assumed to have a uniform Confidence Interval and a success distribution. This Confidence Interval is a margin of success rate distributions which is the most certain to consist of the actual success rate distribution of each machine which we are unaware of in the beginning.

Step 2: A machine is randomly chosen to play, as initially, they have all the same confidence Intervals.

Step 3: Based on whether the machine gave a reward or not, the Confidence Interval shifts either towards or away from the actual success distribution and the also converges or shrinks as it has been explored thus resulting in the Upper bound value of the confidence Interval to also be reduced.

Step 4: Based on the current Upper Confidence bounds of each of the machines, the one with the highest is chosen to explore in the next round.

Step 5: Steps 3 and 4 are continued until there are sufficient observations to determine the upper confidence bound of each machine. The one with the highest upper confidence bound is the machine with the highest success rate.

Learn The Math Behind UCB

Given below is the algorithm inside UCB that updates the Confidence bounds of each machine after each round.

Step 1: Two values are considered for each round of exploration of a machine

- The number of times each machine has been selected till round n

- The sum of rewards collected by each machine till round n

Step 2: At each round, we compute the average reward and the confidence interval of the machine i up to n rounds as follows:

Average reward :

Confidence Interval :

Step 3: The machine with the maximum UCB is selected.

UCB:

Implementing UCB with MultiArmed Bandits Problem

Importing the dataset

We will use a simple dataset with 200 observations for 5 machines. Click here to download the sample or create your own by generating random numbers.

import pandas as pd

data = pd.read_csv(“UCBbandits.csv”)

Importing necessary libraries

import math

import matplotlib.pyplot as plt

import pandas as pd

Implementing UCB

Since we have to iterate through each observation of each of the 5 machines, we will start by initializing the number of observations and machines.

observations = 200

machines = 5

Now we will initialize the two necessary variables discussed in the algorithm as follows:

numbers_of_selections_of_each_machine = [0] * machines

sums_of_rewards_for_each_machine = [0] * machines

We will also define two more variables prior to the algorithm, one to store the sequence of the machines that are selected at each round and another variable to store the total rewards produced by the algorithm.

machines_selected = []

total_rewards = 0

Now let’s begin our algorithm, we will iterate over each machine in each observation starting with B1 (indexed 0) and with a maximum upper bound value of zero.

At each round, we will check if a machine(bandit) has been selected before or not. If yes, the algorithm proceeds to calculate the average rewards of the machine, the delta and the upper confidence. If not, that is if the machine is being selected for the first time then it sets a default upper bound value of 1e400.

After each round, the machine with the highest upper bound value is selected, the number of selections along with the actual reward and sum of rewards for the selected machine is updated.

After all the rounds are completed, we will have a machine with a maximum upper bound value.

The algorithm can be coded as follows:

for n in range(observations):

bandit = 0

max_upper_bound = 0

for i in range(machines):

if (numbers_of_selections_of_each_machine[i] > 0):

average_reward = sums_of_rewards_for_each_machine[i] / numbers_of_selections_of_each_machine[i]

di = math.sqrt(3/2 * math.log(n + 1) / numbers_of_selections_of_each_machine[i])

upper_bound = average_reward + di

else:

upper_bound = 1e400

if upper_bound > max_upper_bound:

max_upper_bound = upper_bound

bandit = i

machines_selected.append(bandit)

numbers_of_selections_of_each_machine[bandit] = numbers_of_selections_of_each_machine[bandit] + 1

reward = data.values[n, bandit]

sums_of_rewards_for_each_machine[bandit] = sums_of_rewards_for_each_machine[bandit] + reward

total_rewards = total_rewards + reward

Visualizing the results

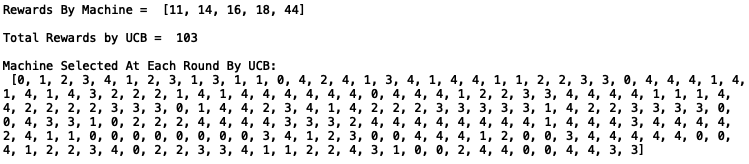

print("\n\nRewards By Machine = ", sums_of_rewards_for_each_machine)

print("\nTotal Rewards by UCB = ", total_rewards)

print("\nMachine Selected At Each Round By Thompson Sampling : \n", machine_selected)

Output:

Visualising the rewards of each machine

plt.bar(['B1','B2','B3','B4','B5'],sums_of_rewards_for_each_machine)

plt.title('MABP With UCB')

plt.xlabel('Bandits')

plt.ylabel('Rewards By Each Machine')

plt.show()

Output:

Visualising the selections of each machine

plt.bar(['B1','B2','B3','B4','B5'],numbers_of_selections_of_each_machine)

plt.title('Histogram of machines selected')

plt.xlabel('Bandits')

plt.ylabel('Number Of Times Each Bandit Was Selected To Play')

plt.show()

Output:

Here is what the complete code with proper indentation looks like: