Deep learning has seeped into almost every organisation and its day-to-day activities, from the health sector to the music industry. The market for this subset of machine learning is expected to reach $28.83 billion, growing at a CAGR of 48.4% over the 2018-2023 period. In this article, we list seven deep learning methods that an AI enthusiast must know.

(The list is in alphabetical order)

1| Back-Propagation

Introduced in the 1970s, back-propagation (backward propagation of errors) is a supervised learning algorithm used to train artificial neural networks. It calculates the gradient of the error function with respect to the network's weights by applying the chain rule layer by layer, starting at the output and moving backwards. Gradient descent then uses this gradient to search weight space for a minimum of the error function. The method can be seen as a generalisation of the delta rule to multilayer feedforward networks.
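
To make the chain-rule bookkeeping concrete, here is a minimal Python sketch. It assumes a tiny network with one hidden layer of sigmoid units, a single linear output and a squared-error loss; these choices and the names `forward`, `backprop` and `train_step` are illustrative, not taken from any library. `backprop` returns the gradients, and `train_step` applies one gradient-descent update with them.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(w1, b1, w2, b2, x):
    """Hidden layer of sigmoid units followed by one linear output unit."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(w1, b1)]
    output = sum(w * h for w, h in zip(w2, hidden)) + b2
    return hidden, output


def backprop(w1, b1, w2, b2, x, target):
    """Return the loss 0.5 * (output - target)**2 and its gradient w.r.t. every parameter."""
    hidden, output = forward(w1, b1, w2, b2, x)
    loss = 0.5 * (output - target) ** 2
    # Error at the output unit: dLoss/dOutput.
    delta_out = output - target
    grad_w2 = [delta_out * h for h in hidden]
    grad_b2 = delta_out
    # Propagate the error backwards through w2 and the sigmoid derivative h * (1 - h).
    delta_hidden = [delta_out * w * h * (1.0 - h) for w, h in zip(w2, hidden)]
    grad_w1 = [[d * xi for xi in x] for d in delta_hidden]
    grad_b1 = delta_hidden
    return loss, grad_w1, grad_b1, grad_w2, grad_b2


def train_step(params, x, target, lr=0.5):
    """One gradient-descent update using the gradients computed by backprop."""
    w1, b1, w2, b2 = params
    loss, gw1, gb1, gw2, gb2 = backprop(w1, b1, w2, b2, x, target)
    w1 = [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(w1, gw1)]
    b1 = [b - lr * g for b, g in zip(b1, gb1)]
    w2 = [w - lr * g for w, g in zip(w2, gw2)]
    b2 = b2 - lr * gb2
    return (w1, b1, w2, b2), loss


params = ([[0.1, -0.2], [0.4, 0.3]], [0.0, 0.0], [0.5, -0.5], 0.0)
for step in range(100):
    # `loss` is the loss measured just before each update.
    params, loss = train_step(params, x=[1.0, 2.0], target=1.0)
print(f"loss before the last update: {loss:.6f}")
```

A quick way to trust such code is a finite-difference check: nudging one weight up and down and measuring the change in loss should agree with the gradient `backprop` reports for that weight.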

2| Batch Normalisation

Normalisation is a crucial part of preparing data for machine learning models. Introduced in 2015, batch normalisation is a technique for improving the performance and stability of artificial neural networks. In deep neural networks, it standardises the inputs to a layer over each mini-batch, using that mini-batch's mean and variance, and then applies a learnable scale and shift. This helps stabilise the learning process and reduces the number of training epochs required to train deep neural networks.
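
The training-time forward pass can be sketched in a few lines of Python. This is a minimal sketch under assumptions: plain lists stand in for tensors, `gamma` and `beta` (the learnable scale and shift) are passed in rather than learned, `eps=1e-5` is just a commonly used small constant, and the running averages that frameworks keep for inference are omitted. The function name `batch_norm` is illustrative.

```python
import math


def batch_norm(batch, gamma, beta, eps=1e-5):
    """Training-mode batch normalisation of a mini-batch (list of rows, one column per feature)."""
    n = len(batch)
    num_features = len(batch[0])
    out = [[0.0] * num_features for _ in range(n)]
    for j in range(num_features):
        column = [row[j] for row in batch]
        mean = sum(column) / n
        var = sum((v - mean) ** 2 for v in column) / n  # variance over the mini-batch
        inv_std = 1.0 / math.sqrt(var + eps)  # eps avoids division by zero
        for i, v in enumerate(column):
            # Standardise, then apply the learnable scale (gamma) and shift (beta).
            out[i][j] = gamma[j] * (v - mean) * inv_std + beta[j]
    return out


mini_batch = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0], [4.0, 40.0]]
normalised = batch_norm(mini_batch, gamma=[1.0, 1.0], beta=[0.0, 0.0])
print(normalised)
```

With `gamma` of 1 and `beta` of 0, each feature column comes out with mean close to 0 and variance close to 1, even though the two input columns are on very different scales.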

3| Continuous Bag of Words (CBOW) Model

Fig: Continuous Bag of Words model (Source)

Continuous Bag of Words is one of the two model architectures introduced with Word2Vec. In CBOW, the model tries to predict the current (centre) word from its context, where the context is represented by multiple surrounding words. For instance, we can take “Bob” and “car” as context words and “drives” as the centre or target word. The word embeddings learned this way can help improve natural language processing tasks such as part-of-speech tagging and dependency parsing.
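
The sketch below shows the idea end to end in plain Python. It builds (context, centre) training pairs with a sliding window, averages the context embeddings into one "bag of words" vector, and trains a full-softmax classifier over the vocabulary to predict the centre word. It is a toy under stated assumptions: the names `cbow_pairs` and `CBOW`, the window size, embedding size and learning rate are all illustrative, and real Word2Vec implementations replace the full softmax with hierarchical softmax or negative sampling for speed.

```python
import math
import random


def cbow_pairs(tokens, window):
    """(context_words, centre_word) pairs: the context is every word within `window` of the centre."""
    pairs = []
    for i, centre in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        pairs.append(([tokens[j] for j in range(lo, hi) if j != i], centre))
    return pairs


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class CBOW:
    def __init__(self, vocab, dim=8, seed=0):
        rng = random.Random(seed)
        self.vocab = list(vocab)
        self.index = {w: i for i, w in enumerate(self.vocab)}
        # Input (context) embeddings and output (centre-word) embeddings.
        self.w_in = [[rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in self.vocab]
        self.w_out = [[rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in self.vocab]

    def _forward(self, context):
        # The "bag of words": average the context embeddings, ignoring their order.
        vecs = [self.w_in[self.index[w]] for w in context]
        h = [sum(col) / len(vecs) for col in zip(*vecs)]
        probs = softmax([sum(a * b for a, b in zip(row, h)) for row in self.w_out])
        return h, probs

    def predict(self, context):
        _, probs = self._forward(context)
        return self.vocab[max(range(len(probs)), key=probs.__getitem__)]

    def train_pair(self, context, centre, lr=0.1):
        """One SGD step on -log p(centre | context); returns the loss before the update."""
        h, probs = self._forward(context)
        target = self.index[centre]
        # d(loss)/d(score_j) = p_j - 1 for the target word, p_j otherwise.
        dscores = [p - (1.0 if j == target else 0.0) for j, p in enumerate(probs)]
        grad_h = [sum(d * row[k] for d, row in zip(dscores, self.w_out)) for k in range(len(h))]
        for j, d in enumerate(dscores):
            self.w_out[j] = [w - lr * d * hk for w, hk in zip(self.w_out[j], h)]
        for word in context:  # h is an average, so each context word gets 1/len(context) of grad_h
            i = self.index[word]
            self.w_in[i] = [v - lr * g / len(context) for v, g in zip(self.w_in[i], grad_h)]
        return -math.log(probs[target])


tokens = "Bob drives car".split()
pairs = cbow_pairs(tokens, window=1)
model = CBOW(sorted(set(tokens)))
for epoch in range(500):
    for context, centre in pairs:
        model.train_pair(context, centre)
print(model.predict(["Bob", "car"]))
```

Note that the context words are averaged, so their order is lost; this is what "bag of words" refers to.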

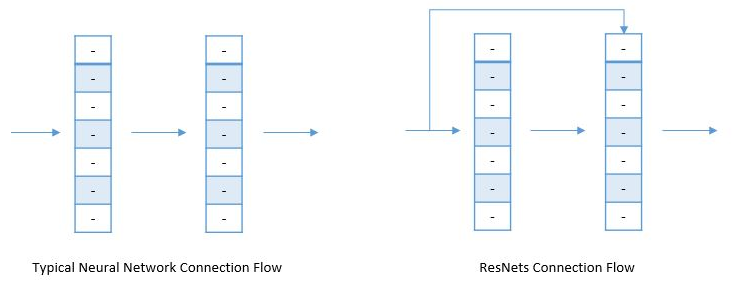

4| ResNet

ResNet, or Residual Neural Network, makes it possible to train substantially deeper neural networks. It breaks a deep network into small blocks of layers that are connected through skip (shortcut) connections to form a bigger network. Instead of learning a desired mapping H(x) directly, each block learns the residual F(x) = H(x) - x, and the skip connection adds the block's input back, so that the block outputs F(x) + x.
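
A residual block is easy to sketch. The Python below is a toy under assumptions: dense (fully connected) layers stand in for the convolutions of the original ResNet, biases and batch normalisation are left out, and `residual_block`, `resnet` and the helpers are hypothetical names. Each block computes y = relu(F(x) + x) with F(x) = W2 · relu(W1 · x). If all the weights in F are zero, the block simply passes its (ReLU-ed) input through, which is part of the usual intuition for why very deep stacks of such blocks remain trainable.

```python
def relu(v):
    return [max(0.0, x) for x in v]


def matvec(matrix, v):
    return [sum(m * x for m, x in zip(row, v)) for row in matrix]


def residual_block(x, w1, w2):
    """y = relu(F(x) + x), where F(x) = w2 @ relu(w1 @ x) is the learned residual."""
    residual = matvec(w2, relu(matvec(w1, x)))
    # Skip connection: add the block's input back before the final activation.
    return relu([f + xi for f, xi in zip(residual, x)])


def resnet(x, blocks):
    """Chain residual blocks; `blocks` is a list of (w1, w2) weight pairs."""
    for w1, w2 in blocks:
        x = residual_block(x, w1, w2)
    return x


zeros = [[0.0] * 3 for _ in range(3)]
deep_net = [(zeros, zeros)] * 50  # 50 blocks whose residual branches output zero
print(resnet([0.5, 1.0, 2.0], deep_net))
```

Even with 50 stacked blocks, the all-zero residual branches leave a non-negative input unchanged, whereas a plain stack of zero-weight layers would output all zeros.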

Fig: ResNet Connection Flow (Source)

5| Stochastic Gradient Descent

Stochastic Gradient Descent is one of the most popular optimisation techniques in machine learning and deep learning. Instead of computing the gradient over the whole dataset for each iteration, it estimates the gradient from randomly selected samples. In its pure form, SGD uses a batch size of one, so each iteration updates the parameters using a single sample; the dataset is randomly shuffled so that samples are visited in a random order. Variants that use a few samples per iteration are known as mini-batch gradient descent.
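
As a minimal sketch, here is plain SGD with a batch size of one fitting a straight line y ≈ w·x + b to toy, noise-free data in Python. The model, the learning rate, the number of epochs and the function name `sgd_linear_regression` are assumptions made for illustration; the point is that every update uses the gradient of a single, randomly ordered sample's loss rather than the whole dataset.

```python
import random


def sgd_linear_regression(xs, ys, lr=0.1, epochs=200, seed=0):
    """Fit y ~ w * x + b with plain SGD: batch size one, data reshuffled every epoch."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the samples in a new random order each epoch
        for i in indices:
            error = (w * xs[i] + b) - ys[i]
            # Gradient of the per-sample loss 0.5 * error**2 w.r.t. w and b.
            w -= lr * error * xs[i]
            b -= lr * error
    return w, b


xs = [i / 10 for i in range(11)]
ys = [2.0 * x + 1.0 for x in xs]  # true line: w = 2, b = 1
w, b = sgd_linear_regression(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}")
```

Each update is cheap because it touches one sample; the price is a noisier path towards the minimum than full-batch gradient descent would take.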

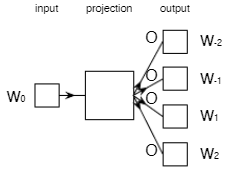

6| Skip Gram

Fig: Skip Gram model (Source)

Skip-gram is the other model architecture in Word2Vec and can be seen as the reverse of Continuous Bag of Words. Its objective is to maximise the likelihood of the context words given the centre word: the model predicts the surrounding context words from a given centre word. Taking the previous example, “drives” is now the input word, and “Bob” and “car” are the target words to predict. Just like the Continuous Bag of Words model, the embeddings learned by this method can help improve natural language processing tasks such as part-of-speech tagging and dependency parsing.
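
A minimal Python sketch of skip-gram training follows. The names `skipgram_pairs`, `SkipGram` and `total_loss`, the embedding size, learning rate and window are illustrative assumptions, and a full softmax stands in for the hierarchical softmax or negative sampling used in practice. Each (centre, context) pair is a separate training example, and each SGD step follows the gradient of log p(context | centre), which is the likelihood the objective above maximises.

```python
import math
import random


def skipgram_pairs(tokens, window):
    """(centre_word, context_word) pairs: each neighbour within `window` is its own example."""
    pairs = []
    for i, centre in enumerate(tokens):
        for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
            if j != i:
                pairs.append((centre, tokens[j]))
    return pairs


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class SkipGram:
    def __init__(self, vocab, dim=8, seed=0):
        rng = random.Random(seed)
        self.vocab = list(vocab)
        self.index = {w: i for i, w in enumerate(self.vocab)}
        # Input (centre-word) embeddings and output (context-word) embeddings.
        self.w_in = [[rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in self.vocab]
        self.w_out = [[rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in self.vocab]

    def context_proba(self, centre):
        """Softmax distribution p(context word | centre word) over the vocabulary."""
        h = self.w_in[self.index[centre]]
        return softmax([sum(a * b for a, b in zip(row, h)) for row in self.w_out])

    def train_pair(self, centre, context, lr=0.1):
        """One SGD step on -log p(context | centre); returns the loss before the update."""
        c = self.index[centre]
        h = self.w_in[c]
        probs = self.context_proba(centre)
        target = self.index[context]
        dscores = [p - (1.0 if j == target else 0.0) for j, p in enumerate(probs)]
        grad_h = [sum(d * row[k] for d, row in zip(dscores, self.w_out)) for k in range(len(h))]
        for j, d in enumerate(dscores):
            self.w_out[j] = [w - lr * d * hk for w, hk in zip(self.w_out[j], h)]
        self.w_in[c] = [v - lr * g for v, g in zip(h, grad_h)]
        return -math.log(probs[target])


def total_loss(model, pairs):
    """Negative log-likelihood of all context words given their centre words."""
    return -sum(math.log(model.context_proba(c)[model.index[ctx]]) for c, ctx in pairs)


tokens = "Bob drives car".split()
pairs = skipgram_pairs(tokens, window=1)
model = SkipGram(sorted(set(tokens)))
before = total_loss(model, pairs)
for epoch in range(500):
    for centre, context in pairs:
        model.train_pair(centre, context)
print(f"negative log-likelihood: {before:.3f} -> {total_loss(model, pairs):.3f}")
```

Because one centre word yields several pairs, skip-gram produces more training examples per sentence than CBOW, which makes each pass slower.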

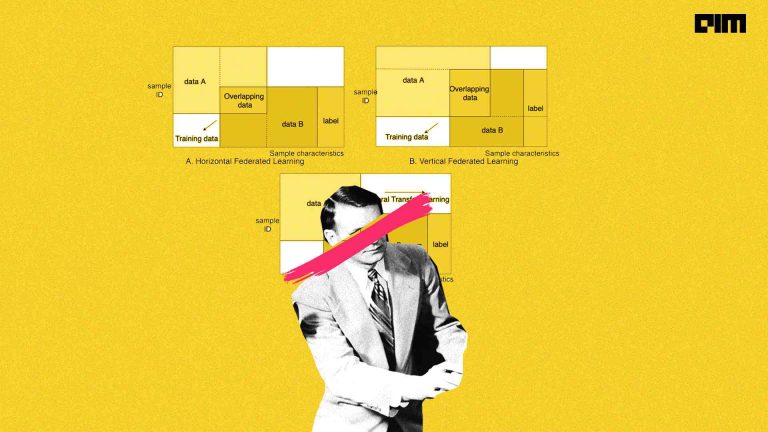

7| Transfer Learning

Transfer learning is one of the most popular approaches in deep learning and machine learning. In this approach, a model pre-trained on one problem is used as the starting point for a different but related problem, so that the knowledge gained while solving the first problem is applied to the second. This can speed up training and improve performance on the second task.
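
To make the idea concrete without a real deep network, here is a toy Python sketch of fine-tuning, with loud assumptions: a one-feature linear model stands in for a pre-trained network, task A (y = 2x + 1) plays the role of the original problem, task B (y = 2x + 1.2) the related one, and all names and numbers are hypothetical. Both runs on task B get the same short SGD budget; the only difference is whether training starts from the task-A weights or from zero.

```python
import random


def mean_loss(w, b, xs, ys):
    """Mean squared-error loss (with the conventional 1/2 factor) of the line w * x + b."""
    return sum(0.5 * (w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(xs, ys, w=0.0, b=0.0, lr=0.1, epochs=5, seed=0):
    """Plain SGD starting from (w, b), which may be weights pre-trained on another task."""
    rng = random.Random(seed)
    order = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            error = w * xs[i] + b - ys[i]
            w -= lr * error * xs[i]
            b -= lr * error
    return w, b


xs = [i / 10 for i in range(11)]
task_a = [2.0 * x + 1.0 for x in xs]  # original problem, trained for a long time
task_b = [2.0 * x + 1.2 for x in xs]  # different but related problem, short budget

pretrained = train(xs, task_a, epochs=200)
fine_tuned = train(xs, task_b, *pretrained, epochs=5)  # start from task-A weights
from_scratch = train(xs, task_b, epochs=5)             # start from zero
print("fine-tuned loss:  ", mean_loss(*fine_tuned, xs, task_b))
print("from-scratch loss:", mean_loss(*from_scratch, xs, task_b))
```

With the same budget, the run that starts from the task-A weights ends with a much lower task-B loss, because it begins close to the new solution. In real deep learning the reused part is usually most of a large network (for example, its feature-extracting layers), often with a new output layer trained on the second task.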
