- Published on January 18, 2021

- In Deep Tech

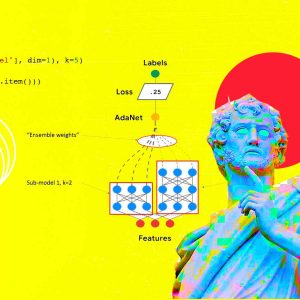

AdaBoost Vs Gradient Boosting: A Comparison Of Leading Boosting Algorithms
