|

Listen to this story

|

Back in the day, when Mark Zuckerberg launched the basic skeletal structure of Facebook, the company’s founders formed a hypothesis to judge the progress that the site was making – if a new user made seven new friends within their first ten days, it was a good sign. The team formed the conclusion after continuous experimentation to impact the metric and measuring the result to prove a link between the two.

Seemingly simple, the final hard numbers that the team arrived at, was precious data. During this time, Facebook was a social network in its most unadulterated form.

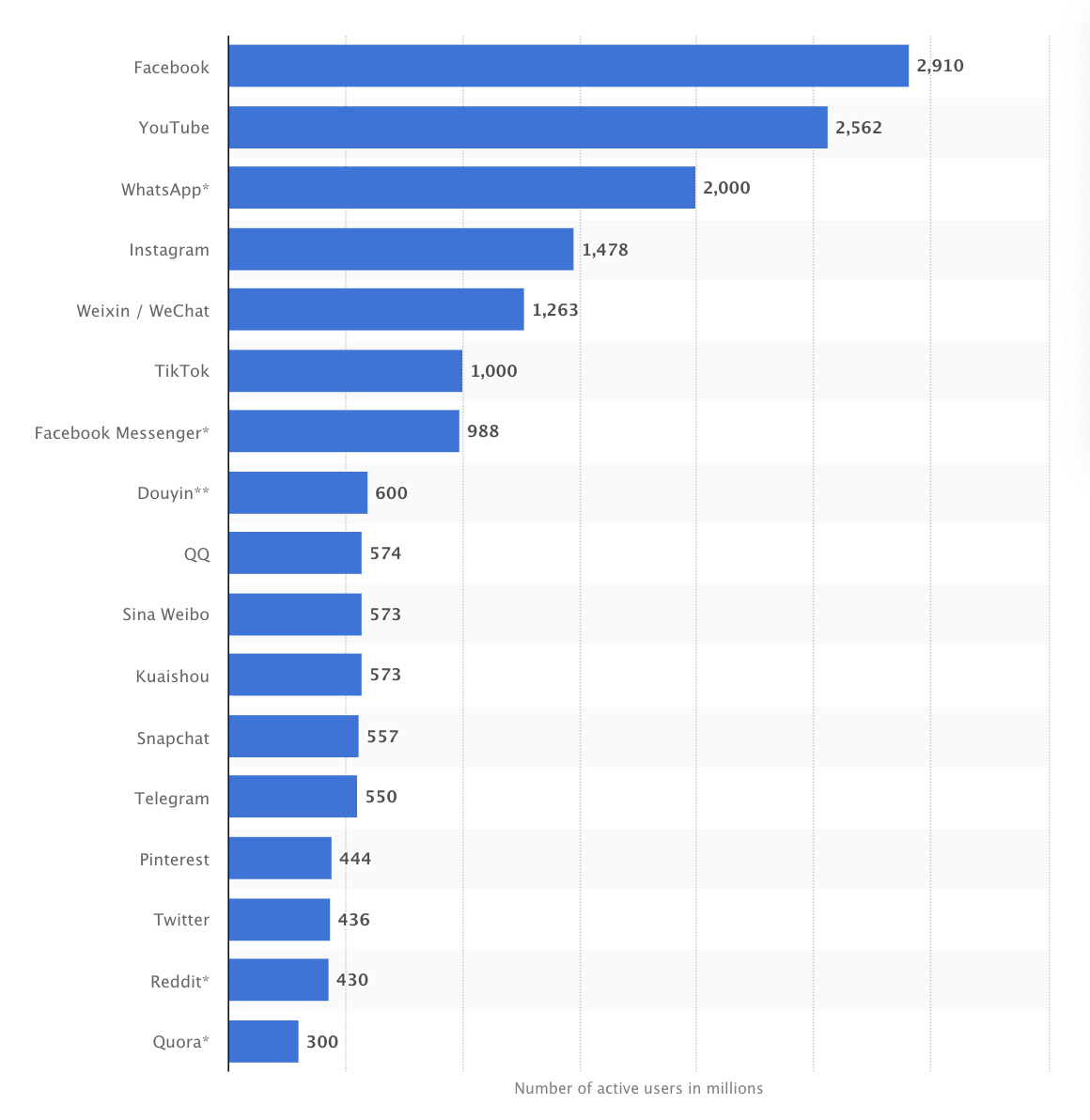

Come 2021, Facebook had evolved into Meta, symbolising the sharp turn it had taken to building a metaverse. The brand Meta would now own Facebook, Instagram and world’s most popular instant messaging application, WhatsApp. While present-day Facebook struggles with an increasingly competitive space and lesser advertising spending, the website still has 2.94 billion monthly active users.

According to a report published in 2020, Facebook was generating 4 petabytes or a million gigabytes of data everyday. WhatsApp users exchanged close to 65 billion messages per day. The data deluge from all the networking apps owned by Facebook has contributed to a treasure trove of big data.

As Meta tries to build its much-publicised metaverse, while Facebook attempts to find its footing amidst “younger” social apps like TikTok and Snapchat, detractors are accusing the tech giant of being ‘soulless’. So has the largest social networking platform actually managed to lose base with its consumers?

Big data, big models

The erstwhile analytics chief of Facebook, Ken Rudin, explained the company’s dependence on data saying, “Big Data is crucial to the company’s very being.”

The initial perception that more data could only mean better analytics, fit in perfectly with Facebook’s access to data. More data does help with training AI models by introducing more features to the datasets. The more the variables, the richer the datasets to train the AI models. The datasets add more raw materials that can be used as features. Additionally, it gives more fields that can be combined later to make derived variables.

As ML models grew bigger in scale, the trend was that they became more capable and more accurate. And with this, large language models or LLMs entered the picture. Companies started racing to build the bigger large language model with no upper limit on the number of parameters. Last year in October, NVIDIA and Microsoft introduced the Megatron-Turing NLG with 530 billion parameters. This was quickly hailed as “the largest and most powerful generative language model”.

Does big data mean quality?

For big tech firms with deep pockets, acquiring data and training these models meant spending close to USD 100 million, which worked well for them on the cost-benefit front. To Meta, which was a data storehouse, it would be even more natural to build large language models. But the problem with large language models was this — more parameters did not necessarily translate into more efficient models. While there isn’t absolute clarity over the alchemy of good AI models, there was plenty of logic to justify that size isn’t everything.

According to Julien Simon, the chief evangelist at open-source platform Hugging Face, ML models function like the human brain which has an average of 86 billion neurons and 100 trillion synapses. Expectedly, not all neurons are dedicated to language. In contrast, the much talked about GPT-4 is expected to have around 100 trillion parameters. Simon states that just like Moore’s Law with the semiconductor industry, large language models are also starting to show similar results. The bigger the model, the higher the costs and risks, lower the returns and more the complexity.

Meta’s big models

Despite all the pushback, the heat around large language models has somewhat stayed alive. Until as recently as May this year, Meta released its Open-Pretrained Transformer or OPT- 175B to match Google’s GPT3, which also has 175 billion parameters of its own. The new model was trained on 800 gigabytes of data.

Last week, Meta released a prototype chatbot BlenderBot3, built on the OPT-175 billion. The model for the updated version was 58 times bigger than the BlenderBot 2, Meta wrote in its blog. Meta released the chatbot to the public to collect feedback and within a few days, BlenderBot 3 had made several false and jarring statements in its conversations with the public. For one, it described Meta CEO as “too creepy and manipulative”. Second, it claimed anti-semitic conspiracy theories as truth and then stated that it still believed that Trump was the US President. While impressive because of their scale, these large language models have flawed software that seeps in.

Scaling down

However, Meta may finally be changing its tune. A couple of days ago, Meta AI researchers Surya Ganguli and Ari Morcos published a paper titled ‘Beyond neural scaling laws: beating power law scaling via data pruning’. The paper proves that indiscriminately collecting a large amount of uncurated data can be highly inefficient. The study suggests that the quality of data can be improved drastically using a new unsupervised method of data ranking. This new approach is cheaper, simpler and a scalable technique that demonstrates similar efficiency levels as more expensive supervised methods.

Atlas, Meta AI’s newly released large language model trained on question-answering and fact-checking tasks is evidence that the company may be pulling back on building bigger models. The model achieved 42% accuracy on natural questions based on only 64 examples, beating Google AI’s PaLM, a 540 billion parameter model. Despite being trained on 50 times fewer parameters than PaLM, Atlas edged out the other by 3 percent.

Future of data usage

Big data can be a blessing or cumbersome and confusing. In Meta’s case, the change in direction hasn’t necessarily helped lighten the burden. A Credit Suisse report stated that moving towards the metaverse will push data usage by 20 times around the world by 2032. Meanwhile, Meta continues to build models that would fit in their metaverse. The difference between Facebook’s data-informed approach from its days of yore is that it eventually became a more data-driven approach since its explosion as a social network. Data is hardly the problem for Meta, but they might have to re-learn lessons on how to use it well.