“Only 30% of software engineering happens on the laptop. The real 70% starts after you commit the code,” says Jyoti

From groundwater and slopes to carbon sinks, tools like CatBoost are enabling Indian scientists to extract insights and drive sustainability.

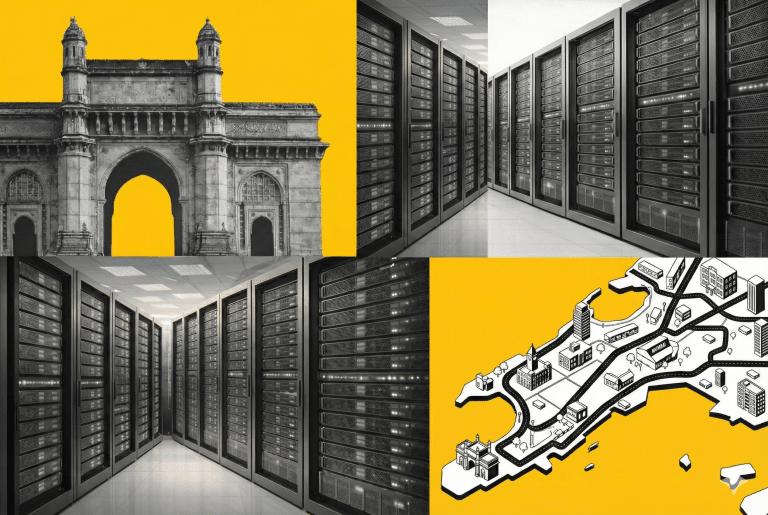

With capacity expected to more than double this decade, the industry is investing in training as graduates struggle to meet

Arrcus positions itself as a horizontal software layer that can run across different types of networking hardware.

With CoreWeave’s listing and Fractal Analytics going for an IPO, an array of AI companies are now looking to raise

Defenders must be active at all times, while attackers need only one opportunity.

Without compute buildup beyond metros, the next wave of digital adoption will be constrained.

Land prices are among the highest in the country, but total build economics remain competitive by global standards.