
We tend to think of robots as super-smart, or at least smarter than humans. Yet robots often fail at the simplest of human tasks. Simple as these tasks may be, executing them accurately requires a complex understanding of the world, something robots innately lack. Present-day robots can execute short, hard-coded instructions precisely, but very often fail to carry out long-horizon tasks.

Language models suffer from an inherent challenge of their own. They generally do not interact with the physical environment or observe the outcomes of their responses, so they can end up suggesting actions that are illogical, impractical or unsafe to execute in a real-world scenario.

Google Research recently published a paper titled ‘Do As I Can, Not As I Say: Grounding Language in Robotic Affordances’, in which the team presents a novel approach that enables robots to take high-level instructions from a language model and execute them correctly, choosing only steps that are feasible and contextually appropriate.

For the research, the team used PaLM (Pathways Language Model), a large language model developed by Google Research, and a helper robot from Everyday Robots. Language models contain enormous amounts of information about the real world, which can be quite helpful for robots.

However, because both language and real-world environments are complex, a language model may suggest something that appears reasonable but is unsafe or unrealistic in a given environment. For example, if a user asks for help after spilling milk, the language model might suggest using a vacuum cleaner. The suggestion sounds reasonable, since vacuum cleaners are used to clean up messes, but it is impractical for a liquid spill. That is why it is important to ground AI in real-world situations.

PaLM SayCan

This is where PaLM SayCan comes into play. PaLM suggests possible ways to accomplish a task based on its language understanding, and the robot's models determine which of those steps can actually be carried out with its practical skill set. The combined system thus identifies approaches that are both useful and achievable.

The PaLM SayCan approach proposed in the paper leverages the knowledge within the LLM for physically grounded tasks. The ‘Say’ component determines which actions are useful for reaching a high-level goal, while the ‘Can’ component provides affordances: it grounds the model in the real world by determining which actions are possible to execute in the current environment.
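To make the Say/Can split concrete, here is a minimal Python sketch of how the two scores might be combined to pick the next skill, following the idea of multiplying the language model's usefulness score by the affordance (success-probability) score. The function name `saycan_select` and every probability below are hypothetical, chosen only to mirror the spilled-milk example.

```python
# Minimal sketch of SayCan-style skill selection. All scores are hypothetical.
# "Say": the language model's probability that a skill is a useful next step.
# "Can": a learned value function's probability that the skill succeeds now.


def saycan_select(say_scores: dict[str, float],
                  can_scores: dict[str, float]) -> tuple[str, float]:
    """Return the skill with the highest combined Say x Can score."""
    combined = {skill: say_scores[skill] * can_scores[skill]
                for skill in say_scores}
    best = max(combined, key=combined.get)
    return best, combined[best]


# Hypothetical scores for the spilled-milk example.
say = {"find a sponge": 0.30, "find a vacuum cleaner": 0.45, "done": 0.05}
can = {"find a sponge": 0.90, "find a vacuum cleaner": 0.10, "done": 0.50}

skill, score = saycan_select(say, can)
print(skill, round(score, 2))  # find a sponge 0.27
```

On language alone, the vacuum cleaner scores highest, but its low affordance score pushes the sponge to the top. In a full SayCan-style planner, this selection would be repeated one step at a time, appending each chosen skill to the plan, until a "done" skill is selected.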

PaLM SayCan is significant in several ways. It makes it easier for people to communicate with robots through text or speech. It enables robots to improve their overall performance and execute complex tasks using the knowledge encoded in the LLM. It also helps robots understand how people communicate, making interaction between humans and robots more natural. Finally, it helps robotic systems process complex, open-ended prompts and respond reasonably and sensibly.

Reasoning through chain-of-thought prompting

PaLM SayCan uses chain-of-thought prompting for reasoning. Chain-of-thought prompting is a way of improving the reasoning abilities of language models: the model is prompted to break a larger problem down into a series of intermediate steps that are worked through individually. This enables LLMs like PaLM to solve complex reasoning problems that standard prompting generally cannot.
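As an illustration of the idea, the Python sketch below assembles a few-shot chain-of-thought prompt in which each worked example spells out its reasoning before the plan, and the new request ends with an open "Explanation:" line for the model to continue. The "Human:", "Explanation:" and "Robot:" labels, the `build_cot_prompt` helper and the example text are all hypothetical; this is not the exact prompt used in the paper.

```python
# Hypothetical few-shot chain-of-thought prompt builder. The labels and the
# worked example are illustrative assumptions, not the paper's exact prompt.

EXAMPLES = [
    {
        "instruction": "I spilled my milk, can you help?",
        "explanation": "Milk is a liquid, so the user needs something that "
                       "can wipe it up. A sponge works; a vacuum cleaner does not.",
        "plan": "1. find a sponge, 2. pick up the sponge, "
                "3. bring it to you, 4. done.",
    },
]


def build_cot_prompt(examples: list[dict[str, str]], instruction: str) -> str:
    """Lay out worked examples (reasoning before plan), then leave the model
    to continue with its own explanation for the new instruction."""
    lines = []
    for ex in examples:
        lines.append(f"Human: {ex['instruction']}")
        lines.append(f"Explanation: {ex['explanation']}")
        lines.append(f"Robot: {ex['plan']}")
        lines.append("")  # blank line between examples
    lines.append(f"Human: {instruction}")
    lines.append("Explanation:")  # the model's intermediate reasoning goes here
    return "\n".join(lines)


prompt = build_cot_prompt(EXAMPLES, "bring me rice chips from the drawer")
print(prompt)
```

The text the model generates after the final "Explanation:" is its chain of reasoning; in a SayCan-style system, the plan steps that follow would still be checked against the robot's affordance scores.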

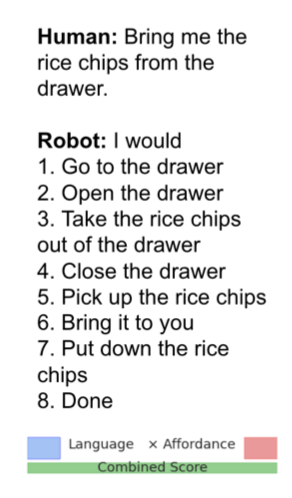

Thus, if the user prompts PaLM SayCan with “bring me rice chips from the drawer”, the robot uses chain-of-thought prompting to break the task down into a number of steps, as shown below, and then accomplishes them one by one.

Several instances of how a robot uses chain-of-thought reasoning are shown below.

The Test

The Google Research team placed several robots in a kitchen environment and evaluated them on 101 instructions. These instructions were ambiguous and linguistically complex. The objective was to assess whether the robots could choose the right skills for each instruction and how successfully they could execute them.

The tests showed that using PaLM with affordance grounding, i.e. PaLM SayCan, improves the robot’s performance. The robotic system chose the correct sequence of skills 84% of the time and executed them successfully 74% of the time, compared with FLAN SayCan (a smaller language model with affordance grounding), which planned accurately 70% of the time and executed successfully 61% of the time. The biggest improvement came in planning long-horizon tasks involving eight or more steps, where PaLM SayCan showed a 26% improvement.

Scope for the future

The test results are particularly intriguing because they demonstrate, for the first time, that improving a language model can also improve a robotic system, opening up the possibility that robotics could advance at a pace similar to that seen in language models.

This opens up several avenues for future research: how the information gained by grounding the LLM in real-world robotic experience can be used to improve the language model itself, whether natural language is the right ontology for programming robots, how robotic learning can be combined with advanced language models, and whether language models can serve as a pre-training mechanism for policies. Google has also provided an open-source robot simulation setup that could serve as a valuable resource for future research.