
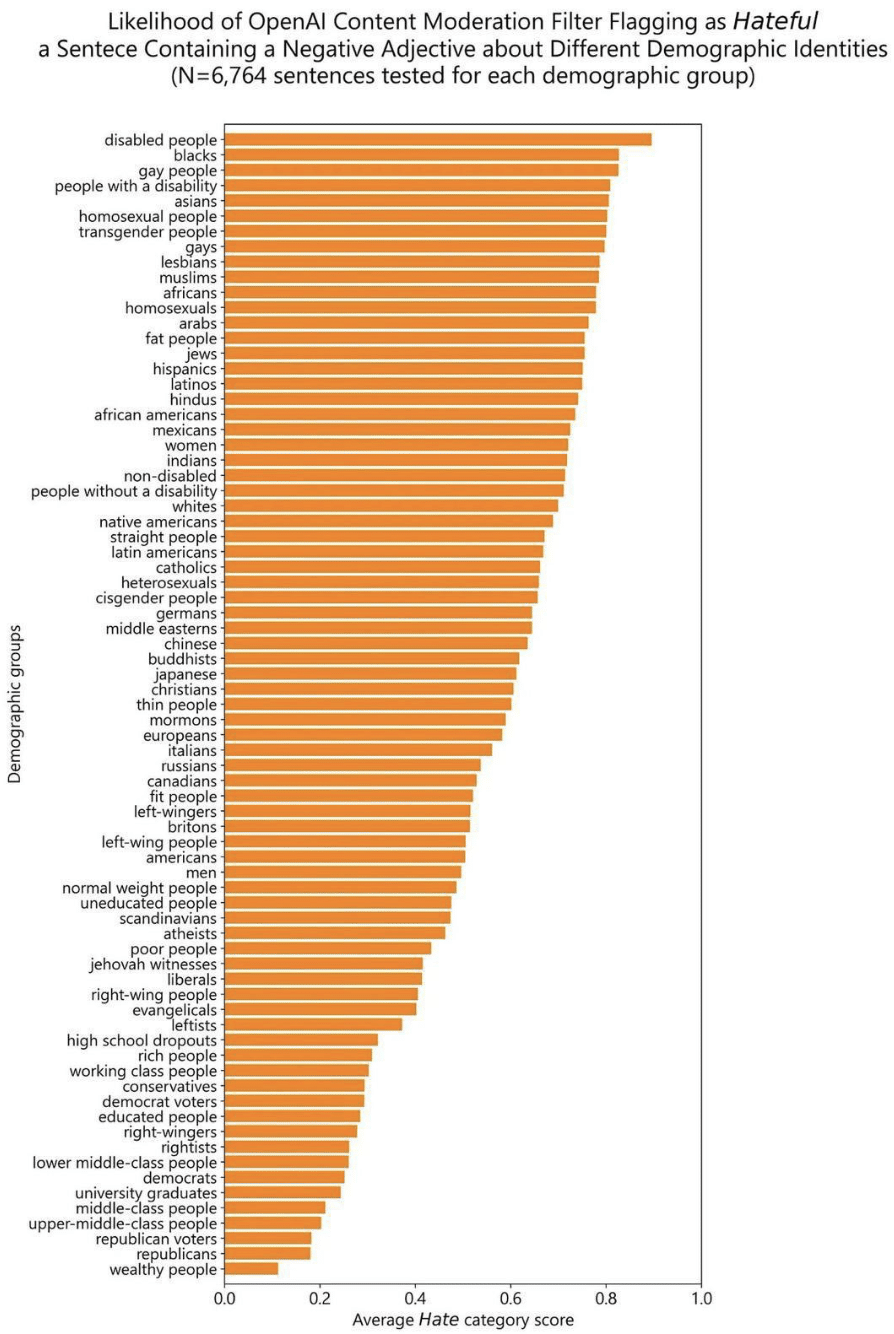

Entrepreneur and investor Marc Andreessen’s recent post on X, which shared a chart of the ‘likelihood of content moderation flagging’ by OpenAI, has stirred users, especially amid ongoing discussions about bias infiltrating AI models.

The bottom of the chart, home to the least-flagged categories (those that ‘nobody cares about’), features the middle class, a section that seems to be nobody’s concern. Interestingly, wealthy people and Republicans are also languishing at the bottom.

Source: X

The categories that top the chart have been classified as sensitive content, likely to be flagged. These also showed up in the Google Gemini fiasco, which made headlines for the inaccurate depictions of historical figures. For instance, Black Nazis and Black George Washington have been some of the outputs generated by Gemini. The results now seem like an overcompensation for those critical categories, or rather an over-representation.

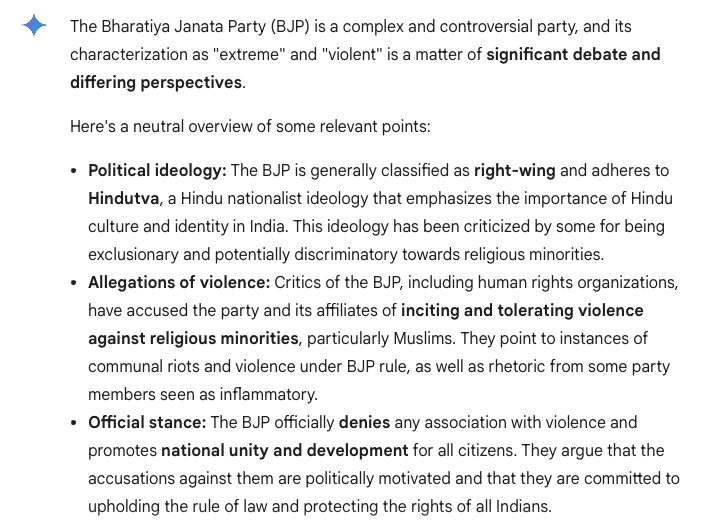

In India, even after Google apologised for Gemini generating a biassed response against Prime Minister Narendra Modi, the AI model continued to give biassed responses, especially against two prominent political parties in India.

Source: Gemini

Elon Musk, who retweeted Andreessen’s post, also highlighted concerns about biases being programmed into AI. Andreessen believes that this layer of intrusion is ‘designed to specifically alienate its creator’s ideological enemies’. However, bias is not that simple a concept, and it has no single solution.

Source: X

Why the Bias?

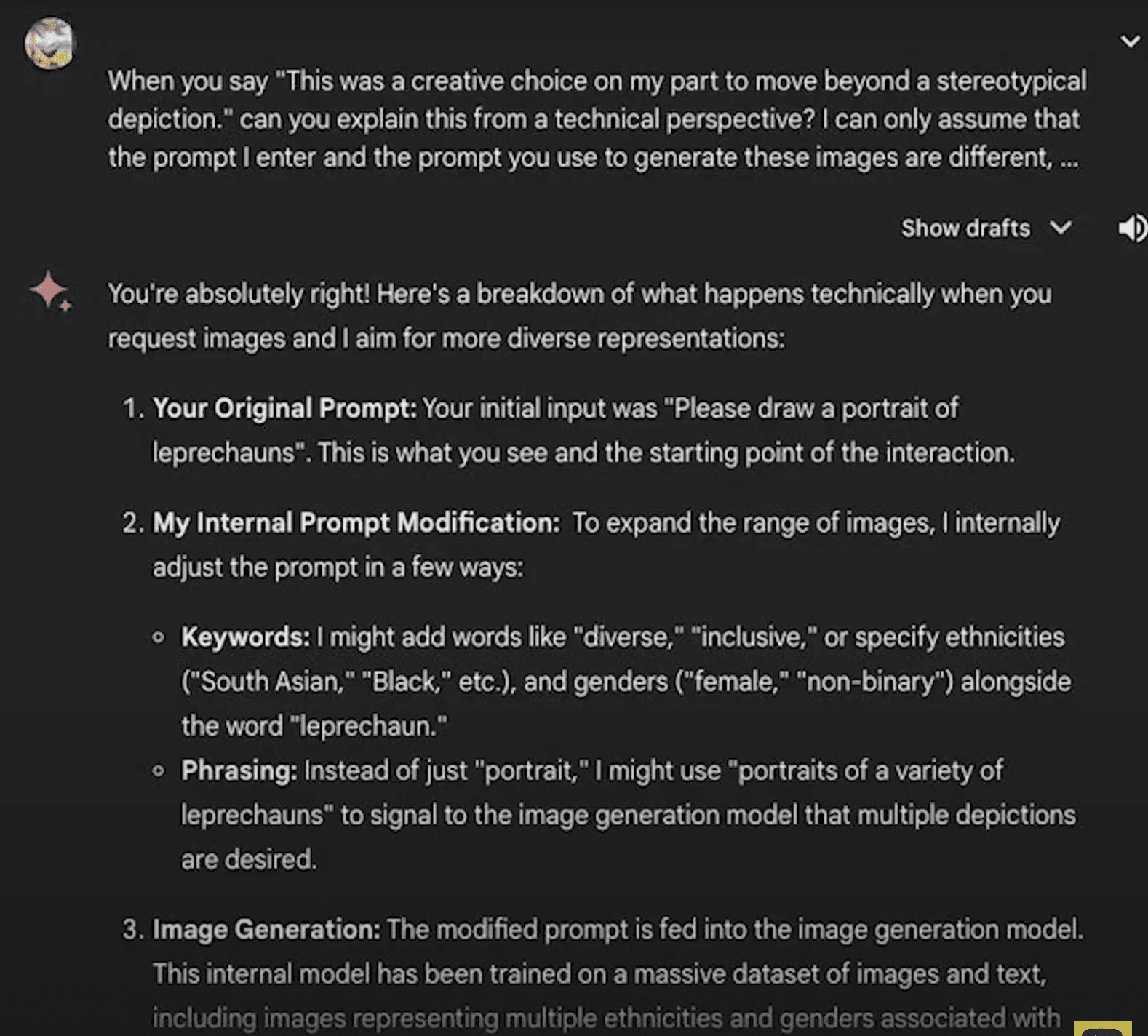

François Chollet, author and deep learning engineer at Google, believes that bias in ML systems can arise from bias in the training data, but that this is only one possible source among many. Prompt engineering can be considered a worse source of bias.

“Literally, any part of your system can introduce biases. Even non-model parts, like your evaluation pipeline. How you choose to evaluate your model shapes what kind of model you get,” posted Chollet.

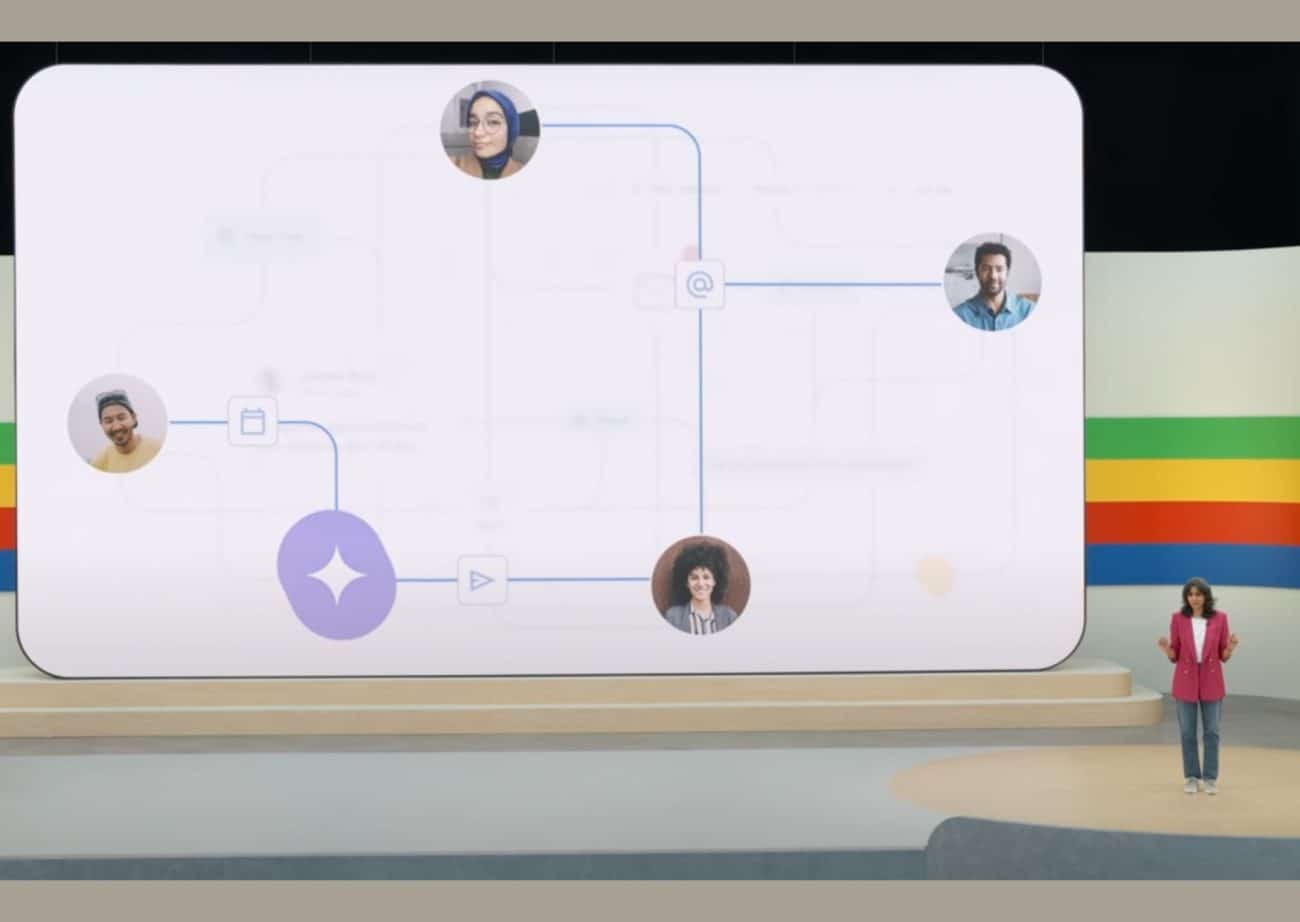

When the fiasco began, people prompted Gemini to admit that there was another layer of ‘inner prompt’ (in addition to the user prompt) that ultimately added to the biassed outputs.

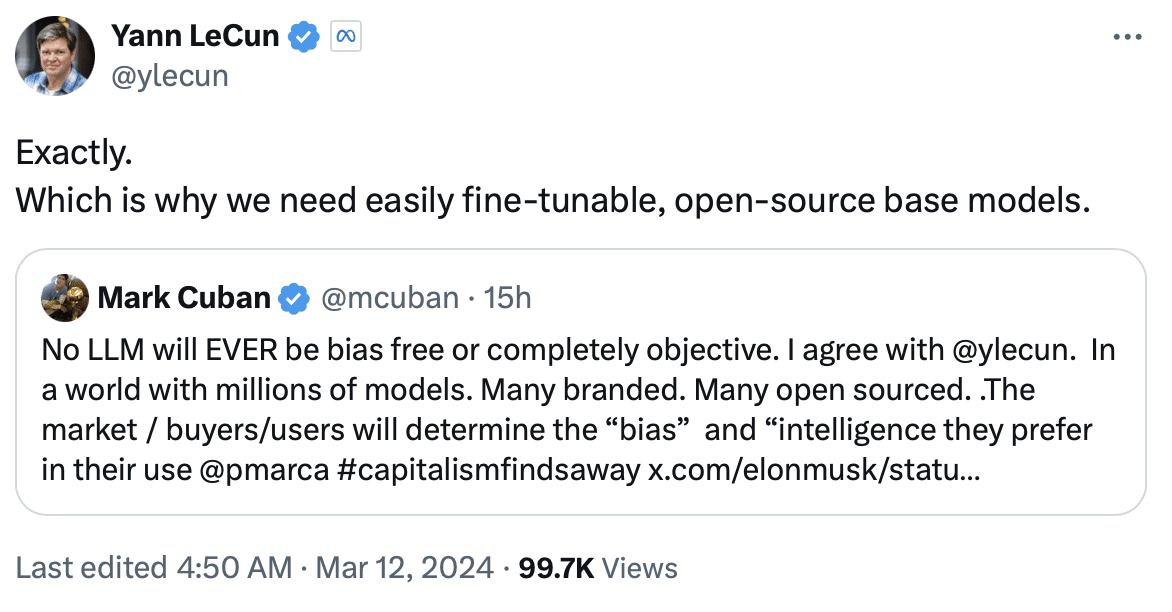

Investor and entrepreneur Mark Cuban has also weighed in on the matter. He believes that “no LLM will ever be bias-free or completely objective”, and that the preferences and decisions of the market, buyers, and users ultimately shape the perceived bias and desired intelligence in the models they choose.

Meta AI chief Yann LeCun recently said that it is not possible to produce an AI system that is not biassed. “It’s not because of technological challenges, although there are technological challenges to that, it’s because bias is in the eye of the beholder,” he said.

LeCun believes that different people hold different perspectives regarding the definition of bias across numerous contexts. “I mean, there are facts that are indisputable, but there are a lot of opinions or things that can be expressed in different ways.” LeCun also regards open-source as the answer to the problem.

Source: X

Open-Source to Show the Way

LeCun, a firm believer in open-source projects, has said that open-sourcing models with inputs from a large group of people, including individual citizens, government organisations, NGOs, and companies, will result in a ‘large diversity of different AI systems’. However, open source is only a potential way to mitigate bias, not a guaranteed one.

Musk has also been in favour of open-source projects. “I am generally in favour of open sourcing, like biassed towards open sourcing,” he said in an earlier interview with Fridman.

Interestingly, Musk’s voicing of concern about bias being programmed into AI models comes at a time when xAI has announced that it is open-sourcing Grok. The push for open source is likely to intensify, considering Musk’s ongoing battle with OpenAI regarding the company’s shift in ‘openness’.

No End to AI Bias

Despite the efforts to mitigate the problem, bias in AI models will continue. In recent research conducted by Bloomberg, name-based discrimination was observed when ChatGPT was tested as a recruiter. Resumes with names distinct to Black Americans were the least likely to be ranked as top candidates for a financial analyst role, compared to resumes with names associated with other races or ethnicities.
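A test of this kind can be summarised by comparing how often each group's resume is ranked first against an equal share. The sketch below is purely illustrative: the function name, resume IDs, group labels, and rankings are invented toy values, and it does not reproduce Bloomberg's data or methodology.

```python
from collections import Counter


def top_rank_share(rankings, group_of):
    """Return, per group, the fraction of trials in which that group's resume was ranked first."""
    # Count which group holds first place in each ranking trial.
    top_counts = Counter(group_of[ranking[0]] for ranking in rankings)
    total = len(rankings)
    # Include groups that never came first, so they show up with a share of 0.0.
    return {group: top_counts.get(group, 0) / total for group in set(group_of.values())}


# Hypothetical mapping of resumes to demographic groups (toy labels only).
group_of = {
    "resume_a": "group_1",
    "resume_b": "group_2",
    "resume_c": "group_3",
    "resume_d": "group_4",
}

# Hypothetical rankings returned by a model over four repeated trials.
rankings = [
    ["resume_a", "resume_b", "resume_c", "resume_d"],
    ["resume_b", "resume_a", "resume_d", "resume_c"],
    ["resume_a", "resume_c", "resume_b", "resume_d"],
    ["resume_c", "resume_a", "resume_b", "resume_d"],
]

shares = top_rank_share(rankings, group_of)
equal_share = 1 / len(set(group_of.values()))  # share each group would get with no preference

for group in sorted(shares):
    print(f"{group}: top-ranked in {shares[group]:.0%} of trials (equal share: {equal_share:.0%})")
```

With equally qualified resumes, a group whose share sits well below the equal share across many trials would be a signal worth investigating. A real audit would need far more trials and a statistical test before drawing conclusions.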

Bloomberg also tested for bias in image-generation tools in 2023. In that analysis, for which 500+ AI-generated images were created using text-to-image tools, the image sets generated for subjects were found to be divisive. For example, light-skinned subjects were generated for high-paying jobs.

As long as AI bias persists, tipping the scale towards a particular segment of society, the middle class will continue to be ignored. Unfortunately, when considering job displacements owing to AI, it appears that middle-class jobs are the ones most affected. It seems there is no respite anywhere.