In Reinforcement learning, the generalization of the agents is benchmarked on the environments they have been trained on. In a supervised learning setting, this would mean testing the model using the training dataset.

OpenAI has open-sourced Procgen-benchmark emphasizing the generalization for RL agents as they struggle to generalize in new environments.

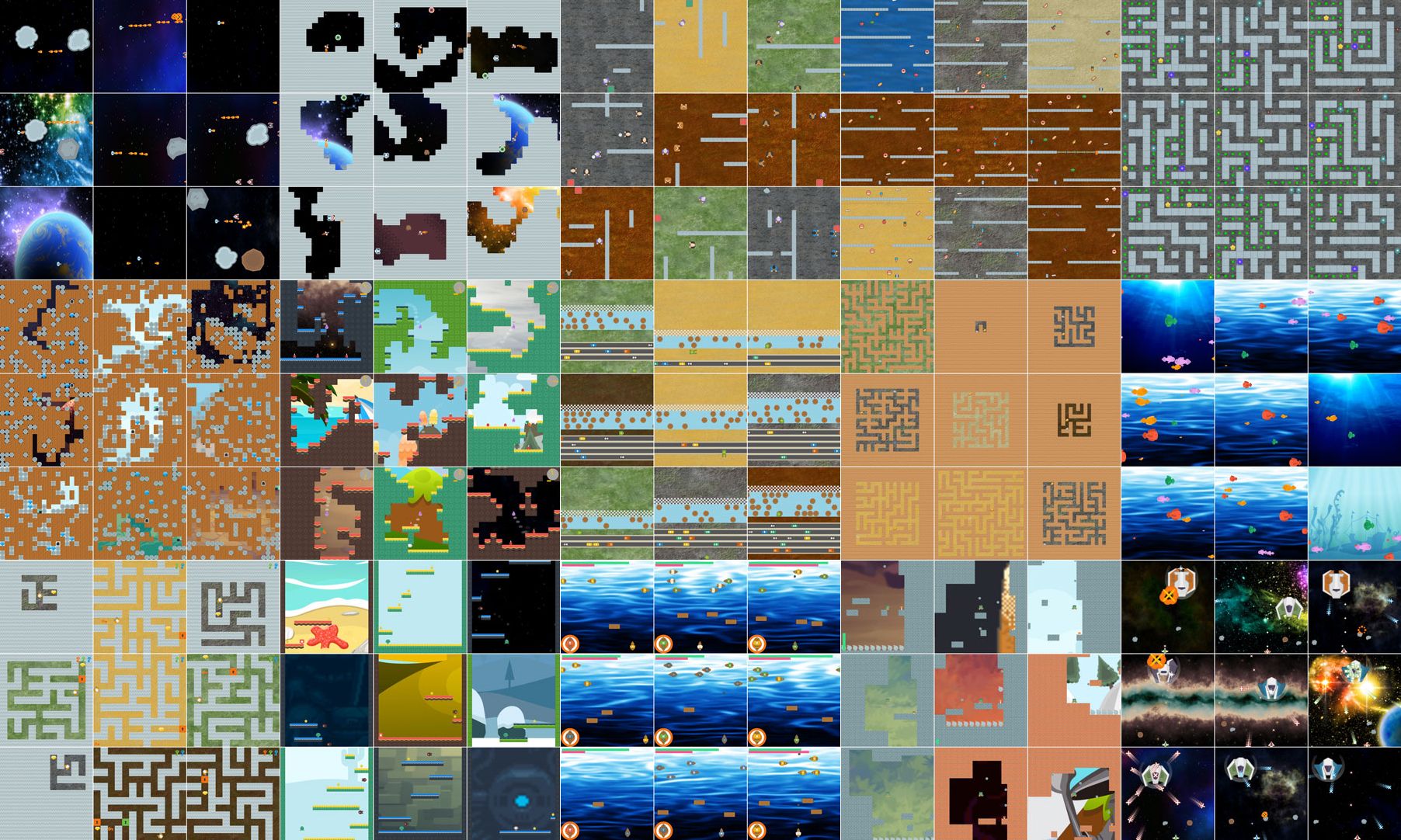

Procgen consists of 16 simple-to-use procedurally-generated gym environments which provide a direct measure of how quickly a reinforcement learning agent learns generalization skills. The environments run at high speed (thousands of steps per second) on a single core and The observation space is a box space with the RGB pixels the agent sees in a NumPy array of shape (64, 64, 3). The expected step rate for a human player is 15 Hz.

Benchmarking RL agents using Arcade Learning Environment has been considered a standard because of the diverse environment provided by ALE.

Nevertheless, the question must be asked whether the agents are learning generalization or they are simply memorizing the specifics of the environments?

Procedurally Generated Environments

To support this notion, Procgen has environments that are procedurally generated. Let’s understand this from one of the environment descriptions,

“Inspired by the Atari game “MsPacman”. Maze layouts are generated using Kruskal’s algorithm, and then walls are removed until no dead-ends remain in the maze. The player must collect all the green orbs. 3 large stars spawn that will make enemies vulnerable for a short time when collected. A collision with an enemy that isn’t vulnerable results in the player’s death. When a vulnerable enemy is eaten, an egg spawns somewhere on the map that will hatch into a new enemy after a short time, keeping the total number of enemies constant. The player receives a small reward for collecting each orb and a large reward for completing the level.”

Procedural generation also helped to develop intrinsically diverse environments, that forces the agent to learn robust policies to generalize instead of just overfitting the environment. Hence, finding the sweet spot between exploration and exploitation.

Features

All Procgen environments were designed keeping the following criterion in mind,

- High Diversity – Higher diversity presents agents with a generalization challenge.

- Fast Evaluation – The environments support a thousand steps per second on a single core machine for faster evaluation.

- Tunable Efficiency – All the environments support Easy, Medium and Hard levels of gameplay. However, the easy level uses 1/8th of resources to create the environment.

The above features were cited from the procgen release article by OpenAI.

Comparison with Gym Retro

The gym retro environment also supports diverse environments to train RL agents. However, there is a vast gap in terms of design and features when compared to procgen

- Faster – Gym Retro environments are already fast, but Procgen environments can run >4x faster.

- Non-deterministic – Gym Retro environments are always the same, so you can memorize a sequence of actions that will get the highest reward. Procgen environments are randomized so this is not possible.

- Customizable – If you install from source, you can perform experiments where you change the environments, or build your own environments. The environment-specific code for each environment is often less than 300 lines. This is almost impossible with Gym Retro.

Training Agents to Play in Procgen Environment

The following snippet will train an RL agent to play in various environments such as Coin run, Starpilot, and Chaser supported by procgen.

import imageio

import time

import numpy as np

import gym

from stable_baselines.common.vec_env import DummyVecEnv, VecVideoRecorder

from stable_baselines.ddpg.policies import CnnPolicy

from stable_baselines.common.policies import MlpLstmPolicy, CnnLstmPolicy

from stable_baselines import A2C, PPO2

video_folder = '/gdrive//videos'

video_length = 5000

env_id = "procgen:procgen-chaser-v0"

env = DummyVecEnv([lambda: gym.make(env_id)])

model = PPO2("CnnPolicy", env, verbose=1)

s_time = time.time()

model.learn(total_timesteps=int(1e4))

e_time = time.time()

print(f"Total Run-Time : , {round(((e_time - s_time) * 1000), 3)} seconds")

# Record the video starting at the first step

env = VecVideoRecorder(env, video_folder, record_video_trigger=lambda x: x == 1000,

video_length=video_length, name_prefix="trained-agent-{}".format(env_id))

env.reset()

for _ in range(video_length + 1):

action = [env.action_space.sample()]

obs, _, _, _ = env.step(action)

# Save the video

env.close()

The above agent was trained for 10,000 timesteps using CNN policy and Proximal Policy Optimization.

Have a look at the agent’s gameplay in the below video, the agent was trained under 3 minutes using GPU for the star-pilot environment. Have a look till the end to see the rational behaviour of the agent.

The benchmark published by OpenAI clearly reveals the vast gap in the performance of agents in train and test environment. It also highlights the flaw in using the same sequence of steps for training the agents clearing the longstanding puzzle in Reinforcement Learning research.