People often get stuck when asked to improve the performance of an existing predictive model. Usually they try different algorithms and compare the results, but often the model does not improve. Here are some steps you can take to boost your existing models.

1. Add more data

More data is usually useful. It helps us capture more of the variance present in the data. Sometimes we do not have the option of getting additional training data, for example when competing in data science competitions. But when working on client projects, you can ask for more data if you need it.

The question is: when should we ask for more data?

We cannot put a single number on how much data is enough. It depends on the problem you are working on and the algorithm you are using. For example, when working with time series data, we should look for at least one year of data. And whenever you are dealing with neural network algorithms, you are advised to get more training data, otherwise the model may not generalize.

2. Feature engineering

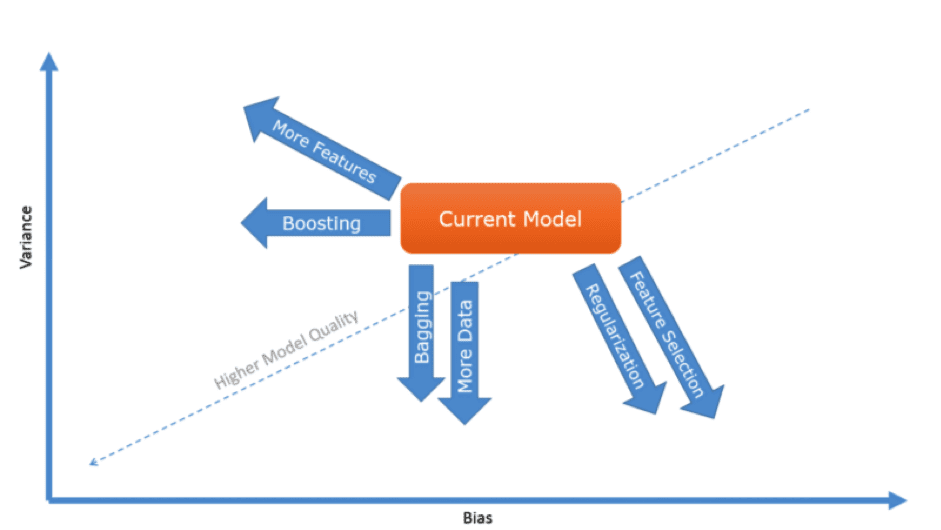

Adding new features decreases the bias of the model at the expense of its variance. New features can help algorithms explain the variance in the data more effectively. When we do hypothesis generation, we should spend enough time on the features the model requires, and then create those features from the existing data sets. For example:

- We want to predict daily withdrawals from ATMs. Here we might expect people to withdraw larger amounts at the start of the month, possibly because they have just received their salaries or need to pay various monthly expenses. So we create a new feature for this.

- When working on a fraud detection model, we can use the ratio of income to loan as a new feature.
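The two feature ideas above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the 5-day window for "start of the month", the record layout, and the function names `is_start_of_month` and `income_to_loan_ratio` are hypothetical choices, not something prescribed by the examples.

```python
from datetime import date
from typing import Optional


def is_start_of_month(day: date, window: int = 5) -> int:
    """Return 1 if the date falls within the first `window` days of its month.

    The 5-day default is an assumption; pick a window that fits your data.
    """
    return 1 if day.day <= window else 0


def income_to_loan_ratio(income: float, loan: float) -> Optional[float]:
    """Return income / loan, or None when the loan amount is zero."""
    if not loan:
        return None
    return income / loan


# Hypothetical ATM records: (date, amount withdrawn)
withdrawals = [(date(2023, 1, 2), 5400.0), (date(2023, 1, 17), 2100.0)]
atm_features = [
    {"date": d, "amount": amount, "start_of_month": is_start_of_month(d)}
    for d, amount in withdrawals
]

# Hypothetical loan applicants for the fraud detection example
applicants = [
    {"income": 60000.0, "loan": 20000.0},
    {"income": 35000.0, "loan": 0.0},
]
for row in applicants:
    row["income_to_loan"] = income_to_loan_ratio(row["income"], row["loan"])
```

Returning `None` for a zero loan keeps the ratio from raising a division error; how to treat such rows afterwards is a separate modelling decision.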

3. Feature selection

This is one of the most important aspects of predictive modelling. It is advisable to identify the important features and rebuild the model using only those important and significant features.

For example, say we have 100 variables. Some of them will drive most of the variance of the model. If we select features on a p-value basis alone, we may still be left with more than 50 variables. In that case, you should look at other measures, such as the contribution of each individual variable to the model. If 90% of the variance of the model is explained by only 15 variables, then keep only those 15 variables in the final model.
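One possible way to apply the 90% rule is to rank variables by their contribution and keep adding them until the cumulative share reaches the threshold. The sketch below assumes you already have a contribution score per variable (for example from your model's importance output); the scores and variable names here are made up for illustration.

```python
from typing import Dict, List


def select_top_features(contributions: Dict[str, float],
                        threshold: float = 0.90) -> List[str]:
    """Return the smallest set of top-ranked features whose cumulative
    share of the total contribution reaches `threshold`."""
    total = sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)

    selected: List[str] = []
    cumulative = 0.0
    for name, score in ranked:
        selected.append(name)
        cumulative += score / total
        if cumulative >= threshold:
            break
    return selected


# Hypothetical contribution scores for five variables
contributions = {
    "balance": 0.50,
    "age": 0.30,
    "tenure": 0.15,
    "region": 0.04,
    "channel": 0.01,
}
chosen = select_top_features(contributions, threshold=0.90)
```

With these made-up scores, the first two variables cover 80% and the third takes the total to 95%, so three variables are kept.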

4. Missing value and Outlier Treatment

Outliers can distort your model so badly that it sometimes becomes essential to treat them. Some data may simply be wrong or illogical. For example, I was once working on airline industry data in which some passengers had an age of 100+ and some were listed as 2000 years old. It is illogical to use this data as is. The cause is hard to pin down, but it is likely that some users intentionally entered their age incorrectly for privacy reasons. Another possibility is that they entered their birth year in the age column. Either way, these values appear to be errors that need to be addressed.

In the same way, missing values should be addressed, and how you treat them can play a role in boosting performance. For example, when working with time series data, we can replace missing values with the overall mean or with the month-wise mean. The month-wise mean is usually the more logical choice because seasonality can distort the overall mean. The effect will be visible in your predictions, and performance should get better.

Missing value and outlier treatment are part of the modelling process. You may be wondering how they can help boost performance. Both issues can be addressed in several ways, and you have to identify which way is best for a given task. The right method will lead to a performance improvement.
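As a rough sketch of both treatments: implausible ages can be turned into missing values, and missing values in a time series can be filled with the mean of the same month. The cut-off of 100 years, the `(month, value)` layout, and the function names are assumptions made for this example only.

```python
from collections import defaultdict
from statistics import mean


def clean_ages(ages, max_age=100):
    """Replace ages outside 0..max_age with None so they are treated as missing.

    The max_age cut-off is an assumption; choose one that fits your domain.
    """
    return [a if a is not None and 0 <= a <= max_age else None for a in ages]


def impute_monthwise(series):
    """Fill missing values in a list of (month, value) pairs with the mean of
    the observed values for the same month. Falls back to the overall mean
    when a month has no observed values at all."""
    by_month = defaultdict(list)
    for month, value in series:
        if value is not None:
            by_month[month].append(value)

    month_means = {m: mean(vals) for m, vals in by_month.items()}
    overall_mean = mean(v for _, v in series if v is not None)

    return [
        (m, v if v is not None else month_means.get(m, overall_mean))
        for m, v in series
    ]


# Hypothetical passenger ages, including a birth year entered as an age
ages = clean_ages([34, 2000, 151, 58, 1975])

# Hypothetical seasonal series: month 1 is low season, month 7 is high season
series = [(1, 100.0), (1, 120.0), (1, None), (7, 300.0), (7, None), (7, 340.0)]
filled = impute_monthwise(series)
```

In this made-up series the overall mean is 215, which would be too high for January and too low for July; the month-wise means (110 and 320) respect the seasonality.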

5. Ensemble Models

Ensemble modelling is one of the most popular techniques for improving modelling outcomes. Bagging (Bootstrap Aggregating) and Boosting are two of the approaches that can be used. These methods are generally more complex and more of a black box.

We can also combine several weak models and produce better results by taking the simple average or weighted average of their predictions. The idea behind ensemble modelling is that one model can be better at capturing the variance of the data while another is better at capturing only the trend. In such cases, ensemble methods work very well.
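Averaging predictions is simple enough to sketch directly. The snippet below assumes each model has already produced a list of predictions for the same rows; the prediction values and weights are invented for illustration, and in practice the weights would typically come from validation performance.

```python
def simple_average(predictions):
    """Average the predictions of several models row by row."""
    return [sum(values) / len(values) for values in zip(*predictions)]


def weighted_average(predictions, weights):
    """Weighted average of several models' predictions, row by row."""
    if len(predictions) != len(weights):
        raise ValueError("need exactly one weight per model")
    total_weight = sum(weights)
    return [
        sum(w * p for w, p in zip(weights, values)) / total_weight
        for values in zip(*predictions)
    ]


# Hypothetical predictions from two models on the same three rows
model_a = [10.0, 20.0, 30.0]
model_b = [14.0, 18.0, 34.0]

simple = simple_average([model_a, model_b])
weighted = weighted_average([model_a, model_b], weights=[3.0, 1.0])
```

Here `model_a` is trusted three times as much as `model_b`, so the weighted result sits closer to `model_a` than the simple average does.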

6. Using a suitable machine learning algorithm

Choosing the right algorithm is a crucial step in building a better model. I was once working with a Holt-Winters model for prediction, but it performed badly for real-time forecasting, so I had to move to neural network models. Some algorithms are simply better suited to some data sets than others. Identifying the right type of algorithm is an iterative process, though. You need to keep experimenting with different algorithms until you land on a highly effective one.

7. Auto feature generation

The quality of the features is critical to the accuracy of the resulting machine learning model. No machine learning method will work well with poorly chosen features. But when we use deep learning algorithms, much of the manual feature engineering is no longer needed, because deep learning does not require you to provide the best possible features; it learns them on its own. If you are doing image classification or handwriting classification, then deep learning is for you. Image processing tasks have seen amazing results using deep learning. The picture below shows how features are created automatically in every layer. You can also observe how much the features improve after every layer.

8. Data distribution and Parameter Tuning

It is always better to explore the data thoroughly. The data distribution might suggest a transformation. The data might follow a Gaussian distribution or some other family of distributions; in that case, we can apply a small transformation before fitting the algorithm to get better predictions. Another thing we can do is fine-tune the parameters of the algorithm. For example, when we build a random forest classifier, we can tune the number of trees to build, the number of variables to consider at each split, and so on. Similarly, when we build a deep learning model, we can specify how many layers we need, how many neurons we want in each layer, and which activation function to use. Parameter tuning enhances model performance if we choose the right values for the algorithm's parameters.
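To illustrate how a distribution can suggest a transformation, here is a small sketch that measures skewness before and after a log transform. The log transform is just one common choice for right-skewed, non-negative data and is an assumption of this example, as are the sample values.

```python
import math


def skewness(values):
    """Population skewness: third central moment divided by the
    second central moment raised to the power 1.5."""
    n = len(values)
    mu = sum(values) / n
    m2 = sum((v - mu) ** 2 for v in values) / n
    m3 = sum((v - mu) ** 3 for v in values) / n
    return m3 / m2 ** 1.5


# Hypothetical right-skewed variable (a few very large values)
raw = [1, 2, 2, 3, 3, 4, 5, 8, 20, 100]

# log1p computes log(1 + x), which is also defined when x is zero
transformed = [math.log1p(v) for v in raw]

skew_before = skewness(raw)
skew_after = skewness(transformed)
```

A skewness closer to zero after the transformation suggests the variable is now more symmetric, which tends to suit algorithms that implicitly assume roughly Gaussian inputs.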

Conclusion:

Improving the performance of machine learning models is hard. The methods above are based on my own experience. Using ensemble methods requires a thorough knowledge of the algorithms involved.

Algorithms like Random Forest, XGBoost, SVM, and neural networks are used for high performance. Not knowing how to tune an algorithm to the training data is a barrier to getting higher performance, so we should always know how the algorithms can be tuned for different tasks. When we do parameter tuning, we should watch out for overfitting. You can use cross-validation methods to detect and reduce overfitting.
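As a minimal sketch of tuning with cross-validation, the snippet below picks the number of neighbours `k` for a tiny nearest-neighbour regressor by comparing the average validation error across folds. The regressor, the data, and the candidate values are all hypothetical and kept deliberately small; the interleaved fold assignment is also an assumption and would not be appropriate for time-ordered data.

```python
from statistics import mean


def knn_predict(train, x, k):
    """Average the targets of the k training points closest to x."""
    nearest = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    return mean(y for _, y in nearest)


def k_fold_indices(n, folds):
    """Yield (train_idx, valid_idx) pairs; row i belongs to fold i % folds."""
    for fold in range(folds):
        valid = [i for i in range(n) if i % folds == fold]
        train = [i for i in range(n) if i % folds != fold]
        yield train, valid


def cv_error(data, k, folds=5):
    """Mean absolute validation error of k-NN, averaged over all folds."""
    fold_errors = []
    for train_idx, valid_idx in k_fold_indices(len(data), folds):
        train = [data[i] for i in train_idx]
        errors = [
            abs(knn_predict(train, data[i][0], k) - data[i][1])
            for i in valid_idx
        ]
        fold_errors.append(mean(errors))
    return mean(fold_errors)


# Hypothetical data: y = 2x plus a small alternating noise term
data = [(float(x), 2.0 * x + (1.0 if x % 2 else -1.0)) for x in range(20)]

candidates = [1, 3, 5, 7]
scores = {k: cv_error(data, k) for k in candidates}
best_k = min(scores, key=scores.get)
```

Because every score is computed on rows the model did not see during fitting, the chosen `k` is less likely to be one that merely memorises the training data.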

I hope this article satisfies your curiosity about performance boosting techniques. Keep these techniques in mind whenever you try to boost the performance of existing models.