AdverTorch is a toolbox for studying adversarial robustness. It includes a variety of attack and defence implementations, as well as robust training mechanisms. AdverTorch is built on PyTorch and uses its dynamic computational graph to provide concise and efficient reference implementations. In this article, we will walk through this toolbox, its design principles, and how it works. The main points covered in this article are listed below.

Table of contents

- What is an adversarial attack?

- How can AdverTorch be used?

- Implementing an attack

Let’s first discuss an adversarial attack.

What is an adversarial attack?

Adversarial attacks are deliberate manipulations aimed at degrading a machine learning model's performance, making it misbehave, or extracting sensitive data from it. Adversarial machine learning is not a new field, but in recent years the rise of deep learning and its use in a wide range of applications has renewed interest in it.

In the security industry, there is growing concern that adversarial vulnerabilities might be exploited to attack AI-powered systems. Unlike traditional software, where engineers manually specify instructions and rules, machine learning algorithms learn their behaviour from data.

To build a lane-detection system, for example, a developer creates a machine learning model and trains it on a large number of labelled images of street lanes taken from various angles and under various lighting conditions. During training, the model's parameters are tuned so that it detects recurring patterns in images of street lanes. If the model architecture is appropriate and there is enough training data, the model will be able to detect lanes in new images and videos with high accuracy.

Despite their effectiveness in hard domains such as computer vision and speech recognition, machine learning models are statistical inference engines: complicated mathematical functions that map inputs to outputs.

When a machine learning system classifies an image as containing a specific object, it is because the pixel values in that image are statistically similar to those of the images of that object it saw during training.

Adversarial attacks exploit this property to fool machine learning systems by tampering with their input data. A malicious actor can, for example, make a model misclassify an image by adding tiny, inconspicuous patches of pixels to it.
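To make this concrete, here is a minimal NumPy sketch of the fast gradient sign method (FGSM), one of the simplest gradient-based attacks, against a toy logistic-regression classifier. The weights, the input, and the helper names are hypothetical illustrations, not part of AdverTorch. The attack moves every input feature by exactly ±eps in the direction that increases the model's loss.

```python
import numpy as np

# Hypothetical toy classifier standing in for a real model:
# p(class 1 | x) = sigmoid(w . x + b)
w = np.array([1.0, -2.0, 0.5])
b = 0.1

def predict_proba(x):
    return 1.0 / (1.0 + np.exp(-(w @ x + b)))

def loss_grad_wrt_input(x, y):
    # Gradient of the binary cross-entropy loss with respect to the INPUT x
    # (not the weights): dL/dx = (p - y) * w
    return (predict_proba(x) - y) * w

def fgsm(x, y, eps):
    # One step of size eps along the sign of the input gradient,
    # so each feature changes by exactly +eps or -eps
    return x + eps * np.sign(loss_grad_wrt_input(x, y))

x = np.array([0.2, -0.1, 0.3])   # clean input, true label 1
x_adv = fgsm(x, y=1, eps=0.3)
print(predict_proba(x), predict_proba(x_adv))
```

For this toy input the probability of class 1 drops from about 0.66 to about 0.40, so a perturbation of 0.3 per feature flips the decision.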

The kind of perturbation used in an adversarial attack depends on the target data type and the desired effect, so the threat model has to be tailored to each data modality to be meaningful. For images and audio, for example, a small perturbation is a sensible threat model: a human is unlikely to notice it, yet it may cause the target model to misbehave, producing a discrepancy between human and machine perception.

For other data types, such as text, changing even a single word or letter can alter the semantics and is easily noticed by humans. The threat model for text should therefore differ from the one used for images or audio.
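For images, the "small perturbation" threat model is commonly made precise by bounding the perturbation delta in an L2 or L∞ norm of radius eps. The sketch below shows the two projections that keep a perturbation inside such a ball; the helper names are hypothetical, and PGD-style attacks apply this kind of projection after every step.

```python
import numpy as np

def project_l2(delta, eps):
    # If delta lies outside the L2 ball of radius eps, rescale it onto the boundary
    norm = np.linalg.norm(delta)
    return delta if norm <= eps else delta * (eps / norm)

def project_linf(delta, eps):
    # Clip every coordinate independently to [-eps, eps]
    return np.clip(delta, -eps, eps)

delta = np.array([3.0, 4.0])             # L2 norm 5, L-infinity norm 4
print(project_l2(delta, eps=1.0))         # approximately [0.6, 0.8]
print(project_linf(delta, eps=1.0))       # [1.0, 1.0]
```

The L2 projection preserves the direction of the perturbation and only shrinks its length, while the L∞ projection caps each pixel change separately.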

How can AdverTorch be used?

AdverTorch is a toolbox developed by the Borealis AI research lab that implements a number of attack and defence techniques. Its goal is to give researchers the tools they need to study all aspects of adversarial attacks and defences.

The current version of AdverTorch includes attacks, defences, and robust training. AdverTorch aims for clear and consistent APIs for attacks and defences, concise reference implementations that use PyTorch's dynamic computational graphs, and fast execution through GPU-accelerated PyTorch, which matters for attack-in-the-loop algorithms such as adversarial training.

The attack and defence strategies that come with AdverTorch are summarized below.

Gradient-based attacks include fast gradient (sign) methods, projected gradient descent methods, the Carlini-Wagner attack, and spatial transformation attacks, among others. Gradient-free approaches include the single-pixel attack and the local search attack. The library also implements the Jacobian saliency map attack, which uses the model's Jacobian to choose which input features to modify.
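Gradient-free attacks only query the model's predictions and never its gradients. As a rough illustration of the single-pixel idea, here is a brute-force NumPy sketch that sets one pixel at a time to an extreme value until the predicted label changes. The toy classifier and the helper names are hypothetical, and this exhaustive search is not necessarily how AdverTorch implements its single-pixel attack.

```python
import numpy as np

# Hypothetical toy classifier on a 3x3 grayscale image in [0, 1]:
# a linear score that relies heavily on the centre pixel
W = np.full((3, 3), 0.1)
W[1, 1] = 1.0

def predict(img):
    # Class 1 if the weighted pixel sum exceeds 1.0, otherwise class 0
    return int(np.sum(W * img) > 1.0)

def single_pixel_search(img, candidate_values=(0.0, 1.0)):
    # Query-only search: try each pixel at each candidate value and return
    # the first modified image whose predicted label differs from the original
    original = predict(img)
    for i in range(img.shape[0]):
        for j in range(img.shape[1]):
            for v in candidate_values:
                candidate = img.copy()
                candidate[i, j] = v
                if predict(candidate) != original:
                    return candidate, (i, j, v)
    return None, None   # no single-pixel change flips the label

img = np.full((3, 3), 0.5)
adv, where = single_pixel_search(img)
print(predict(img), predict(adv), where)
```

The uniform grey image is classified as 0; setting only the centre pixel to 1.0 flips it to class 1, found without computing any gradient.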

Beyond specific attacks, it also includes a wrapper for Backward Pass Differentiable Approximation (BPDA), an attack technique that strengthens gradient-based attacks against defended models with non-differentiable or gradient-obfuscating components.
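The idea behind BPDA can be seen on a toy example. A quantizing preprocessing step is piecewise constant, so the true gradient of the defended model is zero almost everywhere and plain gradient attacks get no signal. BPDA keeps the real forward pass but, on the backward pass, treats the non-differentiable step as the identity. The NumPy sketch below uses a hypothetical model and helper names; it is not AdverTorch's BPDA wrapper.

```python
import numpy as np

# Hypothetical defended model: quantize inputs in [0, 1] to 4 grey levels,
# then apply a smooth classifier score sum_i w_i * z_i^2
w = np.array([0.5, -1.0, 2.0])

def quantize(x, levels=4):
    # Piecewise constant, so its true derivative is 0 almost everywhere
    return np.round(x * (levels - 1)) / (levels - 1)

def classifier_score(z):
    return np.sum(w * z ** 2)

def classifier_grad(z):
    # Analytic gradient of the smooth part: d/dz sum(w * z^2) = 2 * w * z
    return 2 * w * z

def defended_score(x):
    return classifier_score(quantize(x))

def finite_diff_grad(f, x, h=1e-4):
    # Central-difference gradient of the full defended model
    g = np.zeros_like(x)
    for i in range(x.size):
        e = np.zeros_like(x)
        e[i] = h
        g[i] = (f(x + e) - f(x - e)) / (2 * h)
    return g

def bpda_grad(x):
    # Forward through the real quantize(); backward as if quantize() were
    # the identity, i.e. the classifier's gradient evaluated at quantize(x)
    return classifier_grad(quantize(x))

x = np.array([0.2, 0.6, 0.9])
print(finite_diff_grad(defended_score, x))   # all zeros: gradient is masked
print(bpda_grad(x))                          # non-zero: usable attack direction
```

The identity approximation is a reasonable choice here because quantize(x) stays close to x; other components may call for a different differentiable surrogate.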

The library includes two kinds of defence: i) preprocessing-based defences and ii) robust training. For preprocessing-based defences, it implements a JPEG filter, bit squeezing, and several types of spatial smoothing filters. For robust training, the official repository provides an example script for adversarial training on the MNIST dataset.
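Bit squeezing reduces the colour bit depth so that many nearby inputs collapse to the same value, which can wipe out small perturbations. Here is a minimal NumPy sketch under the assumption that pixels lie in [0, 1]; `bit_squeeze` is a hypothetical helper for illustration, not AdverTorch's own class.

```python
import numpy as np

def bit_squeeze(x, bit_depth, vmin=0.0, vmax=1.0):
    # Map values in [vmin, vmax] onto 2**bit_depth evenly spaced levels
    levels = 2 ** bit_depth - 1
    x01 = (np.clip(x, vmin, vmax) - vmin) / (vmax - vmin)
    return np.round(x01 * levels) / levels * (vmax - vmin) + vmin

clean = np.array([0.10, 0.45, 0.90])
adv = clean + np.array([0.02, -0.03, 0.01])   # small adversarial-style noise

print(bit_squeeze(clean, bit_depth=2))   # levels 0, 1/3, 2/3, 1
print(bit_squeeze(adv, bit_depth=2))     # same squeezed values as the clean input
```

Squeezing only removes perturbations smaller than the gap between levels, and, as the BPDA discussion above suggests, an adaptive attacker can still differentiate around it.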

Implementing an attack

In this section, we'll implement L2PGDAttack, a gradient-based attack (projected gradient descent with an L2 constraint on the perturbation). First we need to install and import the dependencies. The library has not been kept up to date with recent PyTorch releases, so we install an older, compatible version of PyTorch.

```python
# install a PyTorch version compatible with AdverTorch
!pip install torch==1.3.0 torchvision==0.4.1
# install the library
!pip install advertorch

import torch
import torch.nn as nn
from torchvision.models import vgg16

# preprocessing dependencies
from advertorch.utils import predict_from_logits
from advertorch_examples.utils import ImageNetClassNameLookup
from advertorch_examples.utils import get_panda_image
from advertorch_examples.utils import bhwc2bchw
from advertorch_examples.utils import bchw2bhwc

# load the attack class
from advertorch.attacks import L2PGDAttack

device = "cuda" if torch.cuda.is_available() else "cpu"
```

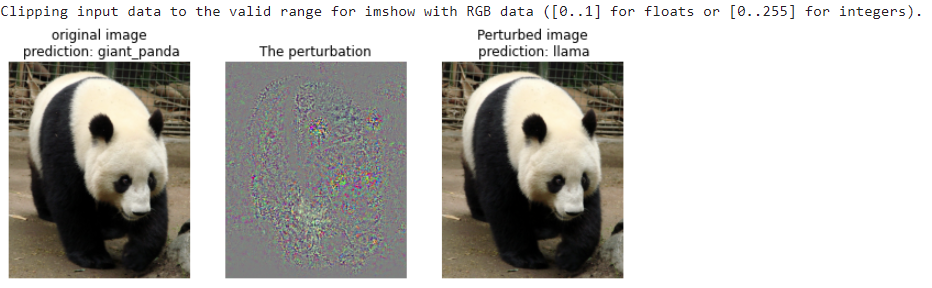

Here we are going to attack VGG16, a model commonly used for transfer learning, loaded with its weights pre-trained on ImageNet.

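```python
from advertorch.utils import NormalizeByChannelMeanStd

# load VGG16 with ImageNet weights and switch to inference mode
model = vgg16(pretrained=True)
model.eval()
# torchvision's ImageNet models expect channel-normalized inputs; prepending
# the normalization lets the attack work directly on [0, 1] pixel values
normalize = NormalizeByChannelMeanStd(
    mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
model = nn.Sequential(normalize, model)
model = model.to(device)
```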

Now we'll load the image and its true label, which we'll later use to check the effect of the attack.

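```python
# load the sample image (height x width x channel) and the index of its true label
np_img = get_panda_image()
# convert to a 1 x C x H x W float tensor on the chosen device
img = torch.tensor(bhwc2bchw(np_img))[None, :, :, :].float().to(device)
label = torch.tensor([388, ]).long().to(device)  # true label: ImageNet class 388, "giant panda"
imagenet_label2classname = ImageNetClassNameLookup()
```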

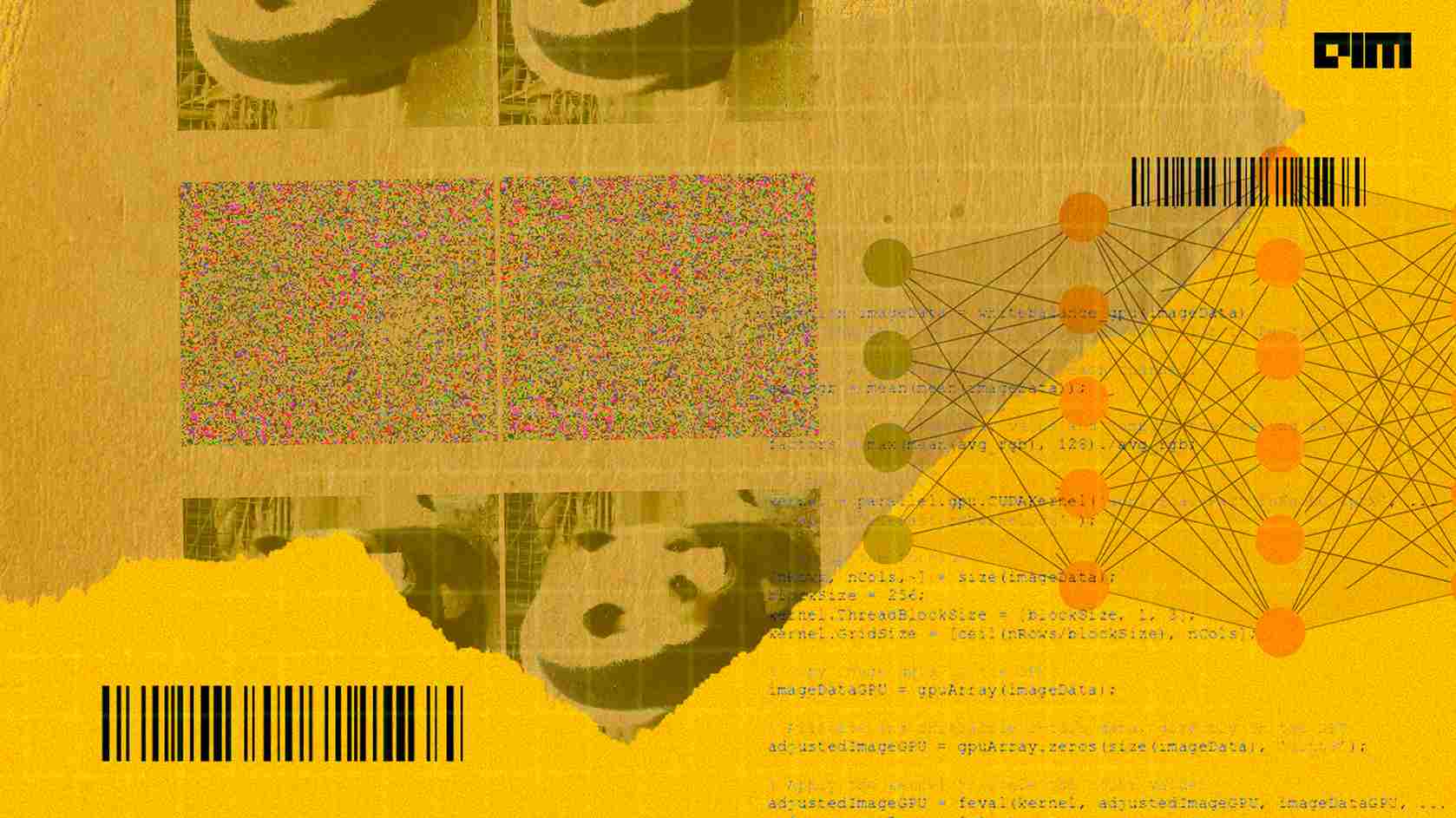
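The code above relies on `bhwc2bchw` and `bchw2bhwc` to switch between image layouts. A NumPy sketch of the same reshuffling is shown below, assuming these helpers simply move the channel axis; it uses a random dummy image rather than the panda picture.

```python
import numpy as np

# Dummy 224x224 RGB image in height x width x channel (HWC) layout,
# the layout used by image loaders and matplotlib
np_img = np.random.rand(224, 224, 3).astype(np.float32)

# PyTorch convolutions expect batch x channel x height x width (BCHW):
# move the channel axis to the front, then add a batch axis of size 1
chw = np.moveaxis(np_img, -1, 0)   # shape (3, 224, 224)
bchw = chw[None, :, :, :]          # shape (1, 3, 224, 224)

# For display, drop the batch axis and move channels back to the end
hwc = np.moveaxis(bchw[0], 0, -1)  # shape (224, 224, 3)
print(bchw.shape, hwc.shape)
```

Only the axis order changes; the pixel values are untouched, so converting back recovers the original array exactly.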

Below are two user-defined helper functions: one converts a tensor back into a NumPy image, and the other visualizes the original image, the perturbation, and the perturbed image with their predictions.

```python
import matplotlib.pyplot as plt

def tensor2npimg(tensor):
    # take the first image of a BCHW batch and return it as an HWC NumPy array
    return bchw2bhwc(tensor[0].cpu().numpy())

def _show_images(enhance=127):
    # uses the globals img, advimg, np_img and model defined in this notebook
    np_advimg = tensor2npimg(advimg)
    np_perturb = tensor2npimg(advimg - img)

    pred = imagenet_label2classname(predict_from_logits(model(img)))
    advpred = imagenet_label2classname(predict_from_logits(model(advimg)))

    plt.figure(figsize=(10, 5))

    plt.subplot(1, 3, 1)
    plt.imshow(np_img)
    plt.axis("off")
    plt.title("original image\n prediction: {}".format(pred))

    plt.subplot(1, 3, 2)
    # the perturbation is tiny, so amplify it and centre it on mid-grey to make it visible
    plt.imshow(np_perturb * enhance + 0.5)
    plt.axis("off")
    plt.title("The perturbation")

    plt.subplot(1, 3, 3)
    plt.imshow(np_advimg)
    plt.axis("off")
    plt.title("Perturbed image\n prediction: {}".format(advpred))

    plt.show()
```

Now let's perform the attack. The hyperparameters we pass to L2PGDAttack are as follows:

- eps is the maximum L2 norm of the perturbation.

- nb_iter is the number of iterations of the attack loop.

- eps_iter is the step size of each iteration.

- rand_init (bool) specifies whether to start from a random point inside the eps-ball instead of the clean image.

- targeted specifies whether the attack is targeted (pushing the prediction toward a chosen label) or untargeted (pushing it away from the true label).
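To make these parameters concrete, here is a NumPy sketch of an L2 projected gradient descent loop that takes the same eps, eps_iter, nb_iter, rand_init and targeted arguments. It is a hypothetical illustration on a toy logistic-regression model, not AdverTorch's L2PGDAttack source, and the clip_min/clip_max bounds assume inputs scaled to [0, 1]. Each iteration takes a step of length eps_iter along the normalized loss gradient, then projects the perturbation back onto the L2 ball of radius eps.

```python
import numpy as np

def l2_pgd(x, y, grad_fn, eps=1.0, eps_iter=0.05, nb_iter=40,
           rand_init=False, targeted=False, clip_min=0.0, clip_max=1.0,
           rng=None):
    """Hypothetical L2 PGD sketch; grad_fn(x, y) returns dLoss/dx."""
    rng = np.random.default_rng() if rng is None else rng
    delta = np.zeros_like(x)
    if rand_init:
        # random starting point inside the L2 ball of radius eps
        d = rng.normal(size=x.shape)
        delta = d / np.linalg.norm(d) * eps * rng.uniform()
    for _ in range(nb_iter):
        g = grad_fn(np.clip(x + delta, clip_min, clip_max), y)
        g_norm = np.linalg.norm(g)
        if g_norm == 0:
            break
        # step of length eps_iter: ascend the loss of the true label
        # (untargeted) or descend the loss of the target label (targeted)
        step = eps_iter * g / g_norm
        delta = delta - step if targeted else delta + step
        # project back onto the L2 ball of radius eps
        norm = np.linalg.norm(delta)
        if norm > eps:
            delta = delta * (eps / norm)
        # keep x + delta inside the valid pixel range
        delta = np.clip(x + delta, clip_min, clip_max) - x
    return x + delta

# Hypothetical toy model: logistic regression with binary cross-entropy loss
w = np.array([1.0, -2.0, 0.5])
b = -0.2

def proba(x):
    return 1.0 / (1.0 + np.exp(-(w @ x + b)))

def grad_fn(x, y):
    # d(BCE)/dx = (p - y) * w
    return (proba(x) - y) * w

x = np.array([0.6, 0.2, 0.4])                    # classified as class 1
x_adv = l2_pgd(x, 1, grad_fn)                     # untargeted: away from label 1
x_tgt = l2_pgd(x, 0, grad_fn, targeted=True)      # targeted: toward label 0
print(proba(x), proba(x_adv), np.linalg.norm(x_adv - x))
```

Clipping to the pixel range after the projection can only shrink each coordinate of the perturbation, so the final perturbation still respects the eps bound.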

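```python
# untargeted L2 PGD: 40 steps of size 0.05 within an L2 ball of radius 1
adversary = L2PGDAttack(
    model, eps=1., eps_iter=1.*2/40, nb_iter=40,
    rand_init=False, targeted=False)

# perturb the image so that it is no longer classified as its true label
advimg = adversary.perturb(img, label)
_show_images()
```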

And here is the result:

Final words

In this article, we discussed adversarial attacks and how they can fool even state-of-the-art ML models for computer vision and audio data. To help study and improve model robustness, we looked at AdverTorch, a Python toolbox built on PyTorch that combines attack and defence strategies, whereas some other libraries, such as Foolbox, focus mainly on attacks. Finally, we saw in practice how to attack a pre-trained model, VGG16.