CoAtNet is a family of hybrid models from the Google Brain team that has recently gained the attention of deep learning practitioners. The name comes from the two building blocks it combines: Convolution and Attention (CoAtNet). The model has achieved state-of-the-art results in image classification on benchmark datasets such as ImageNet. In this article, we introduce the model and its working mechanism, and try to understand the features that help it reach such high accuracy. The major points covered in this article are listed below.

Table of contents

- Introduction to CoAtNet

- Design of CoAtNet Model

- Performance Analysis of CoAtNet

Introduction to CoAtNet

Convolutional Neural Networks (ConvNets) have been the dominant model architecture for computer vision since the breakthrough of AlexNet. Following the success of self-attention models such as Transformers in natural language processing, many researchers have tried to bring the power of attention to computer vision. Most recently, the Vision Transformer (ViT) performed reasonably well on ImageNet-1K. When pre-trained on the large-scale JFT-300M dataset, ViT yields results similar to those of ConvNets, indicating that it has comparable capacity.

However, without JFT-300M pre-training, ViT's accuracy on ImageNet is still lower than that of ConvNets. Even with additional regularization and data augmentation, ViT still falls behind state-of-the-art ConvNets. These results suggest that vanilla Transformer layers lack certain desirable inductive biases that ConvNets possess, and therefore require significant amounts of data and computation to compensate.

CoAtNet is a hybrid model designed to combine the strengths of both, and for now it focuses on image classification. It is based on two key insights:

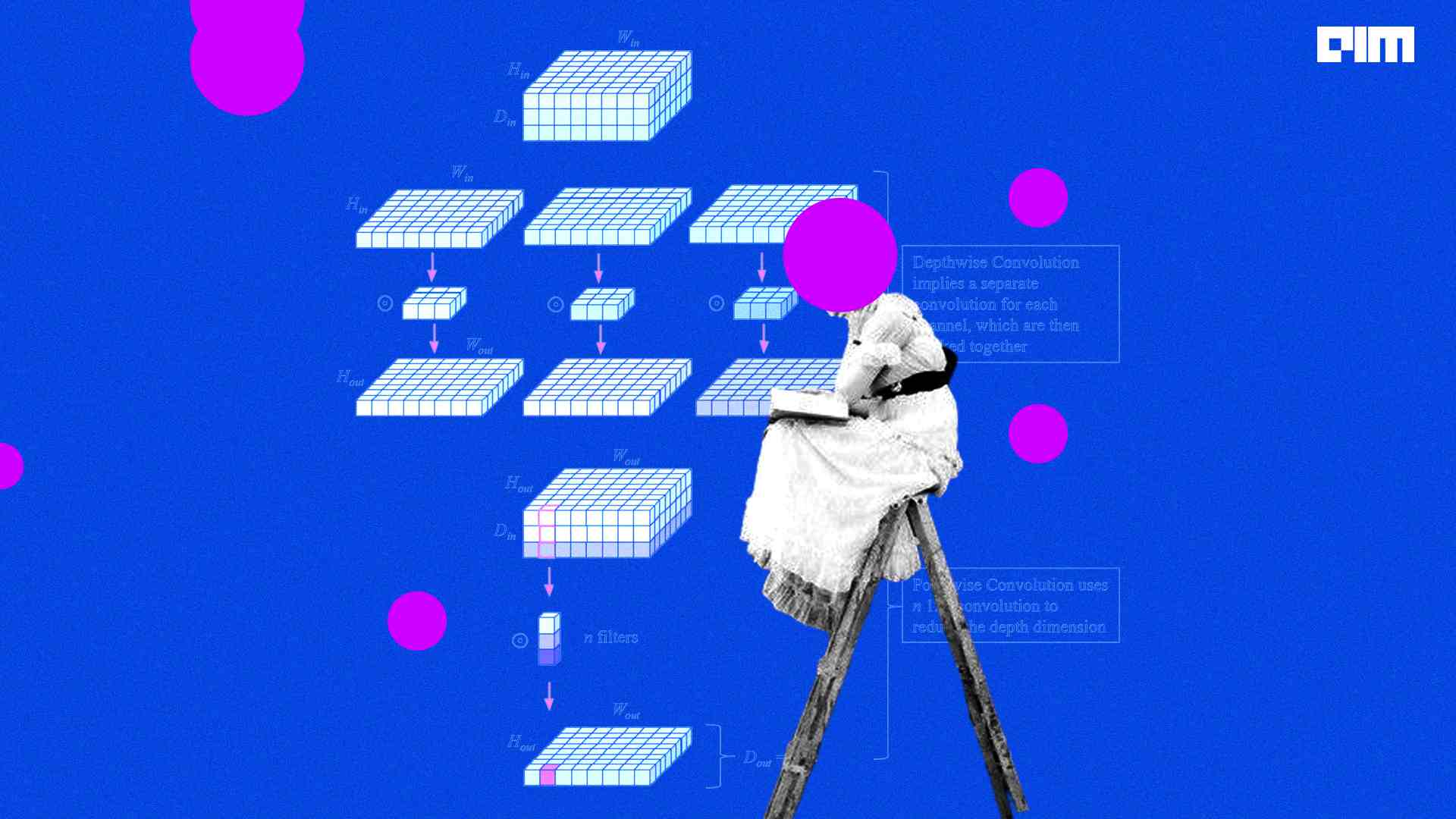

- Depthwise convolution and self-attention can be naturally fused together through simple relative attention.

- By stacking convolution layers and attention layers in a principled manner, generalization, capacity, and efficiency are dramatically improved.

Experiments show that CoAtNets perform well across datasets under different resource constraints. Without any extra data, CoAtNet achieves 86.0% ImageNet top-1 accuracy. When pre-trained on 13M images from ImageNet-21K, it achieves 88.56% top-1 accuracy, matching ViT-Huge pre-trained on 300M images from JFT-300M while using about 23 times less data (300M / 13M ≈ 23). Scaled up further with JFT-3B, CoAtNet reaches 90.88% top-1 accuracy on ImageNet. In the rest of this article, we go through the ideas behind CoAtNet and the design of its model.

The hybridization of convolution and attention is studied systematically from two fundamental aspects of machine learning: generalization and model capacity. The study shows that convolutional layers generalize better, while attention layers have higher model capacity. Combining convolutional and attention layers can therefore achieve both better generalization and higher capacity.

That is why the researchers proposed CoAtNet, which has the strengths of both ConvNets and Transformers. CoAtNet achieves state-of-the-art performance under comparable resource constraints across different data sizes. It inherits the good generalization of ConvNets thanks to their favourable inductive biases, and it benefits from the superior scalability of Transformers while also converging faster, which improves its efficiency.


Design of CoAtNet Model

When building the model, the researchers faced the problem of how to optimally combine convolution and Transformer layers. They decomposed this problem into two parts:

- How to merge the convolution and attention layer into one basic block?

- How to vertically stack different blocks together to form a complete network?

We first discuss how convolution and attention are merged. For convolution, the MBConv block is used. MBConv is a residual block for image models with an inverted structure chosen for efficiency: it follows a narrow-wide-narrow approach. A 1×1 convolution first expands the channels, a 3×3 depthwise convolution then operates on the widened features, and a final 1×1 convolution projects back to the narrow width. Because the 3×3 convolution is depthwise, the number of parameters is greatly reduced. On the attention side, the relevant counterpart is the feed-forward network (FFN) module of the Transformer.

The reason for pairing MBConv with the Transformer is that the FFN module and MBConv both use the same “inverted bottleneck” design: each first expands the channel size of the input by 4×, and then projects the 4×-wide hidden state back to the original channel size so that a residual connection can be added.
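To make the parameter saving concrete, here is a rough weight count for the narrow-wide-narrow layout described above. It is only a sketch: the channel width of 64 is an arbitrary choice for illustration, biases and normalization layers are ignored, and the helper names `mbconv_params` and `dense_conv_params` are ours, not part of any library.

```python
def mbconv_params(channels: int, expansion: int = 4, kernel: int = 3) -> dict:
    """Count conv weights in an inverted-bottleneck block (biases and norms ignored)."""
    hidden = channels * expansion
    expand = channels * hidden             # 1x1 conv: narrow -> wide
    depthwise = kernel * kernel * hidden   # 3x3 depthwise: one filter per channel
    project = hidden * channels            # 1x1 conv: wide -> narrow
    return {
        "expand": expand,
        "depthwise": depthwise,
        "project": project,
        "total": expand + depthwise + project,
    }


def dense_conv_params(channels: int, kernel: int = 3) -> int:
    """Weights of a standard (non-depthwise) conv that keeps the channel count."""
    return kernel * kernel * channels * channels


# Illustrative width only: 64 input channels, expanded 4x to 256.
block = mbconv_params(64)
dense_wide = dense_conv_params(64 * 4)

print(block)       # whole block: 35,072 weights, of which the depthwise conv is 2,304
print(dense_wide)  # a single dense 3x3 conv at the expanded width: 589,824 weights
```

Under these assumptions, the depthwise 3×3 convolution is a small fraction of the block, while a dense 3×3 convolution at the same expanded width would alone need roughly 17 times the weights of the whole block.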

There are mathematical ways of understanding how convolution and self-attention combine, but a full treatment is beyond the scope of one article. Instead, we look at three desirable properties that a basic block combining convolution and attention should have. These properties are shown below in the table.

Let’s understand these properties quickly.

Translation Equivariance: Convolutional neural networks have a property called translation equivariance: when the input is shifted, the output feature map shifts by the same amount. As a result, an object does not need to be at a fixed position in the image for the CNN to detect it.
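A tiny 1D example can illustrate this property. The sketch below uses a hand-written convolution with zero padding, and the signal and kernel values are made up for illustration. It checks that shifting the input and then convolving gives the same result as convolving and then shifting, which holds here because the non-zero values stay away from the borders.

```python
def conv1d_same(signal, kernel):
    """Slide an odd-length kernel over a 1D signal, zero-padding the borders."""
    half = len(kernel) // 2
    padded = [0.0] * half + list(signal) + [0.0] * half
    return [
        sum(k * padded[i + j] for j, k in enumerate(kernel))
        for i in range(len(signal))
    ]


def shift_right(signal, steps):
    """Shift a signal to the right by `steps`, filling with zeros (no wrap-around)."""
    return [0.0] * steps + list(signal[: len(signal) - steps])


# Made-up values for illustration.
kernel = [1.0, 2.0, 1.0]
signal = [0.0, 0.0, 3.0, 1.0, 0.0, 0.0, 0.0, 0.0]

shifted_then_conv = conv1d_same(shift_right(signal, 2), kernel)
conv_then_shifted = shift_right(conv1d_same(signal, kernel), 2)

print(shifted_then_conv)                       # [0.0, 0.0, 0.0, 3.0, 7.0, 5.0, 1.0, 0.0]
print(shifted_then_conv == conv_then_shifted)  # True
```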

Input-adaptive Weighting: In self-attention, the weights depend on the input itself, which helps the model capture relationships between different elements of the input. However, this flexibility carries a risk of overfitting when data is limited.

Global Receptive Field: In a CNN, the receptive field is the region of the input that influences a particular unit of the network. In self-attention, the receptive field covers the entire input, which is why it is called a global receptive field. This gives attention more contextual information than the local receptive field of a simple CNN.

In conclusion, an optimal basic block combines the input-adaptive weighting and global receptive field of self-attention with the translation equivariance of CNNs.
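One way to picture such a block is relative attention: the attention logit between positions i and j is the usual input-dependent dot product plus a static bias that depends only on the offset i − j, much like a convolution kernel weight. The 1D sketch below is our own simplified illustration of that idea, not the authors' implementation. The function name `relative_attention_1d`, the toy features, and the bias values are all assumptions made for the example.

```python
import math


def relative_attention_1d(x, w):
    """Toy single-head relative attention over a 1D sequence.

    x: list of feature vectors, one per position.
    w: dict mapping a relative offset (i - j) to a scalar bias; missing offsets count as 0.
    Returns (weights, outputs), where weights[i][j] is how much position i attends to j.
    """
    n, dim = len(x), len(x[0])
    weights, outputs = [], []
    for i in range(n):
        # Input-adaptive term (dot product) plus translation-equivariant term (offset bias).
        logits = [
            sum(a * b for a, b in zip(x[i], x[j])) + w.get(i - j, 0.0)
            for j in range(n)
        ]
        peak = max(logits)  # subtract the max for numerical stability
        exps = [math.exp(value - peak) for value in logits]
        total = sum(exps)
        row = [e / total for e in exps]
        weights.append(row)
        # Every position j contributes to output i: a global receptive field.
        outputs.append([sum(row[j] * x[j][d] for j in range(n)) for d in range(dim)])
    return weights, outputs


# Made-up features and offset biases for illustration.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
w = {-1: 0.5, 0: 1.0, 1: 0.5}

weights, outputs = relative_attention_1d(x, w)
for row in weights:
    print([round(value, 3) for value in row])
```

In this sketch, each row of `weights` sums to 1 and covers every position, the dot-product term makes the weights depend on the input, and the offset bias `w` is shared across positions in the same way a convolution kernel is.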

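Now let’s discuss the vertical layout design. Because the cost of global attention grows quadratically with the spatial size of its input, applying it directly to the raw image is impractical. There are three main options for building a network that is feasible in practice: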

- Perform some downsampling to reduce the spatial size, and apply global attention once the feature map has reached a manageable size.

- Restrict the global receptive field G in attention to a local field L, as in convolution.

- Replace the quadratic softmax attention with a linear attention variant whose complexity grows only linearly with the spatial size.
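A quick back-of-the-envelope calculation shows why downsampling matters for softmax attention. The sketch below assumes a 224×224 input (a common ImageNet resolution, chosen here only for illustration) and counts the positions that attend to each other at a few strides. The helper name `attention_cost` is ours.

```python
def attention_cost(height: int, width: int, stride: int) -> tuple:
    """Return (number of tokens, number of query-key pairs) after downsampling by `stride`."""
    tokens = (height // stride) * (width // stride)
    return tokens, tokens * tokens  # softmax attention compares every token with every token


# Assumed input size of 224x224, for illustration only.
for stride in (1, 4, 16):
    tokens, pairs = attention_cost(224, 224, stride)
    print(f"stride {stride:>2}: {tokens:>6,} tokens, {pairs:>13,} attention pairs")
```

With these assumptions, attention at full resolution would involve about 2.5 billion pairs, while a stride of 16 brings this down to 38,416.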

For the first option, down-sampling can be achieved in two ways:

- A convolution stem with an aggressive stride (e.g., stride 16×16), as in ViT.

- A multi-stage network with gradual pooling, as in ConvNets.

Using the multi-stage choice, we build a network of 5 stages. The first two stages, a classic convolution stem and an MBConv stage, are used to reduce the spatial size of the image. For each of the last three stages we can use either MBConv or the Transformer block. This gives 4 variants with an increasing number of Transformer stages: C-C-C-C, C-C-C-T, C-C-T-T, and C-T-T-T, where C and T denote Convolution and Transformer, respectively.
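The four variants can be enumerated with a few lines of code. This sketch assumes that convolution stages always come before Transformer stages, which is what the four variants named above suggest, and that the first letter (the MBConv stage after the stem) is always C. The function name `stage_layouts` is hypothetical.

```python
def stage_layouts(num_free_stages: int = 3) -> list:
    """List layouts where the first stage is always 'C' and any 'T' stages come last."""
    layouts = []
    for num_transformer in range(num_free_stages + 1):
        num_conv = 1 + num_free_stages - num_transformer  # the fixed 'C' plus the free conv stages
        stages = ["C"] * num_conv + ["T"] * num_transformer
        layouts.append("-".join(stages))
    return layouts


print(stage_layouts())  # ['C-C-C-C', 'C-C-C-T', 'C-C-T-T', 'C-T-T-T']
```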

Together with the ViT-style model, this gives five models to compare in terms of generalization and model capacity. For generalization, we look at the gap between the training loss and the evaluation accuracy. For model capacity, we measure how well a model fits a large training dataset.

Performance Analysis of CoAtNet

To compare generalization and model capacity, the different hybrid variants are trained on the ImageNet-1K (1.3M images) and JFT (>300M images) datasets for 300 and 3 epochs, respectively.

In the figure below, the models are compared for generalization and capacity under different data sizes. For a fair comparison, all models have a comparable number of parameters and computational cost.

From the figure, we can rank the models by their generalization capability and model capacity.

For generalization: C-C-C-C ≈ C-C-C-T ≥ C-C-T-T > C-T-T-T >> ViT

For model capacity: C-C-T-T ≈ C-T-T-T > ViT > C-C-C-T > C-C-C-C

The results for these configurations are also shown visually in the graph below.

Notice how CoAtNet performs far better than previous models such as DeepViT and CaiT.

Final words

In this article, we saw how two state-of-the-art building blocks, convolution and attention, are fused together to create a better model. We went through how the researchers split the problem into designing a basic block and stacking blocks vertically, so that the model gains both the generalization of convolution and the capacity of attention. Finally, we looked at how CoAtNet performs better than previous advanced models.