Facebook recently introduced a new image generation model called Instance-Conditioned GAN (IC-GAN). The model creates high-quality, diverse images whether or not the input images appear in the training set. Compared to traditional methods, IC-GANs can generate realistic, previously unseen image combinations.

The PyTorch code for Instance-Conditioned GAN is available on GitHub.

GANs vs IC-GAN

Generative adversarial networks (GANs) are a popular AI method for creating images, whether abstract collages or photorealistic pictures. However, they have a key limitation: they can only generate images of objects or scenes closely related to their training dataset.

For example, a traditional GAN trained on images of cars can produce impressive new images of cars, but it will most likely fail if asked to generate images of flowers or other objects outside the car dataset.

This is where Facebook’s Instance-Conditioned GAN comes in: it can generate images of content outside its training dataset. The approach exhibits exceptional transfer capabilities across various types of objects. According to Facebook’s researchers, IC-GANs can be used off the shelf with previously unseen datasets and still generate realistic-looking images, without the need for annotated/labelled data.

IC-GANs could also create new visual examples that augment datasets with diverse objects and scenes, give artists and creators more expansive, creative AI-generated content, and advance research in high-quality image generation.

Here’s how IC-GAN works

Facebook’s IC-GAN can be used with both labelled and unlabelled datasets. It extends the GAN framework to model a mixture of local, overlapping data clusters. It takes a single image, or ‘instance’, and generates images similar to that instance’s closest neighbours in the dataset.

The researchers said they use the neighbours as inputs to the discriminator, which forces the generator to create samples similar to the neighbourhood of each instance. Because these neighbourhoods overlap, the approach avoids partitioning the data into small, disjoint clusters, which lets the model use the dataset more efficiently.
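To make the neighbourhood idea concrete, here is a minimal Python sketch. It assumes each image has already been turned into a feature vector by some pretrained encoder (the article does not say which), that neighbours are ranked by cosine similarity, and that the neighbourhood size `k` is a free parameter. The helpers `nearest_neighbours` and `sample_training_pair` are hypothetical and are not taken from the IC-GAN repository.

```python
import math
import random
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine similarity between two non-zero feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest_neighbours(features: List[Vector], k: int) -> List[List[int]]:
    """For each instance i, return i plus its k - 1 most similar instances.

    Neighbourhoods are computed per instance, so nearby instances share
    members instead of being forced into disjoint clusters.
    """
    neighbourhoods = []
    for i, h_i in enumerate(features):
        others = [j for j in range(len(features)) if j != i]
        others.sort(key=lambda j: cosine_similarity(h_i, features[j]), reverse=True)
        neighbourhoods.append([i] + others[: k - 1])
    return neighbourhoods


def sample_training_pair(
    features: List[Vector],
    neighbourhoods: List[List[int]],
    rng: random.Random,
) -> Tuple[int, int]:
    """Pick a conditioning instance i and a real image j from its neighbourhood.

    In this sketch, the generator would be conditioned on features[i], and
    the discriminator would see image j as a real example for that condition.
    """
    i = rng.randrange(len(features))
    j = rng.choice(neighbourhoods[i])
    return i, j


if __name__ == "__main__":
    # Toy 2-D "features" standing in for real image embeddings.
    feats = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
    hoods = nearest_neighbours(feats, k=3)
    print(hoods)  # [[0, 1, 3], [1, 0, 3], [2, 3, 1], [3, 2, 1]]
    print(sample_training_pair(feats, hoods, random.Random(0)))
```

In the toy output, the neighbourhoods of instances 0 and 2 both contain instances 1 and 3, which is the kind of overlap a hard partition into clusters would rule out.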

Once the model is trained, the researchers test it on images it has never seen before. Given a single image, the model can generate visually rich images similar to that image’s closest neighbours in the dataset.

In contrast, standard methods such as class-conditional GANs condition on class labels, effectively partitioning the data into groups corresponding to those labels; this lets them generate higher-quality samples than their unconditional counterparts. So, instead of creating only random images, these GANs create images that fit a particular label, like ‘clothing’ or ‘car’. However, they rely on annotated/labelled data that may be infeasible to obtain.

Previously, label-free approaches to image generation, which use no labelled data, have shown promise, but they usually produce poor-quality results when trained on complex datasets such as ImageNet. They also rely on either coarse, non-overlapping data partitions or fine partitions that degrade the output because each cluster contains few data points.

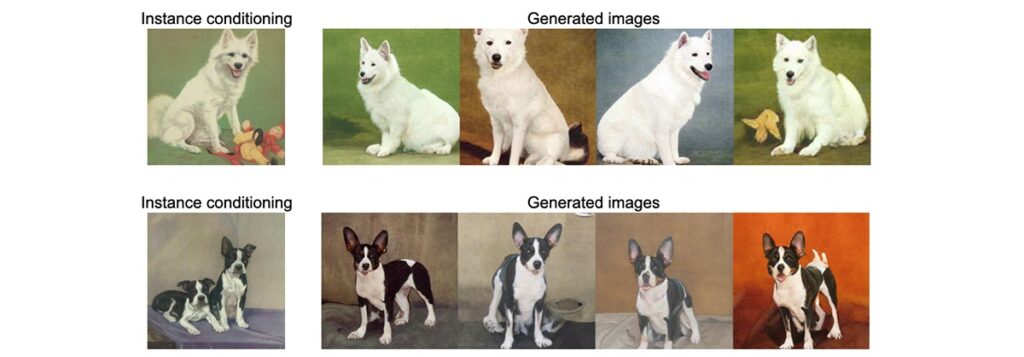

The image below shows the instance given to IC-GAN on the left and the corresponding generated images on the right. Here, no class label is provided.

Interestingly, IC-GAN can be transferred to datasets not seen during training, both in class-conditional settings and in settings with no labels.

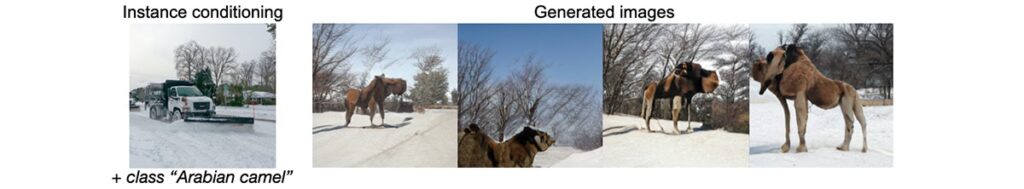

The researchers said they achieve this by swapping out the conditioning instances at inference time. With the class-conditional IC-GAN, they can swap either the instance conditioning or the class label. By combining instances and class labels, the class-conditional IC-GAN can create unusual scenes that are absent or very rare in current datasets.

For example, given an image of a snowplough surrounded by snow as the instance and the class label ‘camel’, which does not appear in that instance, a user can generate camels surrounded by snow, counteracting the dataset bias that camels live only in the desert (see the image below).

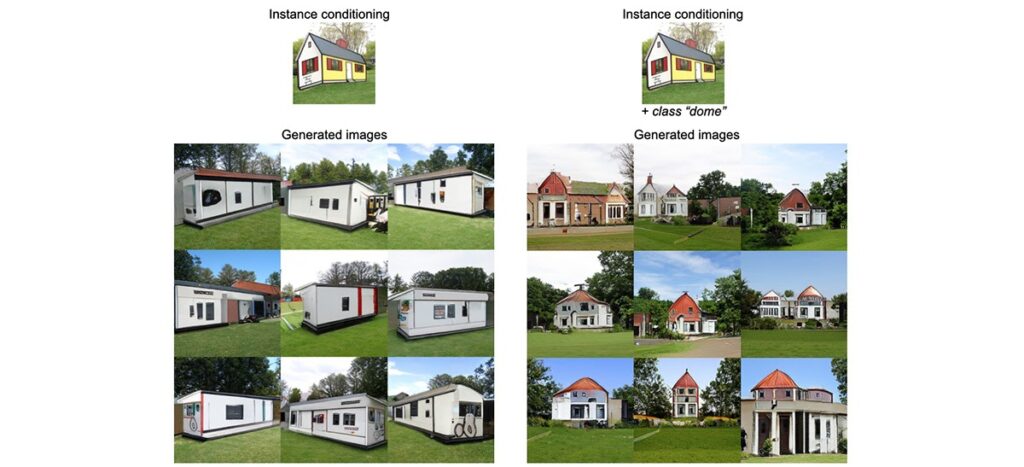
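The label swap in the camel example can be illustrated by how a class-conditional generator’s input might be assembled. This sketch assumes, hypothetically, that the conditioning is a noise vector concatenated with the instance’s feature vector and a one-hot class label; the real IC-GAN architecture may combine these signals differently. `build_generator_input`, the class indices, and the feature values are illustrative placeholders, not IC-GAN’s actual interface.

```python
from typing import List, Sequence


def one_hot(label: int, num_classes: int) -> List[float]:
    """Encode a class index as a one-hot vector."""
    if not 0 <= label < num_classes:
        raise ValueError(f"label {label} out of range for {num_classes} classes")
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


def build_generator_input(
    noise: Sequence[float],
    instance_features: Sequence[float],
    label: int,
    num_classes: int,
) -> List[float]:
    """Hypothetical layout: [noise | instance features | one-hot label]."""
    return list(noise) + list(instance_features) + one_hot(label, num_classes)


# Hypothetical class indices in a toy 3-class label space.
NUM_CLASSES = 3
SNOWPLOUGH, CAMEL = 0, 1

noise = [0.3, -1.2]           # the same random draw is reused for both inputs
snow_scene = [0.8, 0.1, 0.5]  # stand-in features of the snowplough image

original = build_generator_input(noise, snow_scene, SNOWPLOUGH, NUM_CLASSES)
swapped = build_generator_input(noise, snow_scene, CAMEL, NUM_CLASSES)

# Only the label part differs; the instance (the snowy context) is unchanged.
print(original)  # [0.3, -1.2, 0.8, 0.1, 0.5, 1.0, 0.0, 0.0]
print(swapped)   # [0.3, -1.2, 0.8, 0.1, 0.5, 0.0, 1.0, 0.0]
```

Keeping the noise and instance features fixed while changing only the label is what lets the scene context (snow) carry over to a class (camel) it rarely co-occurs with in the data.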

Another example (as shown below) has IC-GAN use a graphic of a house to create a more realistic-looking building.

Unlimited Possibilities

In a nutshell, IC-GAN can augment data to include objects that are not commonly found in the training data. Because it works across various domains, the approach can help generate more diverse training data for object recognition models.

For instance, traditional GAN models would not generate images of zebras standing in urban areas, as their training data would likely contain only images of zebras in grasslands. IC-GAN, by contrast, can generate such combinations.

“In the future, we hope to explore ways to bring even more control to this model,” said Facebook, adding that the work will no longer be limited to a single object at the centre and its background. “We want to explore how more objects can be placed in the background and determine where the items are placed, creating complex, picture-perfect scenes,” said the researchers.