
Google’s AI research lab DeepMind has teamed up with 33 academic labs to launch a new set of resources for general-purpose robotics learning.

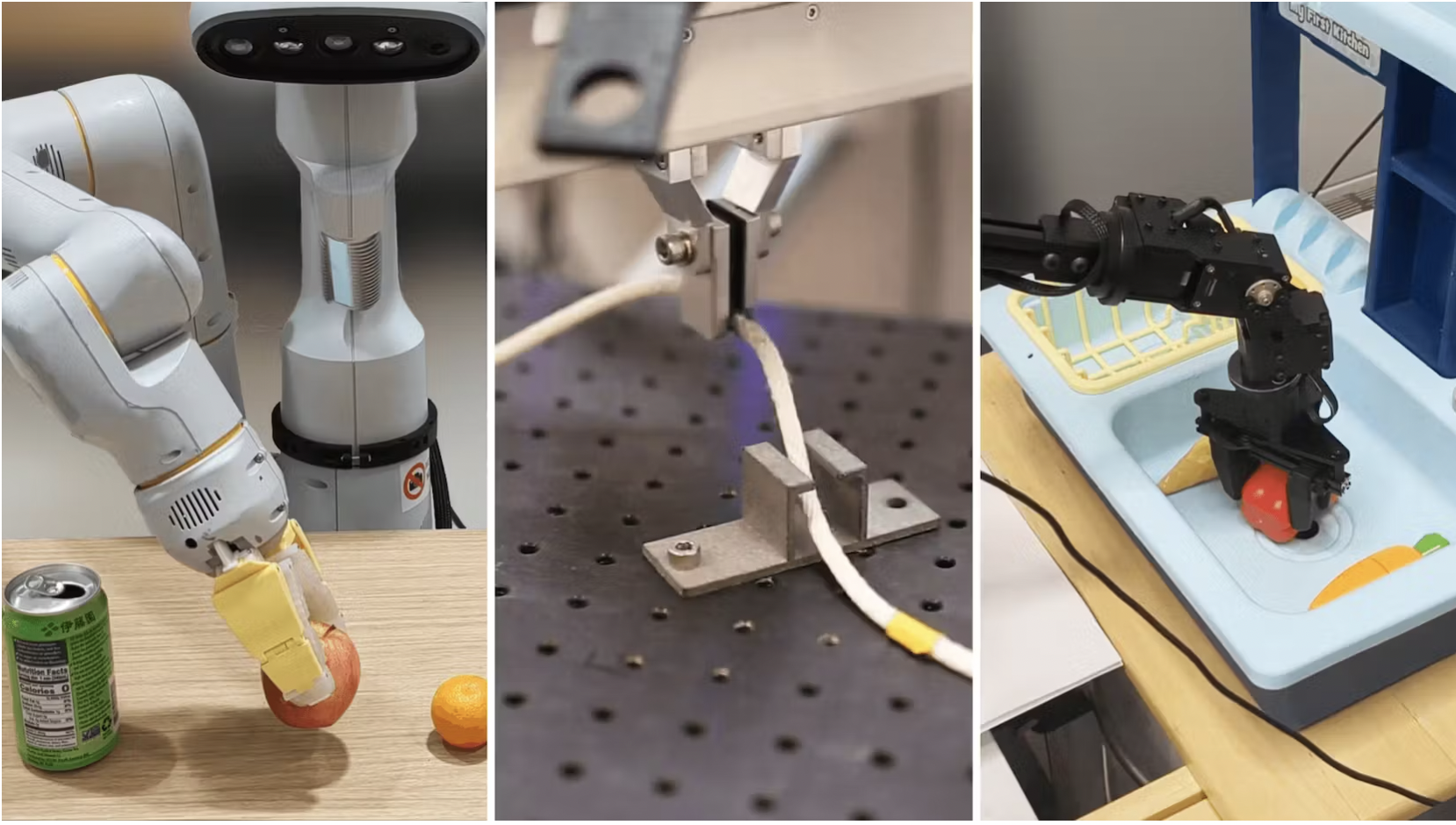

At the centre is the Open X-Embodiment dataset, which pools data from 22 different types of robots. Together, these robots demonstrated 527 skills across more than 150,000 tasks, recorded over more than a million episodes. The dataset is the largest of its kind and a step towards a generalised model: a single program that can understand and control many different types of robots.
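To make the idea of "pooling" concrete, here is a minimal sketch of how episodes from different robot types might be merged into one collection and summarised. The schema (`Episode`, `robot_type`, `instruction`) and the helper functions are hypothetical illustrations, not the released dataset's actual format or tooling.

```python
from dataclasses import dataclass, field


@dataclass
class Episode:
    # Hypothetical schema: which robot produced the episode and what it was asked to do.
    robot_type: str
    instruction: str
    # Per-step robot actions; their layout differs between robot types in practice.
    actions: list = field(default_factory=list)


def pool(datasets: dict) -> list:
    """Merge per-robot episode lists into a single training collection."""
    pooled = []
    for episodes in datasets.values():
        pooled.extend(episodes)
    return pooled


def summarize(episodes: list) -> dict:
    """Count distinct robot types, distinct tasks and total episodes."""
    return {
        "robot_types": len({e.robot_type for e in episodes}),
        "tasks": len({e.instruction for e in episodes}),
        "episodes": len(episodes),
    }


# Toy usage with two hypothetical robots sharing one task.
data = {
    "arm_a": [Episode("arm_a", "open door"), Episode("arm_a", "pick apple")],
    "arm_b": [Episode("arm_b", "open door")],
}
print(summarize(pool(data)))  # {'robot_types': 2, 'tasks': 2, 'episodes': 3}
```

The point of the sketch is that a shared task such as "open door" now has examples from more than one robot, which is what lets a single model learn from other embodiments' experience.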

The dataset is timely because robotics has a data problem. On one hand, models trained on large, diverse datasets outperform models trained on narrow datasets, even within those narrow models’ own areas of expertise. On the other hand, building massive datasets is tedious and demands considerable time and resources. Maintaining their quality and relevance is also challenging.

“Today may be the ImageNet moment for robotics,” tweeted Jim Fan, a research scientist at NVIDIA AI. He noted that 11 years ago, ImageNet kicked off the deep learning revolution that eventually led to the first GPT and diffusion models. “I think 2023 is finally the year for robotics to scale up,” he added.

The researchers used the Open X-Embodiment dataset to train two new generalist models. The first, RT-1-X, is a transformer model designed to control robots. On tasks such as opening doors, it achieved a 50% higher average success rate than purpose-built models developed specifically for those tasks.
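A "50% higher success rate" is most naturally read as a relative gain, not 50 extra percentage points. The sketch below shows that arithmetic, assuming the figure compares average success rates; the per-lab numbers are made up for illustration and are not reported results.

```python
def mean(xs: list) -> float:
    return sum(xs) / len(xs)


def relative_improvement(baseline: float, new: float) -> float:
    """Relative gain of `new` over `baseline`; 0.5 means 50% higher."""
    if baseline <= 0:
        raise ValueError("baseline success rate must be positive")
    return (new - baseline) / baseline


# Hypothetical success rates (fractions) for two tasks, NOT reported results.
purpose_built = [0.40, 0.60]  # specialised models, mean 0.50
generalist = [0.60, 0.90]     # generalist model, mean 0.75

gain = relative_improvement(mean(purpose_built), mean(generalist))
print(f"{gain:.0%}")  # 50%
```

Under this reading, a jump from a 50% to a 75% average success rate counts as "50% higher", even though the absolute difference is 25 percentage points.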

The second, RT-2-X, is a vision-language-action model: it interprets what it sees together with natural-language instructions, and its training also draws on data from the internet. Both models outperform their predecessors, RT-1 and RT-2, despite sharing the same underlying architectures. The key difference is that RT-1 and RT-2 were trained on narrower datasets.

The robots also learned to perform tasks they had never been trained on. These emergent skills came from the knowledge encoded in the range of experiences captured from other types of robots. In experiments, the DeepMind team found this to be especially true for tasks that require better spatial understanding.

The team has open-sourced both the dataset and the trained models for other researchers to continue building on the work.