In a recent Google AI blog post, Google AI lead Jeff Dean, along with scientists at Google Research and the Google chip implementation and infrastructure team, described an AI technique that can design computer chips in less than six hours.

The team detailed the approach in a published paper describing a learning-based method for chip design that learns from experience and improves over time, becoming better at generating architectures for previously unseen components. They claim the technique can complete a chip design in under six hours on average, significantly faster than the weeks typically required with human experts in the loop.

According to the company, the technique advances the state of the art because it suggests that the placement of on-chip transistors can be largely automated. If made publicly available, the Google researchers’ technique could enable cash-strapped startups to develop their own chips for AI and other specialised purposes.

Such a development could also shorten the chip design cycle, allowing hardware to adapt better to rapidly evolving research.

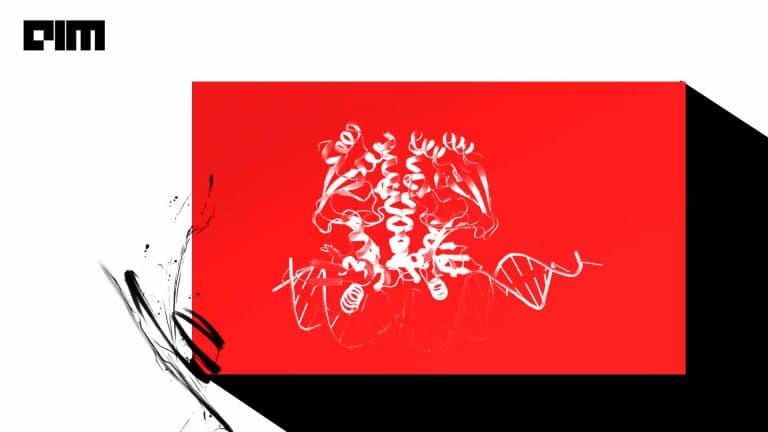

[Placements of Ariane, an open-source processor, as training progresses. Image Credit: Google]

According to the blog post, the approach aims to place a “netlist” graph of logic gates, memory, and other components onto a chip canvas so that the design optimises power, performance, and area (PPA) while adhering to constraints on placement density and routing congestion. These graphs range from millions to billions of nodes grouped into thousands of clusters, and evaluating the target metrics typically takes anywhere from hours to more than a day.
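To make the placement-density constraint more concrete, here is a minimal Python sketch (not Google's code) that divides the chip canvas into a grid and checks that no grid cell is covered beyond a threshold. The `Macro` type, the grid dimensions and the `max_density` value of 0.8 are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Macro:
    """Hypothetical rectangular component with its lower-left corner at (x, y)."""
    name: str
    x: float
    y: float
    w: float
    h: float


def overlap(a0, a1, b0, b1):
    """Length of the overlap between intervals [a0, a1) and [b0, b1)."""
    return max(0.0, min(a1, b1) - max(a0, b0))


def grid_density(macros, canvas_w, canvas_h, rows, cols):
    """Fraction of each grid cell's area covered by macros."""
    cw, ch = canvas_w / cols, canvas_h / rows
    density = [[0.0] * cols for _ in range(rows)]
    for m in macros:
        for r in range(rows):
            for c in range(cols):
                ox = overlap(m.x, m.x + m.w, c * cw, (c + 1) * cw)
                oy = overlap(m.y, m.y + m.h, r * ch, (r + 1) * ch)
                density[r][c] += ox * oy / (cw * ch)
    return density


def satisfies_density(macros, canvas_w, canvas_h, rows, cols, max_density=0.8):
    """True if no grid cell exceeds the assumed density threshold."""
    grid = grid_density(macros, canvas_w, canvas_h, rows, cols)
    return all(d <= max_density for row in grid for d in row)
```

For example, on a 10 x 10 canvas split into a 2 x 2 grid, a 5 x 5 macro at the origin fills its cell completely (density 1.0) and would violate the assumed 0.8 threshold.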

The researchers devised a framework that directs an agent trained through reinforcement learning to optimise chip placements. Given the netlist, the ID of the current node to be placed, and metadata about the netlist and the semiconductor technology, a policy model outputs a probability distribution over available placement locations, while a value model estimates the expected reward for the current placement.
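One way to picture the policy's output is as a masked softmax: every grid cell gets a score, unavailable cells are zeroed out, and the remaining scores are normalised into probabilities. The sketch below assumes toy linear "models" (`toy_policy`, `toy_value`) purely for illustration; the models in Google's framework are trained through reinforcement learning, and their architecture is not reproduced here.

```python
import math
import random


def masked_softmax(logits, mask):
    """Probability distribution over cells; cells with mask=False get 0."""
    legal = [l for l, ok in zip(logits, mask) if ok]
    if not legal:
        raise ValueError("no feasible placement location")
    top = max(legal)  # subtract the max for numerical stability
    exps = [math.exp(l - top) if ok else 0.0 for l, ok in zip(logits, mask)]
    total = sum(exps)
    return [e / total for e in exps]


def toy_policy(state_features, weights, mask):
    """Hypothetical linear policy: one score per grid cell, then masked softmax."""
    logits = [sum(w * f for w, f in zip(cell_w, state_features)) for cell_w in weights]
    return masked_softmax(logits, mask)


def toy_value(state_features, value_weights):
    """Hypothetical linear value head: estimated reward for the current state."""
    return sum(w * f for w, f in zip(value_weights, state_features))


def sample_location(probs, rng):
    """Draw a cell index according to the policy's probabilities."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]
```

Masking keeps the agent from ever choosing an occupied or infeasible cell, which matches the idea of a distribution over *available* locations.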

Starting from an empty chip, the agent places components one at a time until the netlist is complete. It receives no reward until the end, when a negative weighted sum of proxy wirelength and congestion is computed. To guide the agent in choosing which components to place first, components are sorted by descending size: placing larger components first reduces the chance that no feasible location remains for them later.
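The episode described above can be sketched as a loop that sorts components by descending area, places them one at a time, and computes a single reward only at the end. In this sketch, proxy wirelength is assumed to be half-perimeter wirelength (HPWL), a standard placement proxy; the congestion estimate is simply passed in as a number, and the weights `w_wl` and `w_cong` and the `choose_cell` callback standing in for the trained policy are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Block:
    """Hypothetical component with a width and height in grid units."""
    name: str
    w: int
    h: int


def hpwl(nets, centers):
    """Half-perimeter wirelength: bounding-box width + height of each net."""
    total = 0.0
    for net in nets:
        xs = [centers[n][0] for n in net]
        ys = [centers[n][1] for n in net]
        total += (max(xs) - min(xs)) + (max(ys) - min(ys))
    return total


def place_sequentially(blocks, choose_cell):
    """Place blocks largest-first; choose_cell stands in for the RL policy."""
    order = sorted(blocks, key=lambda b: b.w * b.h, reverse=True)
    centers = {}
    for b in order:
        centers[b.name] = choose_cell(b, centers)
    return order, centers


def episode_reward(nets, centers, congestion, w_wl=1.0, w_cong=0.5):
    """End-of-episode reward: negative weighted sum of the two proxies.

    `congestion` is a placeholder estimate computed elsewhere (assumption).
    """
    return -(w_wl * hpwl(nets, centers) + w_cong * congestion)
```

Adjusting `w_wl` and `w_cong` is one simple way to express the kind of per-block priorities (e.g., timing-critical or power-constrained) that the researchers mention.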

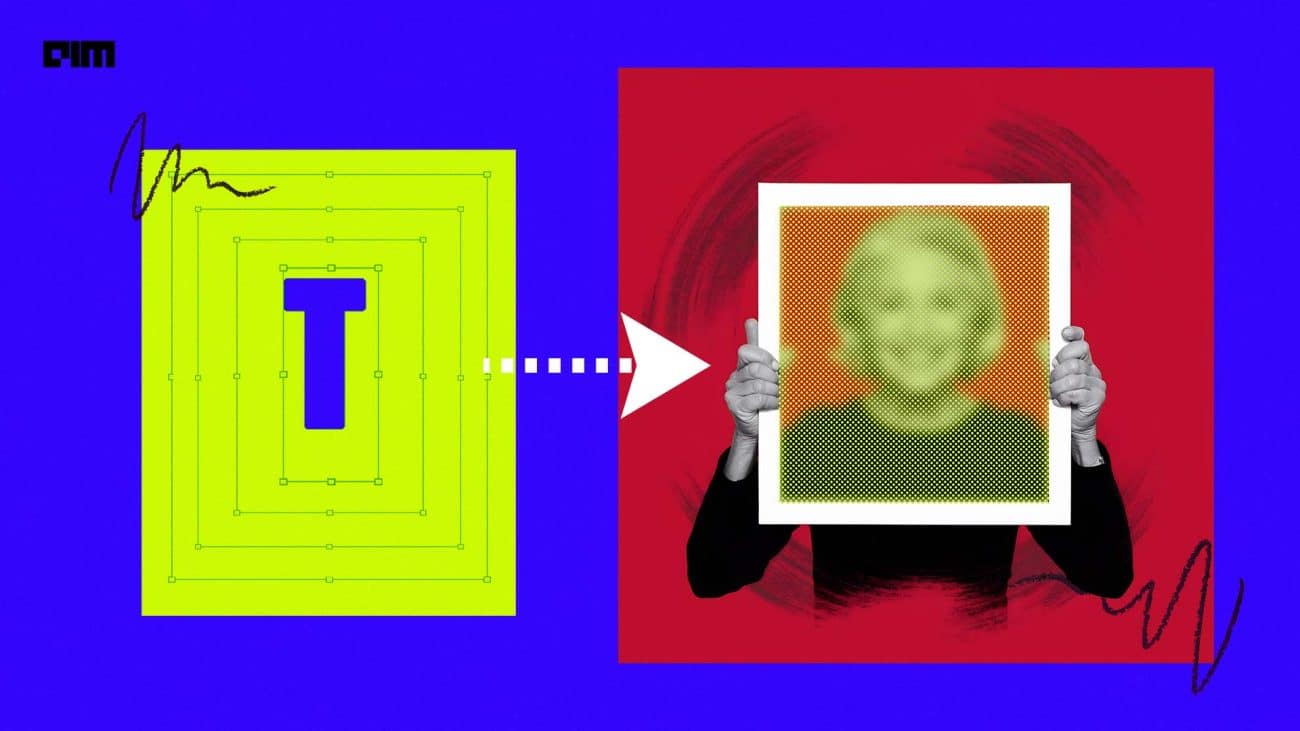

[Training data size versus fine-tuning performance. Image Credit: Google]

According to the team, training the agent required creating a data set of 10,000 chip placements, where the input is the state associated with a given placement and the label is the reward for that placement. To build it, the researchers picked five different chip netlists and applied an AI algorithm to generate 2,000 diverse placements for each one.
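The data set's shape (five netlists, 2,000 placements each, for 10,000 labelled examples) can be illustrated with a short sketch. The random placement generator and the `proxy_reward` label below are hypothetical stand-ins; the article does not specify which algorithm the researchers used to generate placements or how the reward was computed in detail.

```python
import random

NUM_NETLISTS = 5
PLACEMENTS_PER_NETLIST = 2_000


def random_placement(netlist_id, rng, num_blocks=8, grid=32):
    """Hypothetical generator: random grid cells (a real one would read the netlist)."""
    return [(rng.randrange(grid), rng.randrange(grid)) for _ in range(num_blocks)]


def proxy_reward(placement):
    """Hypothetical label: negative half-perimeter of all blocks' bounding box."""
    xs = [x for x, _ in placement]
    ys = [y for _, y in placement]
    return -((max(xs) - min(xs)) + (max(ys) - min(ys)))


def build_dataset(seed=0):
    """Build (state, label) pairs: 5 netlists x 2,000 placements = 10,000."""
    rng = random.Random(seed)
    data = []
    for netlist_id in range(NUM_NETLISTS):
        for _ in range(PLACEMENTS_PER_NETLIST):
            placement = random_placement(netlist_id, rng)
            state = (netlist_id, placement)  # input: state for this placement
            data.append((state, proxy_reward(placement)))  # label: its reward
    return data
```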

After testing, the co-authors report that as they trained the framework on more chips, they were able to speed up the training process and generate high-quality results faster. They also claim it achieved better PPA than leading baselines on in-production Google tensor processing units (TPUs), Google’s custom-designed AI accelerator chips.

According to the researchers, “Unlike existing methods that optimise the placement for each new chip from scratch, our work leverages knowledge gained from placing prior chips to become better over time.”

Additionally, “our method enables direct optimisation of the target metrics, such as wirelength, density, and congestion, without having to define … approximations of those functions as is done in other approaches. Not only does our formulation make it easy to incorporate new cost functions as they become available, but it also allows us to weigh their relative importance according to the needs of a given chip block (e.g., timing-critical or power-constrained),” concluded the researchers.