|

Listen to this story

|

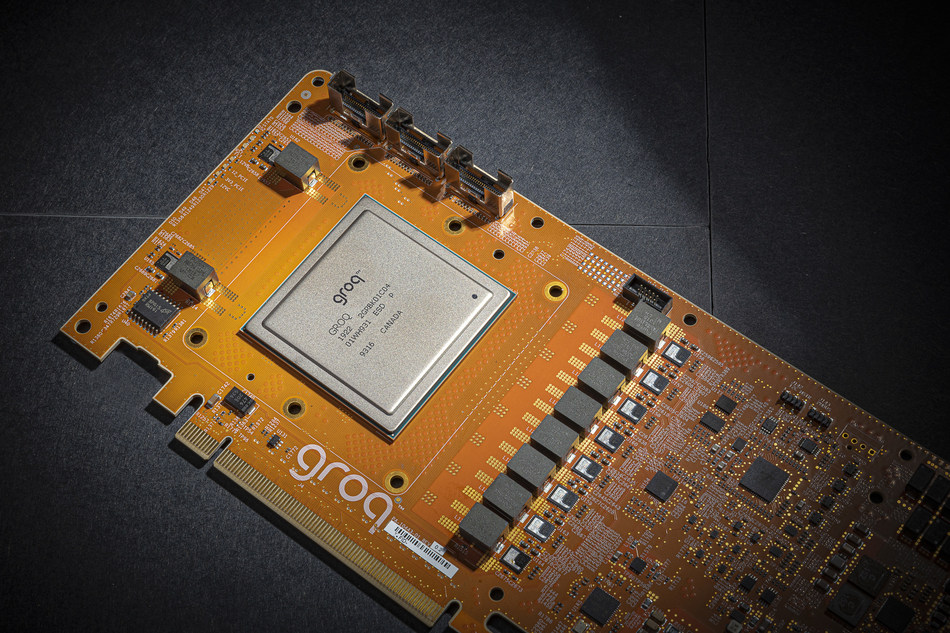

Groq recently introduced the Language Processing Unit (LPU), a new type of end-to-end processing unit system. It offers the fastest inference for computationally intensive applications with a sequential component, such as LLMs.

It has taken the internet by storm with its extremely low latency, serving at an unprecedented speed of almost 500 T/s.

The first public demo using Groq: a lightning-fast AI Answers Engine.

— Matt Shumer (@mattshumer_) February 19, 2024

It writes factual, cited answers with hundreds of words in less than a second.

More than 3/4 of the time is spent searching, not generating!

The LLM runs in a fraction of a second.https://t.co/dVUPyh3XGV https://t.co/mNV78XkoVB pic.twitter.com/QaDXixgSzp

This technology aims to address the limitations of traditional CPUs and GPUs for handling the intensive computational demands of LLMs. It promises faster inference and lower power consumption compared to existing solutions.

Wow, that's a lot of tweets tonight! FAQs responses.

— Groq Inc (@GroqInc) February 19, 2024

• We're faster because we designed our chip & systems

• It's an LPU, Language Processing Unit (not a GPU)

• We use open-source models, but we don't train them

• We are increasing access capacity weekly, stay tuned pic.twitter.com/nFlFXETKUP

Groq’s LPU marks a departure from the conventional SIMD (Single Instruction, Multiple Data) model employed by GPUs. Unlike GPUs, which are designed for parallel processing with hundreds of cores primarily for graphics rendering, LPUs are architected to deliver deterministic performance for AI computations.

Energy efficiency is another noteworthy advantage of LPUs over GPUs. By reducing the overhead associated with managing multiple threads and avoiding core underutilisation, LPUs can deliver more computations per watt, positioning them as a greener alternative.

Groq’s LPU has the potential to improve the performance and affordability of various LLM-based applications, including chatbot interactions, personalised content generation, and machine translation. They could act as an alternative to NVIDIA GPUs especially since A100s and H100s are in such high demand.

Groq was founded in 2016 by its chief Jonathan Ross. He initially began what became Google’s TPU (Tensor Processing Unit) project as a 20% project and later joined Google X’s Rapid Eval Team before founding Groq.