Deepfakes and face swapping have emerged as a major trend in the past few years. As outlandish as the technology sounded when it was first introduced, it has become more popular than ever. Social media is buzzing with images and videos of people swapping faces with, and impersonating, their favourite idols, whether politicians, top musicians, actors, or other highly admired personalities. Face swapping means interchanging your face with another person's, and when done with the right intentions it mostly produces hilarious pictures and videos. Face swap apps let you have a lot of fun on your own or with your friends and create content that could take social media by storm; impersonating a famous Hollywood actor or pop star has never been easier. Note, however, that the quality of the results depends entirely on the app's face-swapping capabilities. Deepfake technology takes this further: it can replace a face in a video so convincingly that the result appears completely authentic. Easily accessible and widely downloaded mobile apps built on the same concept, such as Reface and MSQRD, let people get a feel for what it would be like to be in the shoes of celebrities, but with their own face!

Deepfake, a technology that uses deep learning and neural networks, is an artificial intelligence (AI) based human image synthesis technique that combines and superimposes existing images and videos onto source images or videos, using a deep learning technique called a generative adversarial network (GAN). Combining an existing source video with an image in this way produces a "fake" video that shows one or more people performing actions at an event that never actually took place. A GAN consists of two neural networks trained against each other: one, the generator, produces the fakes, while the other, the discriminator, evaluates how real they look.
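To make the generator-versus-discriminator idea concrete, here is a minimal sketch of the standard GAN binary cross-entropy objectives in plain Python. It assumes a hypothetical discriminator has already produced the probability that each sample is real, and it uses the common "non-saturating" generator loss; it illustrates the textbook formulation, not the exact loss used by any particular deepfake tool.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy loss for the discriminator.

    d_real: probabilities the discriminator assigns to real samples being real.
    d_fake: probabilities it assigns to generated samples being real.
    The discriminator wants d_real -> 1 and d_fake -> 0.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)

def generator_loss(d_fake):
    """Non-saturating generator loss: the generator wants d_fake -> 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# A discriminator that separates real from fake well has a low loss...
print(discriminator_loss([0.9, 0.95], [0.1, 0.05]) < discriminator_loss([0.6, 0.5], [0.7, 0.8]))  # True
# ...while a generator whose fakes are judged "real" has a low loss.
print(generator_loss([0.9]) < generator_loss([0.1]))  # True
```

The two losses pull in opposite directions on `d_fake`, which is exactly the competition described above.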

Face swapping has been used in movies for years, but highly skilled video editors and computer-generated imagery (CGI) experts had to spend many hours and a lot of computing power to achieve even decent results. Today, new breakthroughs allow anyone with deep learning techniques and a powerful graphics processing unit (GPU) to create fake videos and images far superior to the traditional face swaps seen in older films. No heavy video editing skills are needed, because an algorithm handles the entire process automatically. However, these technologies are also being misused to spread hatred among the masses and to serve political agendas.

What is SimSwap?

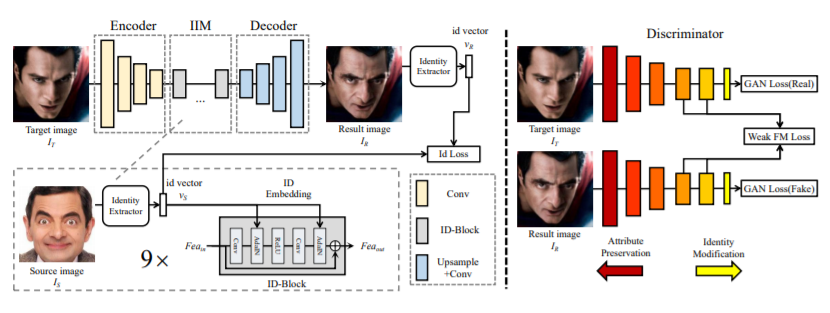

SimSwap is an efficient framework for generalized, high-fidelity face swapping. Previous approaches either could not generalize to arbitrary identities or failed to preserve attributes of the target face such as facial expression and gaze direction. SimSwap, by contrast, transfers the identity of an arbitrary source face onto an arbitrary target face while preserving the target face's attributes. It does so in two ways. First, the ID Injection Module (IIM) transfers the identity information of the source face into the target face at the feature level; this extends an identity-specific face-swapping architecture into a framework for arbitrary face swapping. Second, the Weak Feature Matching Loss efficiently helps the framework preserve the facial attributes implicitly.

To see why the IIM matters, consider the original DeepFakes architecture. There, an Encoder extracts features containing both identity and attribute information from the target face, and a Decoder converts these features into an image with the source's identity. Because only the Decoder knows the source identity, that identity gets integrated into the Decoder's weights, so a DeepFakes Decoder can be applied to only one specific identity.

Industrial face-swapping methods use advanced equipment to reconstruct the actor's face model and rebuild the scene's lighting conditions, which is beyond the reach of most people. Face swapping without high-end equipment, as in SimSwap, has therefore recently attracted researchers' attention. According to its authors, SimSwap achieves identity performance competitive with other methods while preserving attributes better than previous state-of-the-art methods.
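The SimSwap paper builds the IIM from ID-Blocks that use Adaptive Instance Normalization (AdaIN): the target's features are normalized and then re-scaled and shifted with parameters predicted from the source identity vector. The Weak Feature Matching Loss compares discriminator features of the swapped result and the target image using only the last few discriminator layers. The plain-Python sketch below illustrates both ideas on toy lists; the helper names, layer weights and sizes are hypothetical, and the real implementation works on PyTorch tensors with learned layers.

```python
import math

def instance_norm(channel, eps=1e-5):
    """Normalize one feature channel to zero mean and (roughly) unit variance."""
    mean = sum(channel) / len(channel)
    var = sum((x - mean) ** 2 for x in channel) / len(channel)
    return [(x - mean) / math.sqrt(var + eps) for x in channel]

def linear(vec, weights, bias):
    """A tiny fully connected layer: one output per row of `weights`."""
    return [sum(w * v for w, v in zip(row, vec)) + b for row, b in zip(weights, bias)]

def adain_inject(features, id_vector, w_gamma, b_gamma, w_beta, b_beta):
    """AdaIN-style identity injection (hypothetical layer weights).

    features:  target-face activations, one flat list per channel.
    id_vector: identity embedding of the source face.
    Each channel loses its own statistics (instance norm) and receives a scale
    (gamma) and shift (beta) predicted from the source identity instead.
    """
    gamma = linear(id_vector, w_gamma, b_gamma)
    beta = linear(id_vector, w_beta, b_beta)
    return [[g * x + b for x in instance_norm(ch)]
            for ch, g, b in zip(features, gamma, beta)]

def weak_feature_matching_loss(feats_result, feats_target, first_layer):
    """Mean absolute feature difference, summed over the last discriminator layers only.

    feats_result / feats_target: per-layer lists of flattened discriminator
    activations for the swapped result and the target image. Layers before
    `first_layer` are ignored.
    """
    loss = 0.0
    for res, tgt in zip(feats_result[first_layer:], feats_target[first_layer:]):
        loss += sum(abs(r - t) for r, t in zip(res, tgt)) / len(res)
    return loss

# Toy example: two target channels and a 2-d identity vector.
features = [[1.0, 2.0, 3.0], [0.0, 0.0, 4.0]]
identity = [0.5, -0.5]
eye = [[1.0, 0.0], [0.0, 1.0]]
injected = adain_inject(features, identity, eye, [1.0, 1.0], eye, [0.0, 0.0])
# The channel means now follow beta = [0.5, -0.5], predicted from the identity.
print([round(sum(ch) / len(ch), 6) for ch in injected])  # [0.5, -0.5]

# Only the last two of three discriminator layers are compared.
print(weak_feature_matching_loss([[0, 0], [1, 1], [2, 2]],
                                 [[5, 5], [1, 2], [2, 4]], first_layer=1))  # 1.5
```

Skipping the early layers means the loss does not force the result to copy the target's low-level appearance, which would fight against the new identity, while the deeper layers still encourage the target's attributes to survive.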

Creating Your Own Face Swapping Model

In this section, we will use a pretrained SimSwap model to perform face swapping on a video, replacing the faces in a target video with the face from a different image. The code follows the official implementation from the creators of SimSwap, whose GitHub can be accessed from the link here.

Getting Started

First, we clone SimSwap's GitHub repository using the following code:

!git clone https://github.com/neuralchen/SimSwap
!cd SimSwap && git pull

Then we install all the dependencies using the following code:

!pip install insightface==0.2.1 onnxruntime moviepy
!pip install googledrivedownloader
!pip install imageio==2.4.1

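Here, InsightFace provides the face detection and recognition models, onnxruntime runs those models (they are distributed in ONNX format), moviepy assembles the final video, and googledrivedownloader downloads files from Google Drive. Imageio is a Python library that provides an easy interface to read and write a wide range of image data, such as animated images and volumetric data.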

Next, we move into the cloned SimSwap repository:

import os

os.chdir("SimSwap") # work inside the cloned repository so its modules can be imported

!ls

Then we download the pretrained InsightFace "antelope" models and unzip them into the folder the face detector loads them from:

!wget --no-check-certificate "https://sh23tw.dm.files.1drv.com/y4mmGiIkNVigkSwOKDcV3nwMJulRGhbtHdkheehR5TArc52UjudUYNXAEvKCii2O5LAmzGCGK6IfleocxuDeoKxDZkNzDRSt4ZUlEt8GlSOpCXAFEkBwaZimtWGDRbpIGpb_pz9Nq5jATBQpezBS6G_UtspWTkgrXHHxhviV2nWy8APPx134zOZrUIbkSF6xnsqzs3uZ_SEX_m9Rey0ykpx9w" -O antelope.zip
!unzip ./antelope.zip -d ./insightface_func/models/

Next, we import the remaining dependencies for the face-swapping model:

import cv2
import torch
import fractions
import numpy as np
from PIL import Image
import torch.nn.functional as F
from torchvision import transforms
from models.models import create_model
from options.test_options import TestOptions
from insightface_func.face_detect_crop_multi import Face_detect_crop
from util.videoswap import video_swap
from util.add_watermark import watermark_image

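With everything imported, we define the image transforms used before and after the model. `transformer` only converts an image to a tensor; `transformer_Arcface` also normalizes it with the ImageNet per-channel mean and standard deviation before it is passed to the ArcFace identity network; and `detransformer` reverses that normalization.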

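# image transforms used for pre- and post-processing

# converts a PIL image to a (C, H, W) float tensor with values in [0, 1]
transformer = transforms.Compose([
    transforms.ToTensor(),
    #transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
])

# same conversion plus ImageNet mean/std normalization, used for the ArcFace input
transformer_Arcface = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
])

# undoes the normalization: first multiply by std, then add the mean back
detransformer = transforms.Compose([
    transforms.Normalize([0, 0, 0], [1/0.229, 1/0.224, 1/0.225]),
    transforms.Normalize([-0.485, -0.456, -0.406], [1, 1, 1])
])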
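Because `transforms.Normalize(mean, std)` computes `(x - mean) / std` per channel, the two-step `detransformer` first multiplies by the standard deviation and then adds the mean back. The plain-Python sketch below replays that arithmetic on a single RGB pixel, without torchvision, to show that the round trip restores the original values; `normalize` and `detransform` are illustrative helpers, not torchvision functions.

```python
MEAN = [0.485, 0.456, 0.406]  # ImageNet per-channel means (RGB)
STD = [0.229, 0.224, 0.225]   # ImageNet per-channel standard deviations

def normalize(pixel, mean, std):
    """Per-channel (x - m) / s, the formula transforms.Normalize applies."""
    return [(x - m) / s for x, m, s in zip(pixel, mean, std)]

def detransform(pixel):
    """Mirror of the two-step detransformer."""
    # Step 1: Normalize([0, 0, 0], [1/std]) gives (x - 0) / (1/s) = x * s
    step1 = normalize(pixel, [0.0, 0.0, 0.0], [1 / s for s in STD])
    # Step 2: Normalize([-mean], [1, 1, 1]) gives (x + m) / 1
    return normalize(step1, [-m for m in MEAN], [1.0, 1.0, 1.0])

pixel = [0.8, 0.5, 0.1]  # an RGB pixel after ToTensor(), values in [0, 1]
restored = detransform(normalize(pixel, MEAN, STD))
print([round(v, 6) for v in restored])  # [0.8, 0.5, 0.1]
```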

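Next, we set the paths to the source image and the target video. The source image provides the identity to transfer: we use an image of actor Robert Downey Jr. (Iron_man.jpg, from the repository's demo_file folder) to replace the faces in the target video.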

opt = TestOptions()

opt.initialize()

opt.parser.add_argument('-f') ## dummy arg: absorbs the '-f <kernel file>' argument Jupyter/Colab passes, which argparse would otherwise reject

opt = opt.parse()

opt.pic_a_path = './demo_file/Iron_man.jpg' ## source face image whose identity will be transferred

opt.video_path = './demo_file/multi_people_1080p.mp4' # target video path

opt.output_path = './output/demo.mp4' # path to save the output at

opt.temp_path = './tmp' # folder for intermediate frames

opt.Arc_path = './arcface_model/arcface_checkpoint.tar' # pretrained ArcFace identity-network checkpoint

opt.isTrain = False # inference only, no training
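The `-f` dummy argument works around how Jupyter and Colab start their kernels: the kernel process receives a `-f <connection file>` argument, and an argparse-based options class such as `TestOptions`, which parses the command line, rejects it as unrecognized. The stdlib sketch below reproduces the problem and the workaround with a stand-in parser; `build_parser`, `parses_ok` and the kernel path are hypothetical and not part of SimSwap.

```python
import argparse

def build_parser(accept_jupyter_flag):
    """A stand-in for an argparse-based options class (hypothetical)."""
    parser = argparse.ArgumentParser()
    if accept_jupyter_flag:
        # Same trick as opt.parser.add_argument('-f'): declare the flag so it is accepted.
        parser.add_argument('-f')
    return parser

def parses_ok(parser, argv):
    """Return True if argparse accepts argv, False if it would exit with an error."""
    try:
        parser.parse_args(argv)
        return True
    except SystemExit:
        return False

# The kind of arguments a notebook kernel is launched with (hypothetical path).
argv = ['-f', '/root/.local/share/jupyter/runtime/kernel-1234.json']
print(parses_ok(build_parser(False), argv))  # False (argparse also prints a usage error)
print(parses_ok(build_parser(True), argv))   # True
```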

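Next, we create the SimSwap model in evaluation mode, set up the InsightFace face detector, and detect, align and crop the face in the source image: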

crop_size = 224

torch.nn.Module.dump_patches = True

model = create_model(opt)

model.eval()

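app = Face_detect_crop(name='antelope', root='./insightface_func/models') # InsightFace models downloaded earlier

app.prepare(ctx_id= 0, det_thresh=0.6, det_size=(640,640)) # GPU 0, detection confidence threshold, detector input size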

pic_a = opt.pic_a_path

# img_a = Image.open(pic_a).convert('RGB')

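img_a_whole = cv2.imread(pic_a) # OpenCV loads the image in BGR channel order

img_a_align_crop, _ = app.get(img_a_whole,crop_size) # detect and align the face(s), cropped to crop_size x crop_size

img_a_align_crop_pil = Image.fromarray(cv2.cvtColor(img_a_align_crop[0],cv2.COLOR_BGR2RGB)) # first face, converted to RGB for PIL

img_a = transformer_Arcface(img_a_align_crop_pil) # normalized tensor of shape (3, 224, 224)

img_id = img_a.view(-1, img_a.shape[0], img_a.shape[1], img_a.shape[2]) # add a batch dimension: (1, 3, 224, 224)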

# move the identity image tensor to the GPU

img_id = img_id.cuda()
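Two details in this step are easy to miss: `det_thresh=0.6` discards face detections whose confidence is below 0.6, and OpenCV stores pixels in BGR order, which is why the crop is converted with `cv2.COLOR_BGR2RGB` before it goes to PIL. The plain-Python sketch below illustrates both ideas; the detection tuple format and the values are hypothetical, not InsightFace's actual output structure.

```python
def filter_detections(detections, det_thresh=0.6):
    """Drop detections whose confidence score is below det_thresh.

    detections: list of (score, (x1, y1, x2, y2)) tuples (hypothetical format).
    """
    return [d for d in detections if d[0] >= det_thresh]

def bgr_to_rgb(image):
    """Reverse each pixel's channel order, as COLOR_BGR2RGB does for 3-channel images."""
    return [[pixel[::-1] for pixel in row] for row in image]

# Hypothetical detector output: one clear face and one weak false positive.
raw = [(0.98, (120, 80, 260, 250)), (0.35, (400, 10, 430, 45))]
print(filter_detections(raw, det_thresh=0.6))  # [(0.98, (120, 80, 260, 250))]

# A 1x2 image in OpenCV's BGR order: a pure blue pixel, then a pure red one.
bgr_image = [[(255, 0, 0), (0, 0, 255)]]
print(bgr_to_rgb(bgr_image))  # [[(0, 0, 255), (255, 0, 0)]]
```

Raising the threshold trades missed faces for fewer false positives; skipping the channel conversion would hand the identity network a face with red and blue swapped.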

Extracting the Source Identity And Running the Swap

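# create the latent identity vector

img_id_downsample = F.interpolate(img_id, scale_factor=0.5) # 224x224 -> 112x112 input for ArcFace

latend_id = model.netArc(img_id_downsample) # identity embedding of the source face

latend_id = latend_id.detach().to('cpu')

latend_id = latend_id/np.linalg.norm(latend_id,axis=1,keepdims=True) # L2-normalize each embedding

latend_id = latend_id.to('cuda')

# run the swap on the target video frame by frame and save the result
video_swap(opt.video_path, latend_id, model, app, opt.output_path,temp_results_dir=opt.temp_path)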

Output:

input mean and std: 127.5 127.5
find model: ./insightface_func/models/antelope/glintr100.onnx recognition
find model: ./insightface_func/models/antelope/scrfd_10g_bnkps.onnx detection
set det-size: (640, 640)
0%| | 0/594 [00:00<?, ?it/s](142, 366, 4)
100%|██████████| 594/594 [08:19<00:00, 1.19it/s]
[MoviePy] >>>> Building video ./output/demo.mp4
[MoviePy] Writing audio in demoTEMP_MPY_wvf_snd.mp3
100%|██████████| 438/438 [00:00<00:00, 635.28it/s][MoviePy] Done.
[MoviePy] Writing video ./output/demo.mp4
100%|██████████| 595/595 [00:55<00:00, 10.69it/s]
[MoviePy] Done.
[MoviePy] >>>> Video ready: ./output/demo.mp4
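The identity step divides the ArcFace embedding by its L2 norm, so identities are compared by direction rather than magnitude. The plain-Python sketch below shows that normalization and the cosine similarity it enables; the SimSwap paper's identity loss is defined as 1 minus the cosine similarity between the identity vectors of the result and the source. The 4-dimensional vectors are hypothetical toy values standing in for real 512-dimensional embeddings, and the helpers are illustrative, not SimSwap code.

```python
import math

def l2_normalize(vec):
    """Scale a vector to unit length (one row of the np.linalg.norm normalization)."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec]

def cosine_similarity(a, b):
    """Dot product of the two L2-normalized vectors."""
    a, b = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(a, b))

def identity_loss(result_id, source_id):
    """1 - cosine similarity: 0 when the two identities point the same way."""
    return 1.0 - cosine_similarity(result_id, source_id)

print(l2_normalize([3.0, 4.0]))  # [0.6, 0.8]

# Toy identity embeddings (hypothetical values).
source_id = [1.0, 0.0, 0.0, 0.0]
same_person = [2.5, 0.0, 0.0, 0.0]   # same direction, different magnitude
other_person = [0.0, 1.0, 0.0, 0.0]  # orthogonal direction

print(identity_loss(same_person, source_id))   # 0.0
print(identity_loss(other_person, source_id))  # 1.0
```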

The face-swapped video is saved at the path set in opt.output_path (./output/demo.mp4).

Isn’t That Cool?

EndNotes

In this article, we explored the domain of face swapping and deepfakes using the SimSwap framework. We ran a pretrained SimSwap model that replaced the faces in a target video with the face from our source image. You can try different faces and more complex images to see how SimSwap behaves with them. You can find the link to this Colab implementation here.
