Artificial Intelligence (AI) has been the star technology of the past few years, and it is easy to see why. From smartphones to PCs, some of the most game-changing applications are powered by AI. There is also a groundswell of interest in applications such as video recognition, speech recognition and object detection, which will find use cases in smartphones, security and self-driving cars.

Many AI applications have become ubiquitous in our day-to-day lives, for example Facebook photo-tagging or the virtual assistants on our smartphones. Gartner predicts the business value created by AI will reach $3.9 trillion in 2022. The use cases expected to drive the most investment are intelligent automation on the enterprise side, and product recommendations and digital assistants on the consumer side. Most of these compelling use cases depend on computational power and deep learning algorithms that will drive the next phase of growth in AI.

So, while the data science community is overwhelmed by AI’s possibilities, what’s often less clear is the significance of the hardware layer powering these applications. In this article, we explore why data scientists today demand a full-stack solution across hardware platforms, tools and libraries to ease application development. We also look at the shift in revenue towards inference and the need for software and frameworks that can utilise the power of high-end processors.

AI Hardware & Software Presents The Biggest Untapped Opportunity

Given the massive potential of AI, trendsetting companies have taken concrete steps to capture real value from it and win in this market. End users of both enterprise AI and consumer AI expect AI systems to deliver highly accurate outcomes in near-real-time. The amount of data available for training these systems is increasing at a steady rate. Since data availability is no longer a major bottleneck, the computing infrastructure (hardware plus software) will be the key differentiator in meeting the dual requirements of speed and accuracy.

What Forward-thinking Enterprises Want — To Build Highly Accurate Models

In every business domain, the AI systems that deliver state-of-the-art performance are based on Deep Learning (DL). DL algorithms are extremely computationally intensive and have fuelled rapid advances in processor technology in general, and in the processors used in AI systems in particular.

The most crucial need for businesses today is to build highly accurate models. For an AI system to be highly accurate, the trained models need to generalize well and the training infrastructure needs to scale so that it can seamlessly ingest more data. Then, for the AI system to make predictions in near-real-time, the inference infrastructure needs to have the appropriate computational sophistication.

Hardware Is The Key Differentiator In AI

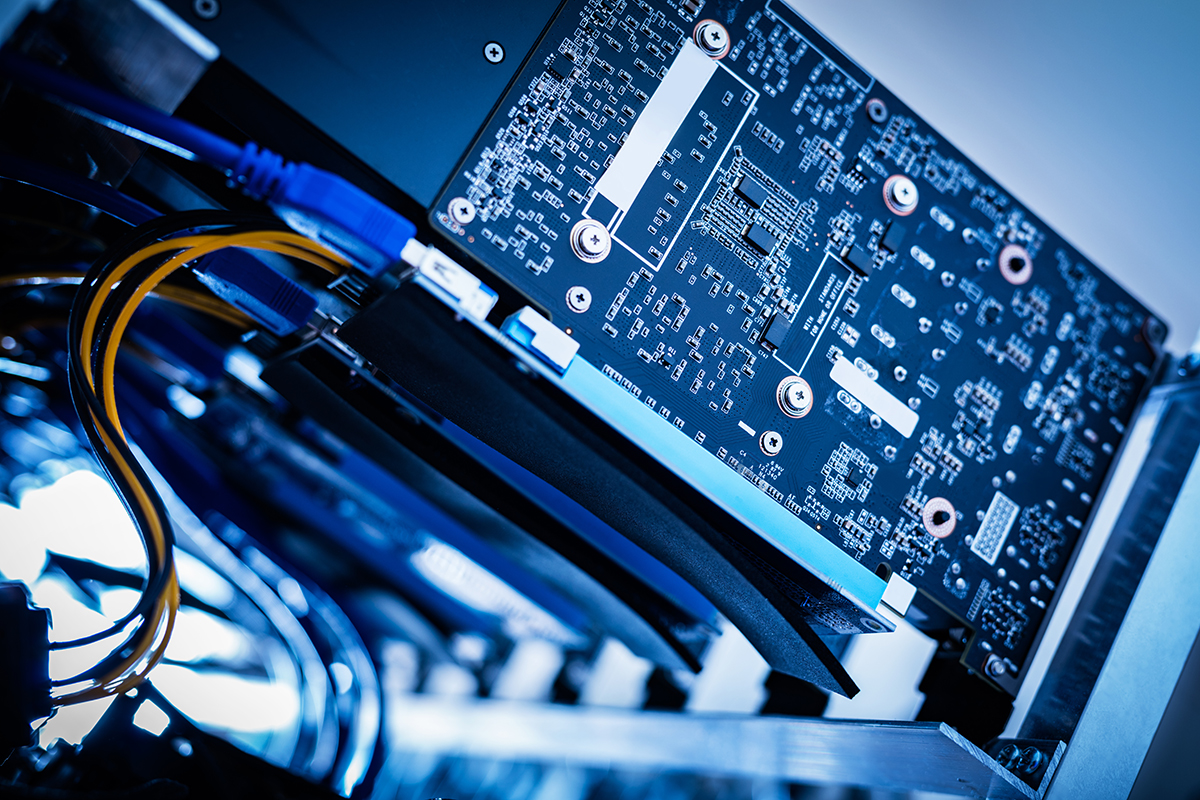

A typical data science infrastructure relies on a combination of GPUs and CPUs, which are quite different architecturally. CPUs, the traditional workhorses of computing, are designed for efficient computation of complex operations, whereas GPUs are best at simpler, elemental operations that need to be repeated at massive scale. GPUs started off as processors for rendering high-fidelity graphics but have since grown into a class of their own. They achieve their efficiency through built-in parallelization.

AI system training is typically done on tens of millions of training samples, so the same set of features needs to be computed for each sample and the same set of model parameters needs to be updated over and over again. GPUs are therefore well suited to these repetitive tasks. The parallelization that GPUs provide also helps complete training tasks relatively quickly, which in turn makes it possible to explore many more training architectures.
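The repetitive structure described above can be illustrated with a minimal Python sketch. It assumes a toy linear model with a squared-error loss, and the data, function names and worker count are all hypothetical. Each per-sample gradient is computed independently of the others, which is what makes the work easy to spread across parallel hardware. Python threads are used here only to show that independence; they are not expected to deliver a GPU-like speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def sample_gradient(weights, features, target):
    """Gradient of the squared error 0.5 * (w.x - y)^2 for a single sample."""
    prediction = sum(w * x for w, x in zip(weights, features))
    error = prediction - target
    return [error * x for x in features]


def mean_gradient(weights, samples, workers=1):
    """Average the per-sample gradients, optionally using several workers."""
    def compute(sample):
        features, target = sample
        return sample_gradient(weights, features, target)

    if workers > 1:
        # Each sample is handled independently; map() keeps the input order.
        with ThreadPoolExecutor(max_workers=workers) as pool:
            grads = list(pool.map(compute, samples))
    else:
        grads = [compute(sample) for sample in samples]

    count = len(grads)
    return [sum(g[i] for g in grads) / count for i in range(len(weights))]


# Hypothetical toy data generated from y = 2*x0 + 1*x1.
samples = [
    ([1.0, 2.0], 4.0),
    ([2.0, 0.0], 4.0),
    ([0.0, 3.0], 3.0),
    ([1.0, 1.0], 3.0),
]
weights = [0.0, 0.0]

serial = mean_gradient(weights, samples, workers=1)
parallel = mean_gradient(weights, samples, workers=4)
print(serial, parallel)
```

Because the same small computation is applied to every sample and the results are only combined at the end, the serial and parallel runs produce the same averaged gradient.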

Inference Will Drive The AI Market Forward

Model training and inference (i.e., prediction) happen in very different contexts, so their hardware requirements are also quite different. Inference typically runs on only a few samples at a time, and those samples are captured across a wide variety of conditions, so there is often not enough parallel work to warrant a GPU. For this reason, a CPU is almost always preferred for inference. Cost matters too: GPUs are typically a lot more expensive than CPUs. Another key point is that inference typically happens on more diverse and less expensive devices (such as smartphones, drones and edge devices), whereas training typically happens on dedicated hardware.
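The point about parallelization can be made concrete with a deliberately simplified calculation. The sketch below assumes a toy model in which a processor has a fixed number of parallel lanes, each lane handles one sample, and the hardware runs in full passes. The lane counts and batch sizes are hypothetical, and real processors also parallelize work within a single sample, so the numbers only illustrate the trend.

```python
import math


def lane_utilization(batch_size, parallel_lanes):
    """Fraction of lanes doing useful work in a toy lock-step model.

    Assumes one sample per lane and that the hardware always runs full
    passes of `parallel_lanes` lanes. Both are simplifications.
    """
    passes = math.ceil(batch_size / parallel_lanes)
    return batch_size / (passes * parallel_lanes)


# Hypothetical lane counts, chosen only to contrast a wide and a narrow device.
WIDE_DEVICE_LANES = 1024   # stands in for a GPU-like processor
NARROW_DEVICE_LANES = 8    # stands in for a CPU-like processor

training_batch = 4096      # many samples processed together
inference_batch = 4        # only a few samples at a time

for name, lanes in [("wide", WIDE_DEVICE_LANES), ("narrow", NARROW_DEVICE_LANES)]:
    print(
        name,
        "training:", lane_utilization(training_batch, lanes),
        "inference:", lane_utilization(inference_batch, lanes),
    )
```

Under these assumptions the wide device is fully used by the large training batch but mostly idle on the small inference batch, while the narrow device keeps half of its lanes busy on that same inference batch.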

It is interesting to note that the industry is working towards making GPUs the go-to processors for inference as well. Among other things, iPhones now have GPUs. Going forward, inference is expected to become the biggest revenue contributor in AI.

What’s The Next Frontier In AI — Cohesive Software Environment

Want to know why hardware enterprises are racing to build next-gen processors that are optimized for popular Deep Learning frameworks? Data scientists don’t want to spend time restructuring high-level AI/ML code so that it matches the optimizations of the underlying hardware. Moreover, data scientists and machine learning engineers are not necessarily trained to write code that can inherently exploit the optimization capabilities of these CPUs and GPUs.

That’s why the next wave of innovation will come from AI frameworks developed around these different processors. To fully utilise the power of high-end processors, be they CPUs or GPUs, data scientists need supporting software and frameworks, because most data scientists and machine learning engineers don’t want to spend extra effort acquiring low-level optimization skills. This makes it all the more important that next-gen processors come with a wide array of frameworks that can interpret ‘high-level’ AI/ML code and port it into optimized routines that use all the capabilities of the underlying processor.

These speedup frameworks can be provided either by the creators of the processors themselves or by sponsored communities of coders who contribute to them.

For example, some of the most popular acceleration libraries in use today are:

- a) The clDNN library by Intel, a set of compute kernels that accelerate deep learning and AI models

- b) Intel® MKL-DNN, actively supported by the Intel coding community for both CPUs and GPUs, a set of acceleration routines optimized for a variety of deep learning architectures, and especially for popular ones such as AlexNet and GoogLeNet
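
The idea of a framework that ports high-level code into processor-specific routines can be sketched as a small dispatch table. The Python below is a hypothetical illustration only: the registry, the device names and both kernels are invented for this example and do not reflect the API of clDNN, MKL-DNN or any real framework.

```python
# Registry mapping (operation, device) to a concrete kernel.
# All names here are hypothetical and exist only for illustration.
KERNELS = {}


def register(op, device):
    """Decorator that records a kernel for a given operation and device."""
    def decorator(fn):
        KERNELS[(op, device)] = fn
        return fn
    return decorator


@register("dot", "generic")
def dot_generic(a, b):
    # Portable fallback: one multiply-add per loop step.
    total = 0.0
    for x, y in zip(a, b):
        total += x * y
    return total


@register("dot", "vector_unit")
def dot_vector_unit(a, b):
    # Stand-in for a vendor-tuned kernel: walks the inputs in blocks of 4,
    # the way a routine written for a 4-wide vector unit might.
    total = 0.0
    for i in range(0, len(a), 4):
        total += sum(x * y for x, y in zip(a[i:i + 4], b[i:i + 4]))
    return total


def dot(a, b, device="generic"):
    """High-level call: the caller names a device, not a kernel."""
    kernel = KERNELS.get(("dot", device), KERNELS[("dot", "generic")])
    return kernel(a, b)


values = [1.0, 2.0, 3.0, 4.0, 5.0]
ones = [1.0, 1.0, 1.0, 1.0, 1.0]
print(dot(values, ones), dot(values, ones, device="vector_unit"))
```

The data scientist only ever calls `dot`; which routine runs is decided by the framework, and an unknown device falls back to the generic kernel.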

Finally, the next big push in the industry is towards building processors that are specifically optimized for certain kinds of AI workloads, such as Intel’s Nervana Neural Network Processor (NNP-I) and Google’s TPUs.

Bottom Line

Semiconductor companies and AI computing players need to align their strategy around hardware innovations and software that reduces complexity and appeals widely to the developer ecosystem. To win the developer community over, hardware players need to offer simpler interfaces and a suite of software platforms that is compatible with multiple compute architectures. Hardware companies should also consider how to include application-specific capabilities that could see high demand over the next few years.