Over the years, containers have become essential because they give developers more control over resources and over how applications are managed on clouds such as Azure or AWS. This lets them build powerful, scalable applications without overspending on storage, networking and compute resources. Cloud-native companies are helping enterprise customers move their workloads to the cloud and adopt multi-cloud and hybrid architectures.

There was a period when containers weren’t seen as an industry-changing force. Over time, however, container platforms like Kubernetes have grown into a new kind of operating system for the cloud, with full support from the major cloud providers.

Containers provide operating-system-level virtualisation that allows developers to run an application and its dependencies in isolated processes. This means that containers can package a program’s code, configuration and dependencies into individual building blocks that deliver a consistent environment wherever they run.

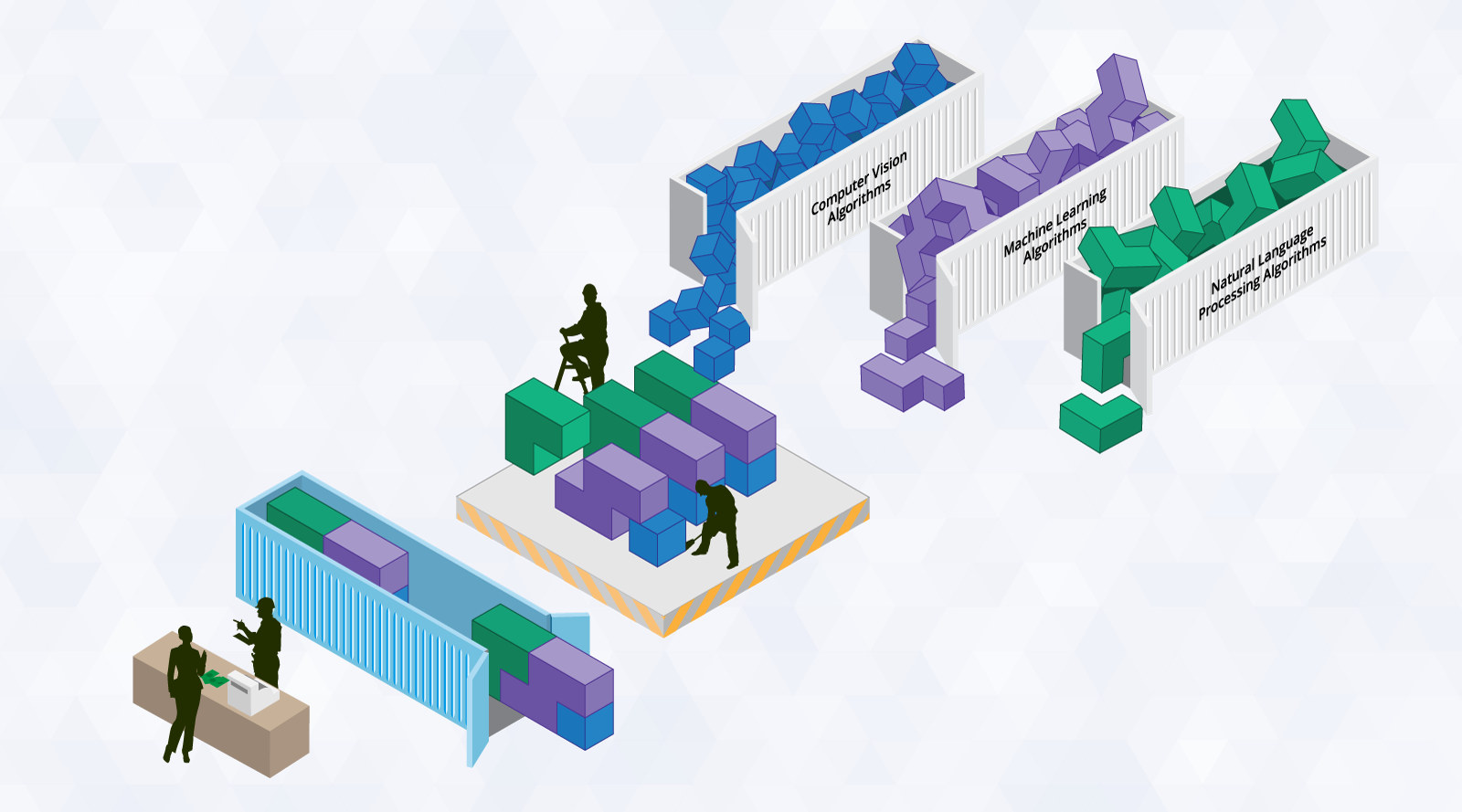

Building Unified Architecture For AI

Developers can run an application as an independent unit on a virtualised OS, which makes it easy to move from one environment to another and removes the dependency on the underlying hardware or cloud platform. This gives applications a great deal of flexibility, resource efficiency and portability. It is this nature of containers that, combined with open-source technologies, allows businesses around the world to build innovative applications.

Container platforms such as Kubernetes and Docker are helping build a unified architecture for AI applications. Enterprise platforms built on containerisation are effective at collecting, organising and processing data for analytics, machine learning and deep learning models.

For example, Kubeflow is an open-source project that began as a way to run TensorFlow deep learning workloads on top of Kubernetes and has since grown into a broader machine learning toolkit. It makes it easier to build production ML deployments on the cloud using containers.

How Custom Containers Can Help In Building Distributed AI Models

For AI/ML applications that require access to data, experts say a data fabric can ensure that these containerised apps have a uniform view of the data regardless of where they are deployed, whether on-premises or in the cloud. Because containers themselves are short-lived, this requires the data, and its configuration, to persist independently of any individual container.

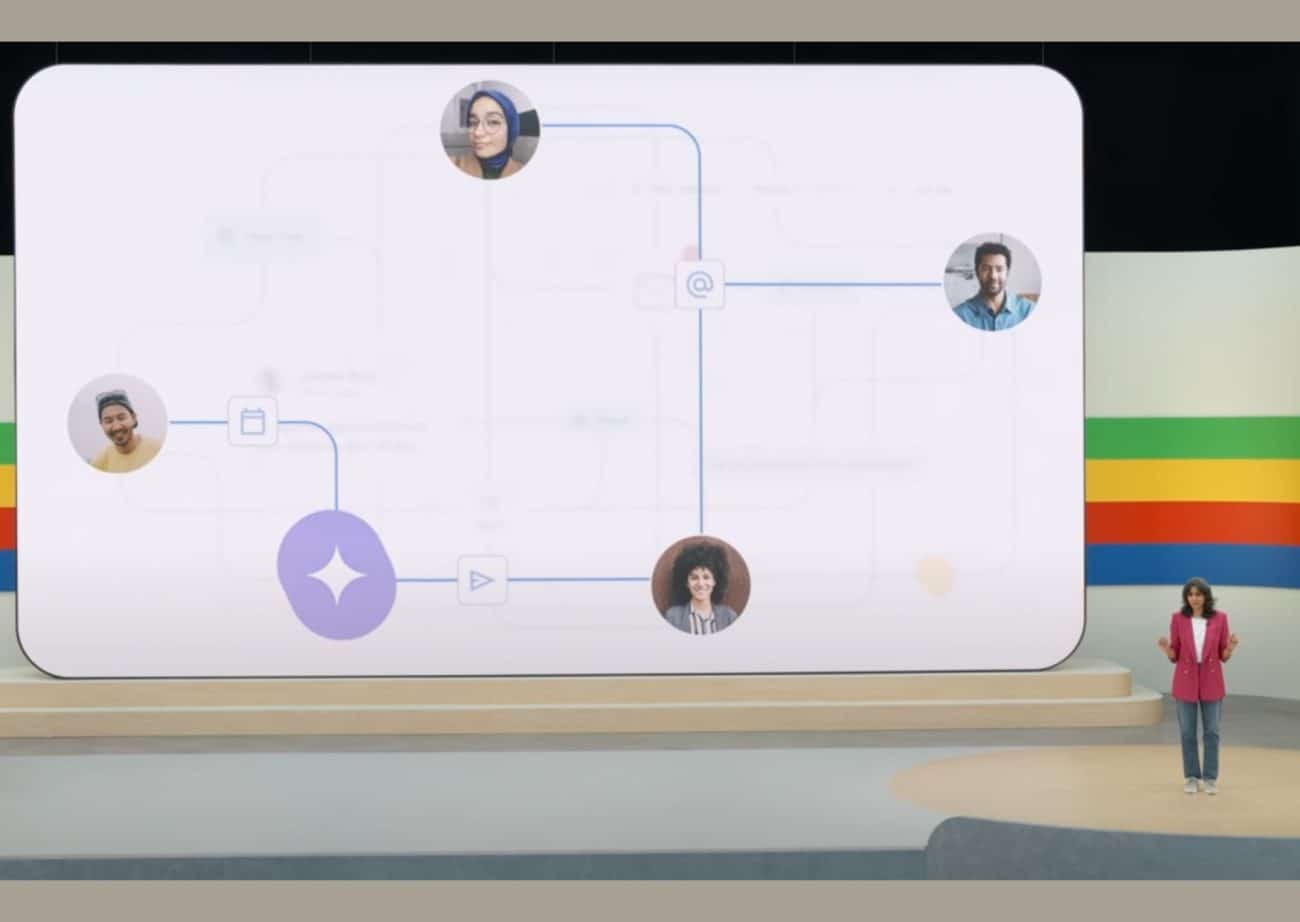

For AI apps running on cloud platforms, container management systems such as Kubernetes can help scale the apps and make more data capacity available to machine learning models through containerised clusters, in ways that are hard to achieve with conventional on-prem systems. Container platforms also provide built-in mechanisms for external and distributed data access, so you can use common data-oriented interfaces that support many data models.
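Kubernetes' Horizontal Pod Autoscaler is one concrete mechanism behind this kind of scaling. The Kubernetes documentation describes its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change made while the ratio stays within a tolerance (0.1 by default). The Python sketch below reproduces only that rule under simplifying assumptions: the function name `desired_replicas` and the min/max replica defaults are hypothetical, and the real controller also accounts for pod readiness, missing metrics and stabilisation windows.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     tolerance: float = 0.1,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Simplified sketch of the Horizontal Pod Autoscaler replica rule.

    Hypothetical helper: ignores pod readiness, missing metrics and
    stabilisation windows that the real Kubernetes controller considers.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")

    # How far the observed metric is from the target (e.g. average CPU %).
    ratio = current_metric / target_metric

    # Inside the tolerance band the replica count is left unchanged.
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)

    # Clamp to the bounds configured on the autoscaler (illustrative defaults).
    return max(min_replicas, min(max_replicas, desired))


# Example: 4 model-serving pods averaging 90% CPU against a 60% target.
print(desired_replicas(4, 90.0, 60.0))  # 6
```

The tolerance band keeps the replica count from oscillating on small metric fluctuations, while the min/max bounds cap how far a traffic spike can scale out a workload.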

According to Red Hat, its container services are proving very valuable in helping organisations worldwide accelerate the artificial intelligence and machine learning lifecycle. ExxonMobil, BMW, Volkswagen and the Ministry of Defense (Israel) are among the organisations that have operationalised Red Hat OpenShift, which Red Hat describes as an industry-leading Kubernetes-based container platform, to accelerate data science workflows and build intelligent applications.

Containers Are Important For Data Security In Applications

The unique properties of the cloud mean that developers are increasingly using Kubernetes and containers to modernise applications, which is changing the nature of the workloads that need to be secured. Safeguarding these workloads is central to the security of applications and data in every organisation. Containerised applications running on Kubernetes allow security capabilities to be built into the fabric of existing IT and DevOps ecosystems.

Container platforms like Kubernetes and Docker, along with technology and microservices companies, are working on additional capabilities, from management and monitoring to security and access control, for building business-scale AI applications that can be put into production.