With Google AI conducting many studies, a considerable amount of research has gone into understanding how machines can imitate human – or animal – behavior. Most recently, the company developed a system that learns from the motions of animals to give robots greater ‘agility’. Developing a robot that can move like animals/humans and one that can replicate agile/complex motions opens up various possibilities. One practical use case could be to leverage its help for sophisticated tasks. But designing such robots is not easy.

While in the past, Google explored the possibility of making a robot learn how to walk from scratch, in their latest attempt, they explore the idea of making a four-legged robot learn agile behaviors by imitating motions from real animals like trotting and hopping.

The Research

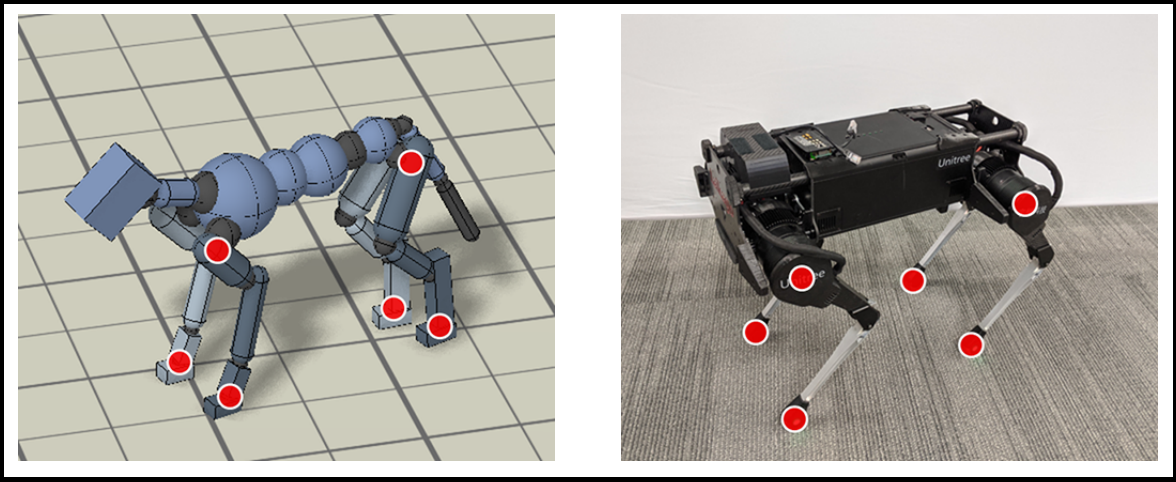

To counter problems like inefficient algorithms and hardware, the teams’ framework takes motion capture clips of a dog and uses reinforcement learning. The clip provides the system with different reference motions. It helps researchers teach a four-legged Unitree Laikago robot to perform a range of behaviors, like hopping, turning and speed walking (4.18 km/hr).

First, Google AI’s research team compiled a data set of real dogs performing various tasks/skills. Next, by using the different motions in the reward function – which describes how agents ought to behave – the researchers used about 200 million samples to train the simulated robot to imitate the motion skills, in this case, the motions of a dog. The training took place in a physics simulation so that the pose of the reference motions could be carefully tracked.

But the problem with simulators is that they provide a rough idea of approximation of the real-world. To find a solution to this problem, the researchers employed an adaptation technique that randomized the dynamics of the simulation. To give an example, the technique randomized the physical quantities of the robots, like its mass and friction. Values like these were mapped using an encoder to a numerical representation, which is called encoding, and this encoding was passed on as an input to the root control policy. But the encoder was removed during deployment, and the researchers directly searched for a set of variables that allowed these robots to execute skills successfully.

The system, according to the team, was able to adapt the policy to the real-world using a clip from real-world data which was under eight minutes. The real-world data has approximately 50 trials, and they demonstrated that the real-world robot learned to imitate various motions from a dog and excellent artist animated keyframe motions. The motions included pacing, trotting and spinning action, as well as a dynamic hop-turn.

Final Thoughts

Although this approach holds a lot of promise, it comes with a few challenges. The control policy can only learn to hop, spin, trot and walk but, imitating an animal naturally means doing more than that. The researchers say that while the system has successfully learned policies for a diverse set of behaviors, due to limitations in hardware and algorithms, they have not been able to learn more dynamic behaviors, like large jumps and runs. Also, the behaviors learned are not stable when compared to the manually-designed controllers.

While the team encounters these problems, they believe that reproducing complex behaviors and improving the robustness of the system will eventually lead to more complex real-world applications. In future, they expect to make the system learn from video clips as much as possible.