Intel, along with the Georgia Institute of Technology (Georgia Tech), recently won a multimillion-dollar contract from the US Defense Advanced Research Projects Agency (DARPA). Under the four-year contract, the two will work on DARPA's ‘Guaranteeing Artificial Intelligence (AI) Robustness against Deception’ (GARD) program.

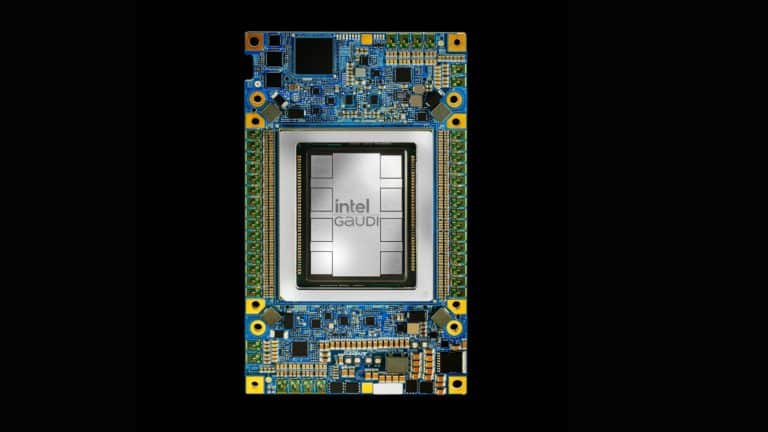

According to Intel, it is the prime contractor in the multimillion-dollar joint deal, which is aimed at improving cybersecurity defences against spoofing attacks on machine learning (ML) systems.

Spoofing attacks can alter and imperil how the ML algorithms in an autonomous system interpret data. Military systems are also vulnerable to such attacks, which can put extremely sensitive information at risk and potentially compromise the systems themselves.

What Is GARD?

DARPA is the US Department of Defense agency responsible for making significant investments in advanced technologies for American national security. The GARD program aims to harden AI and ML models against possible attacks. DARPA started the program because adversarial attacks, if not countered, can create instability and security concerns.

GARD plans to address ML defence differently, by developing broad-based defence mechanisms rather than defences tied to one specific attack. This includes addressing the attacks likely in a given scenario that could lead an AI model to misclassify or misinterpret data.

The deal is seen as a significant one for Intel, as it demonstrates the company's ML prowess in dealing with cybersecurity issues.

“Intel and Georgia Tech are operating together to advance the ecosystem’s collective knowledge of and ability to mitigate against AI and ML vulnerabilities. Through innovative research in coherence techniques, we are co-operating on an approach to enhance object detection and to advance the ability for AI and ML to react to adversarial attacks,” said Jason Martin, principal engineer at Intel Labs and Intel's principal investigator for the DARPA GARD program.

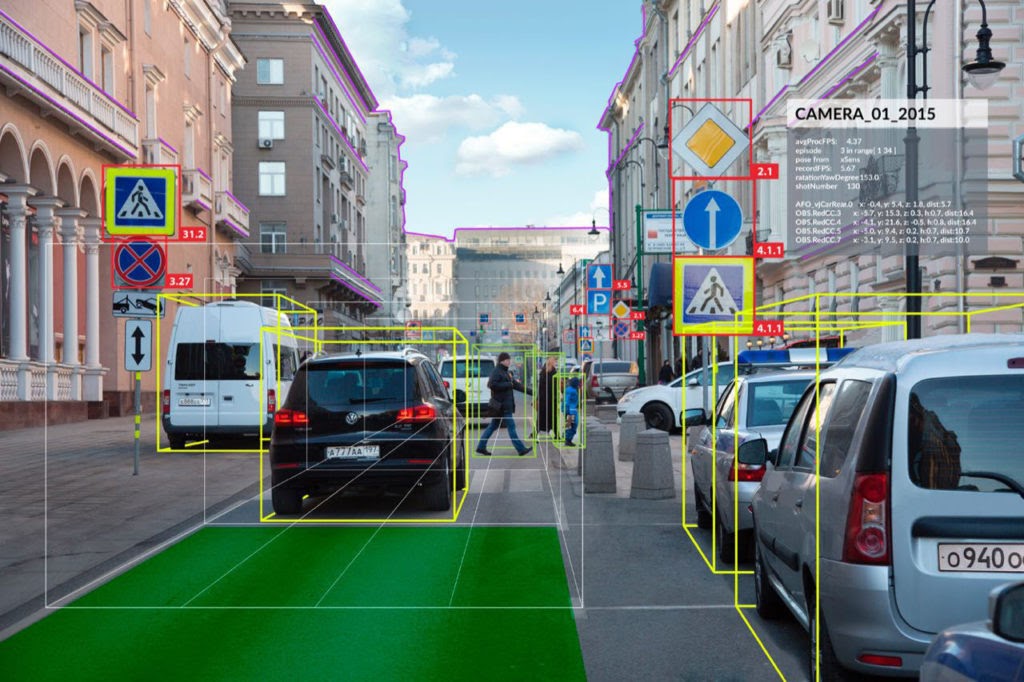

In the initial stage of GARD, Intel and Georgia Tech are improving object detection technologies through spatial, temporal and semantic coherence for both still images and videos.

Caption: Intel Labs members show an illustration of an artificial intelligence system getting confused by an adversarial T-shirt. (Source: Intel Corporation)
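The article does not say how Intel and Georgia Tech implement coherence checks. As a rough illustration of the temporal part only, the sketch below assumes that an object's label should stay stable across neighbouring video frames and flags any frame whose label disagrees with a clear majority of its neighbours. The function name `flag_incoherent_frames`, the `window` parameter and the sample labels are all hypothetical.

```python
from collections import Counter

def flag_incoherent_frames(labels, window=2):
    """Return indices of frames whose label disagrees with the majority
    label of the neighbouring frames (up to `window` frames on each side)."""
    flagged = []
    for i, label in enumerate(labels):
        neighbours = labels[max(0, i - window):i] + labels[i + 1:i + 1 + window]
        if not neighbours:
            continue
        majority, count = Counter(neighbours).most_common(1)[0]
        # Flag only when a strict majority of neighbours agree on a different label.
        if label != majority and count > len(neighbours) / 2:
            flagged.append(i)
    return flagged

# Hypothetical per-frame labels for one tracked object in a short video clip.
frame_labels = ["person", "person", "person", "bird", "person", "person", "person"]
suspicious = flag_incoherent_frames(frame_labels)
print(suspicious)
```

A single frame in which a tracked "person" briefly becomes a "bird" is physically implausible, so it is treated as a possible sign of tampering or misdetection rather than accepted at face value.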

Why Is This Important?

ML enables systems to learn over time from new data and experiences, and one of its most common use cases is object recognition. ML is now so pervasive in autonomous systems that most car companies today offer models that use it. Advanced sensors such as LIDAR and state-of-the-art camera systems collect data that is fed into AI/ML systems, which rely on object detection to achieve intelligent automation.

Problems arise when small changes at the pixel level cause an AI system to misinterpret or mislabel an image, or when subtle modifications to real-world objects throw off AI perception systems. DARPA wants to prevent precisely these vulnerabilities so that autonomous systems can be fully trusted and defended against adversaries.
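To make the pixel-level problem concrete, here is a minimal sketch of how a tiny, bounded change to every pixel can flip a classifier's decision. It uses a made-up linear scorer over eight pixel values and a sign-based perturbation in the style of the well-known fast gradient sign method; the weights, bias, image and labels are invented for illustration and do not come from Intel, DARPA or any real perception system.

```python
# Toy illustration only: a hand-written linear "classifier" over 8 pixel values.
# All numbers below are made up; no real model or dataset is involved.

def score(weights, bias, pixels):
    """Weighted sum of pixel intensities plus a bias term."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias

def classify(weights, bias, pixels):
    return "stop sign" if score(weights, bias, pixels) > 0 else "not a stop sign"

def sign(value):
    return (value > 0) - (value < 0)

def perturb(weights, pixels, epsilon):
    """Shift each pixel by at most epsilon in the direction that lowers the
    score, keeping every pixel inside the valid [0, 1] range."""
    return [min(1.0, max(0.0, p - epsilon * sign(w)))
            for w, p in zip(weights, pixels)]

weights = [0.9, -0.6, 0.8, -0.7, 0.5, -0.4, 0.6, -0.5]
bias = -0.6
image = [0.6, 0.4, 0.6, 0.4, 0.6, 0.4, 0.6, 0.4]

adversarial = perturb(weights, image, epsilon=0.05)
largest_change = max(abs(a - b) for a, b in zip(adversarial, image))

print(classify(weights, bias, image))        # label for the clean image
print(classify(weights, bias, adversarial))  # label after the perturbation
print(largest_change)                        # no pixel moved by more than epsilon
```

Each pixel moves by only 0.05 on a 0 to 1 scale, yet because every change pushes the score in the same direction, the small shifts add up and the label flips. Real attacks apply the same idea to deep networks with far more pixels, where the per-pixel change can be too small for a person to notice.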

Even though adversarial attacks are infrequent, they risk corrupting or altering AI/ML systems by changing how the algorithm interprets data. This matters because AI/ML models have become ubiquitous, given their use in autonomous systems in both civilian and military applications. One common example is self-driving cars, which depend on object detection to understand their surroundings.

According to Intel, current defence technologies are designed to protect against pre-defined adversarial attacks but remain vulnerable to attacks that fall outside their design parameters.

Due to its comprehensive architectural reach and security research, Intel is uniquely placed to support further advancements in AI and ML systems that can defend against spoofing attacks.