Intel®, in collaboration with Analytics India Magazine, is organising a oneAPI workshop on Intel® Neural Compressor on May 13th from 3:00 PM to 5:00 PM.

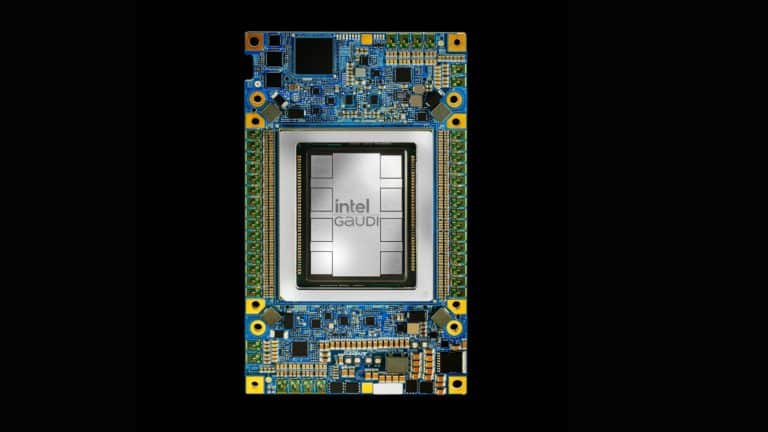

Quantization, which converts a model's FP32 weights and activations to lower-precision formats such as INT8, is an important method for accelerating AI inference. Intel supports it in hardware with Intel® Deep Learning Boost. To help developers quantize AI models quickly and easily, Intel has also developed a tool called Intel® Neural Compressor.
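To make the FP32-to-INT8 idea concrete, here is a minimal sketch of symmetric, per-tensor INT8 quantization in plain Python. It illustrates the general technique only and is not how Intel® Neural Compressor is implemented; the function names and sample weights are hypothetical.

```python
from typing import List, Tuple


def quantize_symmetric(values: List[float], num_bits: int = 8) -> Tuple[List[int], float]:
    """Map floats to signed integers using a single per-tensor scale."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for INT8
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round each value to the nearest integer step, then clamp to [-qmax, qmax].
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the integer codes."""
    return [x * scale for x in q]


# Hypothetical FP32 weights used only for illustration.
weights = [0.5, -1.27, 0.0, 1.0, -0.003]
q, scale = quantize_symmetric(weights)
restored = dequantize(q, scale)
print("INT8 codes:", q)
print("scale:", scale)
print("max abs error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

Each value now fits in one byte instead of four, which is where the speed and memory gains come from. Small values such as `-0.003` collapse to `0`, which is the kind of accuracy loss that calibration and accuracy-aware tuning in tools like Intel® Neural Compressor are designed to keep in check.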

The workshop will introduce Intel® Optimization for TensorFlow, which helps TensorFlow users get better performance on Intel platforms. Intel® Optimization for TensorFlow is a binary distribution of TensorFlow built with Intel® oneAPI Deep Neural Network Library (oneDNN) primitives.

oneDNN is an open-source, cross-platform performance library for deep learning applications. The optimisations are directly upstreamed and made available in the official TensorFlow release via a simple flag update, enabling developers to benefit from the Intel® optimisations seamlessly.
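In stock TensorFlow, the "flag" in question is the `TF_ENABLE_ONEDNN_OPTS` environment variable. The sketch below assumes a standard TensorFlow installation on an x86 CPU; in recent releases the oneDNN optimisations are already on by default for Linux x86 builds, so setting the variable explicitly mainly matters for older versions. It has to be set before TensorFlow is imported.

```python
import os

# Set the flag before TensorFlow is imported so the library sees it when it loads.
os.environ["TF_ENABLE_ONEDNN_OPTS"] = "1"

try:
    import tensorflow as tf  # imported after the flag is set

    print("TensorFlow", tf.__version__, "with TF_ENABLE_ONEDNN_OPTS =",
          os.environ["TF_ENABLE_ONEDNN_OPTS"])
except ImportError:
    print("TensorFlow is not installed; the flag is set for when it is.")
```

Setting the variable to `"0"` instead turns the oneDNN optimisations off, which is a convenient way to compare performance with and without them using the same script.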

Intel® has already released Intel® Optimization for TensorFlow.

Click here to view the installation guide.

Register for the workshop

The workshop will cover:

- oneAPI AI Analytics Toolkit overview

- Introduction to Intel® Optimization for TensorFlow

- Intel optimisations for TensorFlow

- Intel® Neural Compressor

- Hands-on demo to showcase usage and performance boost on DevCloud

Please create an Intel® DevCloud account here.

The workshop will also include a demo showing how to speed up an AI model through quantization with Intel® Neural Compressor, and how to compare the resulting performance gain from Intel® Deep Learning Boost online.

The demo walks you through an end-to-end pipeline that trains a TensorFlow model on a small customer dataset and then speeds it up through quantization with Intel® Neural Compressor. The steps are:

- Train a model with Keras and Intel® Optimization for TensorFlow.

- Generate an INT8 model with Intel® Neural Compressor.

- Compare the performance of the FP32 and INT8 models using the same script.
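The last step boils down to timing both models under identical conditions. The sketch below is a generic timing harness, not the demo's actual script: `benchmark` is a hypothetical helper, and `fake_fp32_predict` and `fake_int8_predict` are hypothetical stand-ins for the FP32 and INT8 models' prediction calls (for a Keras model you might pass `model.predict` instead).

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(predict: Callable[[object], object], batch: object,
              warmup: int = 5, runs: int = 50) -> Dict[str, float]:
    """Time repeated calls to `predict` on the same input batch."""
    # Warm-up calls absorb one-time costs (tracing, caching) before measuring.
    for _ in range(warmup):
        predict(batch)
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        predict(batch)
        timings.append(time.perf_counter() - start)
    return {
        "mean_ms": statistics.mean(timings) * 1000,
        "median_ms": statistics.median(timings) * 1000,
    }


def fake_fp32_predict(batch):  # hypothetical stand-in for the FP32 model
    return [x * 1.0 for x in batch]


def fake_int8_predict(batch):  # hypothetical stand-in for the INT8 model
    return [x * 1 for x in batch]


batch = list(range(10_000))
fp32 = benchmark(fake_fp32_predict, batch)
int8 = benchmark(fake_int8_predict, batch)
print(f"FP32 median: {fp32['median_ms']:.3f} ms, INT8 median: {int8['median_ms']:.3f} ms")
print(f"speed-up: {fp32['median_ms'] / int8['median_ms']:.2f}x")
```

Reusing one harness for both models keeps the input, batch size and measurement method identical, so any difference in the reported times comes from the models themselves. The median is reported alongside the mean because it is less sensitive to occasional slow runs.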

Agenda:

| Session | Content | Time | Owner |
| --- | --- | --- | --- |
| Introduction | Developer Ecosystem Program | 3:00 – 3:10 PM | Kavita Aroor |
| Overview | oneAPI AI Analytics Toolkit overview, DevCloud setup | 3:10 – 3:25 PM | Aditya |
| Intel® Extension for TensorFlow | Introduction to Intel® Optimization for TensorFlow + Intel® Extension for TensorFlow hands-on | 3:30 – 4:10 PM | Aditya |
| Intel® Neural Compressor Hands-on + Q&A | Introduction to Intel® Neural Compressor; hands-on with a quantization workload | 4:10 – 5:00 PM | Jianyu Zhang |

Who should attend

- Software developers

- IT managers

- ML developers

- AI engineers

- Data science professionals

- IT, technology, and software architects

- Senior managers of technology/engineering/software

___________________________________________________

Exclusive Contests — Participate & Win!

Lucky Draw Contest

- Analytics India Magazine is running a lucky draw in which, at the end of the workshop, 10 lucky participants will win Amazon vouchers worth INR 2,000 each.

Note: The winners will be selected based on their engagement on Discord throughout the workshop.

_______________________________________________________

Speaker details

Zhang Jianyu

Zhang Jianyu (Neo) is a senior AI software solution engineer (SSE) in SATG AIA in the PRC. He focuses on optimising AI frameworks on Intel® platforms and on consulting and supporting their users. Jianyu graduated from Northwestern Polytechnical University (China) with a master's degree in Pattern Recognition and Intelligence System. He is a senior software engineer with extensive experience in AI, virtualisation, high-concurrency communication and embedded systems.

Kavita Aroor

Kavita Aroor is the developer marketing lead for Asia Pacific & Japan at Intel. She has more than 16 years of experience in marketing.

Aditya Sirvaiya

Aditya Sirvaiya is an AI Software Solutions Engineer at Intel. He specialises in Intel-optimised AI frameworks and the OpenVINO toolkit.