With the advent of intelligent systems that learn from massive amounts of data to achieve high efficiency at minimal computational cost, the threat of security attacks against these systems has also increased. While studying machine learning models such as SVMs, decision trees, Naive Bayes, logistic regression, clustering and deep neural networks, researchers have discovered several security threats against these learning algorithms.

Researchers around the globe are working to overcome these issues and vulnerabilities in learning models. Many security threats towards machine learning stem from adversarial samples, which degrade the performance of ML-based systems. These vulnerabilities affect both classification and clustering algorithms. According to researchers from the National University of Defense Technology, the University of British Columbia and Deakin University, there are currently a few notable trends in research on security threats and defensive techniques for machine learning models.

Security Threats On ML Models

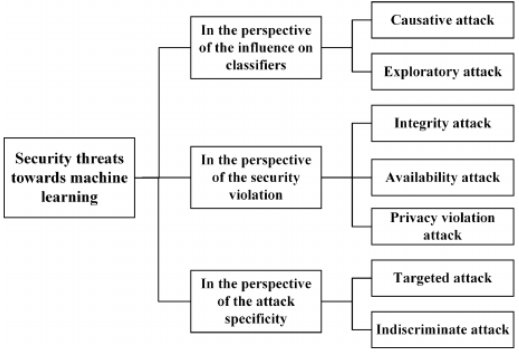

Fig: Taxonomy of security threats towards machine learning (Source)

The taxonomy of security threats towards ML models is based on three perspectives: the influence on classifiers, the security violation and the attack specificity. Together these perspectives yield seven categories: the influence on classifiers gives causative and exploratory attacks, the security violation gives integrity, availability and privacy violation attacks, and the attack specificity gives targeted and indiscriminate attacks. They are described below:

- Causative Attack: This attack modifies the distribution of the training data, which in turn changes the parameters of the learning model when it is trained or retrained. The resulting classifier performs worse on classification tasks.

- Exploratory Attack: This attack leaves the training process untouched; instead, it causes misclassification of adversarial samples or uncovers sensitive information from the training data and the learning model.

- Integrity Attack: The integrity attack increases the false negatives (FN) of existing classifiers when they classify harmful samples, i.e. malicious samples are wrongly accepted as benign.

- Availability Attack: This attack is the counterpart of the integrity attack. Instead of increasing false negatives, it increases the false positives (FP) of classifiers on benign samples, i.e. legitimate samples are wrongly flagged as malicious.

- Privacy Violation Attack: With the privacy violation attack, adversaries obtain sensitive and confidential information from the training data and learning models.

- Targeted Attack: This attack is mainly used to reduce the performance of the classifiers on a specific sample or on a specific group of samples.

- Indiscriminate Attack: This type of attack causes classifiers to fail indiscriminately across a wide range of samples.
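To make the integrity/availability distinction concrete, the short Python sketch below counts false negatives and false positives for a binary detector in which label 1 means malicious. The label vectors are hypothetical and chosen by hand only to mimic the two attack outcomes; they are not results from any real attack.

```python
def fn_fp(y_true, y_pred):
    """Return (false negatives, false positives); label 1 = malicious, 0 = benign."""
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    return fn, fp


# Hypothetical ground truth: three malicious and three benign samples.
y_true = [1, 1, 1, 0, 0, 0]

# Hypothetical classifier outputs, chosen by hand for illustration only.
predictions = {
    "clean": [1, 1, 1, 0, 0, 0],         # no attack
    "integrity": [0, 0, 1, 0, 0, 0],     # malicious samples slip through (more FN)
    "availability": [1, 1, 1, 1, 1, 0],  # benign samples get flagged (more FP)
}

for name, y_pred in predictions.items():
    fn, fp = fn_fp(y_true, y_pred)
    print(f"{name:>12}: FN={fn} FP={fp}")
```

In the integrity case the FN count rises while FP stays at zero; in the availability case it is the other way round.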

Deep learning has emerged as one of the most important domains in ML, and researchers have found several vulnerabilities in it. For deep neural networks, the adversarial attacks can be grouped by the phase they target:

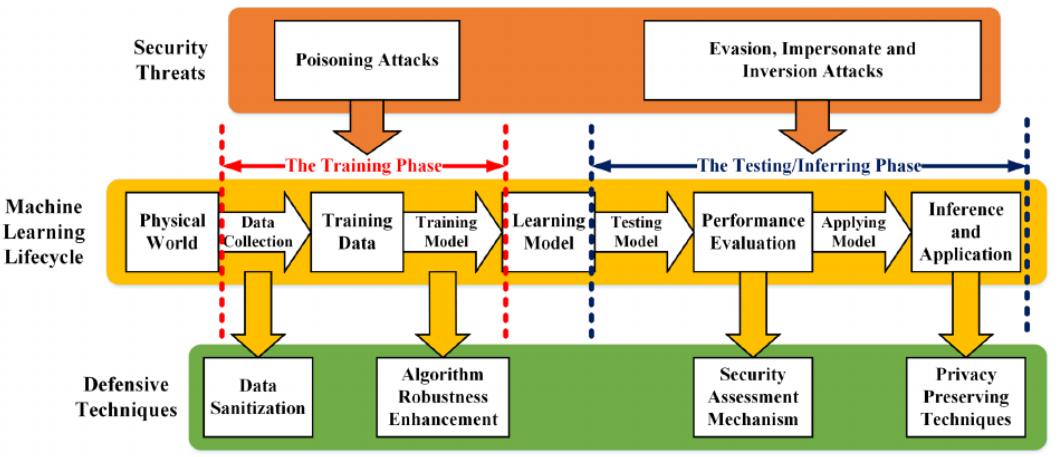

- Security Threats Against The Training Phase: The poisoning attack is a typical security threat that compromises the availability and integrity of machine learning models by injecting adversarial samples into the training dataset. It is a type of causative attack; the injected samples are typically designed to have features similar to those of malicious samples but carry incorrect labels.

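- Security Threats Against The Testing/Inferring Phase: Spoofing attacks (such as evasion and impersonation) and inversion attacks are the most common security threats against the testing/inferring phase. Evasion crafts inputs that slip past a trained classifier, while inversion attacks try to recover sensitive information about the training data from the model's outputs.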
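Evasion at test time can be illustrated with the fast gradient sign method (FGSM), a well-known technique from the adversarial-examples literature that is not itself named in the survey. The sketch assumes a hypothetical, already-trained logistic-regression detector whose weights `W` and bias `B` are made-up numbers; it moves each feature of a malicious sample by `eps` in the direction that increases the model's loss for the true label, pushing the sample towards the benign side of the decision boundary.

```python
import math

# Hypothetical trained logistic-regression detector (made-up parameters).
W = [2.0, -1.0, 0.5]
B = -0.5


def predict_proba(x):
    """Probability that feature vector x is malicious (class 1)."""
    z = sum(w * xi for w, xi in zip(W, x)) + B
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    """Return 1, -1 or 0 according to the sign of v."""
    return (v > 0) - (v < 0)


def fgsm(x, y_true, eps):
    """One FGSM step: x' = x + eps * sign(d loss / d x).

    For the logistic (cross-entropy) loss, d loss / d x_i = (p - y) * w_i.
    """
    p = predict_proba(x)
    grad = [(p - y_true) * w for w in W]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]


x = [1.0, 0.5, 0.2]  # hypothetical malicious sample, true label 1
x_adv = fgsm(x, y_true=1, eps=0.4)

print("original  p(malicious) =", round(predict_proba(x), 3))
print("perturbed p(malicious) =", round(predict_proba(x_adv), 3))
```

With these numbers the original sample scores above 0.5 (detected) and the perturbed one scores below 0.5 (evaded). A real attack would also have to keep the perturbed features valid for the application domain, which this sketch ignores.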

Defensive Techniques On Machine Learning Models

To address the diverse security threats towards machine learning models, many researchers have proposed defensive techniques to protect these learning algorithms. These techniques fall into four main groups:

- Security Assessment Mechanisms: Researchers assess the security of machine learning algorithms mainly through a “what if” methodology: the designer probes the classifier's vulnerabilities by making assumptions about the adversary and simulating the corresponding attacks. This is done in one of two ways: proactive defence, where the designer anticipates the adversary and evaluates attacks before they happen, and reactive defence, where the designer responds to attacks after they have been observed.

- Countermeasures In The Training Phase: The two main countermeasures are ensuring the purity of the training data and improving the robustness of the learning algorithms. Data sanitization is a practical technique for the former: it separates adversarial samples from normal ones and then removes the malicious samples. For the latter, techniques such as Bootstrap Aggregating (bagging) and the Random Subspace Method (RSM) can be used.

- Countermeasures In The Testing/Inferring Phase: Countermeasures in this phase mainly focus on improving the robustness of learning algorithms, for example through adversarial training. Another countermeasure is active defence that takes the data distribution into account.

- Data Security And Privacy: Differential privacy (DP) is one specific technique for protecting data: rather than encrypting data, it adds carefully calibrated random noise so that the output reveals little about any single record. Homomorphic encryption (HE) is another technique; it protects data privacy by allowing computations to be carried out directly on encrypted data. Beyond these mechanisms, reducing the sensitive outputs exposed by learning-model APIs is an alternative way to assure data security and privacy.
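The data sanitization idea from the training-phase countermeasures can be sketched with a simple k-nearest-neighbour filter: a training sample is dropped when its label disagrees with the majority label of its `k` nearest neighbours. This particular filter is one common heuristic chosen for illustration, not necessarily the method used in the surveyed work, and the toy dataset, including the single injected poisoning sample (malicious-looking features, benign label), is hypothetical.

```python
import math
from collections import Counter


def knn_sanitize(points, labels, k=3):
    """Keep only samples whose label matches the majority label of their
    k nearest neighbours (the sample itself is excluded)."""
    kept_points, kept_labels = [], []
    for i, (p, y) in enumerate(zip(points, labels)):
        neighbours = sorted(
            (math.dist(p, q), labels[j]) for j, q in enumerate(points) if j != i
        )
        votes = Counter(label for _, label in neighbours[:k])
        majority_label, _ = votes.most_common(1)[0]
        if majority_label == y:
            kept_points.append(p)
            kept_labels.append(y)
    return kept_points, kept_labels


# Toy training set: label 0 = benign cluster near (0, 0), label 1 = malicious near (5, 5).
points = [(0, 0), (0, 1), (1, 0), (1, 1), (5, 5), (5, 6), (6, 5), (6, 6)]
labels = [0, 0, 0, 0, 1, 1, 1, 1]

# Hypothetical poisoning sample: malicious-looking features with a benign label.
points.append((5.5, 5.5))
labels.append(0)

clean_points, clean_labels = knn_sanitize(points, labels, k=3)
print(len(points), "->", len(clean_points), "samples after sanitization")
```

Here the injected point is removed and all eight clean samples survive. Filters of this kind can also discard legitimate samples near class boundaries, and a coordinated group of poisoning points can outvote clean neighbours, so the choice of `k` matters.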
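For differential privacy, the classic Laplace mechanism shows how calibrated noise, rather than encryption, protects individual records. A counting query changes by at most 1 when a single record is added or removed (sensitivity 1), so adding Laplace noise with scale `1/epsilon` makes the released count `epsilon`-differentially private. The minimal sketch below uses only Python's standard library and samples Laplace noise as the difference of two exponential variables; the `ages` data and the `epsilon` value are made up for illustration.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials
    with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng):
    """Release an epsilon-DP count via the Laplace mechanism.

    A counting query has sensitivity 1, so the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


rng = random.Random(42)
ages = [23, 35, 41, 52, 29, 61, 47, 38]  # hypothetical sensitive records

noisy = private_count(ages, lambda a: a > 40, epsilon=0.5, rng=rng)
print(f"noisy count of people over 40: {noisy:.2f}")
```

The true count here is 4, and individual noisy releases scatter around it; a smaller `epsilon` means larger noise and stronger privacy.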