In today’s world, a nation's ability to build great supercomputers, systems millions of times more powerful than personal computers, is seen as a sign of technical prowess. The US and China have been competing to build the fastest supercomputers for quite some time now. Underlining the importance of such technical developments, a US committee went on record to say, “Through multilevel government support, China now has the world’s two fastest supercomputers and is on track to surpass the United States in the next generation of supercomputers, exascale computers, with an expected rollout by 2020 compared to the accelerated US timeline of 2021.”

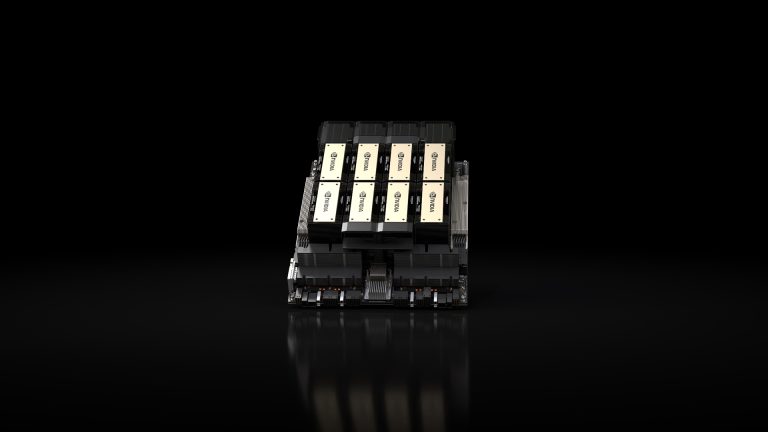

In fact, right here in India, US chipmaker NVIDIA and the Council of Scientific and Industrial Research (CSIR), India's industrial science institution, have joined forces to build a Supercomputer Centre of Excellence at the Central Electronics Engineering Research Institute (CEERI) in New Delhi. The initiative will give India a five-petaflop machine, described as the country's first AI supercomputer.

Supercomputer And Super Speed

Supercomputers can reach petascale computing speeds, which means they can perform about one quadrillion (1,000,000,000,000,000) computations per second. Ordinary computers, by contrast, manage up to about one billion calculations per second. This kind of supercomputing speed is needed in demanding domains such as chemistry and biology.
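To put the two figures above side by side, here is a minimal back-of-the-envelope sketch. It takes the article's round numbers at face value (one quadrillion versus one billion operations per second); the variable names are purely illustrative, and real machines vary widely around these figures.

```python
# Round figures quoted in the text; both are approximations.
PETASCALE_OPS_PER_SEC = 10**15  # one quadrillion computations per second
ORDINARY_OPS_PER_SEC = 10**9    # one billion calculations per second

# How many times faster the petascale machine is.
speedup = PETASCALE_OPS_PER_SEC // ORDINARY_OPS_PER_SEC

# How long the ordinary computer needs to match ONE second of petascale work.
seconds_needed = PETASCALE_OPS_PER_SEC / ORDINARY_OPS_PER_SEC
days_needed = seconds_needed / (60 * 60 * 24)

print(f"Speedup: {speedup:,}x")
print(f"Days for an ordinary computer to match one petascale second: {days_needed:.1f}")
```

On these assumptions the gap is a factor of one million, so a single second of petascale work would keep an ordinary computer busy for well over a week.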

Thom Dunning, a chemistry researcher and lecturer at the University of Washington and co-director of the Northwest Institute for Advanced Computing, once said, “As you develop models that are more sophisticated that include more of the physics, chemistry and environmental issues that are important in predicting the climate, the computing resources you need increases.”

Driven by such demanding applications, supercomputers are reaching new heights. For example, Summit, the supercomputer built by IBM and NVIDIA for the Oak Ridge National Laboratory, can reach up to 200 quadrillion computations per second.

The New York Times put the achievement this way: “A human would require 63 billion years to do what Summit can do in a single second.”

MIT Technology Review said, “Everyone on Earth would have to do a calculation every second of every day for 305 days to crunch what the new machine can do in the blink of an eye.”

Technology Behind The Supercomputer

Supercomputers are largely powered by parallel processing, in which many instructions are executed at the same time by many processors. Parallel processing arrived after decades of computers working on a much simpler paradigm, serial processing, in which instructions are executed one at a time.

So why do supercomputers rely on parallel processing? A large biology or chemistry problem can push a normal computer to its very limit. The best approach is to split the big problem into smaller problems, add more high-performing processors and divide the tasks between them. The idea sparked a revolution in the scientific and computing community: every time more computing speed is needed, more processors are added.
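The split-and-divide idea described above can be sketched in a few lines of Python. This is only a toy illustration, not how any real supercomputer is programmed: the function names are made up for the example, and threads stand in for processors. In standard CPython, threads will not actually speed up this kind of arithmetic, so the point here is the decomposition (split, compute partial results, combine), which is the same pattern used at much larger scale.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(values, n_chunks):
    """Split a list into n_chunks nearly equal contiguous pieces."""
    size, extra = divmod(len(values), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        # The first `extra` chunks each take one additional element.
        end = start + size + (1 if i < extra else 0)
        chunks.append(values[start:end])
        start = end
    return chunks


def sum_of_squares(chunk):
    """The smaller problem handed to each worker."""
    return sum(x * x for x in chunk)


def serial_sum_of_squares(values):
    """Serial processing: one worker handles every element in turn."""
    return sum(x * x for x in values)


def parallel_sum_of_squares(values, n_workers=4):
    """Divide the work, run the pieces concurrently, combine the partial results."""
    chunks = split_into_chunks(values, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(sum_of_squares, chunks))
    return sum(partials)


if __name__ == "__main__":
    data = list(range(1_000_000))
    # Both approaches must agree; only the way the work is organised differs.
    print(serial_sum_of_squares(data) == parallel_sum_of_squares(data, n_workers=8))
```

Adding more workers means each one receives a smaller chunk, which mirrors the "add more processors" strategy the paragraph describes.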

The next step is to take many such computers (off-the-shelf PCs), connect them over a LAN and create a supercomputing cluster. Sunway TaihuLight, which was reported to have simulated the universe for scientists, has 40,960 processing modules. Each of these modules has 260 processor cores, which adds up to 10,649,600 processor cores in the whole supercomputer.
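The core count quoted above is easy to verify. The snippet below simply multiplies the two figures given in the text; the variable names are illustrative.

```python
# Figures as quoted in the article for Sunway TaihuLight.
processing_modules = 40_960
cores_per_module = 260

total_cores = processing_modules * cores_per_module
print(f"Total processor cores: {total_cores:,}")
```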

Exascale Supercomputers And Solving World Problems

The next wave of supercomputers is known as exascale supercomputers. These machines will help us build more powerful applications for solving problems in complex fields such as physics, biology and manufacturing. Exascale computers are expected to be at least 10 to 20 times faster than today’s supercomputers. In the US, the Exascale Computing Project is run by the Department of Energy and supports active research in areas such as chemistry and materials applications and earth and space science applications, among many others.

A statement put out by the Exascale project states, “The Exascale Computing Project is focused on accelerating the delivery of a capable exascale computing ecosystem that delivers 50 times more computational science and data analytic application power than possible with DOE HPC systems such as Titan (ORNL) and Sequoia (LLNL). With the goal to launch a US exascale ecosystem by 2021, the ECP will have profound effects on the American people and the world.”

The primary motivations for the project are strengthening national security, advancing scientific security and developing the software and hardware technologies needed to build powerful exascale supercomputers. The hardware integration effort aims to make exascale computing a reality by combining the right ECP applications, software and advanced hardware technology.

What can exascale supercomputers do for the most difficult problems on earth? Sample this. Because of the shortage of drinking water, poor people all over the world suffer and face difficult situations every day. The only solution currently seems to be turning ocean water into something drinkable. Researchers at the Lawrence Livermore National Laboratory say that carbon nanotubes could hold the answer. The researchers said, “The microscopic cylinders serve as the perfect desalination filters: their radius is wide enough to let water molecules slip through, but narrow enough to block the larger salt particles. The scale we’re talking about here is truly unimaginable; the width of a single nanotube is more than 10,000 times smaller than a human hair.”