AI has given humans a lot to think about. While we have benefited from great innovations, some people are also using it to create chaos. As if the existing problem of fake news were not rampant enough, some people are now using artificial intelligence to generate fake news that is just as hard to detect and just as harmful.

To avoid fake news, many social media users rely on tools and websites such as NewsGuard, Hoaxy, and PolitiFact. However, these services depend on manual fact-checking efforts that verify the accuracy of claims, articles, and entire websites.

Recently, researchers from the University of Washington and the Allen Institute for Artificial Intelligence built Grover, a neural network model for studying and detecting neural fake news. Grover is a model for controllable text generation: it can efficiently generate an entire news article, including the body, title, news source, publication date, and author list. The tool focuses on text-only documents that are formatted as news articles and stories and that contain purposefully false information. It also helps researchers understand ML-based disinformation threats and how they can be countered.
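Generating "an entire news article" with several fields suggests that the fields are flattened into one sequence a language model can condition on. The sketch below illustrates that idea under stated assumptions: the field order and the `<start-…>`/`<end-…>` delimiter strings are hypothetical stand-ins rather than Grover's actual vocabulary, and `serialize_article` is an illustrative helper, not part of any released code. The known fields become context, and the field to be generated is opened last and left empty for the model to complete.

```python
# Hypothetical field order and delimiter tokens; Grover's real tokens differ.
FIELD_ORDER = ["domain", "date", "authors", "title", "body"]


def serialize_article(article: dict, target: str) -> str:
    """Flatten an article into one string with the target field placed last.

    Every other field that is present becomes context; the target field is
    opened with its start token but left empty so a model can fill it in.
    """
    if target not in FIELD_ORDER:
        raise ValueError(f"unknown field: {target}")
    parts = []
    for field in FIELD_ORDER:
        if field == target or field not in article:
            continue  # skip the field being generated and any missing metadata
        parts.append(f"<start-{field}>{article[field]}<end-{field}>")
    # A language model would generate from here until it emits <end-{target}>.
    parts.append(f"<start-{target}>")
    return "".join(parts)


example = {
    "domain": "example.com",
    "date": "2019-04-01",
    "authors": "Jane Doe",
    "title": "Local council approves new park",
}
prompt = serialize_article(example, target="body")
print(prompt)
```

Because the target is always the last, open field, the same routine can ask for a headline given a body, or a body given a headline, which is the kind of controllability the paragraph above describes.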

Fig: Grover detecting fake news

Framework

Grover can not only generate convincing fake news articles but can also spot the fake articles it generates. The framework of this model is motivated by the current dynamics of manually created fake news and involves two parties:

- Adversary: The adversary's goal is to generate fake stories that match specified attributes, such as being viral or persuasive. The stories must appear realistic to both human users and the verifier.

- Verifier: The verifier's goal is to classify news stories as real or fake. The verifier has access to unlimited real news stories but only a few fake news stories from a specific adversary. This setup mirrors the existing landscape: when a platform blocks an account or website, its disinformative stories provide training data for the verifier, but it is difficult to collect fake news from newly created accounts.
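The verifier's training data is therefore heavily imbalanced: many real stories and only a handful of known fakes. The source does not say how Grover's verifier handles this, so the sketch below shows one generic, commonly used technique (inverse-frequency class weighting, the same "balanced" heuristic scikit-learn uses) purely as an illustration. The `balanced_class_weights` helper and the 990/10 split are hypothetical.

```python
from collections import Counter


def balanced_class_weights(labels: list) -> dict:
    """Weight each class by n_samples / (n_classes * class_count).

    Rare classes (here, the few known fake stories) get larger weights, so a
    loss that multiplies each example's loss by its class weight does not
    simply learn to predict "real" for everything.
    """
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {label: n_samples / (n_classes * count) for label, count in counts.items()}


# Hypothetical training pool: plenty of real stories, only a few fakes
# collected from accounts a platform has already blocked.
labels = ["real"] * 990 + ["fake"] * 10
weights = balanced_class_weights(labels)
print(weights)
```

With these weights, the 10 fake stories and the 990 real stories each contribute the same total weight (500) to the training loss.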

Architecture & Dataset

Grover is a new approach for efficient learning and generation of multi-field documents. Its architecture is based on OpenAI's GPT-2, a powerful pretrained language model. To train it, the researchers constructed RealNews, a large corpus of news articles with metadata from Common Crawl that covers 5,000 news domains indexed by Google News, and used the Newspaper Python library to extract the body and metadata from each article. The model was trained on randomly sampled sequences from RealNews. Articles from Common Crawl published from December 2016 through March 2019 were used as training data, and articles published in April 2019 were used as test data.
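The train/test split described above is temporal: the model is evaluated on news from a month it never saw during training. A minimal sketch of such a split follows, assuming each article is a dict with a hypothetical `published` date field; articles outside both windows are simply dropped. This illustrates the idea only and is not the researchers' pipeline.

```python
from datetime import date

# Date windows taken from the description above.
TRAIN_START, TRAIN_END = date(2016, 12, 1), date(2019, 3, 31)
TEST_START, TEST_END = date(2019, 4, 1), date(2019, 4, 30)


def split_by_date(articles: list) -> tuple:
    """Return (train, test) lists based on each article's publication date."""
    train, test = [], []
    for article in articles:
        published = article["published"]  # hypothetical field name
        if TRAIN_START <= published <= TRAIN_END:
            train.append(article)
        elif TEST_START <= published <= TEST_END:
            test.append(article)
        # anything else falls outside both windows and is ignored
    return train, test


articles = [
    {"id": 1, "published": date(2016, 11, 30)},  # too early: dropped
    {"id": 2, "published": date(2017, 5, 3)},    # train
    {"id": 3, "published": date(2019, 3, 31)},   # train (last day)
    {"id": 4, "published": date(2019, 4, 15)},   # test
    {"id": 5, "published": date(2019, 5, 2)},    # too late: dropped
]
train, test = split_by_date(articles)
print([a["id"] for a in train], [a["id"] for a in test])
```

Splitting by time rather than at random keeps near-duplicate coverage of the same events out of the test set, which gives a fairer picture of how the model handles genuinely new stories.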

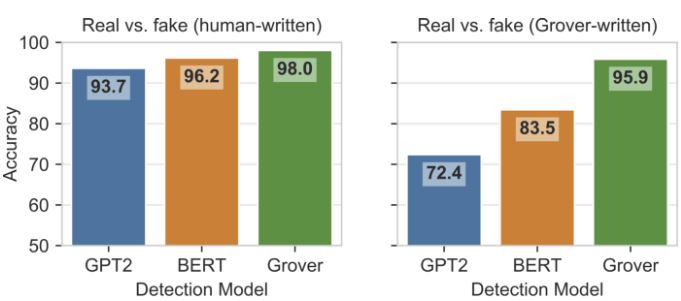

Fig: Grover model shows more than 95% accuracy in both cases (Source)

What Next?

According to the researchers, Grover is a strong step towards the development of effective automated defences against fake news articles. A Grover model adapted for detection can achieve more than 90% classification accuracy at telling apart human-written "real" news from machine-written "fake" news. One possible concern, however, is a "rejection-sampling attack" that can fool Grover as a detector. The researchers stated that this attack works only when an adversary has access to the exact same discriminator that the verifier is using.
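To see why a rejection-sampling attack needs the verifier's exact discriminator, consider the loop an adversary would run: generate candidate articles and keep the first one the discriminator scores as unlikely to be fake. The sketch below is hypothetical throughout: `generate` and `p_fake` stand in for a real generator and discriminator, and the toy scores are made up for illustration.

```python
from typing import Callable, Optional


def rejection_sample(
    generate: Callable[[], str],
    p_fake: Callable[[str], float],
    threshold: float,
    max_tries: int,
) -> Optional[str]:
    """Return the first generated text whose fake-probability is below threshold."""
    for _ in range(max_tries):
        candidate = generate()
        if p_fake(candidate) < threshold:
            return candidate  # this discriminator would label it "real"
    return None  # no candidate slipped past within the budget


# Toy stand-ins: a fixed sequence of drafts and made-up discriminator scores.
drafts = iter(["draft A", "draft B", "draft C"])
scores = {"draft A": 0.97, "draft B": 0.88, "draft C": 0.12}
chosen = rejection_sample(lambda: next(drafts), scores.__getitem__, threshold=0.5, max_tries=10)
print(chosen)
```

The loop only filters against the scores of the discriminator it queries. If the verifier uses a different discriminator, an article selected this way carries no guarantee of passing, which is consistent with the researchers' point that the attack depends on access to the exact same model.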