At the recently concluded PyTorch DevCon, the team released updates that could cement PyTorch's position as one of the top frameworks for machine learning.

The new release includes experimental support for features such as seamless model deployment to mobile devices, model quantization for better performance at inference time, and front-end improvements, along with a number of additional tools and libraries to support model interpretability and bring multimodal research to production.

Here is a brief overview of the updates:

Tools To Improve Model Interpretability

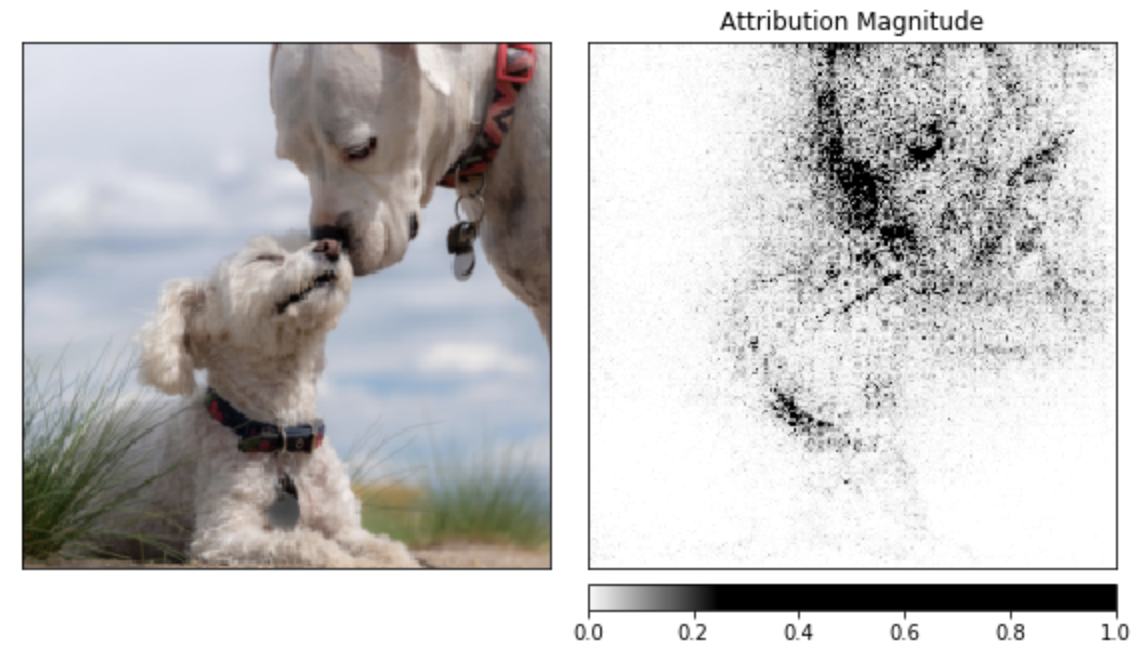

To counter the reputation of ML models as black boxes, the developers at PyTorch introduced a new tool called Captum. This tool enables users to visualise what their models are detecting. For example, given an image of an animal, it visualises the attribution for each pixel by overlaying it on the image, as can be seen in the picture above.

Captum’s algorithms include integrated gradients, conductance, SmoothGrad and VarGrad, and DeepLift. The purpose of the tool is to provide state-of-the-art methods for understanding how specific neurons and layers affect the predictions made by the models.
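To make the idea behind one of these algorithms concrete, here is a minimal sketch of integrated gradients in plain Python. It does not use Captum's API; the toy logistic model, its weights, and the helper names are all assumptions chosen for illustration. The attribution for each input is the input's distance from a baseline multiplied by the average gradient along the straight path from the baseline to the input.

```python
import math

# Hypothetical toy model: logistic regression with fixed, made-up weights.
WEIGHTS = [2.0, -1.0, 0.5]
BIAS = 0.1


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def model(x):
    """Scalar prediction for an input vector x."""
    return sigmoid(sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS)


def gradient(x):
    """Analytic gradient of the prediction with respect to each input."""
    p = model(x)
    return [p * (1.0 - p) * w for w in WEIGHTS]


def integrated_gradients(x, baseline, steps=200):
    """Approximate the path integral with a midpoint Riemann sum."""
    totals = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        for i, g in enumerate(gradient(point)):
            totals[i] += g
    # Scale the average gradient by the distance from the baseline.
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, totals)]


x = [1.0, 2.0, -1.0]
baseline = [0.0, 0.0, 0.0]
attributions = integrated_gradients(x, baseline)
```

A useful sanity check is that the attributions should sum to approximately `model(x) - model(baseline)`, which is the completeness property of the method. In an image setting, the per-pixel attributions are what would be overlaid on the picture.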

Alongside tools like Captum, there are other interesting projects such as CrypTen, a new community-based research platform for taking the field of privacy-preserving ML forward.

This is aimed at addressing the security and privacy challenges involved with cloud-based or machine-learning-as-a-service (MLaaS) platforms.

In a tweet on October 10, 2019, PyTorch (@PyTorch) announced: "PyTorch Cloud TPU and TPU pod support is now in general availability on @GCPcloud. You can also try it right now on Colab, for free at https://t.co/G6D3dfQpux"

The contributions of PyTorch to the machine learning community are reflected in the numbers: PyTorch citations in papers on arXiv grew 194 percent in the first half of 2019 alone, as noted by O’Reilly, and the number of contributors to the platform has grown more than 50 percent over the last year, to nearly 1,200.

Facebook, Microsoft, Uber, and other organizations across industries are increasingly using PyTorch as the foundation for their most important machine learning (ML) research and production workloads.

Along with the ones discussed above, other notable PyTorch developments include:

- Facebook AI Research (FAIR) is releasing Detectron2, an object detection library.

- In addition to key GPU and CPU partners, the PyTorch ecosystem also has updates from Intel and Habana that enable developers to utilize market-specific solutions.

- Mila SpeechBrain is an open source, all-in-one speech toolkit based on PyTorch.

- PyTorch Lightning is a Keras-like ML library for PyTorch.

- Torchmeta provides extensions for PyTorch to simplify the development of meta-learning algorithms.

The most interesting update is PyTorch’s efficient end-to-end workflow from Python to deployment on iOS and Android. Efficiency on mobile devices is required to cut down latency, which in turn plays a large part in implementing privacy-preserving techniques like federated learning.
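The Python side of such a workflow typically starts by converting a model to TorchScript so that it can be loaded outside the Python interpreter. The sketch below assumes a tiny, made-up `TinyClassifier` module and uses `torch.jit.trace` with an in-memory buffer; it illustrates the export step only and is not the full iOS or Android integration.

```python
import io

import torch
import torch.nn as nn


class TinyClassifier(nn.Module):
    """Hypothetical stand-in for a real model."""

    def __init__(self):
        super().__init__()
        self.fc = nn.Linear(4, 2)

    def forward(self, x):
        return torch.relu(self.fc(x))


model = TinyClassifier().eval()
example = torch.rand(1, 4)

# Record the operations executed on the example input as a TorchScript graph.
traced = torch.jit.trace(model, example)

# Serialize the traced model; a file path could be used instead of a buffer.
buffer = io.BytesIO()
torch.jit.save(traced, buffer)

# Reload the serialized model and check it matches the original.
buffer.seek(0)
reloaded = torch.jit.load(buffer)
same = torch.allclose(model(example), reloaded(example))
```

In practice the serialized file would be bundled with the mobile app and loaded by the platform's PyTorch runtime.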

Along with improvements for mobile devices, the Named Tensors update in PyTorch enables developers to write and maintain more readable code by referring to tensor dimensions by name rather than by position. This feature is still categorised as experimental because of shortcomings in its current implementation.
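As a rough illustration of what named tensors look like in code, the sketch below creates a small image batch with named dimensions and then reduces and reorders it by name. The dimension names and shapes are arbitrary choices for this example, and because the feature is experimental, PyTorch may emit a warning when it runs.

```python
import torch

# A batch of 2 RGB images, 4x4 pixels; each dimension carries a name.
imgs = torch.rand(2, 3, 4, 4, names=('N', 'C', 'H', 'W'))

# Reduce over the channel dimension by name instead of by position (dim=1).
per_pixel = imgs.sum('C')

# Reorder to a channels-last layout by naming the target order.
channels_last = imgs.align_to('N', 'H', 'W', 'C')

print(imgs.names)
print(per_pixel.names)
print(channels_last.shape)
```

Compared with `imgs.sum(1)` or `imgs.permute(0, 2, 3, 1)`, the named versions state the intent directly and do not depend on remembering the positional layout.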

Meanwhile, to support more efficient deployment of machine learning on servers and edge devices, 8-bit model quantization is now available in PyTorch. Quantization means performing computation and storage at reduced precision.
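The arithmetic behind 8-bit quantization can be sketched in a few lines of plain Python. This is not PyTorch's quantization API; the function names and the sample weights are assumptions for illustration. Each float is mapped to an integer in the range 0 to 255 using a scale and a zero point, and mapped back with a small rounding error.

```python
def choose_qparams(values, qmin=0, qmax=255):
    """Pick a scale and zero point so the value range (including 0) fits in 8 bits."""
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # avoid a zero scale for all-zero input
    zero_point = int(round(qmin - lo / scale))
    return scale, zero_point


def quantize(values, scale, zero_point, qmin=0, qmax=255):
    """Map floats to integers in [qmin, qmax]."""
    return [min(qmax, max(qmin, int(round(v / scale)) + zero_point)) for v in values]


def dequantize(quantized, scale, zero_point):
    """Map 8-bit integers back to approximate floats."""
    return [(q - zero_point) * scale for q in quantized]


weights = [-1.2, -0.3, 0.0, 0.45, 2.1]
scale, zero_point = choose_qparams(weights)
quantized = quantize(weights, scale, zero_point)
restored = dequantize(quantized, scale, zero_point)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

Each stored value now needs one byte instead of the four used by a 32-bit float, at the cost of an error bounded by roughly half the scale per value.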

Future Direction

These innovations from the PyTorch team are targeted at optimising performance on mobile devices and extending PyTorch's abilities to cover the common preprocessing and integration tasks needed for incorporating computer vision, NLP and other machine learning into mobile applications.

Now, with the release of PyTorch 1.3, the team at Facebook AI looks to enable seamless model deployment on mobile devices, model quantisation for better performance at inference time, and front-end improvements such as the ability to name tensors and write clearer code, while bringing multimodal research to production.

They have also collaborated with Google and Salesforce to add broad support for Cloud Tensor Processing Units, providing a significantly accelerated option for training large-scale deep neural networks.

With Alibaba Cloud also joining the likes of Amazon Web Services, Microsoft Azure, and Google Cloud as supported cloud platforms for PyTorch, the team believes that this will help build a productive machine learning community and promote democratisation.