Recently Salesforce Research launched an open-sourced framework for economic policy design and simulation: AI Economist. It is an economic simulation environment in which Artificial intelligence agents extract and trade resources, make houses, earn salaries, and pay taxes to the government bodies. It is a reinforcement learning(RL) problem to tax research to provide simulation and data-driven solutions to defining optimal taxes for specific socio-economic objectives.

It was created by L-R Melvin, Gruesbeck, Alex Trott, Stephan Zheng, Richard Socher, and Sunil Srinivasa at the Salesforce research lab.

AI economists use a different collection of AI agents designed to simulate millions of years of economies to help economists, governments, and other bodies to optimize social outcome in the real world.

As a society, we are entering uncharted territory — a new world in which governments, business leaders, the scientific community, and citizens need to work together to define the paths that direct these technologies at improving the human condition and minimizing the risks.

Marc Benioff, Chairman and CEO

Its research paper is been published here: “The AI Economist: Improving Equality and Productivity with AI-Driven Tax Policies”

Now let’s straight jump to code here

Foundation

“Foundation”: It is a name given to the economic simulator for AI Economist, this is the first of many tutorials designed to see and explain how the foundation works.

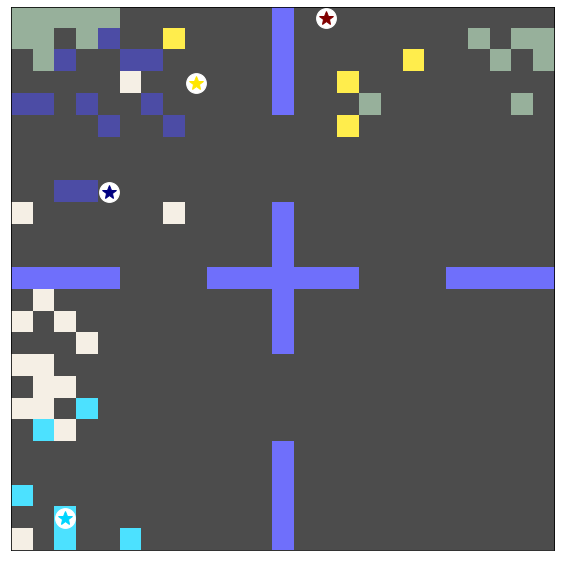

As shown in the above image it is a top view of specially designed modeling economies in a spatial 2D grid world. A nicely rendered example of what an environment looks like. As mentioned in the paper it uses a scenario with having 4 agents in a field/world with wood and stone, which can be traded, collected, and even to build a house.

The above image is only indicating some features, behind the scenes, we have agents with stone inventories, Wood, and coin to exchange commodities marketplace. Also, the agents pay taxes from time to time by earning money through trading and building

Markov Decision Process

AI-Economist heavily depends on MDP(Markov Decision Process), describing episodes in which agents receive observation and use policy to select actions. The environment then advances to an upgraded state, using the old states and actions. The agent receives new observations and rewards. This process repeats over the T timestamp.

Implementation

We are going to use the basic economic simulator demo, licensed by Salesforce to see how this model works and how we can leverage the power of AI economists in our given situation. This tutorial will give you enough to see how to create the type of simulation environment described above and interact with.

Downloading GitHub repo and installing dependencies

import sys IN_COLAB = 'google.colab' in sys.modules if IN_COLAB: ! git clone https://github.com/salesforce/ai-economist.git % cd ai-economist ! pip install -e . else: ! pip install ai-economist # Import foundation from ai_economist import foundation import numpy as np %matplotlib inline import matplotlib.pyplot as plt from IPython import display if IN_COLAB: from tutorials.utils import plotting # plotting utilities for visualizing env state else: from utils import plotting

Let’s Create a Simulation Environment

The scenario provides a high-level gym style API, that lets agents interact with it. The Scenario class implements an economic simulation with multiple agents, each scenario implements two main methods:

- Step: for advancing simulation to next state

- Reset puts simulation back in an initial state.

# Define the configuration of the environment that will be built

env_config = {

# ===== SCENARIO CLASS =====

# Which Scenario class to use: the class's name in the Scenario Registry (foundation.scenarios).

# The environment object will be an instance of the Scenario class.

'scenario_name': 'layout_from_file/simple_wood_and_stone',

# ===== COMPONENTS =====

# Which components to use (specified as list of ("component_name", {component_kwargs}) tuples).

# "component_name" refers to the Component class's name in the Component Registry (foundation.components)

# {component_kwargs} is a dictionary of kwargs passed to the Component class

# The order in which components reset, step, and generate obs follows their listed order below.

'components': [

# (1) Building houses

('Build', {'skill_dist': "pareto", 'payment_max_skill_multiplier': 3}),

# (2) Trading collectible resources

('ContinuousDoubleAuction', {'max_num_orders': 5}),

# (3) Movement and resource collection

('Gather', {}),

],

# ===== SCENARIO CLASS ARGUMENTS =====

# (optional) kwargs that are added by the Scenario class (i.e. not defined in BaseEnvironment)

'env_layout_file': 'quadrant_25x25_20each_30clump.txt',

'starting_agent_coin': 10,

'fixed_four_skill_and_loc': True,

# ===== STANDARD ARGUMENTS ======

# kwargs that are used by every Scenario class (i.e. defined in BaseEnvironment)

'n_agents': 4, # Number of non-planner agents (must be > 1)

'world_size': [25, 25], # [Height, Width] of the env world

'episode_length': 1000, # Number of timesteps per episode

# In multi-action-mode, the policy selects an action for each action subspace (defined in component code).

# Otherwise, the policy selects only 1 action.

'multi_action_mode_agents': False,

'multi_action_mode_planner': True,

# When flattening observations, concatenate scalar & vector observations before output.

# Otherwise, return observations with minimal processing.

'flatten_observations': False,

# When Flattening masks, concatenate each action subspace mask into a single array.

# Note: flatten_masks = True is required for masking action logits in the code below.

'flatten_masks': True,

}

# Create an environment instance using this configuration:

env = foundation.make_env_instance(**env_config)

Start interacting with Simulation

env.get_agent(0)

def sample_random_action(agent, mask):

"""Sample random UNMASKED action(s) for agent."""

# Return a list of actions: 1 for each action subspace

if agent.multi_action_mode:

split_masks = np.split(mask, agent.action_spaces.cumsum()[:-1])

return [np.random.choice(np.arange(len(m_)), p=m_/m_.sum()) for m_ in split_masks]

# Return a single action

else:

return np.random.choice(np.arange(agent.action_spaces), p=mask/mask.sum())

def sample_random_actions(env, obs):

"""Samples random UNMASKED actions for each agent in obs."""

actions = {

a_idx: sample_random_action(env.get_agent(a_idx), a_obs['action_mask'])

for a_idx, a_obs in obs.items()

}

return actions

We can interact with the simulation, the first environment put in an initial state by using reset.

obs = env.reset()

Then, we can further call steps to advance the state and advance time by one tick.

actions = sample_random_actions(env, obs) obs, rew, done, info = env.step(actions)

Reward

For each agent, the reward dictionary contains some scalar reward

for agent_idx, reward in rew.items():

print("{:2} {:.3f}".format(agent_idx, reward))

Done

done object is a default dictionary that records whether all agents/planner have seen the end of the episode. The default criterion for each agent is to ‘stop’ their episode once in a while as H steps have been executed. After the agent is ‘done’, they do not change their state after that. So, while it’s not currently implemented, this could be used to indicate that the episode has ended for a specific Agent.

done

Info

Similarly, we have an info object that can record auxiliary actions from simulators, which can be useful, sometimes for visualization by default it’s empty.

info

Visualizing Episode

def do_plot(env, ax, fig):

"""Plots world state during episode sampling."""

plotting.plot_env_state(env, ax)

ax.set_aspect('equal')

display.display(fig)

display.clear_output(wait=True)

def play_random_episode(env, plot_every=100, do_dense_logging=False):

"""Plays an episode with randomly sampled actions.

Demonstrates gym-style API:

obs <-- env.reset(...) # Reset

obs, rew, done, info <-- env.step(actions, ...) # Interaction loop

"""

fig, ax = plt.subplots(1, 1, figsize=(10, 10))

# Reset

obs = env.reset(force_dense_logging=do_dense_logging)

# Interaction loop (w/ plotting)

for t in range(env.episode_length):

actions = sample_random_actions(env, obs)

obs, rew, done, info = env.step(actions)

if ((t+1) % plot_every) == 0:

do_plot(env, ax, fig)

if ((t+1) % plot_every) != 0:

do_plot(env, ax, fig)

Visualizing random episodes

play_random_episode(env, plot_every=100)

Conclusion

We have seen the basics of the AI-economist basic simulation system and what are the main attributes that have been placed within the project, the outputs are pretty satisfying. In further advanced tutorial Salesforce research team has introduced the more complex tutorial, you can follow below resources to learn more :