Bidirectional Encoder Representations from Transformers or BERT is a very popular NLP model from Google known for producing state-of-the-art results in a wide variety of NLP tasks. The importance of Natural Language Processing (NLP) is profound in the artificial intelligence domain.

The most abundant data in the world today is in the form of texts. That’s why having a powerful text-processing system is critical and is more than just a necessity. In this article, we will look at implementing a multi-class classification using BERT.

The BERT algorithm is built on top of breakthrough techniques such as seq2seq (sequence-to-sequence) models and transformers. The seq2seq model is a network that converts a given sequence of words into a different sequence and is capable of relating the words that seem more important. LSTM network is a good example for seq2seq model. The transformer architecture is also responsible for transforming a sequence into another, but without depending on any Recurrent Networks such as LSTMs or GRUs. We will not go deep into the architecture of BERT and will focus mainly on the implementation part and hence it will be good to have a basic understanding of BERT and its working before you proceed.

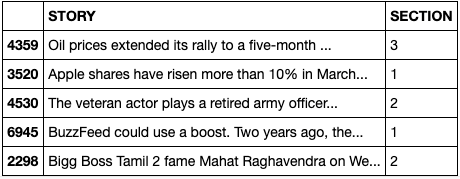

About Dataset

For this article, we will use MachineHack’s Predict The News Category Hackathon data. The data consists of a collection of news articles which are categorised into four sections. The features of the datasets are as follows:

- Size of training set: 7,628 records

- Size of test set: 2,748 records

FEATURES:

- STORY: A part of the main content of the article to be published as a piece of news

- SECTION: The genre/category the STORY falls in

There are four distinct sections where each story may fall in to. The Sections are labelled as:

- Politics: 0

- Technology: 1

- Entertainment: 2

- Business: 3

BERT Inference Essentials

To implement BERT or to use it for inference there are certain requirements to be met.

Data Preprocessing

BERT expects data in a specific format and the datasets are usually structured to have the following four features:

- guid: A unique id that represents an observation.

- text_a: The text we need to classify into given categories

- text_b: It is used when we’re training a model to understand the relationship between sentences and it does not apply for classification problems.

- label: It consists of the labels or classes or categories that a given text belongs to.

Having the above features in mind, let’s look at the data we have:

In our dataset, we have text_a and label. Let’s convert this is to the format that BERT requires.

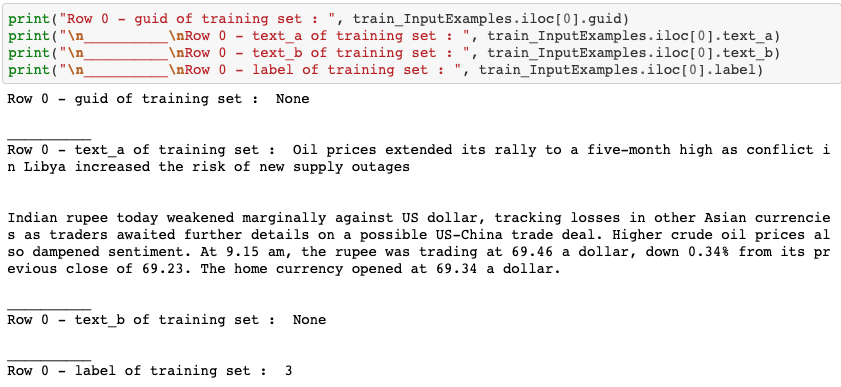

The following code block will create objects for each of the above-mentioned features for all the records in our dataset using the InputExample class provided in the BERT library.

The guid and text_b are none since we don’t have it in our dataset.

Let’s try to print the 4 features for the first observation.

Output:

Now we have a proper format for our BERT model and can start preprocessing the data.

We will perform the following operations :

- Normalizing the text by converting all whitespace characters to spaces and casing the alphabets based on the type of model being used(Cased or Uncased).

- Tokenizing the text or splitting the sentence into words and splitting all punctuation characters from the text.

- Adding CLS and SEP tokens to distinguish the beginning and the end of a sentence.

- Breaking words into WordPieces based on similarity (i.e. “calling” -> [“call”, “##ing”])

- Mapping the words in the text to indexes using the BERT’s own vocabulary which is saved in BERT’s vocab.txt file.

If that seems like a lot to do, no worries! All the above operations are effortlessly handled by BERT’s own tokenization package. The following code block performs all the mentioned operations.

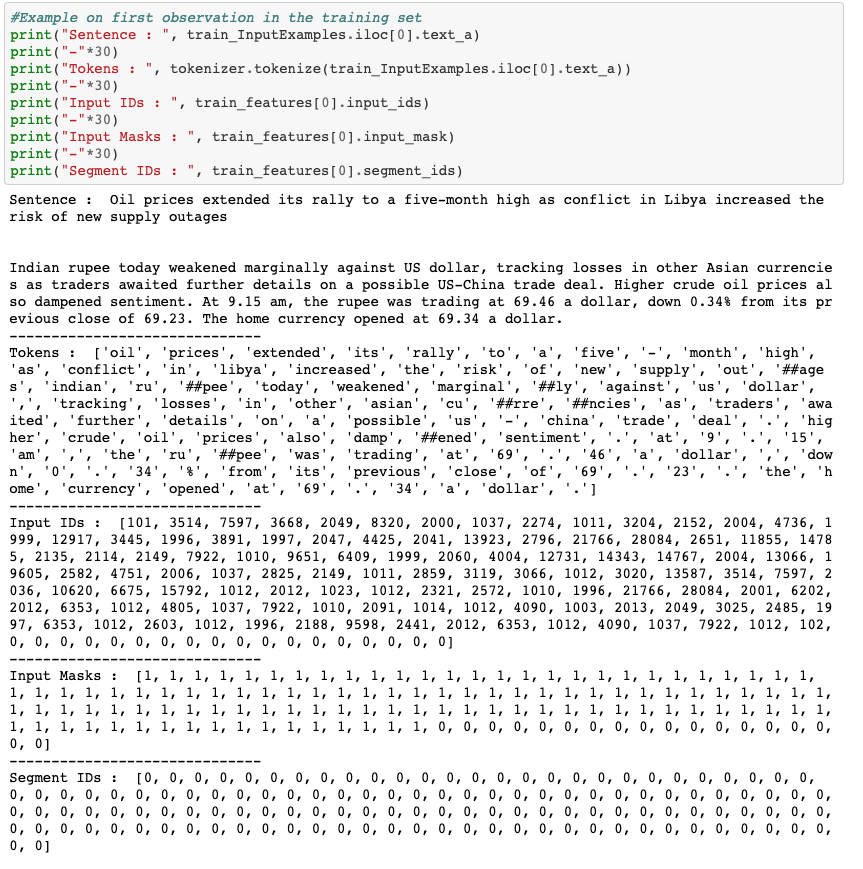

Let’s see what each of the features looks like:

In the above output, we have an original sentence from the training set. Next, the tokens from the sentence are printed. The input IDs are token IDs with each ID representing a unique token. The input-masks help distinguish the tokens from the padding elements. In the above example, 0’s represent the padding elements. The padding is determined by the specified sequence length. If the length of the tokens is less than the specified sequence length, the tokenizer will perform padding to meet the sequence length. The segment IDs are used to distinguish different sentences. In the above example, we only have one text segment hence all Segment IDs are the same. If two sentences are to be processed, each word in the first sentence will be masked to 0 and each word in the second sentence will be masked to 1.

BERT Model

Now we have the input ready, we can now load the BERT model, initiate it with the required parameters and metrics.

The code block defines a function to load up the model for fine-tuning.

we can effortlessly use BERT for our problem by fine-tuning it with the prepared input.

Follow along with the complete code in the below notebook.

Complete Code

The above example was done based on the original Predicting Movie Reviews with BERT on TF Hub.ipynb notebook by Tensorflow.