Last December, Larsen & Toubro Infotech, a global technology consulting and digital solutions company, launched Fosfor to help businesses monetise data at speed and scale. Their product suite leverages AI, ML and advanced analytics to empower customers with the right data in the fastest time possible.

“LTI believes that AI with all its cognitive brilliance is already shaking up the business landscape. Fosfor is a testimony of LTI’s intensified focus on the multi-billion dollar, fast-growing data and AI products market,” said Satyakam Mohanty, Chief Product Officer at Fosfor by L&T Infotech (LTI).

In an interview with Analytics India Magazine, Satyakam spoke about how Fosfor embeds ethics into their product suite.

AIM: What are the AI governance methods, techniques, and frameworks used at Fosfor?

Satyakam Mohanty: AI governance methods are hard to standardise as there is no unified global agreement on AI best practices. It is either regulatory-driven or self-driven by the organisations. At Fosfor, our approach to AI governance is based on the following principles:

- Standards for Explainability: Our products incorporate various explainability layers to remove the complexities involved with black box AI models. Our augmented analytics product Lumin has an in-built explainable AI layer that helps provide transparency and generate trust in erstwhile black box AI models, which helps our clients make confident data-driven decisions.

- Fairness evaluation of the AI models: Our bias and variance evaluation process establishes fairness and conveys transparent practices to inform the user about the model decisions.

- Safety standards for high-risk applications: This is an exceptional scenario that has not been encountered yet, but robust policies and procedures are in place to address such anomalies in AI modelling for high-risk applications.

- Human in the loop approach (HITL): We ensure a human is always in the loop to train, tune or test a particular algorithm, or to adjust and make changes to deep learning models.

AIM: What explains the growing conversations around AI ethics, responsibility, and fairness? Why is it important?

Satyakam Mohanty: According to 2021 PwC research, only 20% of enterprises had an AI ethics framework in place, and only 35% had plans to improve the governance of AI systems and processes. But a growing number of enterprises are now starting to pay attention to AI ethics, responsible AI and fairness to address the ethical problems that arise from non-human data analysis and decision-making.

- Firstly, companies are quickly learning that AI doesn’t just scale solutions — it also scales risk. There is regulatory risk, reputation risk as well as compliance risk, hence many organisations need to adopt fairness practices and responsible AI as part of their AI strategy. Issues of bias, explainability, data handling, transparency on data policies, systems capabilities, and design choices should be addressed in a responsible and open way to ensure ethical practices are followed across every level.

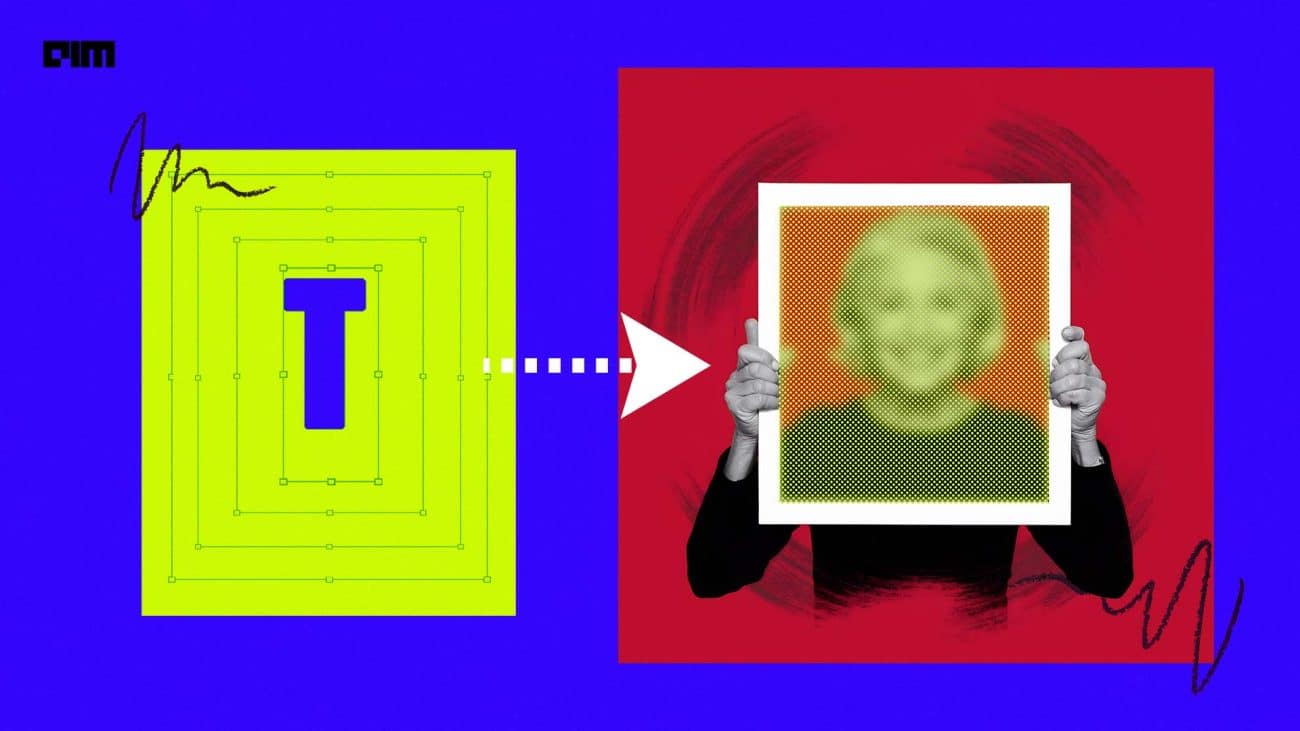

- Secondly, with AI models making more critical decisions every day, they require new oversight protocols that can ensure the models are accurate and fair, and that can curb potential abuse. There are endless ways in which AI can be misused, and many are already happening. Deepfake technologies are being misused to create political unrest, high-risk patients are misrepresented due to racial bias created by algorithms, AI-based recruitment tools are feeding implicit gender bias – the list is endless. This growing need for independent validation of AI models requires the same attention and investment used to build the models themselves.

I believe responsible AI is the only way to mitigate AI risks. The great AI debate opens various facets of ethics, but without a common agreement and agreed standard, its impact and repercussions on the way organisations operate are not quantifiable. Fairness and explainability can be managed and scaled by introducing data bias mitigation practices and algorithmic bias mitigation processes, and by building higher-standard explainability frameworks into implementations and decision-making processes. By utilising ethics as a key decision-making tool, AI-driven companies save time and money in the long run by building robust and innovative solutions from the start.

AIM: How does Fosfor ensure adherence to its AI governance policies?

Satyakam Mohanty: The following approach is ingrained in all our AI initiatives, and guidelines are in place to ensure they align with LTI’s best practices for data governance and privacy:

a. Stakeholder awareness: AI models used for generating insights or providing recommendations are communicated to the internal stakeholders, product managers, project managers, and risk and governance teams to make sure all the responsible teams are always in the loop.

b. Human in the loop: All Auto ML pipelines for generating decisions are always thoroughly peer-reviewed with robust guidelines for internal approvals before putting them into production.

c. Explainability: At each prediction layer or decisioning layer, explainability frameworks (be it pre-model or post-model explainability) are integrated to let the user know how the AI model arrived at the projections or predictions.

d. Model monitoring: Fairness and monitoring metrics are strictly followed, and auto alerts are generated to ensure the best outcomes are delivered to the users.
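As an illustration of the model monitoring step, threshold-based alerting on fairness metrics might look like the minimal sketch below. This is not Fosfor's implementation; the function name, metric names, and threshold values are all hypothetical and chosen only for the example.

```python
def check_fairness_alerts(metrics, thresholds):
    """Return one alert message for each metric that exceeds its threshold.

    Both arguments are plain dicts keyed by metric name. The names and
    limits used below are hypothetical, not taken from any real product.
    """
    alerts = []
    for name, value in metrics.items():
        limit = thresholds.get(name)
        # Metrics without a configured threshold are not checked.
        if limit is not None and value > limit:
            alerts.append(
                f"ALERT: {name}={value:.3f} exceeds threshold {limit:.3f}"
            )
    return alerts


# Hypothetical metrics from the latest monitoring window.
latest_metrics = {
    "demographic_parity_gap": 0.18,
    "false_positive_rate_gap": 0.04,
}
thresholds = {
    "demographic_parity_gap": 0.10,
    "false_positive_rate_gap": 0.05,
}

alerts = check_fairness_alerts(latest_metrics, thresholds)
for message in alerts:
    print(message)
```

In practice the alert would typically be routed to the responsible risk and governance teams rather than printed.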

AIM: How do you mitigate biases in your AI algorithms?

Satyakam Mohanty: Bias is something that our human brain exhibits when making assumptions. In AI/ML systems, by contrast, common sources of bias include data bias, where bias is ingrained in the data collection phase (for example, collecting only male resumes for a position); algorithmic bias (a preference for certain types of models over others); training bias, such as premature testing versus over-training of models; and interpretation bias, where confusing interpretations lead to incorrect decisions.

It is hard to avoid biases, as fairness is not something you can crisply define in an ML pipeline and set thresholds for while coding. Some biases are inherent and difficult to identify; however, some can be traced using the right methods and techniques. Bias identification is a challenge, but mitigation is possible by minimising the bias and introducing demographic or statistical parity to equalise data representation.
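To make the idea of demographic (statistical) parity concrete, the sketch below computes the rate of positive predictions per group and the gap between the highest and lowest rates. It is a generic illustration under simple assumptions (binary predictions, one sensitive attribute), not a description of Fosfor's method; the function names and the toy data are hypothetical.

```python
from collections import defaultdict


def selection_rates(groups, predictions):
    """Return the share of positive predictions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(groups, predictions):
    """Gap between the highest and lowest group selection rates.

    A value of 0 means every group receives positive predictions at the
    same rate; larger values indicate a larger disparity.
    """
    rates = selection_rates(groups, predictions)
    return max(rates.values()) - min(rates.values())


# Toy data: a sensitive attribute and binary model decisions (hypothetical).
groups = ["m", "m", "m", "m", "f", "f", "f", "f"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, predictions)
gap = demographic_parity_difference(groups, predictions)
print(rates, gap)
```

A gap close to zero suggests the groups are selected at similar rates; what counts as an acceptable gap depends on the use case and is a policy decision rather than something the code can settle.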

A more practical approach is to know the potential bias and the impact of decisions, unless it is a mission-critical application. Sometimes a decision with a little bias is preferred over no decision, with the caveat of potential risk.

AIM: Do you have a due diligence process to make sure the data is collected ethically?

Satyakam Mohanty: All of our AI products are proprietary and built using patented technology. For areas where we have third party integrations, we have a robust due diligence process in place. We do not collect any data outside of the use case we are solving. The data is not shared, moved, or copied on our products.

For example, in Lumin, we only work on the virtual reflection of the datasets and not the actual data, whether it resides in on-prem servers or on the cloud. This accelerates the querying process and ensures that data privacy and ethical concerns are kept in check. Similarly, our MLOps platform, Refract, has in-built enterprise governance and security integrations for models, which can be securely installed onto any cloud or on-prem infrastructure.

AIM: How does Fosfor protect user data?

Satyakam Mohanty: Our data privacy is governed by LTI’s Data Privacy Policy. We do not share, sell, transfer, or otherwise disseminate personal data to third parties unless we are allowed to do so on the basis of a data processing agreement according to GDPR or express consent from users. All concerns related to data privacy issues are addressed by our Data Privacy Officer.

LTI’s data privacy offering, PrivateEye, helps classify, discover, and label personal data across various systems to uncover how data is stored and managed in its lifecycle. This can help uncover gaps in people, processes, and technology on your journey towards data privacy compliance.