NVIDIA’s AI hardware remains a highly coveted product in Silicon Valley. In fact, the Jensen Huang-led company currently enjoys a near-monopoly in the GPU market. Nevertheless, Intel, a company with a long history of chip dominance, is steadily narrowing the gap with NVIDIA. This competition between the two giants could prove beneficial for the market as a whole.

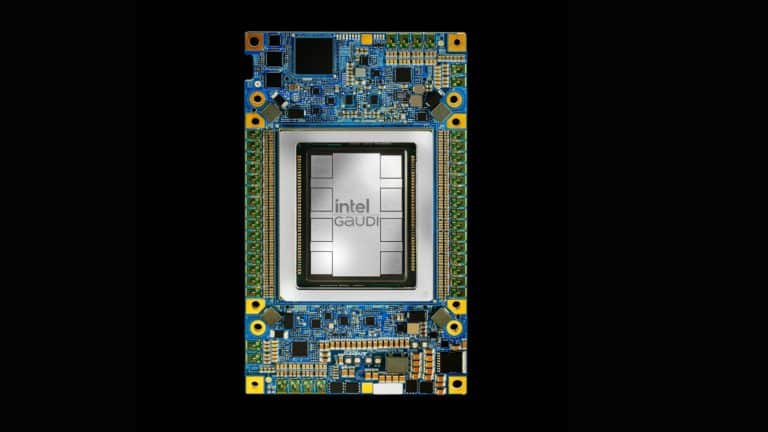

In the recently released MLPerf benchmark results, NVIDIA topped the charts and won every benchmark; surprisingly, however, Intel finished a close second. The company submitted performance results for the Habana Gaudi2 accelerators, 4th Gen Intel Xeon Scalable processors, and the Intel Xeon CPU Max Series.

Intel beats NVIDIA in Vision Models

Since acquiring AI chipmaker Habana Labs in 2019 for USD 2 billion, Intel has been trying to break into the AI compute market, and it is making significant strides. Notably, Gaudi2 outperformed NVIDIA’s H100 when fine-tuning a state-of-the-art vision-language model in Hugging Face’s performance benchmarks. “Optimum Habana v1.7 on Habana Gaudi2 achieves 2.5x speedups compared to A100 and 1.4x compared to H100 when fine-tuning BridgeTower, a state-of-the-art vision-language model,” a Hugging Face blog post states.

According to Intel, this performance improvement relies on hardware-accelerated data loading, which makes the most of the available hardware. Intel also argues that these results confirm it is currently the primary and most compelling alternative to NVIDIA’s H100 and A100 for AI computing requirements.

Intel’s claim also rests on Gaudi2’s strong showing in the MLPerf benchmarks. On LLMs, Gaudi2 delivered competitive performance against NVIDIA’s H100, which led by only 1.09x in the server scenario and 1.28x in the offline scenario. Given NVIDIA’s first-mover advantage and Intel’s track record, this is a significant development.
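To put the 1.09x and 1.28x MLPerf LLM figures in perspective, inverting H100’s lead gives Gaudi2’s throughput as a fraction of H100’s. This is simple arithmetic on the quoted ratios, not a separately published result; $P$ here is just shorthand for measured throughput in each scenario.

$$
\frac{P_{\text{Gaudi2}}}{P_{\text{H100}}} = \frac{1}{1.09} \approx 0.92 \ (\text{server}), \qquad \frac{P_{\text{Gaudi2}}}{P_{\text{H100}}} = \frac{1}{1.28} \approx 0.78 \ (\text{offline})
$$

In other words, Gaudi2 reached roughly 92% of H100’s throughput in the server scenario and about 78% in the offline scenario.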

Why it matters

Besides easing the GPU shortage, Intel’s Gaudi2 accelerators would bring competition to the market. Today, enterprises are scrambling to get their hands on NVIDIA’s H100 GPUs because they are the fastest AI accelerators on the market. Moreover, NVIDIA has established its dominance through forward-thinking strategies, a meticulously planned and well-documented software ecosystem, and sheer processing prowess. What Intel seeks to challenge is the prevailing industry narrative that generative AI and LLMs can run only on NVIDIA GPUs.

NVIDIA’s GPUs currently come at an exorbitant price: a single H100 can cost around USD 40,000. Gaudi2, by contrast, is not only closing the performance gap with NVIDIA but is also claimed to be cheaper than NVIDIA’s offerings. This is welcome news, even though Intel has not revealed a specific price. “Gaudi2 also provides substantially competitive cost advantages to customers, both in server and system costs,” Intel said in a blog post.

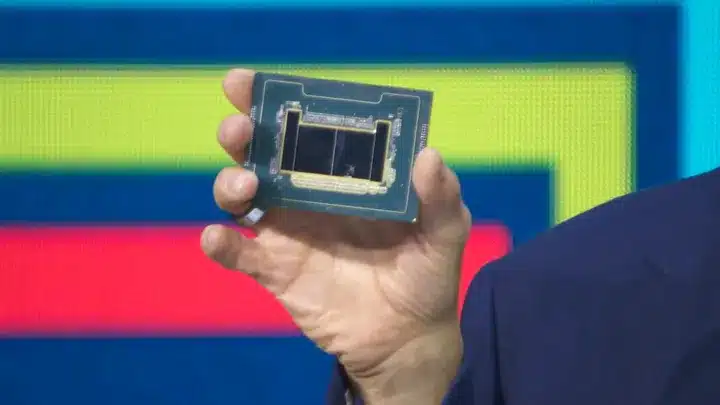

Gaudi2 is manufactured on TSMC’s 7nm process, whereas NVIDIA’s Hopper GPU uses a 5nm-class process. However, the next generation of Gaudi is expected to move to 5nm and could be released by the end of the year. Intel also says that enabling FP8 precision quantisation will make Gaudi2 even faster for AI inference.

Tarun Dua, CEO at E2E Networks, told AIM that Intel’s entry into the GPU space is great news because competition is always good for the market. More players offering relatively cheaper alternatives would make GPUs more accessible. AMD and China-based Huawei are also working to bring GPUs to market to meet the growing demand. Without such alternatives, constrained supply chains could hamper innovation.

“However, for Intel to truly compete with NVIDIA, it will need to build an entire plug-and-play ecosystem that NVIDIA has built over the years. It may take some time before we witness Intel operating at production scales comparable to NVIDIA, particularly in the training aspect. However, in terms of inference, we might observe their entry sooner,” Dua said.

Challenges galore

While Intel is closing the gap, NVIDIA continues to pull ahead. The GPU maker has unveiled TensorRT-LLM, an update to its TensorRT software designed specifically for LLMs. The software promises significant gains in both inference performance and efficiency across NVIDIA’s GPUs. The upgrade could supercharge NVIDIA’s most advanced GPUs and further widen the gap between NVIDIA and Intel.

Besides the raw performance of its GPUs, NVIDIA’s moat is its software stack. NVIDIA released its first GPU in 1999, and in 2006 it developed CUDA, touted as the world’s first solution for general-purpose computing on GPUs. Since then, the CUDA ecosystem has grown enormously.

“This dominance extends beyond just hardware, as it heavily influences the software, frameworks and ecosystem that surrounds it. The prevalence of NVIDIA GPUs in all the recent AI / ML advances has led to the centralisation of research, models, and software development around CUDA. Nearly every AI researcher currently chooses CUDA as a default due to this,” Mohammed Imran K R, CTO at E2E Networks, told AIM.

To compete with CUDA, Intel is shifting its developer tools to LLVM to support cross-architecture compatibility. It is also backing oneAPI, a specification for accelerated computing across different hardware. The move aims to loosen NVIDIA’s grip by reducing the industry’s reliance on CUDA.

“For it to become pervasive, accelerated computing needs to be standards-based, scaleable, multi-vendor and ideally multi-architecture. We set out four years ago to do that with oneAPI… and we’ve got to the point where we’re becoming productive for developers,” Joe Curley, VP and general manager for software products at Intel, said in an interview. An open-source infrastructure that makes it easy to choose among GPU providers is also the need of the hour.