Natural Language Processing (NLP) is one of the most popular domains in machine learning (ML). It is a collection of methods that let machines learn and understand human language. The wide adoption of its applications has made it a sought-after skill at top companies. Here are a few frequently asked NLP questions that give an introductory idea of this domain:

1) How can machines make meaning out of language?

A popular approach is to combine stemming and lemmatization with part-of-speech tagging. The way humans use language varies with context, so words cannot always be taken literally.

Stemming reduces a word to an approximate root by stripping endings such as plurals and verb inflections. For example, ‘rides’ and ‘riding’ both denote ‘ride’. So, if a sentence contains more than one form of ride, all of them are treated as the same word. Google adopted stemming for its search engine queries back in 2003.
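To make the idea concrete, here is a minimal sketch of a suffix-stripping stemmer. The rule list and the `simple_stem` name are made up for illustration; this is not the Porter algorithm or whatever any search engine actually runs.

```python
def simple_stem(word):
    """Strip a few common English suffixes (toy rules, for illustration only)."""
    word = word.lower()
    for suffix in ("ing", "es", "ed", "s", "e"):
        # Keep at least three characters so very short words are left alone.
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


print([simple_stem(w) for w in ["rides", "riding", "ride"]])
# ['rid', 'rid', 'rid']
```

Note that the shared stem here is ‘rid’, which is not a dictionary word. A stem only needs to be the same for every form of the word. An irregular form like ‘rode’ would not be matched by rules like these.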

Lemmatization, by contrast, maps a word to its dictionary form (its lemma) and takes into account the context in which the word is used, such as its part of speech. To do this, the surrounding words, and sometimes the adjacent sentences, are scanned too. In the above example, ride is the lemma of rides and riding.
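One way to picture lemmatization is as a dictionary lookup that also takes the part of speech into account. The sketch below assumes a tiny hand-written table (`LEMMAS`) and a hypothetical `lemmatize` helper. Real lemmatizers rely on full vocabularies and morphological rules.

```python
# Hypothetical mini-dictionary: (surface form, part of speech) -> lemma.
LEMMAS = {
    ("rides", "VERB"): "ride",
    ("riding", "VERB"): "ride",
    ("rode", "VERB"): "ride",
    ("leaves", "VERB"): "leave",
    ("leaves", "NOUN"): "leaf",
}


def lemmatize(word, pos):
    """Return the dictionary form of `word` for the given part of speech."""
    # Unknown words fall back to their lowercased surface form.
    return LEMMAS.get((word.lower(), pos), word.lower())


print(lemmatize("rode", "VERB"))     # ride
print(lemmatize("leaves", "NOUN"))   # leaf
print(lemmatize("leaves", "VERB"))   # leave
```

The ‘leaves’ entries show why context matters: the same surface form has a different lemma depending on how it is used in the sentence.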

Removing stop words like a, an and the from a sentence also helps. These words carry little meaning on their own, so dropping them lets the machine focus on the words that matter.
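Stop word removal is simple enough to sketch in a few lines. The stop word list below contains only the three words mentioned above, and the sample sentence is invented. Library stop word lists are much longer.

```python
STOP_WORDS = {"a", "an", "the"}  # tiny illustrative list


def remove_stop_words(sentence):
    """Lowercase, split on whitespace and drop the stop words."""
    tokens = sentence.lower().split()
    return [token for token in tokens if token not in STOP_WORDS]


print(remove_stop_words("The rider took a ride on an old bike"))
# ['rider', 'took', 'ride', 'on', 'old', 'bike']
```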

2) What does an NLP pipeline consist of?

A typical NLP problem can be approached as follows:

- Text gathering (web scraping or available datasets)

- Text cleaning (stemming, lemmatization)

- Feature generation (Bag of words)

- Embedding and sentence representation (word2vec)

- Training the model by leveraging neural nets or regression techniques

- Model evaluation

- Making adjustments to the model

- Deployment of the model

3) What is parsing in the context of NLP?

Parsing a document means working out the grammatical structure of its sentences, for instance, which groups of words go together (as “phrases”) and which words are the subject or object of a verb. Probabilistic parsers use knowledge of language gained from hand-parsed sentences to try to produce the most likely analysis of new sentences.

4) What is Named Entity Recognition (NER)?

Named entity recognition is a method to locate the named entities in a sentence and assign each one to a category.

For example, the sentence “Neil Armstrong of the US landed on the moon in 1969” will be categorized as

Neil Armstrong – name; the US – country; 1969 – time (temporal token).

The idea behind NER is to enable the machine to pull out entities like people, places, things, locations, monetary figures, and more.
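The example above can be reproduced with a toy rule-based tagger. The lookup sets (`PEOPLE`, `COUNTRIES`), the year pattern and the `tag_entities` name are all assumptions made for this sketch. Practical NER systems are statistical models trained on labelled text, not hand-written lists.

```python
import re

# Hypothetical lookup lists; real systems learn these patterns from data.
PEOPLE = {"Neil Armstrong"}
COUNTRIES = {"the US"}
YEAR_PATTERN = re.compile(r"\b(?:1[0-9]{3}|20[0-9]{2})\b")


def tag_entities(sentence):
    """Return (entity, category) pairs found in the sentence."""
    entities = []
    for name in PEOPLE:
        if name in sentence:
            entities.append((name, "name"))
    for country in COUNTRIES:
        if country in sentence:
            entities.append((country, "country"))
    for year in YEAR_PATTERN.findall(sentence):
        entities.append((year, "time"))
    return entities


print(tag_entities("Neil Armstrong of the US landed on the moon in 1969"))
# [('Neil Armstrong', 'name'), ('the US', 'country'), ('1969', 'time')]
```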

5) Where can NER be used?

NER can be used for scanning documents for classification, for customer support (chatbots, understanding feedback) and for entity identification in molecular biology (names of genes, etc.).

6) How is feature extraction done in NLP?

The features of a sentence can be used to conduct sentiment analysis or document classification. For example, if a product review on Amazon or a movie review on IMDb contains many words like ‘good’ and ‘great’, the review can be classified as positive.

Bag of words is a popular model for feature generation. A sentence is tokenized, and the individual words are collected into a group (the “bag”) that ignores word order. The bag can then be explored for certain characteristics, such as the number of times a certain word appears.
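A bag of words is essentially a word-count table, so a rough sketch needs little more than Python's `collections.Counter`. The tokenizing regex and the sample review are invented for illustration.

```python
import re
from collections import Counter


def bag_of_words(text):
    """Tokenize on letters and apostrophes, then count each token."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return Counter(tokens)


review = "Great phone, great battery, good price"
bag = bag_of_words(review)
print(bag["great"], bag["good"], bag["bad"])
# 2 1 0
```

Counts like these can then be fed to a classifier as features, for example to decide whether the review is positive.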

7) What are some popular models other than Bag of words?

Latent semantic indexing, word2vec.

8) Briefly explain word2vec

Word2Vec embeds words in a lower-dimensional vector space using a shallow neural network. The result is a set of word vectors in which words whose vectors are close together have similar meanings based on context, and words whose vectors are far apart have differing meanings. For example, apple and orange would be close together, while apple and gravity would be relatively far apart. There are two versions of this model, based on skip-grams (SG) and continuous bag-of-words (CBOW).
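“Close together” is usually measured with cosine similarity. The sketch below uses hand-picked three-dimensional vectors purely to illustrate the comparison. They are not the output of a trained word2vec model, whose vectors are learned from text and typically have a few hundred dimensions.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Made-up vectors for illustration only.
vectors = {
    "apple": [0.9, 0.8, 0.1],
    "orange": [0.8, 0.9, 0.2],
    "gravity": [0.1, 0.2, 0.9],
}

fruit_similarity = cosine_similarity(vectors["apple"], vectors["orange"])
physics_similarity = cosine_similarity(vectors["apple"], vectors["gravity"])
print(fruit_similarity > physics_similarity)
# True
```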

9) What is Latent Semantic Indexing?

Latent semantic indexing (LSI) is a mathematical technique to extract information from unstructured data. It is based on the principle that words used in the same context tend to carry similar meanings.

To identify the relevant (concept) components, or in other words to group words into classes that represent concepts or semantic fields, this method applies Singular Value Decomposition (SVD) to the term-document matrix. As the name suggests, this matrix consists of words as rows and documents as columns.
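In symbols, the decomposition step can be written as follows. The notation is an assumption made for this sketch: X is the term-document matrix with m words and n documents, and k is the number of concepts one chooses to keep.

```latex
X \approx X_k = U_k \, \Sigma_k \, V_k^{\top},
\qquad
U_k \in \mathbb{R}^{m \times k},\;
\Sigma_k \in \mathbb{R}^{k \times k},\;
V_k \in \mathbb{R}^{n \times k}
```

Here the diagonal of Σ_k holds the k largest singular values. Row i of U_k Σ_k gives the coordinates of word i in the concept space, and row j of V_k Σ_k gives the coordinates of document j.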

LSI is computationally heavy compared to other models. But it equips an NLP model with better contextual awareness, which brings it relatively closer to natural language understanding (NLU).

10) What are the metrics used to test an NLP model?

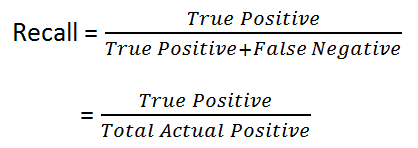

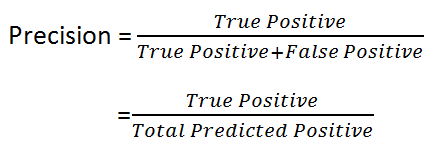

The usual metrics are accuracy, precision, recall and F1. Accuracy is the ratio of correct predictions to the total number of predictions. But going just by accuracy is naive, especially when the classes are imbalanced. Precision and recall take false positives and false negatives into account, respectively, which makes them more reliable metrics.

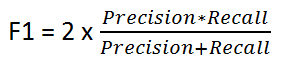

And F1, the harmonic mean of precision and recall, is the sweet spot between the two.
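All four metrics can be computed from the counts of true positives (tp), true negatives (tn), false positives (fp) and false negatives (fn). The function name and the counts below are invented for illustration.

```python
def classification_metrics(tp, tn, fp, fn):
    """Return accuracy, precision, recall and F1 from confusion-matrix counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        f1 = 0.0
    else:
        # Harmonic mean of precision and recall.
        f1 = 2 * precision * recall / (precision + recall)
    return accuracy, precision, recall, f1


accuracy, precision, recall, f1 = classification_metrics(tp=8, tn=86, fp=2, fn=4)
print(round(accuracy, 3), round(precision, 3), round(recall, 3), round(f1, 3))
# 0.94 0.8 0.667 0.727
```

In this made-up case the accuracy looks high because most examples are negatives, while the recall shows that a third of the positives were missed.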

11) What are some popular Python libraries used for NLP?

spaCy, NLTK and TextBlob, along with Stanford’s CoreNLP (a Java toolkit that can be called from Python through wrappers).

There is more to explore in NLP, including advancements like Google’s BERT, where a Transformer network is preferred to a CNN or RNN. A Transformer applies a self-attention mechanism, which scans through every word in a sentence and assigns attention scores (weights) to the other words. These weights are used to calculate a weighted average, which gives the same word a different representation depending on its context. This is what helps with homonyms, whose meaning is ambiguous without the surrounding words.
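The weighted-average idea can be sketched in plain Python. This is a deliberately stripped-down version: the word vectors are made up, and the queries, keys and values are the input vectors themselves, whereas a real Transformer such as BERT first passes them through learned projection matrices and uses several attention heads.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Simplified scaled dot-product self-attention without learned weights."""
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # New representation: weighted average of all vectors in the sentence.
        outputs.append(
            [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)]
        )
    return outputs


# Made-up 2-d vectors for illustration only.
river, money, bank = [1.0, 0.0], [0.0, 1.0], [0.5, 0.5]

bank_near_river = self_attention([river, bank])[1]
bank_near_money = self_attention([money, bank])[1]
print(bank_near_river, bank_near_money)
# [0.75, 0.25] [0.25, 0.75]
```

The word ‘bank’ starts from the same vector in both cases but ends up with a different representation depending on its neighbour.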
