Markov chains are a stochastic model that represents a succession of probable events, with predictions or probabilities for the next state based purely on the prior event state, rather than the states before. Markov chains are used in a variety of situations because they can be designed to model many real-world processes. These areas range from animal population mapping to search engine algorithms, music composition, and speech recognition. In this article, we will be discussing a few real-life applications of the Markov chain. Following are the topics to be covered.

Table of contents

- The “Memoryless” Markov chain

- The functionality of the chain

- Real-life applications

- “Pagerank” used by Google

- Predicting Market trends

- Subreddit Simulation

- Next word prediction

- Election polls

Let’s start with an understanding of the Markov chain and why it is called a“Memoryless” chain.

The “Memoryless” Markov chain

Markov chains are an essential component of stochastic systems. They are frequently used in a variety of areas. A Markov chain is a stochastic process that meets the Markov property, which states that while the present is known, the past and future are independent. This suggests that if one knows the process’s current state, no extra knowledge about its previous states is needed to provide the best possible forecast of its future. It is “Memoryless” due to this characteristic of the Markov Chain.

This simplicity can significantly reduce the number of parameters when studying such a process. Markov chains are used to calculate the probability of an event occurring by considering it as a state transitioning to another state or a state transitioning to the same state as before.

Are you looking for a complete repository of Python libraries used in data science, check out here.

The functionality of the chain

A probabilistic mechanism is a Markov chain. The transition matrix of the Markov chain is commonly used to describe the probability distribution of state transitions. If the Markov chain includes N states, the matrix will be N x N, with the entry (I, J) representing the chance of migrating from the state I to state J.

To formalize this, we wish to calculate the likelihood of travelling from state I to state J over M steps. The Markov chain model relies on two important pieces of information.

- The Transition Matrix (abbreviated P) reflects the probability distribution of the state’s transitions. The total of the probabilities in each row of the matrix will equal one, indicating that it is a stochastic matrix.

- Initial State Vector (abbreviated S) reflects the probability distribution of starting in any of the N possible states. Every entry in the vector indicates the likelihood of starting in that condition.

Given these two dependencies, the starting state of the Markov chain may be calculated by taking the product of P x I. To anticipate the likelihood of future states happening, elevate your transition matrix P to the Mth power.

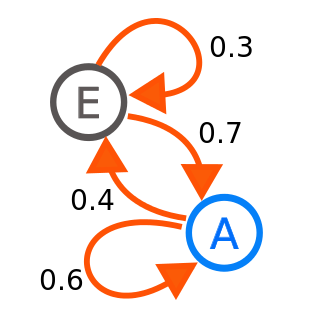

The above representation is a schematic of a two-state Markov process, with states labeled E and A. Each number shows the likelihood of the Markov process transitioning from one state to another, with the arrow indicating the direction. For instance, if the Markov process is in state A, the likelihood that it will transition to state E is 0.4, whereas the probability that it will continue in state A is 0.6.

Real-life applications

After the explanation, let’s examine some of the actual applications where they are useful. You’ll be amazed at how long you’ve been using Markov chains without your knowledge.

“Pagerank” used by Google

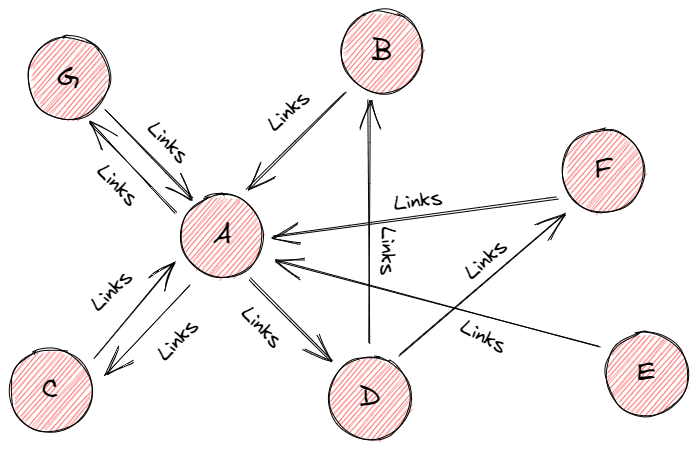

Page and Brin created the algorithm, which was dubbed “PageRank” after Larry Page. PageRank is one of the strategies Google uses to assess the relevance or value of a page. To use the PageRank algorithm, we assume the web to be a directed graph, with web pages acting as nodes and hyperlinks acting as edges. PageRank assigns a value to a page depending on the number of backlinks referring to it. A page that is connected to many other pages earns a high rank.

To calculate the page score, keep in mind that the surfer can choose any page. However, they do not always choose the pages in the same order. Most of the time, a surfer will follow links from a page sequentially, for example, from page “A,” the surfer will follow the outbound connections and then go on to one of page “A’s” neighbors. However, this is not always the case.

A lesser but significant proportion of the time, the surfer will abandon the current page and select a random page from the web to “teleport” to. To account for such a scenario, Page and Brin devised the damping factor, which quantifies the likelihood that the surfer abandons the current page and “teleports” to a new one. Because the user can teleport to any web page, each page has a chance of being picked by the nth page.

Predicting Market Trends

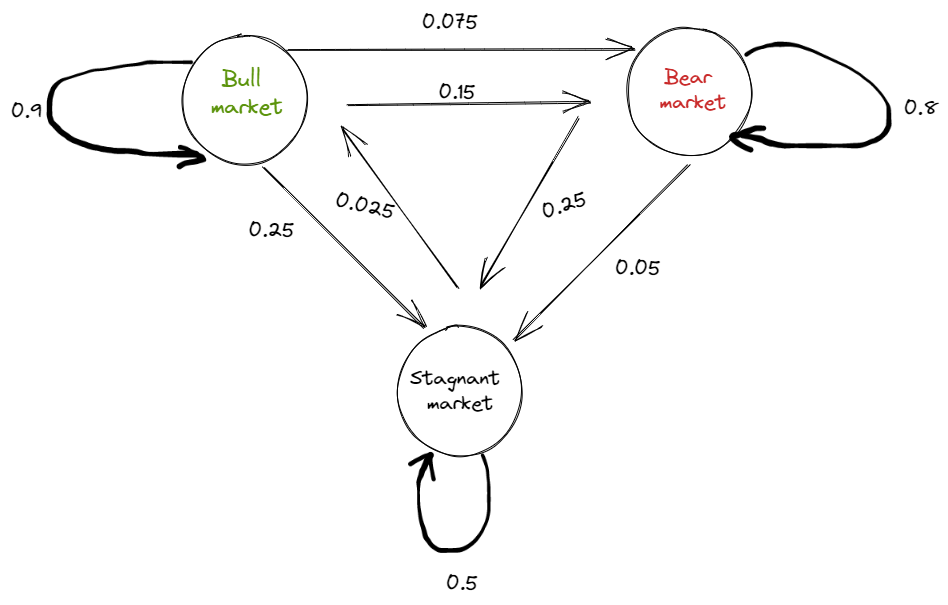

The stock market is a volatile system with a high degree of unpredictability. Markov chains and their associated diagrams may be used to estimate the probability of various financial market climates and so forecast the likelihood of future market circumstances. We can see that this system switches between a certain number of states at random. State-space refers to all conceivable combinations of these states. In our situation, we can see that a stock market movement can only take three forms.

- Bull markets are times in which prices normally rise as a result of the players’ positive outlook for the future.

- Bear markets are times in which prices typically fall as a result of the players’ negative outlook on the future.

- Stagnant markets are those in which there is neither a drop nor an increase in overall prices.

Fair markets believe that market information is dispersed evenly among its participants and that prices vary randomly. This indicates that all actors have equal access to information, hence no actor has an advantage owing to inside information. Certain patterns, as well as their estimated probability, can be discovered through the technical examination of historical data.

Consider the following patterns from historical data in a hypothetical market with Markov properties. There is a 90% possibility that another bullish week will follow a week defined by a bull market trend. Furthermore, there is a 7.5%possibility that the bullish week will be followed by a negative one and a 2.5% chance that it will stay static. Following a bearish week, there is an 80% likelihood that the following week will also be bearish, and so on.

Subreddit Simulation

There is a bot on Reddit that generates random and meaningful text messages. It uses GTP3 and Markov Chain to generate text and random the text but still tends to be meaningful.

Simply said, Subreddit Simulator pulls in a significant chunk of ALL the comments and titles published throughout Reddit’s many communities, then analyzes the word-by-word structure of each statement. Using this data, it produces word-to-word probabilities and then utilizes those probabilities to build titles and comments from scratch.

In the above example, different Reddit bots are talking to each other using the GPT3 and Markov chain.

Next word prediction

The Markov chain helps to build a system that when given an incomplete sentence, the system tries to predict the next word in the sentence. Since every word has a state and predicts the next word based on the previous state.

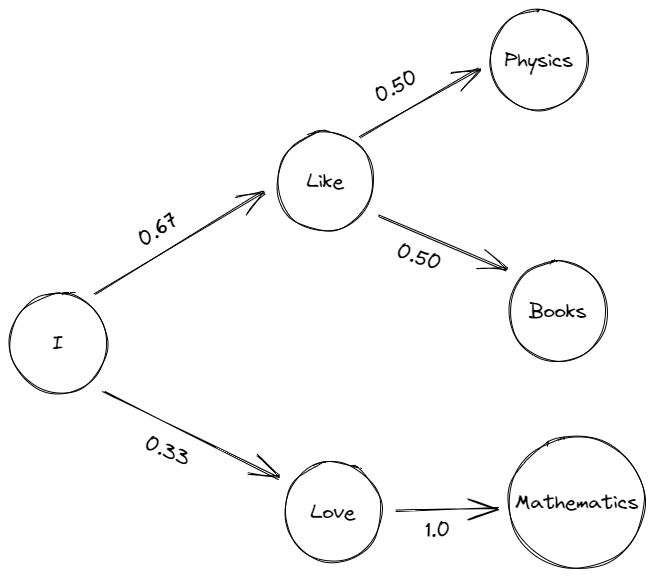

To understand that let’s take a simple example. Consider three simple sentences.

- I like Physics

- I love Cycling

- I like Books

All of the unique words from the preceding statements, namely ‘I,’ ‘like,’ ‘love,’ ‘Physics,’ ‘Cycling,’ and ‘Books,’ might construct the various states. The probability distribution is concerned with assessing the likelihood of transitioning from one state to another, in our instance from one word to another. The preceding examples show that the first word in our situation always begins with the word ‘I.’

As a result, there is a 100% probability that the first word of the phrase will be ‘I.’ We must select between the terms ‘like’ and ‘love’ for the second state. The probability distribution now is all about calculating the likelihood that the following word will be ‘like’ or ‘love’ if the preceding word is ‘I.’

In our example, the word ‘like’ comes in two of the three phrases after ‘I,’ but the word ‘love’ appears just once. As a result, there is a 67 % probability that ‘like’ will prevail after ‘I,’ and a 33 % (1/3) probability that ‘love’ will succeed after ‘I.’ Similarly, there is a 50% probability that ‘Physics’ and ‘books’ would succeed ‘like’. And the word ‘love’ is always followed by the word ‘cycling.’

Election polls

The primary objective of every political party is to devise plans to help them win an election, particularly a presidential one. Political experts and the media are particularly interested in this because they want to debate and compare the campaign methods of various parties. The Markov chains were used to forecast the election outcomes in Ghana in 2016.

Elections in Ghana may be characterized as a random process, and knowledge of prior election outcomes can be used to forecast future elections in the same way that incremental approaches do.

Ghana General elections from the fourth republic frequently appear to “flip-flop” after two terms (i.e., a National Democratic Congress (NDC) candidate will win two terms and a National Patriotic Party (NPP) candidate will win the next two terms).

As a result, MCs should be a valuable tool for forecasting election results. Bootstrap percentiles are used to calculate confidence ranges for these forecasts.

Closing thoughts

The Markov chain can be used to greatly simplify processes that satisfy the Markov property, knowing the previous history of the process will not improve the future predictions which of course significantly reduces the amount of data that needs to be taken into account. It has vast use cases in the field of science, mathematics, gaming, and information theory. With this article, we could understand a bunch of real-life use cases from different fields of life.