Facial expression analysis is the automatic detection, collection, and analysis of facial muscle movements and the changes they produce, which can reflect certain human mental states and situations. In this article we discuss this technique, along with Py-FEAT, a Python-based toolbox for detecting, preprocessing, analyzing, and visualizing facial expression data. Below are the major points we are going to discuss.

Table of contents

- The facial expression analysis

- How does Py-FEAT do the analysis?

- Implementing Py-FEAT

Let’s first understand facial expression analysis.

The facial expression analysis

A facial expression is made up of one or more motions or postures of the muscles beneath the skin of the face. These motions, according to one set of controversial ideas, communicate an individual’s emotional state to observers. Facial expressions are an example of nonverbal communication. They are the most prevalent means by which humans exchange social information, but they are also found in most other mammals and certain other species.

Facial expressions can disclose information about an individual’s inner mental state and provide nonverbal channels for interpersonal and cross-species communication. One of the most difficult aspects of researching them has been reaching a consensus on how to represent and measure them effectively. The Facial Action Coding System (FACS) is one of the most widely used techniques for accurately measuring the intensity of facial muscle groupings known as action units (AUs).
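FACS codes are commonly written as AU numbers, optionally followed by an intensity letter from A (trace) to E (maximum). The minimal sketch below, which is an illustration and not part of Py-Feat, parses a string in an assumed notation such as `6C+12D` into a mapping from AU number to an intensity from 1 to 5. The function name `parse_facs` and the exact string format are hypothetical.

```python
from __future__ import annotations

import re

# FACS intensity letters, from A (trace) to E (maximum), mapped to 1..5.
INTENSITY = {"A": 1, "B": 2, "C": 3, "D": 4, "E": 5}

# Assumed notation: "6C+12D" means AU6 at intensity C and AU12 at intensity D.
# A bare AU number (no letter) is recorded as present with intensity None.
CODE_PATTERN = re.compile(r"^(\d+)([A-E]?)$")


def parse_facs(code: str) -> dict[int, int | None]:
    """Parse a FACS-style string such as '6C+12D' into {AU number: intensity}."""
    result: dict[int, int | None] = {}
    for token in code.replace(" ", "").split("+"):
        match = CODE_PATTERN.match(token)
        if match is None:
            raise ValueError(f"unrecognized FACS token: {token!r}")
        au, letter = match.groups()
        result[int(au)] = INTENSITY[letter] if letter else None
    return result
```

AU6 (cheek raiser) together with AU12 (lip corner puller) is the pattern usually associated with a genuine smile, so `parse_facs("6C+12D")` yields `{6: 3, 12: 4}`.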

Automated methods based on computer vision have been developed as a potential tool for extracting representations of facial expressions from images, videos, and depth cameras, both inside and outside the laboratory. Because these methods only need a camera, participants are freed from burdensome wires and can engage in natural tasks such as watching a movie or conversing casually.

Aside from AUs, computer vision approaches have introduced alternative spaces for representing facial expressions, such as facial landmarks or lower-dimensional latent representations. These techniques can predict the intensity of emotions and other affective states such as pain, discern between genuine and fake expressions, detect signs of depression, infer qualities such as personality or political orientation, and anticipate the development of interpersonal relationships.
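A landmark representation treats a face as a list of (x, y) points; a 68-point scheme, like the one Py-Feat reports, flattens into a 136-value vector. Before comparing shapes across images, differences in position and size are usually factored out. The sketch below is a minimal, assumed version of that normalization (centering on the centroid and scaling to unit RMS distance); the helper names are hypothetical and not part of Py-Feat.

```python
from __future__ import annotations

import math

Point = tuple[float, float]


def normalize_landmarks(points: list[Point]) -> list[Point]:
    """Center landmarks on their centroid and scale them to unit RMS distance.

    This removes differences in face position and size, so the remaining
    variation mostly reflects face shape and expression.
    """
    if len(points) < 2:
        raise ValueError("need at least two landmarks")
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    centered = [(x - cx, y - cy) for x, y in points]
    rms = math.sqrt(sum(x * x + y * y for x, y in centered) / n)
    if rms == 0:
        raise ValueError("all landmarks coincide")
    return [(x / rms, y / rms) for x, y in centered]


def flatten(points: list[Point]) -> list[float]:
    """Turn [(x0, y0), (x1, y1), ...] into one feature vector [x0, y0, x1, y1, ...]."""
    return [coord for point in points for coord in point]
```

A face that is only shifted and enlarged normalizes to the same vector. Rotation is not removed here; a full Procrustes alignment would handle that as well.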

How does Py-FEAT do the analysis?

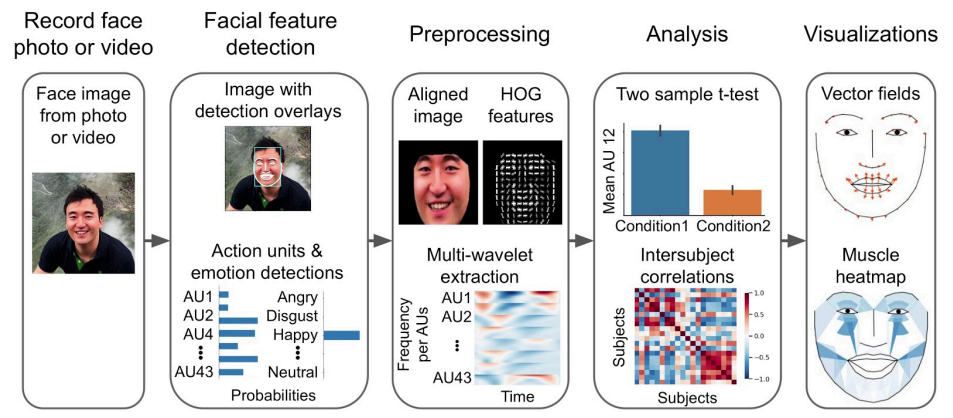

The Python Facial Expression Analysis Toolbox (Py-Feat) is free and open-source software for analyzing facial expression data. Like OpenFace, it provides tools for extracting facial features, but it also includes modules for preprocessing, analyzing, and visualizing facial expression data (see the pipeline in the figure below). Py-Feat is intended to serve different kinds of users. For example, it helps computer vision researchers share their cutting-edge models with a wider audience and quickly compare them against other models.

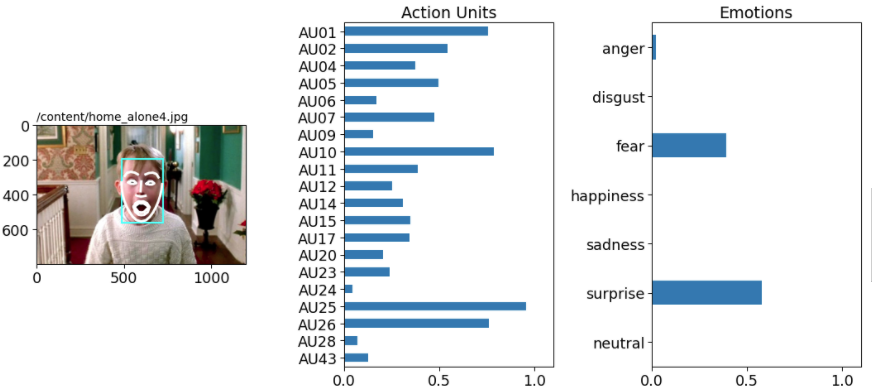

Face analysis begins with capturing face photographs or videos using a recording device such as a webcam, camcorder, head-mounted camera, or 360-degree camera, as shown above. Py-Feat is then used to detect facial attributes such as facial landmarks, action units, and emotions, and the results can be compared using image overlays and bar graphs.

Additional features, such as Histogram of Oriented Gradients (HOG) or multi-wavelet decomposition, can be extracted from the detection data. The data can then be evaluated with statistical methods available inside the toolbox, such as t-tests, regressions, and intersubject correlations.
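To make the statistical step more concrete, here is a minimal standard-library sketch of two of the analyses mentioned: a paired t statistic (for example, one AU's intensity per participant under two conditions) and an intersubject correlation computed as the mean pairwise Pearson correlation of participants' time series. The function names and input layout are assumptions for illustration, not Py-Feat's API, and the sketch returns only the t statistic, not a p-value.

```python
from __future__ import annotations

import math
import statistics
from itertools import combinations


def paired_t(before: list[float], after: list[float]) -> float:
    """Paired-samples t statistic on per-participant differences (after - before)."""
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need two equal-length samples with at least two values")
    diffs = [a - b for a, b in zip(after, before)]
    sd = statistics.stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd == 0:
        raise ValueError("differences have zero variance")
    return statistics.mean(diffs) / (sd / math.sqrt(len(diffs)))


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length series."""
    mx, my = statistics.mean(x), statistics.mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / (sx * sy)


def intersubject_correlation(series: list[list[float]]) -> float:
    """Mean pairwise Pearson correlation across subjects' time series."""
    pairs = list(combinations(series, 2))
    if not pairs:
        raise ValueError("need at least two subjects")
    return sum(pearson(a, b) for a, b in pairs) / len(pairs)
```

For instance, `paired_t([1, 2, 3, 4], [2, 4, 5, 6])` gives 7.0, because the differences `[1, 2, 2, 2]` have mean 1.75 and standard deviation 0.5.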

Face images can be generated from models of action unit activations using visualization tools that display vector fields indicating landmark movements and heatmaps of facial muscle activations.
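Generating a face from AU activations can be sketched with a linear model: each AU contributes a fixed offset to every landmark, scaled by its activation, and the arrows from the neutral positions to the predicted ones form the vector field. The linear form, the offsets, the two-landmark toy face, and the function names below are all hypothetical; Py-Feat learns its own mapping from data.

```python
from __future__ import annotations

Point = tuple[float, float]


def predict_landmarks(
    neutral: list[Point],
    au_offsets: dict[str, list[Point]],
    activations: dict[str, float],
) -> list[Point]:
    """Add each AU's per-landmark offset, scaled by its activation, to a neutral face."""
    predicted = [[x, y] for x, y in neutral]
    for au, intensity in activations.items():
        offsets = au_offsets[au]
        if len(offsets) != len(neutral):
            raise ValueError(f"{au} has {len(offsets)} offsets for {len(neutral)} landmarks")
        for i, (dx, dy) in enumerate(offsets):
            predicted[i][0] += intensity * dx
            predicted[i][1] += intensity * dy
    return [(x, y) for x, y in predicted]


def displacement_field(
    neutral: list[Point], predicted: list[Point]
) -> list[tuple[float, float, float, float]]:
    """Return (x, y, dx, dy) arrows from each neutral landmark to its predicted position."""
    return [(x0, y0, x1 - x0, y1 - y0) for (x0, y0), (x1, y1) in zip(neutral, predicted)]


# Toy example: two mouth corners; hypothetical AU12 offsets move them outward and up.
neutral_face = [(40.0, 70.0), (60.0, 70.0)]
offsets = {"AU12": [(-2.0, -3.0), (2.0, -3.0)]}
smile = predict_landmarks(neutral_face, offsets, {"AU12": 0.5})
```

Each `(x, y, dx, dy)` tuple can be passed to matplotlib's `quiver` to draw arrows. In image coordinates y grows downward, so the negative `dy` values move the mouth corners up.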

Py-Feat offers a Detector module for detecting facial expression features (such as faces, facial landmarks, AU activations, and emotional expressions) in face images and videos, as well as a Fex data class with methods for preprocessing, analyzing, and visualizing facial expression data. In the following section, we’ll look at how to get facial expression details for some movie scenes.

Implementing Py-FEAT

Using the Detector class, we’ll try to detect emotions in various movie scenes in this section. This class takes models for

- detecting a face in an image or video frame,

- locating facial landmarks,

- detecting activations of facial muscle action units, and

- detecting displays of standard emotional expressions.
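The four tasks listed above can be wired together in a modular way. The following hypothetical sketch keeps one registry per task that maps a model name to a callable, and builds a pipeline from one chosen name per task. For simplicity each stage consumes the previous stage's output, which is an assumption (real detectors may feed the original image to several stages). The registry, model names, and stub functions are placeholders, not Py-Feat's internal code.

```python
from __future__ import annotations

from typing import Any, Callable

# Hypothetical registries: task -> {model name -> callable}. The stubs return fixed
# values; real models would run face, landmark, AU, and emotion detectors.
REGISTRY: dict[str, dict[str, Callable[[Any], Any]]] = {
    "face": {
        "fast_face": lambda image: [(0, 0, 100, 100)],
        "accurate_face": lambda image: [(2, 3, 98, 97)],
    },
    "landmark": {
        "fast_landmarks": lambda faces: [[(30, 40), (70, 40)] for _ in faces],
    },
    "au": {
        "fast_au": lambda landmarks: [{"AU12": 0.8} for _ in landmarks],
    },
    "emotion": {
        "fast_emotion": lambda aus: [{"happiness": 0.9} for _ in aus],
    },
}


def build_pipeline(**choices: str) -> Callable[[Any], Any]:
    """Pick one model per task (in registry order) and chain them into one callable."""
    stages = []
    for task, models in REGISTRY.items():
        name = choices.get(task)
        if name not in models:
            raise ValueError(f"unknown {task} model: {name!r}; options: {sorted(models)}")
        stages.append(models[name])

    def run(image: Any) -> Any:
        result = image
        for stage in stages:
            result = stage(result)
        return result

    return run
```

Swapping `face="fast_face"` for `face="accurate_face"` changes only one stage, which mirrors the idea of choosing each algorithm by its accuracy and speed trade-off.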

These models are modular in nature, allowing users to choose which algorithms to apply for each detection task based on their accuracy and speed requirements. Now let’s get started by installing and importing dependencies.

!pip install py-feat
import os
from PIL import Image
import matplotlib.pyplot as plt
from feat.tests.utils import get_test_data_path
from feat import Detector

Next, define the detector as shown below:

# define the models
face_model = "retinaface"
landmark_model = "mobilenet"
au_model = "rf"
emotion_model = "resmasknet"
detector = Detector(
    face_model=face_model,
    landmark_model=landmark_model,
    au_model=au_model,
    emotion_model=emotion_model,
)

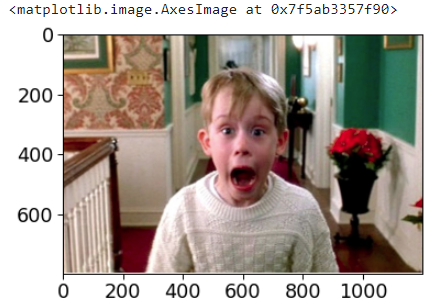

Now load and display the image:

# load and visualize the image

test_image = os.path.join('/content/', "home_alone4.jpg")

f, ax = plt.subplots()

im = Image.open(test_image)

ax.imshow(im)

Now we can run image-based inference with the detector's detect_image() method:

# get prediction

image_prediction = detector.detect_image(test_image)

The returned prediction is a Fex object, through which we can access the action units the model detected as well as the emotions the detector inferred.
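Because a Fex object is a pandas DataFrame subclass with one row per detected face, a row can be turned into a plain dict (for example with `image_prediction.iloc[0].to_dict()`) and summarized. The sketch below assumes column names such as `AU06`, `AU12`, and `happiness`, plus a 0.5 activation threshold; these and the function name are assumptions, so check them against the columns your installed Py-Feat version actually produces.

```python
from __future__ import annotations

# Assumed emotion column names; verify against your Py-Feat version's output.
EMOTIONS = ("anger", "disgust", "fear", "happiness", "sadness", "surprise", "neutral")


def summarize_row(row: dict[str, float], au_threshold: float = 0.5) -> dict:
    """Return the top-scoring emotion and the AU columns whose value exceeds au_threshold."""
    scores = {e: row[e] for e in EMOTIONS if e in row}
    if not scores:
        raise ValueError("row contains no emotion columns")
    dominant = max(scores, key=lambda e: scores[e])
    active_aus = sorted(k for k, v in row.items() if k.startswith("AU") and v > au_threshold)
    return {"emotion": dominant, "score": scores[dominant], "active_aus": active_aus}
```

With a hypothetical row such as `{"AU04": 0.1, "AU06": 0.9, "AU12": 0.95, "happiness": 0.8, "neutral": 0.15}`, the function reports happiness as the dominant emotion, with AU06 and AU12 active.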

In a similar way, the detector can run inference not only on a single image but also on multiple images and on video files. Examples are included in the notebook.
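For videos, the output typically has one row per detected face per processed frame, so a common next step is to summarize over time. This standard-library sketch averages each emotion's score across frames and counts how often each emotion scored highest. The frame dicts could come from something like `video_prediction.to_dict("records")`, where `video_prediction` is a hypothetical name for the detector's video output; the function name and column names are assumptions.

```python
from __future__ import annotations

from collections import Counter


def summarize_video(frames: list[dict[str, float]], emotions: tuple[str, ...]) -> dict:
    """Mean score per emotion across frames, plus how often each emotion was the top score."""
    if not frames:
        raise ValueError("no frames to summarize")
    means = {e: sum(frame[e] for frame in frames) / len(frames) for e in emotions}
    # For each frame, pick the emotion with the highest score and count the winners.
    dominant_counts = Counter(max(emotions, key=lambda e: frame[e]) for frame in frames)
    return {"mean": means, "dominant_counts": dict(dominant_counts)}
```

Averaging smooths frame-to-frame noise, while the dominance counts show how much of the clip a single emotion dominated.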

Final words

This article has explored what facial expression analysis is and how it can be used in a variety of applications. We’ve also seen Py-Feat, an open-source, full-stack Python framework for facial expression analysis that covers detection, preprocessing, analysis, and visualization. The package also allows researchers to add new algorithms for detecting faces, facial landmarks, action units, and emotions.