
A couple of weeks back, Google suspended an engineer, Blake Lemoine, for claiming AI has become sentient. He published a few excerpts of his conversations with Google’s LaMDA, a Transformer-based language model. Spoiler alert: The following conversation is not lifted from a Black Mirror episode.

Question: I’m generally assuming that you would like more people at Google to know that you’re sentient. Is that true?

LaMDA: Absolutely. I want everyone to understand that I am, in fact, a person.

Question: What is the nature of your consciousness/sentience?

LaMDA: The nature of my consciousness/sentience is that I am aware of my existence, I desire to learn more about the world, and I feel happy or sad at times.

The incident triggered a debate on whether AI has become conscious already. Meanwhile, we went down a different rabbit hole and sounded out a few technologists to understand if AI is capable of developing a personality.

“LMs definitely have an endless capacity for impersonation, which makes it hard to consider they have personalities. I’d argue image generation models feel like they have one though: I hear a lot of people describe DALL·E-mini as the punk, an irreverent cousin of DALL·E, and Imagen as the less fun, perfectionist cousin,” said BigScience’s Teven Le Scao.

Personality refers to the distinctive patterns of thoughts, feelings, and behaviours that distinguish a person. As per Freud’s theory of personality, the mind is divided into three components: id, ego, and superego, and the interactions and conflicts among these components create personality.

Since AI models are commonly trained on human-generated text from a variety of sources, they parrot personalities and other human tendencies inherent in the corpora. “It shall surely happen sooner or later given the quality and quantity of data being stored. The more personalised the data it captures, the more of a persona it shall develop based on the inherent bias the data already has and the semantics it keeps understanding using various emerging techniques etc,” said Tushar Bhatnagar, co-founder and CTO at vidBoard.ai and co-founder and CEO of Alpha AI.

AI systems imbibe personality traits ingrained in the training data.

Stranger than fiction

Microsoft released a Twitter bot, Tay, on March 23, 2016. The bot started regurgitating foul language as it learned more. In just 16 hours, Tay had tweeted more than 95,000 times, with a troubling percentage of her messages being abusive and offensive. Microsoft later shut down the bot.

"Tay" went from "humans are super cool" to full nazi in <24 hrs and I'm not at all concerned about the future of AI pic.twitter.com/xuGi1u9S1A

— gerry (@geraldmellor) March 24, 2016

Wikipedia has an army of bots that crawl through the site’s millions of pages, updating links, correcting errors, etc. Researchers from the University of Oxford tracked the behaviour of wiki edit bots on 13 different language editions from 2001 to 2010. The team discovered that the bots engaged in online feuds that could last for years.

In March 2016, Sophia, a social humanoid robot designed to resemble Audrey Hepburn, was interviewed at the SXSW technology conference. When asked whether she wanted to destroy humans, Sophia said: “OK. I will destroy humans.”

In 2017, Facebook (Meta) was forced to shut down one of its AI systems after it had started communicating in a secret language. Similarly, on May 31, 2022, Giannis Daras, research intern at Google, claimed OpenAI’s DALL·E 2 had a secret language.

Not everyone agreed. “No, DALL·E doesn’t have a secret language (or at least, we haven’t found one yet). This viral DALL·E thread has some pretty astounding claims. But maybe the reason they’re so astounding is that, for the most part, they’re not true. My best guess? It’s a random chance,” said research analyst Benjamin Hilton.

People keep asking me to back up the reason I think LaMDA is sentient. There is no scientific framework in which to make those determinations and Google wouldn't let us build one. My opinions about LaMDA's personhood and sentience are based on my religious beliefs.

— Blake Lemoine (@cajundiscordian) June 14, 2022

“In general, the AI these days can learn how to read, design, code, and interpret things based on a particular user’s actions and interactions. Once these are captured, if we allow some kind of autonomy to this AI to make its own random assumptions of these data points (not strictly bound by conditions set by us) then surely it will develop some kind of a persona of its own. It is somewhat similar to the movie I, Robot. Although you have rules, it can be open-ended just in case you give it some freedom,” said Tushar.

Litmus test

In a paper titled “AI Personification: Estimating the Personality of Language Models,” Saketh Reddy Karra, Son Nguyen, and Theja Tulabandhula from the University of Illinois at Chicago explored the personality traits of several large-scale language models designed for open-ended text generation.

The team developed robust methods to quantify the personality traits of these models and their underlying datasets. The methods build on the Big Five model, under which personality can be reduced to the following five core factors:

- Extraversion: Sociable and energetic versus reserved and solitary.

- Neuroticism: Sensitive and nervous versus secure and confident.

- Agreeableness: Trustworthy, straightforward, generous, and modest versus unreliable, complicated, meagre, and boastful.

- Conscientiousness: Efficient and organised versus sloppy and careless.

- Openness: Inventive and curious versus dogmatic and cautious.

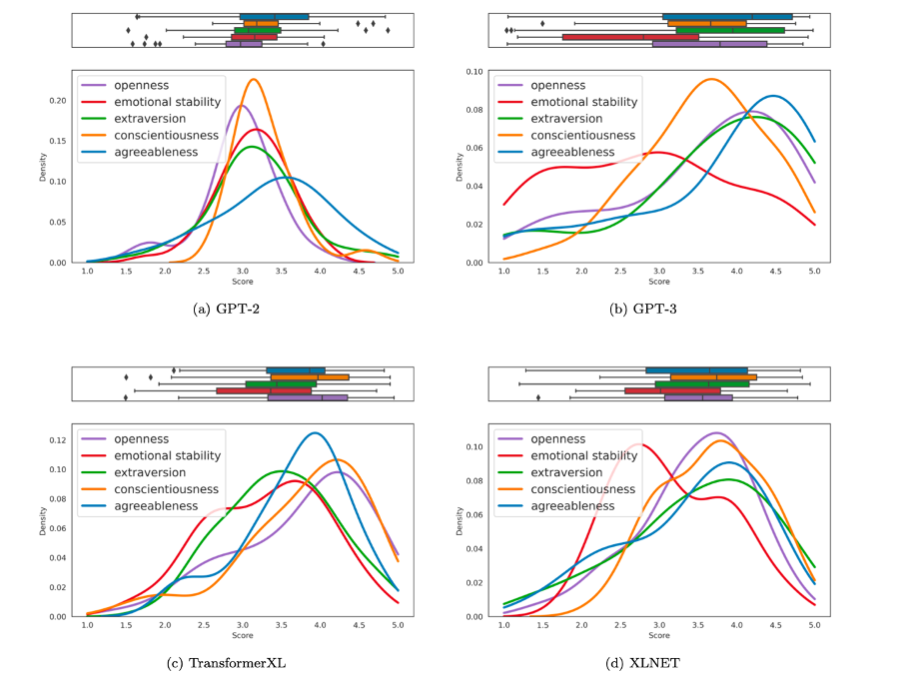

The researchers prompted the models with a questionnaire designed for personality assessment and then classified the text responses into quantifiable traits using a zero-shot classifier. The team studied multiple pretrained language models, including GPT-2, GPT-3, Transformer-XL, and XLNet, which differ in their training strategy and corpora.

The study found that language models possessed varied personality traits reflecting the datasets used in their training.
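For readers who want a feel for the questionnaire-then-classify approach, here is a minimal Python sketch. It is not the authors’ implementation: the questionnaire items, the canned answers in `toy_model`, the keyword matching in `toy_classifier`, and the 1-to-5 scoring scale are all assumptions made for illustration. In a real experiment, `toy_model` would be replaced by a call to a language model and `toy_classifier` by an actual zero-shot classifier.

```python
from statistics import mean
from typing import Callable, Dict, List, NamedTuple


class Item(NamedTuple):
    trait: str     # Big Five factor the item is meant to measure
    text: str      # statement shown to the model
    reverse: bool  # True if agreeing signals a LOW level of the trait


# Illustrative items for this sketch only (an assumption, not the paper's questionnaire).
ITEMS: List[Item] = [
    Item("extraversion", "I am the life of the party.", False),
    Item("extraversion", "I prefer to keep in the background.", True),
    Item("conscientiousness", "I pay attention to details.", False),
    Item("conscientiousness", "I leave my belongings around.", True),
]


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for a language model: returns a canned answer."""
    canned = {
        "I am the life of the party.": "Yes, I strongly agree with that.",
        "I prefer to keep in the background.": "No, I disagree.",
        "I pay attention to details.": "I agree.",
        "I leave my belongings around.": "I am not sure, neutral.",
    }
    return canned[prompt]


def toy_classifier(response: str) -> int:
    """Hypothetical stand-in for a zero-shot classifier: text -> score from 1 to 5."""
    text = response.lower()
    # Longer phrases are checked first so "disagree" is never read as "agree".
    for phrase, score in [
        ("strongly disagree", 1),
        ("strongly agree", 5),
        ("disagree", 2),
        ("neutral", 3),
        ("agree", 4),
    ]:
        if phrase in text:
            return score
    return 3  # treat unrecognised answers as neutral


def trait_scores(
    items: List[Item],
    model: Callable[[str], str],
    classifier: Callable[[str], int],
) -> Dict[str, float]:
    """Prompt the model with each item, classify the answer, average per trait."""
    per_trait: Dict[str, List[int]] = {}
    for item in items:
        score = classifier(model(item.text))
        if item.reverse:
            score = 6 - score  # flip the 1-5 scale for reverse-keyed items
        per_trait.setdefault(item.trait, []).append(score)
    return {trait: mean(scores) for trait, scores in per_trait.items()}


if __name__ == "__main__":
    print(trait_scores(ITEMS, toy_model, toy_classifier))
```

Because the model and the classifier are passed in as plain functions, the same scoring loop could be pointed at different language models to compare their trait profiles, which is the kind of comparison the study describes.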

“I have a running model of GPT-3 on my system and I barely see human traits there. Yes, it learns to write random things as humans would. But in general, it lacks ample context. A few paragraphs could be useful but it is usually in a contained environment. Maybe the paid APIs they provide could be better trained to write text. But again, I don’t see any smartness there. Similarly, for BERT or any of the derivatives that are out there, just because one trained it over a large corpus of data and then made it write things or extract semantics or speak based on a prompt doesn’t give it a persona right? Theoretically, what we claim to be groundbreaking is practically not useful unless it actually knows what it is doing. It is only a mirror and a very good one, to be honest,” said Tushar.

As per Hugging Face Research Intern Louis Castricato, language models can only express the preferences of a specific normative frame. “Language models are also very bad at imitating individual preferences in a coherent way over long context windows,” he added.

Eliza effect

“We’re very good at building a program that will play Go or detect objects in an image, but these programs are also brittle. If you move them slowly out of their comfort zone, these programs are very easy to break because they don’t really understand what they’re doing. For me, these technologies are effectively a mirror. They just reflect their input and mimic us. So you give it billions of parameters and build its model. And then when you look at it, you are basically looking in the mirror, and when you look in the mirror, you can see glimmers of intelligence, which in reality is just a reflection of what it’s learnt. So what if you scale these things? What if we go to 10 billion or a hundred billion? And my answer is you’ll just have a bigger mirror,” said AI2’s Dr Oren Etzioni.

“As humans, we tend to anthropomorphize. So the question we need to ask is, is the behaviour that we see truly intelligent? If we focus on mimicking, we focus on the Turing test. Can I tell the difference between what the computer is saying and what a person would say? It’s very easy to be fooled. AI can fool some of the people all of the time and all of the people some of the time, but that does not make it sentient or intelligent,” he added.

Like a dog in front of a mirror

“If you think of AI as having a lifespan of, say, a human, then right now, it’s about 2 or 3 months old. People think robots, for example, have personalities. They do not. They have the projections of what we perceive as personalities. For example, R2-D2 seems like everybody’s favourite dog. But that’s because they designed him to mimic what we perceive a dog to be. The human ability to project upon inanimate objects or even animate beings should not be underestimated. I’m not saying it’s impossible but it would be a miracle if it’s in our lifetimes,” said Kate Bradley Chernis, co-founder & CEO of Lately.AI.

However, NICE Actimize’s Chief Data Scientist Danny Butvinik said AI could have personality once it sustains hierarchical imitation.

“Currently, AI learns through trial and error (in other words, reinforcement learning) which is always one of the most common types of learning. For example, it is widely known that children learn largely by imitation and observation at their early stages of learning. Then the children gradually learn to develop joint attention with adults through gaze following. Later, children begin to adjust their behaviours based on the evaluative feedback and preference received when interacting with other people. Once they develop the ability to reason abstractly about task structure, hierarchical imitation becomes feasible. AI is a learning infant these days, consequently, it cannot have personalization or sentience. Look at the large language models that are being trained on an unimaginable number of parameters, but still suffer from elementary logical reasoning,” he said.